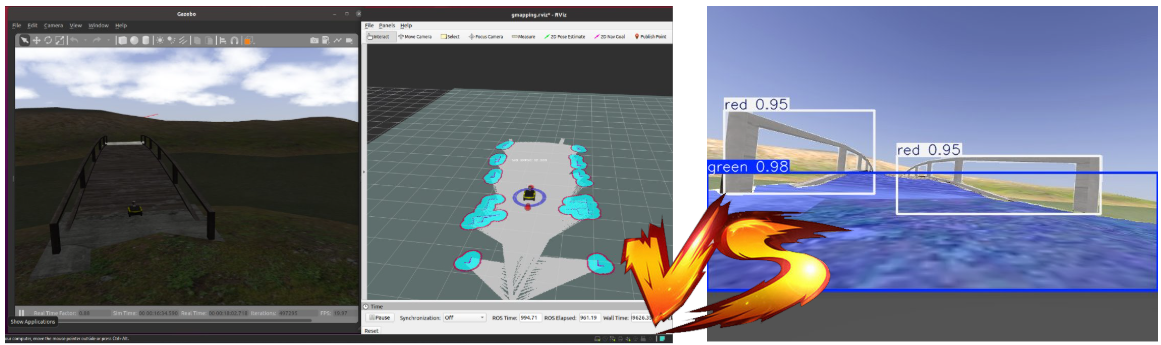

Welcome to the Autonomous Navigation project! This repository demonstrates and compares traditional and AI-enhanced navigation methods for robotic systems in simulated environments using ROS Noetic and Gazebo. 🚀

This project explores two navigation paradigms:

- Traditional Navigation: Using SLAM and A* for pathfinding.

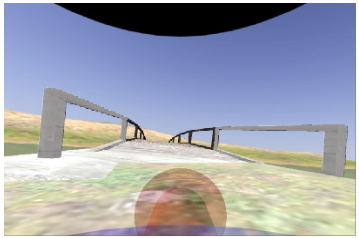

- AI-Based Navigation: Leveraging semantic segmentation with YOLOv8 to classify terrain into safe (green), challenging (yellow), and restricted (red) zones.

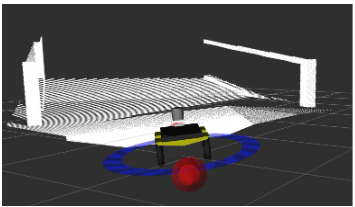
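To make the link between the two paradigms concrete, here is a minimal sketch of A* running on a grid whose cells carry the three terrain labels above. It is an illustration, not the repository's planner: the cost values in `TERRAIN_COST`, the 4-connected grid, and the `astar` function are all assumptions made for this example.

```python
import heapq

# Hypothetical traversal costs per terrain class (not taken from the repository).
# None marks a cell the planner may never enter.
TERRAIN_COST = {"safe": 1.0, "challenging": 5.0, "restricted": None}


def astar(grid, start, goal):
    """Return the cheapest 4-connected path from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance; admissible because every step costs at least 1.0.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0.0, start)]
    came_from = {start: None}
    best_cost = {start: 0.0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = TERRAIN_COST[grid[nr][nc]]
            if step is None:
                continue  # restricted zone
            new_cost = cost + step
            if new_cost < best_cost.get((nr, nc), float("inf")):
                best_cost[(nr, nc)] = new_cost
                came_from[(nr, nc)] = cell
                heapq.heappush(
                    open_heap, (new_cost + heuristic((nr, nc)), new_cost, (nr, nc))
                )
    return None


S, C, R = "safe", "challenging", "restricted"
grid = [
    [S, C, S],
    [S, S, S],
    [R, R, R],
]
path = astar(grid, (0, 0), (0, 2))
# path == [(0, 0), (1, 0), (1, 1), (1, 2), (0, 2)]
```

With these example costs the planner detours through the safe row rather than crossing the challenging cell, even though the direct route has fewer steps.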

The Jackal robot is used in a simulated Gazebo environment with the following setup:

- 🛠 Sensors: LiDAR, Intel RealSense D455, and IMU.

- 🌐 Simulation Environment: Clearpath Robotics' Inspection World.

Ensure the following are installed:

- ROS Noetic

- Gazebo

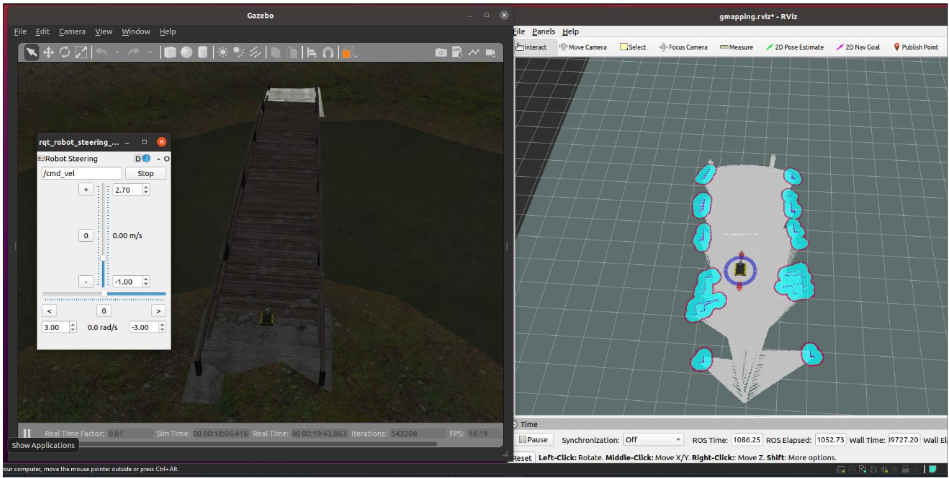

- RViz

- Python 3.9

- Dependencies listed here
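As a quick sanity check before building, a small script along these lines can report which tools are missing from your `PATH`. It is only a sketch: the executable names in `REQUIRED_TOOLS` are assumed to be what a sourced ROS Noetic desktop install provides, and `missing_tools` is a helper written for this example.

```python
import shutil
import sys

# Executables assumed to be on PATH after sourcing a ROS Noetic desktop install.
REQUIRED_TOOLS = ("roscore", "roslaunch", "gazebo", "rviz")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    print("Python version:", ".".join(map(str, sys.version_info[:3])))
    missing = missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required tools were found.")
```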

git clone https://github.com/AdharshKan42/AI_Based_Auton_Nav

cd AI_Based_Auton_Nav

rosdep update

rosdep install --from-paths src --ignore-src -r

catkin_make

source devel/setup.bash

To start the Gazebo environment with the Jackal robot:

roslaunch cpr_inspection_gazebo inspection_world.launch platform:=jackal

roslaunch jackal_viz view_robot.launch

rosrun teleop_twist_keyboard teleop_twist_keyboard.py cmd_vel:=/cmd_vel

rosrun rqt_gui rqt_gui -s rqt_robot_steering

rosrun rqt_image_view rqt_image_view

roslaunch cpr_inspection_gazebo inspection_world.launch platform:=jackal

roslaunch jackal_navigation gmapping_demo.launch scan_topic:=lidar/scan

roslaunch jackal_viz view_robot.launch config:=gmapping

rosrun map_server map_saver -f mymap

roslaunch jackal_navigation amcl_demo.launch map_file:=/path/to/my/map.yaml

roslaunch jackal_viz view_robot.launch config:=localization

- View camera:

rosrun rqt_image_view rqt_image_view

Create a goal waypoint based on the robot's current position.
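This README does not describe how the waypoint script derives the goal. As one illustration of a goal "based on the robot's current position", the sketch below places a waypoint a fixed distance ahead of the robot's pose in the map frame. The function name `waypoint_ahead` and its parameters are hypothetical and are not taken from the repository.

```python
import math


def waypoint_ahead(x, y, yaw, distance):
    """Return the (x, y) point `distance` metres ahead of a pose with heading `yaw` (radians)."""
    return (x + distance * math.cos(yaw), y + distance * math.sin(yaw))


# A robot at the origin facing along +x gets a goal 2 m straight ahead.
goal = waypoint_ahead(0.0, 0.0, 0.0, 2.0)
# goal == (2.0, 0.0)
```

The repository's own waypoint script is started with: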

rosrun utm_to_robot waypoint_creation.py

Start YOLOv8 inference on the RealSense camera.

rosrun utm_to_robot yolo_inference.py

Run the navigation algorithm to move towards the goal position.

rosrun utm_to_robot mock_movebase_combined.py

| Metric | Traditional (A*) | AI-Based (YOLOv8) |
|---|---|---|
| Path Length (meters) | 18.6 | 17.3 |
| Traversal Time (seconds) | 45.2 | 39.8 |
| Collision Rate | 1 | 0 |
| Computational Load | 100% CPU | 65% CPU, 30% GPU |
| Adaptability | Low | High |

- Traditional Approach: Reliable in static environments, but struggles with dynamic changes.

- AI-Based Approach: Adapts well to unstructured terrain, with a shorter path and faster traversal in the comparison above.
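The relative differences behind the path length and traversal time rows can be checked with a few lines of arithmetic. The helper name `percent_change` is introduced here for illustration; the input numbers are the ones reported in the table.

```python
def percent_change(baseline, value):
    """Return the change from `baseline` to `value` as a percentage of `baseline`."""
    return (value - baseline) / baseline * 100.0


# Traditional (A*) is the baseline, AI-based (YOLOv8) is the comparison.
path_change = percent_change(18.6, 17.3)  # about -7.0 (shorter path)
time_change = percent_change(45.2, 39.8)  # about -11.9 (faster traversal)

print(f"Path length: {path_change:.1f}%")
print(f"Traversal time: {time_change:.1f}%")
```

On these figures the AI-based run was roughly 7% shorter and 12% faster than the traditional run.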

This project was a collaborative effort by:

- @AdharshKan42 Adharsh Kandula: Environment setup and AI model integration.

- @lowwhit Lohith Venkat Chamakura: Implementation of traditional navigation algorithms.

- @nishitpopat Nishit Popat: Mapping algorithms and pathfinding optimizations.

- @rrrraghav Raghav Mathur: Testing of multiple models and finetuning.

- @SwordAndTea Wei Xiang: Data collection and preprocessing, AI model training and evaluation.

Feel free to contribute! Submit issues or pull requests to improve this repository. 🎉