diff --git a/.circleci/test.yml b/.circleci/test.yml

index 81af82a14e4..0eefb195dd6 100644

--- a/.circleci/test.yml

+++ b/.circleci/test.yml

@@ -136,6 +136,8 @@ jobs:

machine:

image: ubuntu-2004-cuda-11.4:202110-01

resource_class: gpu.nvidia.small

+ environment:

+ MKL_SERVICE_FORCE_INTEL: 1

parameters:

torch:

type: string

diff --git a/.dev_scripts/fill_metafile.py b/.dev_scripts/fill_metafile.py

index d0f49a84a8a..2541d763b22 100644

--- a/.dev_scripts/fill_metafile.py

+++ b/.dev_scripts/fill_metafile.py

@@ -20,8 +20,10 @@

MMCLS_ROOT = Path(__file__).absolute().parents[1].resolve().absolute()

console = Console()

-dataset_completer = FuzzyWordCompleter(

- ['ImageNet-1k', 'ImageNet-21k', 'CIFAR-10', 'CIFAR-100'])

+dataset_completer = FuzzyWordCompleter([

+ 'ImageNet-1k', 'ImageNet-21k', 'CIFAR-10', 'CIFAR-100', 'RefCOCO', 'VQAv2',

+ 'COCO', 'OpenImages', 'Object365', 'CC3M', 'CC12M', 'YFCC100M', 'VG'

+])

def prompt(message,

@@ -83,53 +85,57 @@ def parse_args():

return args

-def get_flops(config_path):

+def get_flops_params(config_path):

import numpy as np

import torch

- from fvcore.nn import FlopCountAnalysis, parameter_count

- from mmengine.config import Config

+ from mmengine.analysis import FlopAnalyzer, parameter_count

from mmengine.dataset import Compose

from mmengine.model.utils import revert_sync_batchnorm

from mmengine.registry import DefaultScope

- import mmpretrain.datasets # noqa: F401

- from mmpretrain.apis import init_model

-

- cfg = Config.fromfile(config_path)

-

- if 'test_dataloader' in cfg:

- # build the data pipeline

- test_dataset = cfg.test_dataloader.dataset

- if test_dataset.pipeline[0]['type'] == 'LoadImageFromFile':

- test_dataset.pipeline.pop(0)

- if test_dataset.type in ['CIFAR10', 'CIFAR100']:

- # The image shape of CIFAR is (32, 32, 3)

- test_dataset.pipeline.insert(1, dict(type='Resize', scale=32))

-

- with DefaultScope.overwrite_default_scope('mmpretrain'):

- data = Compose(test_dataset.pipeline)({

- 'img':

- np.random.randint(0, 256, (224, 224, 3), dtype=np.uint8)

- })

- resolution = tuple(data['inputs'].shape[-2:])

- else:

- # For configs only for get model.

- resolution = (224, 224)

+ from mmpretrain.apis import get_model

+ from mmpretrain.models.utils import no_load_hf_pretrained_model

- model = init_model(cfg, device='cpu')

+ with no_load_hf_pretrained_model():

+ model = get_model(config_path, device='cpu')

model = revert_sync_batchnorm(model)

model.eval()

-

- with torch.no_grad():

- model.forward = model.extract_feat

- model.to('cpu')

- inputs = (torch.randn((1, 3, *resolution)), )

- analyzer = FlopCountAnalysis(model, inputs)

- analyzer.unsupported_ops_warnings(False)

- analyzer.uncalled_modules_warnings(False)

- flops = analyzer.total()

- params = parameter_count(model)['']

- return int(flops), int(params)

+ params = int(parameter_count(model)[''])

+

+ # get flops

+ try:

+ if 'test_dataloader' in model._config:

+ # build the data pipeline

+ test_dataset = model._config.test_dataloader.dataset

+ if test_dataset.pipeline[0]['type'] == 'LoadImageFromFile':

+ test_dataset.pipeline.pop(0)

+ if test_dataset.type in ['CIFAR10', 'CIFAR100']:

+ # The image shape of CIFAR is (32, 32, 3)

+ test_dataset.pipeline.insert(1, dict(type='Resize', scale=32))

+

+ with DefaultScope.overwrite_default_scope('mmpretrain'):

+ data = Compose(test_dataset.pipeline)({

+ 'img':

+ np.random.randint(0, 256, (224, 224, 3), dtype=np.uint8)

+ })

+ resolution = tuple(data['inputs'].shape[-2:])

+ else:

+ # For configs only for get model.

+ resolution = (224, 224)

+

+ with torch.no_grad():

+ # Skip flops if the model doesn't have `extract_feat` method.

+ model.forward = model.extract_feat

+ model.to('cpu')

+ inputs = (torch.randn((1, 3, *resolution)), )

+ analyzer = FlopAnalyzer(model, inputs)

+ analyzer.unsupported_ops_warnings(False)

+ analyzer.uncalled_modules_warnings(False)

+ flops = int(analyzer.total())

+ except Exception:

+ print('Unable to calculate flops.')

+ flops = None

+ return flops, params

def fill_collection(collection: dict):

@@ -202,12 +208,9 @@ def fill_model_by_prompt(model: dict, defaults: dict):

params = model.get('Metadata', {}).get('Parameters')

if model.get('Config') is not None and (

MMCLS_ROOT / model['Config']).exists() and (flops is None

- or params is None):

- try:

- print('Automatically compute FLOPs and Parameters from config.')

- flops, params = get_flops(str(MMCLS_ROOT / model['Config']))

- except Exception:

- print('Failed to compute FLOPs and Parameters.')

+ and params is None):

+ print('Automatically compute FLOPs and Parameters from config.')

+ flops, params = get_flops_params(str(MMCLS_ROOT / model['Config']))

if flops is None:

flops = prompt('Please specify the [red]FLOPs[/]: ')

@@ -222,7 +225,8 @@ def fill_model_by_prompt(model: dict, defaults: dict):

model['Metadata'].setdefault('FLOPs', flops)

model['Metadata'].setdefault('Parameters', params)

- if model.get('Metadata', {}).get('Training Data') is None:

+ if 'Training Data' not in model.get('Metadata', {}) and \

+ 'Training Data' not in defaults.get('Metadata', {}):

training_data = prompt(

'Please input all [red]training dataset[/], '

'include pre-training (input empty to finish): ',

@@ -259,12 +263,11 @@ def fill_model_by_prompt(model: dict, defaults: dict):

for metric in metrics_list:

k, v = metric.split('=')[:2]

metrics[k] = round(float(v), 2)

- if len(metrics) > 0:

- results = [{

- 'Dataset': test_dataset,

- 'Metrics': metrics,

- 'Task': task

- }]

+ results = [{

+ 'Task': task,

+ 'Dataset': test_dataset,

+ 'Metrics': metrics or None,

+ }]

model['Results'] = results

weights = model.get('Weights')

@@ -274,7 +277,7 @@ def fill_model_by_prompt(model: dict, defaults: dict):

if model.get('Converted From') is None and model.get(

'Weights') is not None:

- if Confirm.ask(

+ if '3rdparty' in model['Name'] or Confirm.ask(

'Is the checkpoint is converted '

'from [red]other repository[/]?',

default=False):

@@ -317,9 +320,9 @@ def update_model_by_dict(model: dict, update_dict: dict, defaults: dict):

# Metadata.Flops, Metadata.Parameters

flops = model.get('Metadata', {}).get('FLOPs')

params = model.get('Metadata', {}).get('Parameters')

- if config_updated or (flops is None or params is None):

+ if config_updated or (flops is None and params is None):

print(f'Automatically compute FLOPs and Parameters of {model["Name"]}')

- flops, params = get_flops(str(MMCLS_ROOT / model['Config']))

+ flops, params = get_flops_params(str(MMCLS_ROOT / model['Config']))

model.setdefault('Metadata', {})

model['Metadata']['FLOPs'] = flops

@@ -409,10 +412,15 @@ def format_model(model: dict):

def order_models(model):

order = []

+ # Pre-trained model

order.append(int('Downstream' not in model))

+ # non-3rdparty model

order.append(int('3rdparty' in model['Name']))

+ # smaller model

order.append(model.get('Metadata', {}).get('Parameters', 0))

+ # faster model

order.append(model.get('Metadata', {}).get('FLOPs', 0))

+ # name order

order.append(len(model['Name']))

return tuple(order)

@@ -442,7 +450,10 @@ def main():

collection = fill_collection(collection)

if ori_collection != collection:

console.print(format_collection(collection))

- model_defaults = {'In Collection': collection['Name']}

+ model_defaults = {

+ 'In Collection': collection['Name'],

+ 'Metadata': collection.get('Metadata', {}),

+ }

models = content.get('Models', [])

updated_models = []

diff --git a/.dev_scripts/generate_readme.py b/.dev_scripts/generate_readme.py

index 2fd0a5a2a48..e80d691a19c 100644

--- a/.dev_scripts/generate_readme.py

+++ b/.dev_scripts/generate_readme.py

@@ -331,6 +331,12 @@ def add_models(metafile):

'Image Classification',

'Image Retrieval',

'Multi-Label Classification',

+ 'Image Caption',

+ 'Visual Grounding',

+ 'Visual Question Answering',

+ 'Image-To-Text Retrieval',

+ 'Text-To-Image Retrieval',

+ 'NLVR',

]

for task in tasks:

diff --git a/README.md b/README.md

index ea24969459d..e6a0afbe21d 100644

--- a/README.md

+++ b/README.md

@@ -70,11 +70,19 @@ The `main` branch works with **PyTorch 1.8+**.

### Major features

- Various backbones and pretrained models

-- Rich training strategies(supervised learning, self-supervised learning, etc.)

+- Rich training strategies (supervised learning, self-supervised learning, multi-modality learning etc.)

- Bag of training tricks

- Large-scale training configs

- High efficiency and extensibility

- Powerful toolkits for model analysis and experiments

+- Various out-of-box inference tasks.

+ - Image Classification

+ - Image Caption

+ - Visual Question Answering

+ - Visual Grounding

+ - Retrieval (Image-To-Image, Text-To-Image, Image-To-Text)

+

+https://github.com/open-mmlab/mmpretrain/assets/26739999/e4dcd3a2-f895-4d1b-a351-fbc74a04e904

## What's new

@@ -117,6 +125,12 @@ mim install -e .

Please refer to [installation documentation](https://mmpretrain.readthedocs.io/en/latest/get_started.html) for more detailed installation and dataset preparation.

+For multi-modality models support, please install the extra dependencies by:

+

+```shell

+mim install -e ".[multimodal]"

+```

+

## User Guides

We provided a series of tutorials about the basic usage of MMPreTrain for new users:

diff --git a/README_zh-CN.md b/README_zh-CN.md

index 0576df108b7..50426aca2a2 100644

--- a/README_zh-CN.md

+++ b/README_zh-CN.md

@@ -68,11 +68,19 @@ MMPreTrain 是一款基于 PyTorch 的开源深度学习预训练工具箱,是

### 主要特性

- 支持多样的主干网络与预训练模型

-- 支持多种训练策略(有监督学习,无监督学习等)

+- 支持多种训练策略(有监督学习,无监督学习,多模态学习等)

- 提供多种训练技巧

- 大量的训练配置文件

- 高效率和高可扩展性

- 功能强大的工具箱,有助于模型分析和实验

+- 支持多种开箱即用的推理任务

+ - 图像分类

+ - 图像描述(Image Caption)

+ - 视觉问答(Visual Question Answering)

+ - 视觉定位(Visual Grounding)

+ - 检索(图搜图,图搜文,文搜图)

+

+https://github.com/open-mmlab/mmpretrain/assets/26739999/e4dcd3a2-f895-4d1b-a351-fbc74a04e904

## 更新日志

@@ -114,6 +122,12 @@ mim install -e .

更详细的步骤请参考 [安装指南](https://mmpretrain.readthedocs.io/zh_CN/latest/get_started.html) 进行安装。

+如果需要多模态模型,请使用如下方式安装额外的依赖:

+

+```shell

+mim install -e ".[multimodal]"

+```

+

## 基础教程

我们为新用户提供了一系列基础教程:

diff --git a/configs/_base_/datasets/coco_caption.py b/configs/_base_/datasets/coco_caption.py

new file mode 100644

index 00000000000..05d49349ce1

--- /dev/null

+++ b/configs/_base_/datasets/coco_caption.py

@@ -0,0 +1,69 @@

+# data settings

+

+data_preprocessor = dict(

+ type='MultiModalDataPreprocessor',

+ mean=[122.770938, 116.7460125, 104.09373615],

+ std=[68.5005327, 66.6321579, 70.32316305],

+ to_rgb=True,

+)

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='RandomResizedCrop',

+ scale=384,

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='RandomFlip', prob=0.5, direction='horizontal'),

+ dict(type='CleanCaption', keys='gt_caption'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['gt_caption'],

+ meta_keys=['image_id'],

+ ),

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='Resize',

+ scale=(384, 384),

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='PackInputs', meta_keys=['image_id']),

+]

+

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=5,

+ dataset=dict(

+ type='COCOCaption',

+ data_root='data/coco',

+ ann_file='annotations/coco_karpathy_train.json',

+ pipeline=train_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ persistent_workers=True,

+ drop_last=True,

+)

+

+val_dataloader = dict(

+ batch_size=16,

+ num_workers=5,

+ dataset=dict(

+ type='COCOCaption',

+ data_root='data/coco',

+ ann_file='annotations/coco_karpathy_val.json',

+ pipeline=test_pipeline,

+ ),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ persistent_workers=True,

+)

+

+val_evaluator = dict(

+ type='COCOCaption',

+ ann_file='data/coco/annotations/coco_karpathy_val_gt.json',

+)

+

+# # If you want standard test, please manually configure the test dataset

+test_dataloader = val_dataloader

+test_evaluator = val_evaluator

diff --git a/configs/_base_/datasets/coco_retrieval.py b/configs/_base_/datasets/coco_retrieval.py

new file mode 100644

index 00000000000..8bc1c1f6754

--- /dev/null

+++ b/configs/_base_/datasets/coco_retrieval.py

@@ -0,0 +1,95 @@

+# data settings

+data_preprocessor = dict(

+ type='MultiModalDataPreprocessor',

+ mean=[122.770938, 116.7460125, 104.09373615],

+ std=[68.5005327, 66.6321579, 70.32316305],

+ to_rgb=True,

+)

+

+rand_increasing_policies = [

+ dict(type='AutoContrast'),

+ dict(type='Equalize'),

+ dict(type='Rotate', magnitude_key='angle', magnitude_range=(0, 30)),

+ dict(

+ type='Brightness', magnitude_key='magnitude',

+ magnitude_range=(0, 0.0)),

+ dict(type='Sharpness', magnitude_key='magnitude', magnitude_range=(0, 0)),

+ dict(

+ type='Shear',

+ magnitude_key='magnitude',

+ magnitude_range=(0, 0.3),

+ direction='horizontal'),

+ dict(

+ type='Shear',

+ magnitude_key='magnitude',

+ magnitude_range=(0, 0.3),

+ direction='vertical'),

+]

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='RandomResizedCrop',

+ scale=384,

+ crop_ratio_range=(0.5, 1.0),

+ interpolation='bicubic'),

+ dict(type='RandomFlip', prob=0.5, direction='horizontal'),

+ dict(

+ type='RandAugment',

+ policies=rand_increasing_policies,

+ num_policies=2,

+ magnitude_level=5),

+ dict(type='CleanCaption', keys='text'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['text', 'is_matched'],

+ meta_keys=['image_id']),

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='Resize',

+ scale=(384, 384),

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='CleanCaption', keys='text'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['text', 'gt_text_id', 'gt_image_id'],

+ meta_keys=['image_id']),

+]

+

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=16,

+ dataset=dict(

+ type='COCORetrieval',

+ data_root='data/coco',

+ ann_file='annotations/caption_karpathy_train2014.json',

+ pipeline=train_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ persistent_workers=True,

+ drop_last=True,

+)

+

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=16,

+ dataset=dict(

+ type='COCORetrieval',

+ data_root='data/coco',

+ ann_file='annotations/caption_karpathy_val2014.json',

+ pipeline=test_pipeline,

+ # This is required for evaluation

+ test_mode=True,

+ ),

+ sampler=dict(type='SequentialSampler', subsample_type='sequential'),

+ persistent_workers=True,

+)

+

+val_evaluator = dict(type='RetrievalRecall', topk=(1, 5, 10))

+

+# If you want standard test, please manually configure the test dataset

+test_dataloader = val_dataloader

+test_evaluator = val_evaluator

diff --git a/configs/_base_/datasets/coco_vg_vqa.py b/configs/_base_/datasets/coco_vg_vqa.py

new file mode 100644

index 00000000000..7ba0eac4685

--- /dev/null

+++ b/configs/_base_/datasets/coco_vg_vqa.py

@@ -0,0 +1,96 @@

+# data settings

+data_preprocessor = dict(

+ type='MultiModalDataPreprocessor',

+ mean=[122.770938, 116.7460125, 104.09373615],

+ std=[68.5005327, 66.6321579, 70.32316305],

+ to_rgb=True,

+)

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='RandomResizedCrop',

+ scale=(480, 480),

+ crop_ratio_range=(0.5, 1.0),

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='RandomFlip', prob=0.5, direction='horizontal'),

+ dict(

+ type='RandAugment',

+ policies='simple_increasing', # slightly different from LAVIS

+ num_policies=2,

+ magnitude_level=5),

+ dict(type='CleanCaption', keys=['question', 'gt_answer']),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['question', 'gt_answer', 'gt_answer_weight']),

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='Resize',

+ scale=(480, 480),

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='CleanCaption', keys=['question']),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['question'],

+ meta_keys=['question_id']),

+]

+

+train_dataloader = dict(

+ batch_size=32,

+ num_workers=8,

+ dataset=dict(

+ type='ConcatDataset',

+ datasets=[

+ # VQAv2 train

+ dict(

+ type='COCOVQA',

+ data_root='data/coco',

+ data_prefix='train2014',

+ question_file=

+ 'annotations/v2_OpenEnded_mscoco_train2014_questions.json',

+ ann_file='annotations/v2_mscoco_train2014_annotations.json',

+ pipeline=train_pipeline,

+ ),

+ # VQAv2 val

+ dict(

+ type='COCOVQA',

+ data_root='data/coco',

+ data_prefix='val2014',

+ question_file=

+ 'annotations/v2_OpenEnded_mscoco_val2014_questions.json',

+ ann_file='annotations/v2_mscoco_val2014_annotations.json',

+ pipeline=train_pipeline,

+ ),

+ # Visual Genome

+ dict(

+ type='VisualGenomeQA',

+ data_root='visual_genome',

+ data_prefix='image',

+ ann_file='question_answers.json',

+ pipeline=train_pipeline,

+ )

+ ]),

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ persistent_workers=True,

+ drop_last=True,

+)

+

+test_dataloader = dict(

+ batch_size=32,

+ num_workers=8,

+ dataset=dict(

+ type='COCOVQA',

+ data_root='data/coco',

+ data_prefix='test2015',

+ question_file=

+ 'annotations/v2_OpenEnded_mscoco_test2015_questions.json', # noqa: E501

+ pipeline=test_pipeline,

+ ),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+)

+test_evaluator = dict(type='ReportVQA', file_path='vqa_test.json')

diff --git a/configs/_base_/datasets/coco_vqa.py b/configs/_base_/datasets/coco_vqa.py

new file mode 100644

index 00000000000..7fb16bd241b

--- /dev/null

+++ b/configs/_base_/datasets/coco_vqa.py

@@ -0,0 +1,84 @@

+# data settings

+

+data_preprocessor = dict(

+ mean=[122.770938, 116.7460125, 104.09373615],

+ std=[68.5005327, 66.6321579, 70.32316305],

+ to_rgb=True,

+)

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='RandomResizedCrop',

+ scale=384,

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['question', 'gt_answer', 'gt_answer_weight'],

+ meta_keys=['question_id', 'image_id'],

+ ),

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='Resize',

+ scale=(480, 480),

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(

+ type='CleanCaption',

+ keys=['question'],

+ ),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['question', 'gt_answer', 'gt_answer_weight'],

+ meta_keys=['question_id', 'image_id'],

+ ),

+]

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='COCOVQA',

+ data_root='data/coco',

+ data_prefix='train2014',

+ question_file=

+ 'annotations/v2_OpenEnded_mscoco_train2014_questions.json',

+ ann_file='annotations/v2_mscoco_train2014_annotations.json',

+ pipeline=train_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ persistent_workers=True,

+ drop_last=True,

+)

+

+val_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='COCOVQA',

+ data_root='data/coco',

+ data_prefix='val2014',

+ question_file='annotations/v2_OpenEnded_mscoco_val2014_questions.json',

+ ann_file='annotations/v2_mscoco_val2014_annotations.json',

+ pipeline=test_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ persistent_workers=True,

+)

+val_evaluator = dict(type='VQAAcc')

+

+test_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='COCOVQA',

+ data_root='data/coco',

+ data_prefix='test2015',

+ question_file= # noqa: E251

+ 'annotations/v2_OpenEnded_mscoco_test2015_questions.json',

+ pipeline=test_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+)

+test_evaluator = dict(type='ReportVQA', file_path='vqa_test.json')

diff --git a/configs/_base_/datasets/nlvr2.py b/configs/_base_/datasets/nlvr2.py

new file mode 100644

index 00000000000..2f5314bcd14

--- /dev/null

+++ b/configs/_base_/datasets/nlvr2.py

@@ -0,0 +1,86 @@

+# dataset settings

+data_preprocessor = dict(

+ type='MultiModalDataPreprocessor',

+ mean=[122.770938, 116.7460125, 104.09373615],

+ std=[68.5005327, 66.6321579, 70.32316305],

+ to_rgb=True,

+)

+

+train_pipeline = [

+ dict(

+ type='ApplyToList',

+ # NLVR requires to load two images in task.

+ scatter_key='img_path',

+ transforms=[

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='RandomResizedCrop',

+ scale=384,

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='RandomFlip', prob=0.5, direction='horizontal'),

+ ],

+ collate_keys=['img', 'scale_factor', 'ori_shape'],

+ ),

+ dict(type='CleanCaption', keys='text'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['text'],

+ meta_keys=['image_id'],

+ ),

+]

+

+test_pipeline = [

+ dict(

+ type='ApplyToList',

+ # NLVR requires to load two images in task.

+ scatter_key='img_path',

+ transforms=[

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='Resize',

+ scale=(384, 384),

+ interpolation='bicubic',

+ backend='pillow'),

+ ],

+ collate_keys=['img', 'scale_factor', 'ori_shape'],

+ ),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['text'],

+ meta_keys=['image_id'],

+ ),

+]

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='NLVR2',

+ data_root='data/nlvr2',

+ ann_file='dev.json',

+ data_prefix='dev',

+ pipeline=train_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ persistent_workers=True,

+ drop_last=True,

+)

+

+val_dataloader = dict(

+ batch_size=64,

+ num_workers=8,

+ dataset=dict(

+ type='NLVR2',

+ data_root='data/nlvr2',

+ ann_file='dev.json',

+ data_prefix='dev',

+ pipeline=test_pipeline,

+ ),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ persistent_workers=True,

+)

+val_evaluator = dict(type='Accuracy')

+

+# If you want standard test, please manually configure the test dataset

+test_dataloader = val_dataloader

+test_evaluator = val_evaluator

diff --git a/configs/_base_/datasets/refcoco.py b/configs/_base_/datasets/refcoco.py

new file mode 100644

index 00000000000..f698e76c032

--- /dev/null

+++ b/configs/_base_/datasets/refcoco.py

@@ -0,0 +1,105 @@

+# data settings

+

+data_preprocessor = dict(

+ mean=[122.770938, 116.7460125, 104.09373615],

+ std=[68.5005327, 66.6321579, 70.32316305],

+ to_rgb=True,

+)

+

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='RandomApply',

+ transforms=[

+ dict(

+ type='ColorJitter',

+ brightness=0.4,

+ contrast=0.4,

+ saturation=0.4,

+ hue=0.1,

+ backend='cv2')

+ ],

+ prob=0.5),

+ dict(

+ type='mmdet.RandomCrop',

+ crop_type='relative_range',

+ crop_size=(0.8, 0.8),

+ allow_negative_crop=False),

+ dict(

+ type='RandomChoiceResize',

+ scales=[(384, 384), (360, 360), (344, 344), (312, 312), (300, 300),

+ (286, 286), (270, 270)],

+ keep_ratio=False),

+ dict(

+ type='RandomTranslatePad',

+ size=384,

+ aug_translate=True,

+ ),

+ dict(type='CleanCaption', keys='text'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['text', 'gt_bboxes', 'scale_factor'],

+ meta_keys=['image_id'],

+ ),

+]

+

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='Resize',

+ scale=(384, 384),

+ interpolation='bicubic',

+ backend='pillow'),

+ dict(type='CleanCaption', keys='text'),

+ dict(

+ type='PackInputs',

+ algorithm_keys=['text', 'gt_bboxes', 'scale_factor'],

+ meta_keys=['image_id'],

+ ),

+]

+

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='RefCOCO',

+ data_root='data/coco',

+ data_prefix='train2014',

+ ann_file='refcoco/instances.json',

+ split_file='refcoco/refs(unc).p',

+ split='train',

+ pipeline=train_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ drop_last=True,

+)

+

+val_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='RefCOCO',

+ data_root='data/coco',

+ data_prefix='train2014',

+ ann_file='refcoco/instances.json',

+ split_file='refcoco/refs(unc).p',

+ split='val',

+ pipeline=test_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+)

+

+val_evaluator = dict(type='VisualGroundingMetric')

+

+test_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ dataset=dict(

+ type='RefCOCO',

+ data_root='data/coco',

+ data_prefix='train2014',

+ ann_file='refcoco/instances.json',

+ split_file='refcoco/refs(unc).p',

+ split='testA', # or 'testB'

+ pipeline=test_pipeline),

+ sampler=dict(type='DefaultSampler', shuffle=False),

+)

+test_evaluator = val_evaluator

diff --git a/configs/blip/README.md b/configs/blip/README.md

new file mode 100644

index 00000000000..ac449248bc3

--- /dev/null

+++ b/configs/blip/README.md

@@ -0,0 +1,90 @@

+# BLIP

+

+> [BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation](https://arxiv.org/abs/2201.12086)

+

+

+

+## Abstract

+

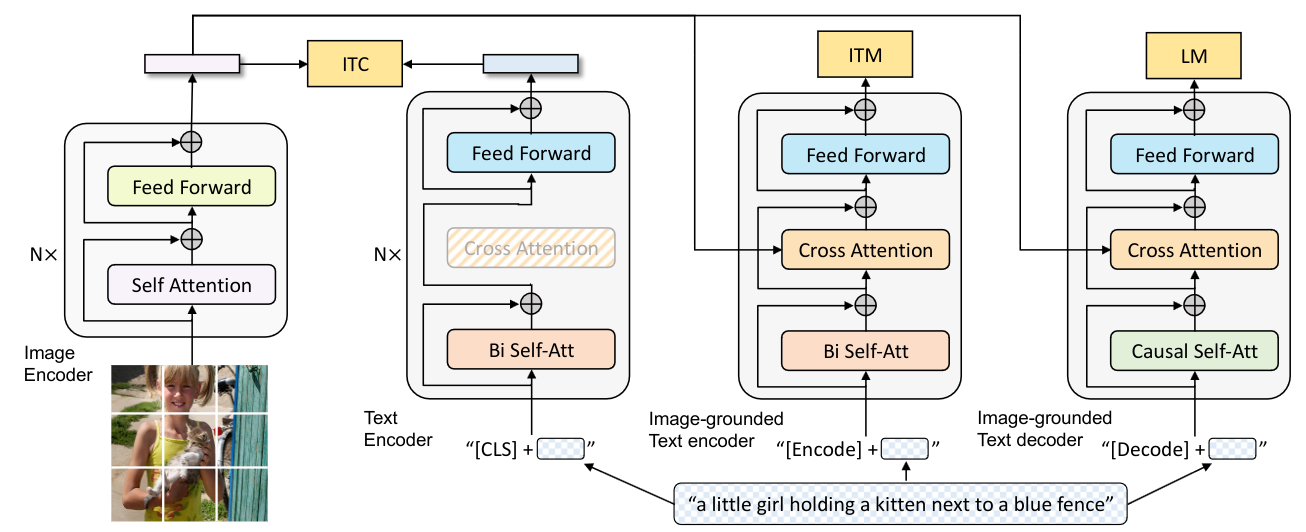

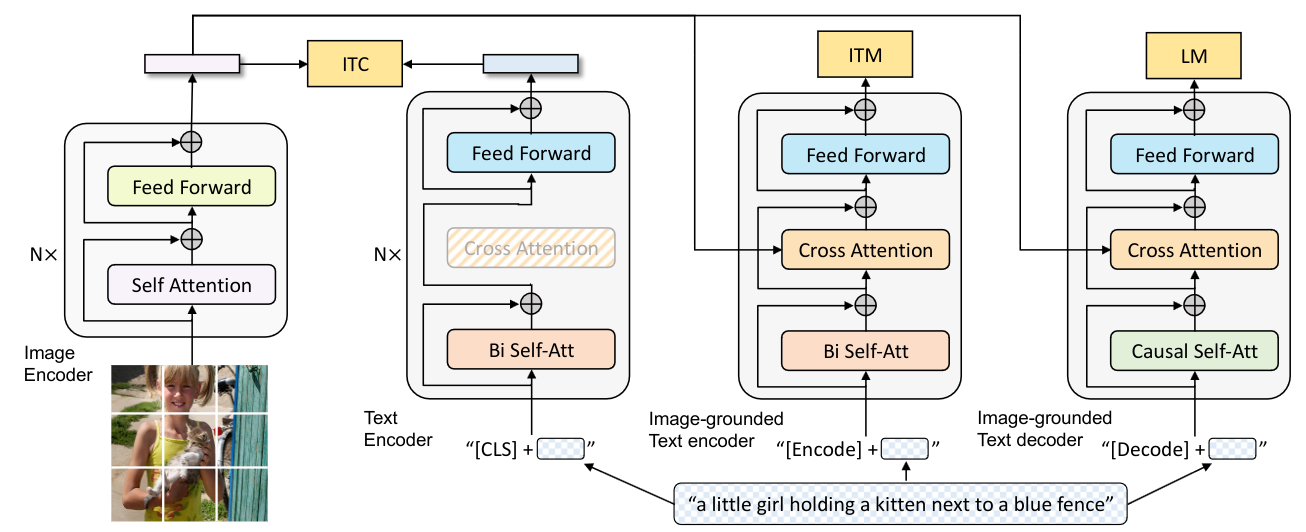

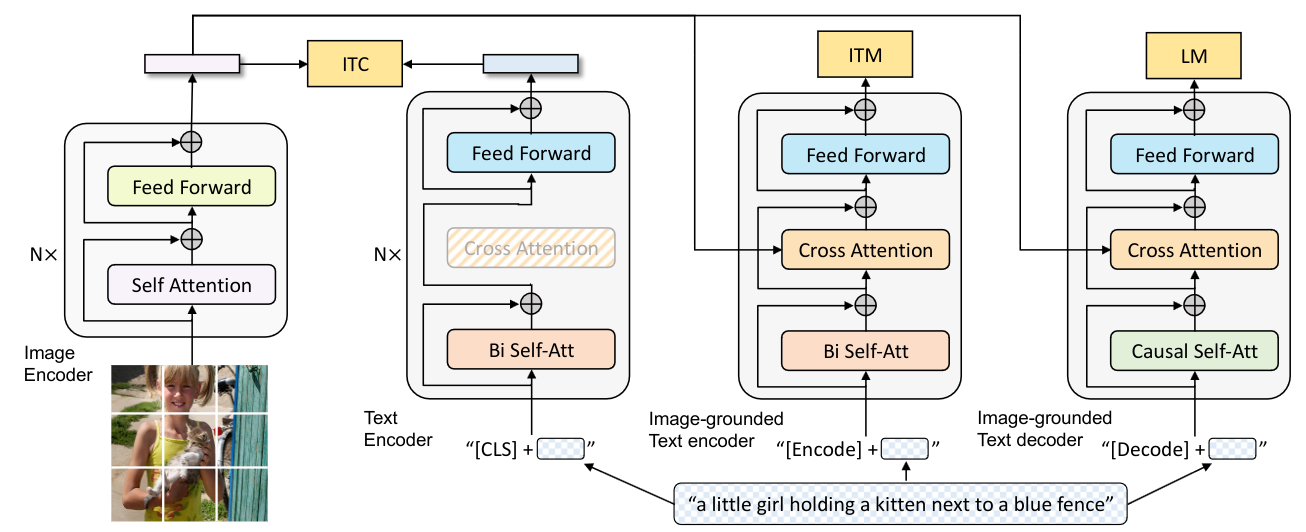

+Vision-Language Pre-training (VLP) has advanced the performance for many vision-language tasks. However, most existing pre-trained models only excel in either understanding-based tasks or generation-based tasks. Furthermore, performance improvement has been largely achieved by scaling up the dataset with noisy image-text pairs collected from the web, which is a suboptimal source of supervision. In this paper, we propose BLIP, a new VLP framework which transfers flexibly to both vision-language understanding and generation tasks. BLIP effectively utilizes the noisy web data by bootstrapping the captions, where a captioner generates synthetic captions and a filter removes the noisy ones. We achieve state-of-the-art results on a wide range of vision-language tasks, such as image-text retrieval (+2.7% in average recall@1), image captioning (+2.8% in CIDEr), and VQA (+1.6% in VQA score). BLIP also demonstrates strong generalization ability when directly transferred to video-language tasks in a zero-shot manner.

+

+

+

+

+

+

+

+

+

+

+

## Extract Features From Image

Compared with `model.extract_feat`, `FeatureExtractor` is used to extract features from the image files directly, instead of a batch of tensors.

In a word, the input of `model.extract_feat` is `torch.Tensor`, the input of `FeatureExtractor` is images.

-```

+```python

>>> from mmpretrain import FeatureExtractor, get_model

>>> model = get_model('resnet50_8xb32_in1k', backbone=dict(out_indices=(0, 1, 2, 3)))

>>> extractor = FeatureExtractor(model)

diff --git a/docs/zh_CN/conf.py b/docs/zh_CN/conf.py

index a98d275c620..2c372a8ae59 100644

--- a/docs/zh_CN/conf.py

+++ b/docs/zh_CN/conf.py

@@ -223,6 +223,8 @@ def get_version():

'torch': ('https://pytorch.org/docs/stable/', None),

'mmcv': ('https://mmcv.readthedocs.io/zh_CN/2.x/', None),

'mmengine': ('https://mmengine.readthedocs.io/zh_CN/latest/', None),

+ 'transformers':

+ ('https://huggingface.co/docs/transformers/main/zh/', None),

}

napoleon_custom_sections = [

# Custom sections for data elements.

diff --git a/docs/zh_CN/get_started.md b/docs/zh_CN/get_started.md

index 6d77e426bac..c2100815aed 100644

--- a/docs/zh_CN/get_started.md

+++ b/docs/zh_CN/get_started.md

@@ -74,6 +74,18 @@ pip install -U openmim && mim install "mmpretrain>=1.0.0rc7"

`mim` 是一个轻量级的命令行工具,可以根据 PyTorch 和 CUDA 版本为 OpenMMLab 算法库配置合适的环境。同时它也提供了一些对于深度学习实验很有帮助的功能。

```

+## 安装多模态支持 (可选)

+

+MMPretrain 中的多模态模型需要额外的依赖项,要安装这些依赖项,请在安装过程中添加 `[multimodal]` 参数,如下所示:

+

+```shell

+# 从源码安装

+mim install -e ".[multimodal]"

+

+# 作为 Python 包安装

+mim install "mmpretrain[multimodal]>=1.0.0rc7"

+```

+

## 验证安装

为了验证 MMPretrain 的安装是否正确,我们提供了一些示例代码来执行模型推理。

diff --git a/docs/zh_CN/stat.py b/docs/zh_CN/stat.py

index 70ea692d531..29e57563ccf 100755

--- a/docs/zh_CN/stat.py

+++ b/docs/zh_CN/stat.py

@@ -173,7 +173,10 @@ def generate_summary_table(task, model_result_pairs, title=None):

continue

name = model.name

params = f'{model.metadata.parameters / 1e6:.2f}' # Params

- flops = f'{model.metadata.flops / 1e9:.2f}' # Params

+ if model.metadata.flops is not None:

+ flops = f'{model.metadata.flops / 1e9:.2f}' # Flops

+ else:

+ flops = None

readme = Path(model.collection.filepath).parent.with_suffix('.md').name

page = f'[链接]({PAPERS_ROOT / readme})'

model_metrics = []

diff --git a/docs/zh_CN/user_guides/inference.md b/docs/zh_CN/user_guides/inference.md

index a5efb8bd0f7..068e42e16de 100644

--- a/docs/zh_CN/user_guides/inference.md

+++ b/docs/zh_CN/user_guides/inference.md

@@ -2,25 +2,45 @@

本文将展示如何使用以下API:

-1. [**`list_models`**](mmpretrain.apis.list_models) 和 [**`get_model`**](mmpretrain.apis.get_model) :列出 MMPreTrain 中的模型并获取模型。

-2. [**`ImageClassificationInferencer`**](mmpretrain.apis.ImageClassificationInferencer): 在给定图像上进行推理。

-3. [**`FeatureExtractor`**](mmpretrain.apis.FeatureExtractor): 从图像文件直接提取特征。

-

-## 列出模型和获取模型

+- [**`list_models`**](mmpretrain.apis.list_models): 列举 MMPretrain 中所有可用模型名称

+- [**`get_model`**](mmpretrain.apis.get_model): 通过模型名称或模型配置文件获取模型

+- [**`inference_model`**](mmpretrain.apis.inference_model): 使用与模型相对应任务的推理器进行推理。主要用作快速

+ 展示。如需配置进阶用法,还需要直接使用下列推理器。

+- 推理器:

+ 1. [**`ImageClassificationInferencer`**](mmpretrain.apis.ImageClassificationInferencer):

+ 对给定图像执行图像分类。

+ 2. [**`ImageRetrievalInferencer`**](mmpretrain.apis.ImageRetrievalInferencer):

+ 从给定的一系列图像中,检索与给定图像最相似的图像。

+ 3. [**`ImageCaptionInferencer`**](mmpretrain.apis.ImageCaptionInferencer):

+ 生成给定图像的一段描述。

+ 4. [**`VisualQuestionAnsweringInferencer`**](mmpretrain.apis.VisualQuestionAnsweringInferencer):

+ 根据给定的图像回答问题。

+ 5. [**`VisualGroundingInferencer`**](mmpretrain.apis.VisualGroundingInferencer):

+ 根据一段描述,从给定图像中找到一个与描述对应的对象。

+ 6. [**`TextToImageRetrievalInferencer`**](mmpretrain.apis.TextToImageRetrievalInferencer):

+ 从给定的一系列图像中,检索与给定文本最相似的图像。

+ 7. [**`ImageToTextRetrievalInferencer`**](mmpretrain.apis.ImageToTextRetrievalInferencer):

+ 从给定的一系列文本中,检索与给定图像最相似的文本。

+ 8. [**`NLVRInferencer`**](mmpretrain.apis.NLVRInferencer):

+ 对给定的一对图像和一段文本进行自然语言视觉推理(NLVR 任务)。

+ 9. [**`FeatureExtractor`**](mmpretrain.apis.FeatureExtractor):

+ 通过视觉主干网络从图像文件提取特征。

+

+## 列举可用模型

列出 MMPreTrain 中的所有已支持的模型。

-```

+```python

>>> from mmpretrain import list_models

>>> list_models()

['barlowtwins_resnet50_8xb256-coslr-300e_in1k',

'beit-base-p16_beit-in21k-pre_3rdparty_in1k',

- .................]

+ ...]

```

-`list_models` 支持模糊匹配,您可以使用 **\*** 匹配任意字符。

+`list_models` 支持 Unix 文件名风格的模式匹配,你可以使用 \*\* * \*\* 匹配任意字符。

-```

+```python

>>> from mmpretrain import list_models

>>> list_models("*convnext-b*21k")

['convnext-base_3rdparty_in21k',

@@ -28,30 +48,43 @@

'convnext-base_in21k-pre_3rdparty_in1k']

```

-了解了已经支持了哪些模型后,你可以使用 `get_model` 获取特定模型。

+你还可以使用推理器的 `list_models` 方法获取对应任务可用的所有模型。

+```python

+>>> from mmpretrain import ImageCaptionInferencer

+>>> ImageCaptionInferencer.list_models()

+['blip-base_3rdparty_caption',

+ 'blip2-opt2.7b_3rdparty-zeroshot_caption',

+ 'flamingo_3rdparty-zeroshot_caption',

+ 'ofa-base_3rdparty-finetuned_caption']

```

+

+## 获取模型

+

+选定需要的模型后,你可以使用 `get_model` 获取特定模型。

+

+```python

>>> from mmpretrain import get_model

-# 没有预训练权重的模型

+# 不加载预训练权重的模型

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k")

-# 使用MMPreTrain中默认的权重

+# 加载默认的权重文件

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k", pretrained=True)

-# 使用本地权重

+# 加载制定的权重文件

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k", pretrained="your_local_checkpoint_path")

-# 您还可以做一些修改,例如修改 head 中的 num_classes。

+# 指定额外的模型初始化参数,例如修改 head 中的 num_classes。

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k", head=dict(num_classes=10))

-# 您可以获得没有 neck,head 的模型,并直接从 backbone 中的 stage 1, 2, 3 输出

+# 另外一个例子:移除模型的 neck,head 模块,直接从 backbone 中的 stage 1, 2, 3 输出

>>> model_headless = get_model("resnet18_8xb32_in1k", head=None, neck=None, backbone=dict(out_indices=(1, 2, 3)))

```

-得到模型后,你可以进行推理:

+获得的模型是一个通常的 PyTorch Module

-```

+```python

>>> import torch

>>> from mmpretrain import get_model

>>> model = get_model('convnext-base_in21k-pre_3rdparty_in1k', pretrained=True)

@@ -63,45 +96,71 @@

## 在给定图像上进行推理

-这是一个使用 ImageNet-1k 预训练权重在给定图像上构建推理器的示例。

+这里是一个例子,我们将使用 ResNet-50 预训练模型对给定的 [图像](https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG) 进行分类。

+```python

+>>> from mmpretrain import inference_model

+>>> image = 'https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'

+>>> # 如果你没有图形界面,请设置 `show=False`

+>>> result = inference_model('resnet50_8xb32_in1k', image, show=True)

+>>> print(result['pred_class'])

+sea snake

```

->>> from mmpretrain import ImageClassificationInferencer

+上述 `inference_model` 接口可以快速进行模型推理,但它每次调用都需要重新初始化模型,也无法进行多个样本的推理。

+因此我们需要使用推理器来进行多次调用。

+

+```python

+>>> from mmpretrain import ImageClassificationInferencer

+>>> image = 'https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'

>>> inferencer = ImageClassificationInferencer('resnet50_8xb32_in1k')

->>> results = inferencer('https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG')

->>> print(results[0]['pred_class'])

+>>> # 注意推理器的输出始终为一个结果列表,即使输入只有一个样本

+>>> result = inferencer('https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG')[0]

+>>> print(result['pred_class'])

sea snake

+>>>

+>>> # 你可以对多张图像进行批量推理

+>>> image_list = ['demo/demo.JPEG', 'demo/bird.JPEG'] * 16

+>>> results = inferencer(image_list, batch_size=8)

+>>> print(len(results))

+32

+>>> print(results[1]['pred_class'])

+house finch, linnet, Carpodacus mexicanus

```

-result 是一个包含 pred_label、pred_score、pred_scores 和 pred_class 的字典,结果如下:

+通常,每个样本的结果都是一个字典。比如图像分类的结果是一个包含了 `pred_label`、`pred_score`、`pred_scores`、`pred_class` 等字段的字典:

-```{text}

-{"pred_label":65,"pred_score":0.6649366617202759,"pred_class":"sea snake", "pred_scores": [..., 0.6649366617202759, ...]}

+```python

+{

+ "pred_label": 65,

+ "pred_score": 0.6649366617202759,

+ "pred_class":"sea snake",

+ "pred_scores": array([..., 0.6649366617202759, ...], dtype=float32)

+}

```

-如果你想使用自己的配置和权重:

+你可以为推理器配置额外的参数,比如使用你自己的配置文件和权重文件,在 CUDA 上进行推理:

-```

+```python

>>> from mmpretrain import ImageClassificationInferencer

->>> inferencer = ImageClassificationInferencer(

- model='configs/resnet/resnet50_8xb32_in1k.py',

- pretrained='https://download.openmmlab.com/mmclassification/v0/resnet/resnet50_8xb32_in1k_20210831-ea4938fc.pth',

- device='cuda')

->>> inferencer('https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG')

+>>> image = 'https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'

+>>> config = 'configs/resnet/resnet50_8xb32_in1k.py'

+>>> checkpoint = 'https://download.openmmlab.com/mmclassification/v0/resnet/resnet50_8xb32_in1k_20210831-ea4938fc.pth'

+>>> inferencer = ImageClassificationInferencer(model=config, pretrained=checkpoint, device='cuda')

+>>> result = inferencer(image)[0]

+>>> print(result['pred_class'])

+sea snake

```

-你还可以在CUDA上通过批处理进行多个图像的推理:

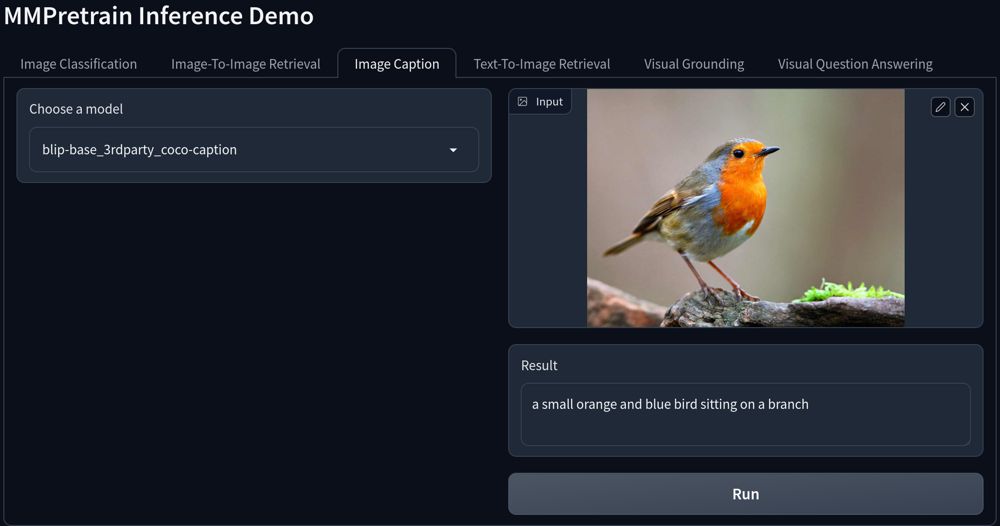

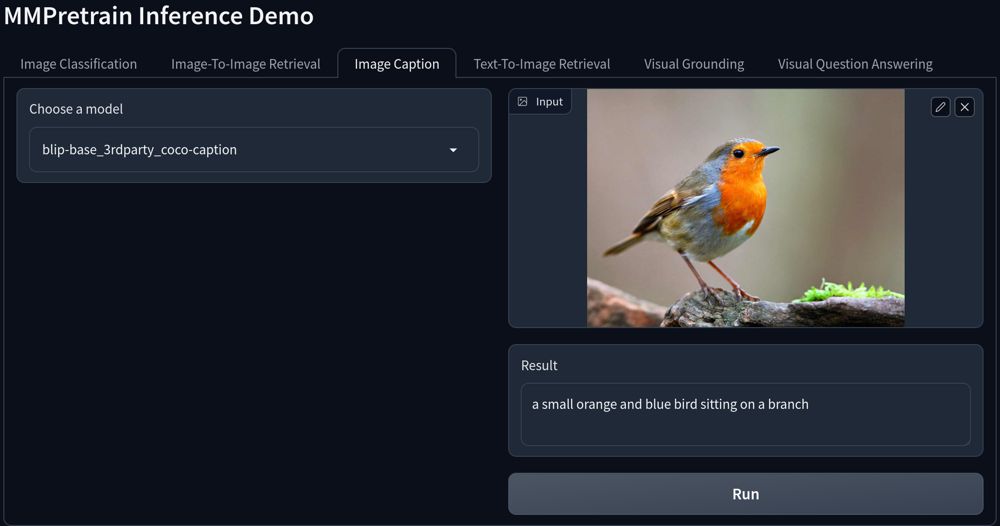

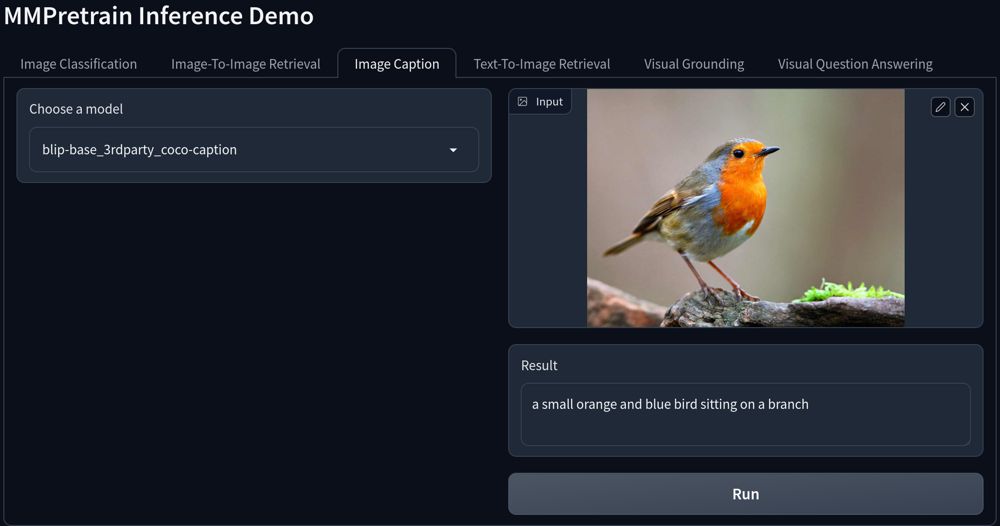

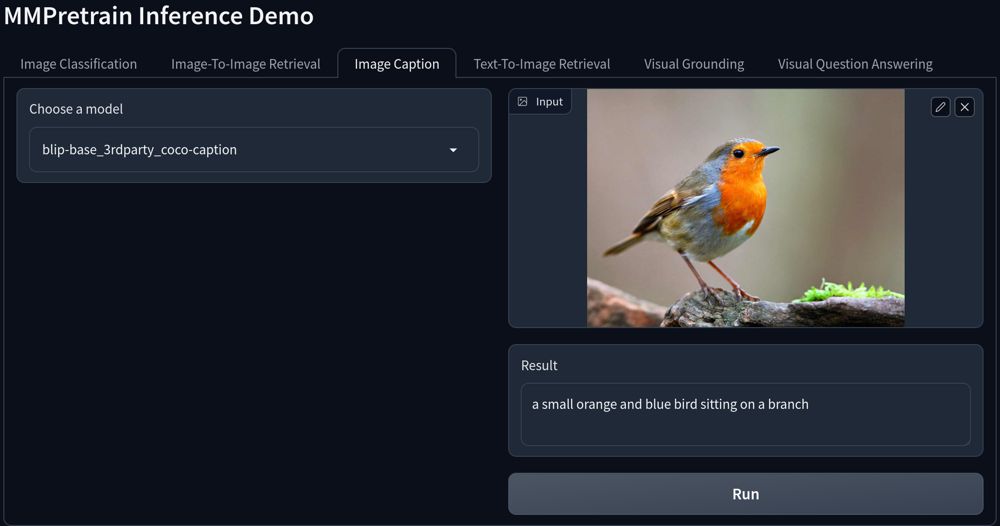

+## 使用 Gradio 推理示例

-```{python}

->>> from mmpretrain import ImageClassificationInferencer

+我们还提供了一个基于 gradio 的推理示例,提供了 MMPretrain 所支持的所有任务的推理展示功能,你可以在 [projects/gradio_demo/launch.py](https://github.com/open-mmlab/mmpretrain/blob/main/projects/gradio_demo/launch.py) 找到这一例程。

->>> inferencer = ImageClassificationInferencer('resnet50_8xb32_in1k', device='cuda')

->>> imgs = ['https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'] * 5

->>> results = inferencer(imgs, batch_size=2)

->>> print(results[1]['pred_class'])

-sea snake

-```

+请首先使用 `pip install -U gradio` 安装 `gradio` 库。

+

+这里是界面效果预览:

+

+

+

## Extract Features From Image

Compared with `model.extract_feat`, `FeatureExtractor` is used to extract features from the image files directly, instead of a batch of tensors.

In a word, the input of `model.extract_feat` is `torch.Tensor`, the input of `FeatureExtractor` is images.

-```

+```python

>>> from mmpretrain import FeatureExtractor, get_model

>>> model = get_model('resnet50_8xb32_in1k', backbone=dict(out_indices=(0, 1, 2, 3)))

>>> extractor = FeatureExtractor(model)

diff --git a/docs/zh_CN/conf.py b/docs/zh_CN/conf.py

index a98d275c620..2c372a8ae59 100644

--- a/docs/zh_CN/conf.py

+++ b/docs/zh_CN/conf.py

@@ -223,6 +223,8 @@ def get_version():

'torch': ('https://pytorch.org/docs/stable/', None),

'mmcv': ('https://mmcv.readthedocs.io/zh_CN/2.x/', None),

'mmengine': ('https://mmengine.readthedocs.io/zh_CN/latest/', None),

+ 'transformers':

+ ('https://huggingface.co/docs/transformers/main/zh/', None),

}

napoleon_custom_sections = [

# Custom sections for data elements.

diff --git a/docs/zh_CN/get_started.md b/docs/zh_CN/get_started.md

index 6d77e426bac..c2100815aed 100644

--- a/docs/zh_CN/get_started.md

+++ b/docs/zh_CN/get_started.md

@@ -74,6 +74,18 @@ pip install -U openmim && mim install "mmpretrain>=1.0.0rc7"

`mim` 是一个轻量级的命令行工具,可以根据 PyTorch 和 CUDA 版本为 OpenMMLab 算法库配置合适的环境。同时它也提供了一些对于深度学习实验很有帮助的功能。

```

+## 安装多模态支持 (可选)

+

+MMPretrain 中的多模态模型需要额外的依赖项,要安装这些依赖项,请在安装过程中添加 `[multimodal]` 参数,如下所示:

+

+```shell

+# 从源码安装

+mim install -e ".[multimodal]"

+

+# 作为 Python 包安装

+mim install "mmpretrain[multimodal]>=1.0.0rc7"

+```

+

## 验证安装

为了验证 MMPretrain 的安装是否正确,我们提供了一些示例代码来执行模型推理。

diff --git a/docs/zh_CN/stat.py b/docs/zh_CN/stat.py

index 70ea692d531..29e57563ccf 100755

--- a/docs/zh_CN/stat.py

+++ b/docs/zh_CN/stat.py

@@ -173,7 +173,10 @@ def generate_summary_table(task, model_result_pairs, title=None):

continue

name = model.name

params = f'{model.metadata.parameters / 1e6:.2f}' # Params

- flops = f'{model.metadata.flops / 1e9:.2f}' # Params

+ if model.metadata.flops is not None:

+ flops = f'{model.metadata.flops / 1e9:.2f}' # Flops

+ else:

+ flops = None

readme = Path(model.collection.filepath).parent.with_suffix('.md').name

page = f'[链接]({PAPERS_ROOT / readme})'

model_metrics = []

diff --git a/docs/zh_CN/user_guides/inference.md b/docs/zh_CN/user_guides/inference.md

index a5efb8bd0f7..068e42e16de 100644

--- a/docs/zh_CN/user_guides/inference.md

+++ b/docs/zh_CN/user_guides/inference.md

@@ -2,25 +2,45 @@

本文将展示如何使用以下API:

-1. [**`list_models`**](mmpretrain.apis.list_models) 和 [**`get_model`**](mmpretrain.apis.get_model) :列出 MMPreTrain 中的模型并获取模型。

-2. [**`ImageClassificationInferencer`**](mmpretrain.apis.ImageClassificationInferencer): 在给定图像上进行推理。

-3. [**`FeatureExtractor`**](mmpretrain.apis.FeatureExtractor): 从图像文件直接提取特征。

-

-## 列出模型和获取模型

+- [**`list_models`**](mmpretrain.apis.list_models): 列举 MMPretrain 中所有可用模型名称

+- [**`get_model`**](mmpretrain.apis.get_model): 通过模型名称或模型配置文件获取模型

+- [**`inference_model`**](mmpretrain.apis.inference_model): 使用与模型相对应任务的推理器进行推理。主要用作快速

+ 展示。如需配置进阶用法,还需要直接使用下列推理器。

+- 推理器:

+ 1. [**`ImageClassificationInferencer`**](mmpretrain.apis.ImageClassificationInferencer):

+ 对给定图像执行图像分类。

+ 2. [**`ImageRetrievalInferencer`**](mmpretrain.apis.ImageRetrievalInferencer):

+ 从给定的一系列图像中,检索与给定图像最相似的图像。

+ 3. [**`ImageCaptionInferencer`**](mmpretrain.apis.ImageCaptionInferencer):

+ 生成给定图像的一段描述。

+ 4. [**`VisualQuestionAnsweringInferencer`**](mmpretrain.apis.VisualQuestionAnsweringInferencer):

+ 根据给定的图像回答问题。

+ 5. [**`VisualGroundingInferencer`**](mmpretrain.apis.VisualGroundingInferencer):

+ 根据一段描述,从给定图像中找到一个与描述对应的对象。

+ 6. [**`TextToImageRetrievalInferencer`**](mmpretrain.apis.TextToImageRetrievalInferencer):

+ 从给定的一系列图像中,检索与给定文本最相似的图像。

+ 7. [**`ImageToTextRetrievalInferencer`**](mmpretrain.apis.ImageToTextRetrievalInferencer):

+ 从给定的一系列文本中,检索与给定图像最相似的文本。

+ 8. [**`NLVRInferencer`**](mmpretrain.apis.NLVRInferencer):

+ 对给定的一对图像和一段文本进行自然语言视觉推理(NLVR 任务)。

+ 9. [**`FeatureExtractor`**](mmpretrain.apis.FeatureExtractor):

+ 通过视觉主干网络从图像文件提取特征。

+

+## 列举可用模型

列出 MMPreTrain 中的所有已支持的模型。

-```

+```python

>>> from mmpretrain import list_models

>>> list_models()

['barlowtwins_resnet50_8xb256-coslr-300e_in1k',

'beit-base-p16_beit-in21k-pre_3rdparty_in1k',

- .................]

+ ...]

```

-`list_models` 支持模糊匹配,您可以使用 **\*** 匹配任意字符。

+`list_models` 支持 Unix 文件名风格的模式匹配,你可以使用 \*\* * \*\* 匹配任意字符。

-```

+```python

>>> from mmpretrain import list_models

>>> list_models("*convnext-b*21k")

['convnext-base_3rdparty_in21k',

@@ -28,30 +48,43 @@

'convnext-base_in21k-pre_3rdparty_in1k']

```

-了解了已经支持了哪些模型后,你可以使用 `get_model` 获取特定模型。

+你还可以使用推理器的 `list_models` 方法获取对应任务可用的所有模型。

+```python

+>>> from mmpretrain import ImageCaptionInferencer

+>>> ImageCaptionInferencer.list_models()

+['blip-base_3rdparty_caption',

+ 'blip2-opt2.7b_3rdparty-zeroshot_caption',

+ 'flamingo_3rdparty-zeroshot_caption',

+ 'ofa-base_3rdparty-finetuned_caption']

```

+

+## 获取模型

+

+选定需要的模型后,你可以使用 `get_model` 获取特定模型。

+

+```python

>>> from mmpretrain import get_model

-# 没有预训练权重的模型

+# 不加载预训练权重的模型

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k")

-# 使用MMPreTrain中默认的权重

+# 加载默认的权重文件

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k", pretrained=True)

-# 使用本地权重

+# 加载制定的权重文件

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k", pretrained="your_local_checkpoint_path")

-# 您还可以做一些修改,例如修改 head 中的 num_classes。

+# 指定额外的模型初始化参数,例如修改 head 中的 num_classes。

>>> model = get_model("convnext-base_in21k-pre_3rdparty_in1k", head=dict(num_classes=10))

-# 您可以获得没有 neck,head 的模型,并直接从 backbone 中的 stage 1, 2, 3 输出

+# 另外一个例子:移除模型的 neck,head 模块,直接从 backbone 中的 stage 1, 2, 3 输出

>>> model_headless = get_model("resnet18_8xb32_in1k", head=None, neck=None, backbone=dict(out_indices=(1, 2, 3)))

```

-得到模型后,你可以进行推理:

+获得的模型是一个通常的 PyTorch Module

-```

+```python

>>> import torch

>>> from mmpretrain import get_model

>>> model = get_model('convnext-base_in21k-pre_3rdparty_in1k', pretrained=True)

@@ -63,45 +96,71 @@

## 在给定图像上进行推理

-这是一个使用 ImageNet-1k 预训练权重在给定图像上构建推理器的示例。

+这里是一个例子,我们将使用 ResNet-50 预训练模型对给定的 [图像](https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG) 进行分类。

+```python

+>>> from mmpretrain import inference_model

+>>> image = 'https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'

+>>> # 如果你没有图形界面,请设置 `show=False`

+>>> result = inference_model('resnet50_8xb32_in1k', image, show=True)

+>>> print(result['pred_class'])

+sea snake

```

->>> from mmpretrain import ImageClassificationInferencer

+上述 `inference_model` 接口可以快速进行模型推理,但它每次调用都需要重新初始化模型,也无法进行多个样本的推理。

+因此我们需要使用推理器来进行多次调用。

+

+```python

+>>> from mmpretrain import ImageClassificationInferencer

+>>> image = 'https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'

>>> inferencer = ImageClassificationInferencer('resnet50_8xb32_in1k')

->>> results = inferencer('https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG')

->>> print(results[0]['pred_class'])

+>>> # 注意推理器的输出始终为一个结果列表,即使输入只有一个样本

+>>> result = inferencer('https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG')[0]

+>>> print(result['pred_class'])

sea snake

+>>>

+>>> # 你可以对多张图像进行批量推理

+>>> image_list = ['demo/demo.JPEG', 'demo/bird.JPEG'] * 16

+>>> results = inferencer(image_list, batch_size=8)

+>>> print(len(results))

+32

+>>> print(results[1]['pred_class'])

+house finch, linnet, Carpodacus mexicanus

```

-result 是一个包含 pred_label、pred_score、pred_scores 和 pred_class 的字典,结果如下:

+通常,每个样本的结果都是一个字典。比如图像分类的结果是一个包含了 `pred_label`、`pred_score`、`pred_scores`、`pred_class` 等字段的字典:

-```{text}

-{"pred_label":65,"pred_score":0.6649366617202759,"pred_class":"sea snake", "pred_scores": [..., 0.6649366617202759, ...]}

+```python

+{

+ "pred_label": 65,

+ "pred_score": 0.6649366617202759,

+ "pred_class":"sea snake",

+ "pred_scores": array([..., 0.6649366617202759, ...], dtype=float32)

+}

```

-如果你想使用自己的配置和权重:

+你可以为推理器配置额外的参数,比如使用你自己的配置文件和权重文件,在 CUDA 上进行推理:

-```

+```python

>>> from mmpretrain import ImageClassificationInferencer

->>> inferencer = ImageClassificationInferencer(

- model='configs/resnet/resnet50_8xb32_in1k.py',

- pretrained='https://download.openmmlab.com/mmclassification/v0/resnet/resnet50_8xb32_in1k_20210831-ea4938fc.pth',

- device='cuda')

->>> inferencer('https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG')

+>>> image = 'https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'

+>>> config = 'configs/resnet/resnet50_8xb32_in1k.py'

+>>> checkpoint = 'https://download.openmmlab.com/mmclassification/v0/resnet/resnet50_8xb32_in1k_20210831-ea4938fc.pth'

+>>> inferencer = ImageClassificationInferencer(model=config, pretrained=checkpoint, device='cuda')

+>>> result = inferencer(image)[0]

+>>> print(result['pred_class'])

+sea snake

```

-你还可以在CUDA上通过批处理进行多个图像的推理:

+## 使用 Gradio 推理示例

-```{python}

->>> from mmpretrain import ImageClassificationInferencer

+我们还提供了一个基于 gradio 的推理示例,提供了 MMPretrain 所支持的所有任务的推理展示功能,你可以在 [projects/gradio_demo/launch.py](https://github.com/open-mmlab/mmpretrain/blob/main/projects/gradio_demo/launch.py) 找到这一例程。

->>> inferencer = ImageClassificationInferencer('resnet50_8xb32_in1k', device='cuda')

->>> imgs = ['https://github.com/open-mmlab/mmpretrain/raw/main/demo/demo.JPEG'] * 5

->>> results = inferencer(imgs, batch_size=2)

->>> print(results[1]['pred_class'])

-sea snake

-```

+请首先使用 `pip install -U gradio` 安装 `gradio` 库。

+

+这里是界面效果预览:

+

+ ## 从图像中提取特征

diff --git a/mmpretrain/apis/__init__.py b/mmpretrain/apis/__init__.py

index e82d897c9b3..6fbf443772a 100644

--- a/mmpretrain/apis/__init__.py

+++ b/mmpretrain/apis/__init__.py

@@ -1,12 +1,22 @@

# Copyright (c) OpenMMLab. All rights reserved.

+from .base import BaseInferencer

from .feature_extractor import FeatureExtractor

+from .image_caption import ImageCaptionInferencer

from .image_classification import ImageClassificationInferencer

from .image_retrieval import ImageRetrievalInferencer

from .model import (ModelHub, get_model, inference_model, init_model,

list_models)

+from .multimodal_retrieval import (ImageToTextRetrievalInferencer,

+ TextToImageRetrievalInferencer)

+from .nlvr import NLVRInferencer

+from .visual_grounding import VisualGroundingInferencer

+from .visual_question_answering import VisualQuestionAnsweringInferencer

__all__ = [

'init_model', 'inference_model', 'list_models', 'get_model', 'ModelHub',

'ImageClassificationInferencer', 'ImageRetrievalInferencer',

- 'FeatureExtractor'

+ 'FeatureExtractor', 'ImageCaptionInferencer',

+ 'TextToImageRetrievalInferencer', 'VisualGroundingInferencer',

+ 'VisualQuestionAnsweringInferencer', 'ImageToTextRetrievalInferencer',

+ 'BaseInferencer', 'NLVRInferencer'

]

diff --git a/mmpretrain/apis/base.py b/mmpretrain/apis/base.py

new file mode 100644

index 00000000000..ee4f44490c6

--- /dev/null

+++ b/mmpretrain/apis/base.py

@@ -0,0 +1,388 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+from abc import abstractmethod

+from typing import Callable, Iterable, List, Optional, Tuple, Union

+

+import numpy as np

+import torch

+from mmengine.config import Config

+from mmengine.dataset import default_collate

+from mmengine.fileio import get_file_backend

+from mmengine.model import BaseModel

+from mmengine.runner import load_checkpoint

+

+from mmpretrain.structures import DataSample

+from mmpretrain.utils import track

+from .model import get_model, list_models

+

+ModelType = Union[BaseModel, str, Config]

+InputType = Union[str, np.ndarray, list]

+

+

+class BaseInferencer:

+ """Base inferencer for various tasks.

+

+ The BaseInferencer provides the standard workflow for inference as follows:

+

+ 1. Preprocess the input data by :meth:`preprocess`.

+ 2. Forward the data to the model by :meth:`forward`. ``BaseInferencer``

+ assumes the model inherits from :class:`mmengine.models.BaseModel` and

+ will call `model.test_step` in :meth:`forward` by default.

+ 3. Visualize the results by :meth:`visualize`.

+ 4. Postprocess and return the results by :meth:`postprocess`.

+

+ When we call the subclasses inherited from BaseInferencer (not overriding

+ ``__call__``), the workflow will be executed in order.

+

+ All subclasses of BaseInferencer could define the following class

+ attributes for customization:

+

+ - ``preprocess_kwargs``: The keys of the kwargs that will be passed to

+ :meth:`preprocess`.

+ - ``forward_kwargs``: The keys of the kwargs that will be passed to

+ :meth:`forward`

+ - ``visualize_kwargs``: The keys of the kwargs that will be passed to

+ :meth:`visualize`

+ - ``postprocess_kwargs``: The keys of the kwargs that will be passed to

+ :meth:`postprocess`

+

+ All attributes mentioned above should be a ``set`` of keys (strings),

+ and each key should not be duplicated. Actually, :meth:`__call__` will

+ dispatch all the arguments to the corresponding methods according to the

+ ``xxx_kwargs`` mentioned above.

+

+ Subclasses inherited from ``BaseInferencer`` should implement

+ :meth:`_init_pipeline`, :meth:`visualize` and :meth:`postprocess`:

+

+ - _init_pipeline: Return a callable object to preprocess the input data.

+ - visualize: Visualize the results returned by :meth:`forward`.

+ - postprocess: Postprocess the results returned by :meth:`forward` and

+ :meth:`visualize`.

+

+ Args:

+ model (BaseModel | str | Config): A model name or a path to the config

+ file, or a :obj:`BaseModel` object. The model name can be found

+ by ``cls.list_models()`` and you can also query it in

+ :doc:`/modelzoo_statistics`.

+ pretrained (str, optional): Path to the checkpoint. If None, it will

+ try to find a pre-defined weight from the model you specified

+ (only work if the ``model`` is a model name). Defaults to None.

+ device (str | torch.device | None): Transfer the model to the target

+ device. Defaults to None.

+ device_map (str | dict | None): A map that specifies where each

+ submodule should go. It doesn't need to be refined to each

+ parameter/buffer name, once a given module name is inside, every

+ submodule of it will be sent to the same device. You can use

+ `device_map="auto"` to automatically generate the device map.

+ Defaults to None.

+ offload_folder (str | None): If the `device_map` contains any value

+ `"disk"`, the folder where we will offload weights.

+ **kwargs: Other keyword arguments to initialize the model (only work if

+ the ``model`` is a model name).

+ """

+

+ preprocess_kwargs: set = set()

+ forward_kwargs: set = set()

+ visualize_kwargs: set = set()

+ postprocess_kwargs: set = set()

+

+ def __init__(self,

+ model: ModelType,

+ pretrained: Union[bool, str] = True,

+ device: Union[str, torch.device, None] = None,

+ device_map=None,

+ offload_folder=None,

+ **kwargs) -> None:

+

+ if isinstance(model, BaseModel):

+ if isinstance(pretrained, str):

+ load_checkpoint(model, pretrained, map_location='cpu')

+ if device_map is not None:

+ from .utils import dispatch_model

+ model = dispatch_model(

+ model,

+ device_map=device_map,

+ offload_folder=offload_folder)

+ elif device is not None:

+ model.to(device)

+ else:

+ model = get_model(

+ model,

+ pretrained,

+ device=device,

+ device_map=device_map,

+ offload_folder=offload_folder,

+ **kwargs)

+

+ model.eval()

+

+ self.config = model._config

+ self.model = model

+ self.pipeline = self._init_pipeline(self.config)

+ self.visualizer = None

+

+ def __call__(

+ self,

+ inputs,

+ return_datasamples: bool = False,

+ batch_size: int = 1,

+ **kwargs,

+ ) -> dict:

+ """Call the inferencer.

+

+ Args:

+ inputs (InputsType): Inputs for the inferencer.

+ return_datasamples (bool): Whether to return results as

+ :obj:`BaseDataElement`. Defaults to False.

+ batch_size (int): Batch size. Defaults to 1.

+ **kwargs: Key words arguments passed to :meth:`preprocess`,

+ :meth:`forward`, :meth:`visualize` and :meth:`postprocess`.

+ Each key in kwargs should be in the corresponding set of

+ ``preprocess_kwargs``, ``forward_kwargs``, ``visualize_kwargs``

+ and ``postprocess_kwargs``.

+

+ Returns:

+ dict: Inference and visualization results.

+ """

+ (

+ preprocess_kwargs,

+ forward_kwargs,

+ visualize_kwargs,

+ postprocess_kwargs,

+ ) = self._dispatch_kwargs(**kwargs)

+

+ ori_inputs = self._inputs_to_list(inputs)

+ inputs = self.preprocess(

+ ori_inputs, batch_size=batch_size, **preprocess_kwargs)

+ preds = []

+ for data in track(inputs, 'Inference'):

+ preds.extend(self.forward(data, **forward_kwargs))

+ visualization = self.visualize(ori_inputs, preds, **visualize_kwargs)

+ results = self.postprocess(preds, visualization, return_datasamples,

+ **postprocess_kwargs)

+ return results

+

+ def _inputs_to_list(self, inputs: InputType) -> list:

+ """Preprocess the inputs to a list.

+

+ Cast the input data to a list of data.

+

+ - list or tuple: return inputs

+ - str:

+ - Directory path: return all files in the directory

+ - other cases: return a list containing the string. The string

+ could be a path to file, a url or other types of string according

+ to the task.

+ - other: return a list with one item.

+

+ Args:

+ inputs (str | array | list): Inputs for the inferencer.

+

+ Returns:

+ list: List of input for the :meth:`preprocess`.

+ """

+ if isinstance(inputs, str):

+ backend = get_file_backend(inputs)

+ if hasattr(backend, 'isdir') and backend.isdir(inputs):

+ # Backends like HttpsBackend do not implement `isdir`, so only

+ # those backends that implement `isdir` could accept the inputs

+ # as a directory

+ file_list = backend.list_dir_or_file(inputs, list_dir=False)

+ inputs = [

+ backend.join_path(inputs, file) for file in file_list

+ ]

+

+ if not isinstance(inputs, (list, tuple)):

+ inputs = [inputs]

+

+ return list(inputs)

+

+ def preprocess(self, inputs: InputType, batch_size: int = 1, **kwargs):

+ """Process the inputs into a model-feedable format.

+

+ Customize your preprocess by overriding this method. Preprocess should

+ return an iterable object, of which each item will be used as the

+ input of ``model.test_step``.

+

+ ``BaseInferencer.preprocess`` will return an iterable chunked data,

+ which will be used in __call__ like this:

+

+ .. code-block:: python

+

+ def __call__(self, inputs, batch_size=1, **kwargs):

+ chunked_data = self.preprocess(inputs, batch_size, **kwargs)

+ for batch in chunked_data:

+ preds = self.forward(batch, **kwargs)

+

+ Args:

+ inputs (InputsType): Inputs given by user.

+ batch_size (int): batch size. Defaults to 1.

+

+ Yields:

+ Any: Data processed by the ``pipeline`` and ``default_collate``.

+ """

+ chunked_data = self._get_chunk_data(

+ map(self.pipeline, inputs), batch_size)

+ yield from map(default_collate, chunked_data)

+

+ @torch.no_grad()

+ def forward(self, inputs: Union[dict, tuple], **kwargs):

+ """Feed the inputs to the model."""

+ return self.model.test_step(inputs)

+

+ def visualize(self,

+ inputs: list,

+ preds: List[DataSample],

+ show: bool = False,

+ **kwargs) -> List[np.ndarray]:

+ """Visualize predictions.

+

+ Customize your visualization by overriding this method. visualize

+ should return visualization results, which could be np.ndarray or any

+ other objects.

+

+ Args:

+ inputs (list): Inputs preprocessed by :meth:`_inputs_to_list`.

+ preds (Any): Predictions of the model.

+ show (bool): Whether to display the image in a popup window.

+ Defaults to False.

+

+ Returns:

+ List[np.ndarray]: Visualization results.

+ """

+ if show:

+ raise NotImplementedError(

+ f'The `visualize` method of {self.__class__.__name__} '

+ 'is not implemented.')

+

+ @abstractmethod

+ def postprocess(

+ self,

+ preds: List[DataSample],

+ visualization: List[np.ndarray],

+ return_datasample=False,

+ **kwargs,

+ ) -> dict:

+ """Process the predictions and visualization results from ``forward``

+ and ``visualize``.

+

+ This method should be responsible for the following tasks:

+

+ 1. Convert datasamples into a json-serializable dict if needed.

+ 2. Pack the predictions and visualization results and return them.

+ 3. Dump or log the predictions.

+

+ Customize your postprocess by overriding this method. Make sure

+ ``postprocess`` will return a dict with visualization results and

+ inference results.

+

+ Args:

+ preds (List[Dict]): Predictions of the model.

+ visualization (np.ndarray): Visualized predictions.

+ return_datasample (bool): Whether to return results as datasamples.

+ Defaults to False.

+

+ Returns:

+ dict: Inference and visualization results with key ``predictions``

+ and ``visualization``

+

+ - ``visualization (Any)``: Returned by :meth:`visualize`

+ - ``predictions`` (dict or DataSample): Returned by

+ :meth:`forward` and processed in :meth:`postprocess`.

+ If ``return_datasample=False``, it usually should be a

+ json-serializable dict containing only basic data elements such

+ as strings and numbers.

+ """

+

+ @abstractmethod

+ def _init_pipeline(self, cfg: Config) -> Callable:

+ """Initialize the test pipeline.

+

+ Return a pipeline to handle various input data, such as ``str``,

+ ``np.ndarray``. It is an abstract method in BaseInferencer, and should

+ be implemented in subclasses.

+

+ The returned pipeline will be used to process a single data.

+ It will be used in :meth:`preprocess` like this:

+

+ .. code-block:: python

+ def preprocess(self, inputs, batch_size, **kwargs):

+ ...

+ dataset = map(self.pipeline, dataset)

+ ...

+ """

+

+ def _get_chunk_data(self, inputs: Iterable, chunk_size: int):

+ """Get batch data from dataset.

+

+ Args:

+ inputs (Iterable): An iterable dataset.

+ chunk_size (int): Equivalent to batch size.

+

+ Yields:

+ list: batch data.

+ """

+ inputs_iter = iter(inputs)

+ while True:

+ try:

+ chunk_data = []

+ for _ in range(chunk_size):

+ processed_data = next(inputs_iter)

+ chunk_data.append(processed_data)

+ yield chunk_data

+ except StopIteration:

+ if chunk_data:

+ yield chunk_data

+ break

+

+ def _dispatch_kwargs(self, **kwargs) -> Tuple[dict, dict, dict, dict]:

+ """Dispatch kwargs to preprocess(), forward(), visualize() and

+ postprocess() according to the actual demands.

+

+ Returns:

+ Tuple[Dict, Dict, Dict, Dict]: kwargs passed to preprocess,

+ forward, visualize and postprocess respectively.

+ """

+ # Ensure each argument only matches one function

+ method_kwargs = self.preprocess_kwargs | self.forward_kwargs | \

+ self.visualize_kwargs | self.postprocess_kwargs

+

+ union_kwargs = method_kwargs | set(kwargs.keys())

+ if union_kwargs != method_kwargs:

+ unknown_kwargs = union_kwargs - method_kwargs

+ raise ValueError(

+ f'unknown argument {unknown_kwargs} for `preprocess`, '

+ '`forward`, `visualize` and `postprocess`')

+

+ preprocess_kwargs = {}

+ forward_kwargs = {}

+ visualize_kwargs = {}

+ postprocess_kwargs = {}

+

+ for key, value in kwargs.items():

+ if key in self.preprocess_kwargs:

+ preprocess_kwargs[key] = value

+ if key in self.forward_kwargs:

+ forward_kwargs[key] = value

+ if key in self.visualize_kwargs:

+ visualize_kwargs[key] = value

+ if key in self.postprocess_kwargs:

+ postprocess_kwargs[key] = value

+

+ return (

+ preprocess_kwargs,

+ forward_kwargs,

+ visualize_kwargs,

+ postprocess_kwargs,

+ )

+

+ @staticmethod

+ def list_models(pattern: Optional[str] = None):

+ """List models defined in metafile of corresponding packages.

+

+ Args:

+ pattern (str | None): A wildcard pattern to match model names.

+

+ Returns:

+ List[str]: a list of model names.

+ """

+ return list_models(pattern=pattern)

diff --git a/mmpretrain/apis/feature_extractor.py b/mmpretrain/apis/feature_extractor.py

index 513717fc89f..b7c52c2fcbc 100644

--- a/mmpretrain/apis/feature_extractor.py

+++ b/mmpretrain/apis/feature_extractor.py

@@ -1,60 +1,43 @@

# Copyright (c) OpenMMLab. All rights reserved.

from typing import Callable, List, Optional, Union

-import numpy as np

import torch

from mmcv.image import imread

from mmengine.config import Config

from mmengine.dataset import Compose, default_collate

-from mmengine.device import get_device

-from mmengine.infer import BaseInferencer

-from mmengine.model import BaseModel

-from mmengine.runner import load_checkpoint

from mmpretrain.registry import TRANSFORMS

-from .model import get_model, list_models

-

-ModelType = Union[BaseModel, str, Config]

-InputType = Union[str, np.ndarray, list]

+from .base import BaseInferencer, InputType

+from .model import list_models

class FeatureExtractor(BaseInferencer):

"""The inferencer for extract features.

Args:

- model (BaseModel | str | Config): A model name or a path to the confi

+ model (BaseModel | str | Config): A model name or a path to the config

file, or a :obj:`BaseModel` object. The model name can be found

- by ``FeatureExtractor.list_models()``.

- pretrained (bool | str): When use name to specify model, you can

- use ``True`` to load the pre-defined pretrained weights. And you

- can also use a string to specify the path or link of weights to

- load. Defaults to True.

- device (str, optional): Device to run inference. If None, use CPU or

- the device of the input model. Defaults to None.

- """

-

- def __init__(

- self,

- model: ModelType,

- pretrained: Union[bool, str] = True,

- device: Union[str, torch.device, None] = None,

- ) -> None:

- device = device or get_device()

-

- if isinstance(model, BaseModel):

- if isinstance(pretrained, str):

- load_checkpoint(model, pretrained, map_location='cpu')

- model = model.to(device)

- else:

- model = get_model(model, pretrained, device)

-

- model.eval()

-

- self.config = model.config

- self.model = model

- self.pipeline = self._init_pipeline(self.config)

- self.collate_fn = default_collate

- self.visualizer = None

+ by ``FeatureExtractor.list_models()`` and you can also query it in

+ :doc:`/modelzoo_statistics`.

+ pretrained (str, optional): Path to the checkpoint. If None, it will

+ try to find a pre-defined weight from the model you specified

+ (only work if the ``model`` is a model name). Defaults to None.

+ device (str, optional): Device to run inference. If None, the available

+ device will be automatically used. Defaults to None.

+ **kwargs: Other keyword arguments to initialize the model (only work if

+ the ``model`` is a model name).

+

+ Example:

+ >>> from mmpretrain import FeatureExtractor

+ >>> inferencer = FeatureExtractor('resnet50_8xb32_in1k', backbone=dict(out_indices=(0, 1, 2, 3)))

+ >>> feats = inferencer('demo/demo.JPEG', stage='backbone')[0]

+ >>> for feat in feats:

+ >>> print(feat.shape)

+ torch.Size([256, 56, 56])

+ torch.Size([512, 28, 28])

+ torch.Size([1024, 14, 14])

+ torch.Size([2048, 7, 7])

+ """ # noqa: E501

def __call__(self,

inputs: InputType,

@@ -122,7 +105,7 @@ def load_image(input_):

pipeline = Compose([load_image, self.pipeline])

chunked_data = self._get_chunk_data(map(pipeline, inputs), batch_size)

- yield from map(self.collate_fn, chunked_data)

+ yield from map(default_collate, chunked_data)

def visualize(self):

raise NotImplementedError(

diff --git a/mmpretrain/apis/image_caption.py b/mmpretrain/apis/image_caption.py

new file mode 100644

index 00000000000..aef21878112

--- /dev/null

+++ b/mmpretrain/apis/image_caption.py

@@ -0,0 +1,164 @@

+# Copyright (c) OpenMMLab. All rights reserved.

+from pathlib import Path

+from typing import Callable, List, Optional

+

+import numpy as np

+from mmcv.image import imread

+from mmengine.config import Config

+from mmengine.dataset import Compose, default_collate

+

+from mmpretrain.registry import TRANSFORMS

+from mmpretrain.structures import DataSample

+from .base import BaseInferencer, InputType

+from .model import list_models

+

+

+class ImageCaptionInferencer(BaseInferencer):

+ """The inferencer for image caption.

+

+ Args:

+ model (BaseModel | str | Config): A model name or a path to the config

+ file, or a :obj:`BaseModel` object. The model name can be found

+ by ``ImageCaptionInferencer.list_models()`` and you can also

+ query it in :doc:`/modelzoo_statistics`.

+ pretrained (str, optional): Path to the checkpoint. If None, it will

+ try to find a pre-defined weight from the model you specified

+ (only work if the ``model`` is a model name). Defaults to None.

+ device (str, optional): Device to run inference. If None, the available

+ device will be automatically used. Defaults to None.

+ **kwargs: Other keyword arguments to initialize the model (only work if

+ the ``model`` is a model name).

+

+ Example:

+ >>> from mmpretrain import ImageCaptionInferencer

+ >>> inferencer = ImageCaptionInferencer('blip-base_3rdparty_caption')

+ >>> inferencer('demo/cat-dog.png')[0]

+ {'pred_caption': 'a puppy and a cat sitting on a blanket'}

+ """ # noqa: E501

+

+ visualize_kwargs: set = {'resize', 'show', 'show_dir', 'wait_time'}

+

+ def __call__(self,

+ images: InputType,

+ return_datasamples: bool = False,

+ batch_size: int = 1,

+ **kwargs) -> dict:

+ """Call the inferencer.

+

+ Args:

+ images (str | array | list): The image path or array, or a list of

+ images.

+ return_datasamples (bool): Whether to return results as

+ :obj:`DataSample`. Defaults to False.

+ batch_size (int): Batch size. Defaults to 1.

+ resize (int, optional): Resize the short edge of the image to the

+ specified length before visualization. Defaults to None.

+ draw_score (bool): Whether to draw the prediction scores

+ of prediction categories. Defaults to True.

+ show (bool): Whether to display the visualization result in a

+ window. Defaults to False.

+ wait_time (float): The display time (s). Defaults to 0, which means

+ "forever".

+ show_dir (str, optional): If not None, save the visualization

+ results in the specified directory. Defaults to None.

+

+ Returns:

+ list: The inference results.

+ """

+ return super().__call__(images, return_datasamples, batch_size,

+ **kwargs)

+

+ def _init_pipeline(self, cfg: Config) -> Callable:

+ test_pipeline_cfg = cfg.test_dataloader.dataset.pipeline

+ if test_pipeline_cfg[0]['type'] == 'LoadImageFromFile':

+ # Image loading is finished in `self.preprocess`.

+ test_pipeline_cfg = test_pipeline_cfg[1:]

+ test_pipeline = Compose(

+ [TRANSFORMS.build(t) for t in test_pipeline_cfg])

+ return test_pipeline

+

+ def preprocess(self, inputs: List[InputType], batch_size: int = 1):

+

+ def load_image(input_):

+ img = imread(input_)

+ if img is None:

+ raise ValueError(f'Failed to read image {input_}.')

+ return dict(

+ img=img,

+ img_shape=img.shape[:2],

+ ori_shape=img.shape[:2],

+ )

+

+ pipeline = Compose([load_image, self.pipeline])

+

+ chunked_data = self._get_chunk_data(map(pipeline, inputs), batch_size)

+ yield from map(default_collate, chunked_data)

+

+ def visualize(self,

+ ori_inputs: List[InputType],

+ preds: List[DataSample],

+ show: bool = False,

+ wait_time: int = 0,

+ resize: Optional[int] = None,

+ show_dir=None):

+ if not show and show_dir is None:

+ return None

+

+ if self.visualizer is None:

+ from mmpretrain.visualization import UniversalVisualizer

+ self.visualizer = UniversalVisualizer()

+

+ visualization = []

+ for i, (input_, data_sample) in enumerate(zip(ori_inputs, preds)):

+ image = imread(input_)

+ if isinstance(input_, str):

+ # The image loaded from path is BGR format.

+ image = image[..., ::-1]

+ name = Path(input_).stem

+ else:

+ name = str(i)

+

+ if show_dir is not None:

+ show_dir = Path(show_dir)

+ show_dir.mkdir(exist_ok=True)

+ out_file = str((show_dir / name).with_suffix('.png'))

+ else:

+ out_file = None

+

+ self.visualizer.visualize_image_caption(

+ image,

+ data_sample,

+ resize=resize,

+ show=show,

+ wait_time=wait_time,

+ name=name,

+ out_file=out_file)

+ visualization.append(self.visualizer.get_image())

+ if show:

+ self.visualizer.close()

+ return visualization

+

+ def postprocess(self,

+ preds: List[DataSample],

+ visualization: List[np.ndarray],

+ return_datasamples=False) -> dict:

+ if return_datasamples:

+ return preds

+

+ results = []

+ for data_sample in preds:

+ results.append({'pred_caption': data_sample.get('pred_caption')})

+

+ return results

+

+ @staticmethod

+ def list_models(pattern: Optional[str] = None):

+ """List all available model names.

+

+ Args:

+ pattern (str | None): A wildcard pattern to match model names.

+

+ Returns:

+ List[str]: a list of model names.

+ """

+ return list_models(pattern=pattern, task='Image Caption')

diff --git a/mmpretrain/apis/image_classification.py b/mmpretrain/apis/image_classification.py

index e261a568467..081672614c3 100644

--- a/mmpretrain/apis/image_classification.py

+++ b/mmpretrain/apis/image_classification.py

@@ -7,32 +7,28 @@

from mmcv.image import imread

from mmengine.config import Config

from mmengine.dataset import Compose, default_collate

-from mmengine.device import get_device

-from mmengine.infer import BaseInferencer