
Getting Started with Gluu Flex using SUSE Rancher

Gluu Flex (“Flex”) is a cloud native digital identity platform which enables organisations to authenticate and authorize people and software through the use of open standards like OpenID Connect, OAuth and FIDO.

It is a downstream commercial distribution of the Linux Foundation Janssen Project software, plus two tools from Gluu: a web administration tool and a self-service web portal.

SUSE Rancher’s Helm-based deployment approach simplifies the deployment and configuration of Flex, enabling organisations to take advantage of Flex’s modular design to improve their security posture while simultaneously enabling just-in-time auto-scaling.

The key services of Flex include:

- Jans Auth Server: This component is the OAuth Authorization Server, the OpenID Connect Provider, and the UMA Authorization Server for person and software authentication. This service must be Internet-facing.

- Jans Fido: This component provides the server-side endpoints to enroll and validate devices that use FIDO. It provides both FIDO U2F (register, authenticate) and FIDO 2 (attestation, assertion) endpoints. This service must be Internet-facing.

- Jans SCIM: System for Cross-domain Identity Management (SCIM) is a JSON/REST API to manage user data. Use it to add, edit, and update user information. This service should not be Internet-facing.

- Jans Config API: The API to configure the Auth Server and other components is consolidated in this component. This service should not be Internet-facing.

- Gluu Casa: Casa is a self-service web portal where end users manage the authentication and authorization preferences for their account, including their MFA credentials.

- Gluu Admin UI: Web admin tool for ad hoc configuration.

In this Quickstart Guide, we will:

- Deploy Flex and add some users

- Enable two-factor authentication

- Configure the SUSE Rancher UI as an OpenID Connect Relying Party

- Protect content on an Apache web server with OpenID Connect.

This document is intended for DevOps engineers, site reliability engineers (SREs), platform engineers, software engineers, and developers who are responsible for managing and running stateful workloads in Kubernetes clusters.

In addition to the core services listed in the Introduction above, the SUSE Rancher deployment includes the following components:

- MySQL: Relational database used to store configuration, people, clients, sessions, and other data needed for Gluu Flex operation.

- Key Rotation: A cronjob that uses Cert Manager to rotate the Auth Server keys.

- Cert Manager: Manages the certificate lifecycle.

- Configuration job:

- ConfigMap:

- Secrets store:

- Persistence job:

Before you begin, make sure you have the following:

- SUSE Rancher installed, with an accessible UI

- A Kubernetes cluster running on SUSE Rancher with at least one worker node

- Sufficient RBAC permissions to deploy and manage applications in the cluster

- LinuxIO kernel modules on the worker nodes

- Docker running locally (Linux preferred)

- Essential tools and CLI utilities installed on your local workstation and available in your `$PATH`: `curl`, `kubectl`

- An entry in the `/etc/hosts` file of your local workstation to resolve the hostname of the Gluu Flex installation
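Before starting, you can confirm that the local tools used in this guide are available. The following is a minimal Python (3.9+) sketch using only the standard library; the `missing_tools` helper is our own illustration, and the tool list (`curl`, `kubectl`, `docker`) is taken from the prerequisites above, so adjust it to your environment.

```python
import shutil

# Local tools named in the prerequisites above; extend or trim as needed.
REQUIRED_TOOLS = ["curl", "kubectl", "docker"]


def missing_tools(tools: list[str]) -> list[str]:
    """Return the tools from `tools` that shutil.which cannot find on $PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Missing from $PATH:", ", ".join(missing))
    else:
        print("All required tools found on $PATH.")
```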

Summary of steps:

- Install MySQL:

  To install a quick setup with MySQL as the backend, either provide the connection parameters of a fresh MySQL setup or follow the instructions below for a test setup.

  - SUSE Rancher currently doesn't have a MySQL chart, so we will install it manually. Open a kubectl shell from the `>_` icon in the top-right navigation menu.
  - Run:

    ```bash
    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm repo update
    kubectl create ns gluu
    ```

  - Pass in a custom password for the database. Here we used `Test1234#`; the admin user is left as `root`. Notice that we are installing in the `gluu` namespace. Run:

    ```bash
    helm install my-release --set 'auth.rootPassword=Test1234#,auth.database=jans' bitnami/mysql -n gluu
    ```

  - After the installation succeeds, you should have an active MySQL StatefulSet in the Rancher UI, as shown in the screenshot below.

- Install Gluu Flex:

  - Once MySQL is up and running, head to `Apps & Marketplace` --> `Charts` and search for `Gluu`.
  - Click `Install` on the right side of the window.
  - Enter `gluu` as the `Namespace`, then click `Next` on the right side of the window.
  - On the `Edit Options` tab (the first one, highlighted), click `Persistence`.
  - Only change `SQL database host uri` to `my-release-mysql.gluu.svc.cluster.local`, `SQL database username` to `root`, and `SQL password` to the password you chose when you installed MySQL. For us, that is `Test1234#`.
  - Click the next section, labeled `NGINX`, and enable all the endpoints.
  - Enable Casa and the Admin UI: navigate to `Optional Services` and check the `Enable casa` and `boolean flag to enable admin UI` boxes.
  - You may also customize other settings for the Flex installation, in particular under `Optional Services`, where you can enable services such as ClientApi and Jackrabbit.
  - Click `Install` at the bottom right of the window.

NOTE: To enable Casa and the Admin UI after the first deployment, go to the SUSE Rancher Dashboard -> Apps -> Installed Apps -> gluu, click the three dots on the right, then Upgrade -> Optional Services, check the `Enable casa` and `boolean flag to enable admin UI` boxes, and click Update.
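The `SQL database host uri` value used above is the in-cluster DNS name of the MySQL Service created by the Helm release. The sketch below shows how that name is derived, assuming the Bitnami chart's default naming rule (standalone architecture, no `fullnameOverride` or `nameOverride`) and the default `cluster.local` cluster domain; the helper names are hypothetical.

```python
def bitnami_fullname(release: str, chart: str = "mysql") -> str:
    """Approximate the Bitnami "common.names.fullname" rule (assumed, no overrides).

    If the release name already contains the chart name, it is used as-is;
    otherwise the result is "<release>-<chart>". Either way the name is cut
    to 63 characters and a single trailing "-" is trimmed.
    """
    name = release if chart in release else f"{release}-{chart}"
    name = name[:63]
    return name[:-1] if name.endswith("-") else name


def mysql_service_host(release: str, namespace: str,
                       cluster_domain: str = "cluster.local") -> str:
    """Kubernetes Service DNS name: <service>.<namespace>.svc.<cluster-domain>."""
    return f"{bitnami_fullname(release)}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # Release name and namespace from the helm install command above.
    print(mysql_service_host("my-release", "gluu"))
```

If you choose a different release name or namespace, recompute the host this way rather than reusing the value from this guide.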

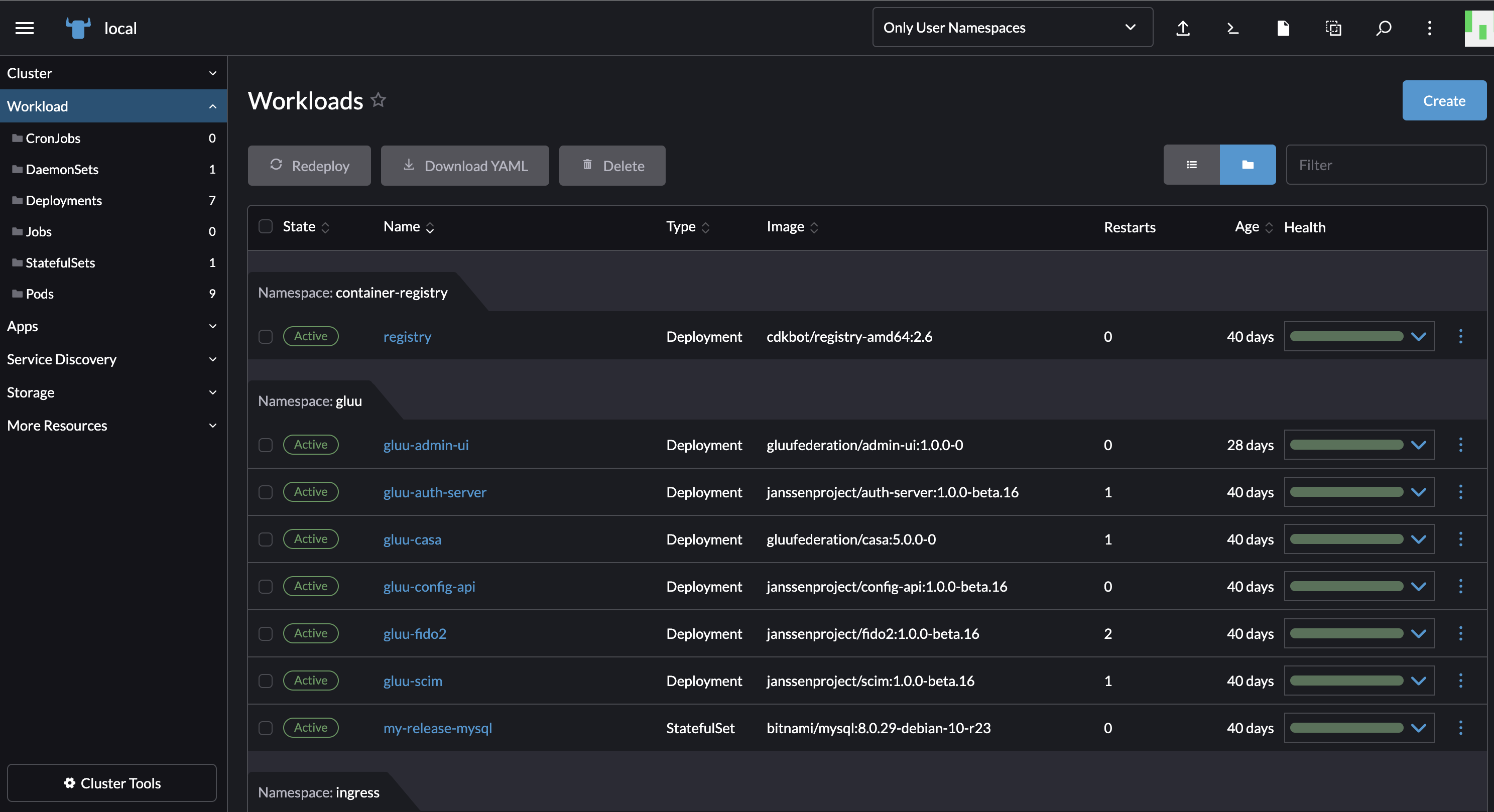

You can view the running deployments and services of the different Gluu Flex components (Casa, Admin UI, SCIM, Auth Server, etc.) in the SUSE Rancher UI. Go to `Workloads` to see the running pods, and go to `Service Discovery` to check the ingresses and services. All of them should be in a healthy, running state, as in the screenshot shown below.

- To access the setup from a browser or another VM, change the ingress class annotation from `kubernetes.io/ingress.class: nginx` to `kubernetes.io/ingress.class: public` for each component you want to access publicly in the browser:
  - Navigate through the SUSE Rancher UI to `Service Discovery` -> `Ingresses`.
  - Choose the ingress for the component whose target URL you want to expose, e.g. `gluu-nginx-ingress-fido2-configuration` for FIDO.
  - Click the three dots in the top-right corner.
  - Click `Edit Yaml`.
  - On line 6, change the `kubernetes.io/ingress.class` annotation value from `nginx` to `public`.
  - Click `Save`.

- Map the IP address of the SUSE VM to the domain chosen for Gluu in `/etc/hosts`. For example, if the domain you used in the setup is `demoexample.gluu.org`, add:

  ```text
  3.65.27.95 demoexample.gluu.org
  ```

- In the browser, try accessing some Gluu Flex endpoints, such as `https://demoexample.gluu.org/.well-known/fido2-configuration`, which we worked with in this example. You should get a response similar to the one below:

  ```json
  {
    "version": "1.1",
    "issuer": "https://demoexample.gluu.org",
    "attestation": {
      "base_path": "https://demoexample.gluu.org/jans-fido2/restv1/attestation",
      "options_enpoint": "https://demoexample.gluu.org/jans-fido2/restv1/attestation/options",
      "result_enpoint": "https://demoexample.gluu.org/jans-fido2/restv1/attestation/result"
    },
    "assertion": {
      "base_path": "https://demoexample.gluu.org/jans-fido2/restv1/assertion",
      "options_enpoint": "https://demoexample.gluu.org/jans-fido2/restv1/assertion/options",
      "result_enpoint": "https://demoexample.gluu.org/jans-fido2/restv1/assertion/result"
    }
  }
  ```

- You can also access these endpoints with `curl`, e.g.:

  ```bash
  curl -k https://demoexample.gluu.org/.well-known/openid-configuration
  ```

- You can do the same for the ingress of each component that you want to access publicly from the browser.
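If you prefer to script the endpoint check, the following Python sketch fetches a discovery document and checks that the FIDO2 endpoints point back at the configured issuer. The `check_fido2_config` helper and the checks it performs are our own assumptions about what is worth verifying, not a Gluu tool; `verify_tls=False` mirrors `curl -k` and should only be used against a test setup with a self-signed certificate.

```python
import json
import ssl
import urllib.request


def fetch_json(url: str, verify_tls: bool = True) -> dict:
    """GET a JSON document; verify_tls=False behaves like `curl -k` (test setups only)."""
    context = ssl.create_default_context()
    if not verify_tls:
        context.check_hostname = False  # must be disabled before CERT_NONE
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(url, context=context, timeout=10) as resp:
        return json.load(resp)


def check_fido2_config(doc: dict, issuer: str) -> list[str]:
    """Return human-readable problems; an empty list means the document looks consistent."""
    problems = []
    if doc.get("issuer") != issuer:
        problems.append(f"issuer is {doc.get('issuer')!r}, expected {issuer!r}")
    for section in ("attestation", "assertion"):
        endpoints = doc.get(section)
        if not isinstance(endpoints, dict):
            problems.append(f"missing {section!r} section")
            continue
        # Every URL in the section should live under the issuer's host.
        for key, url in endpoints.items():
            if not str(url).startswith(issuer + "/"):
                problems.append(f"{section}.{key} = {url!r} is not under {issuer}")
    return problems
```

For example, `check_fido2_config(fetch_json("https://demoexample.gluu.org/.well-known/fido2-configuration", verify_tls=False), "https://demoexample.gluu.org")` returns an empty list for a response like the sample above.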

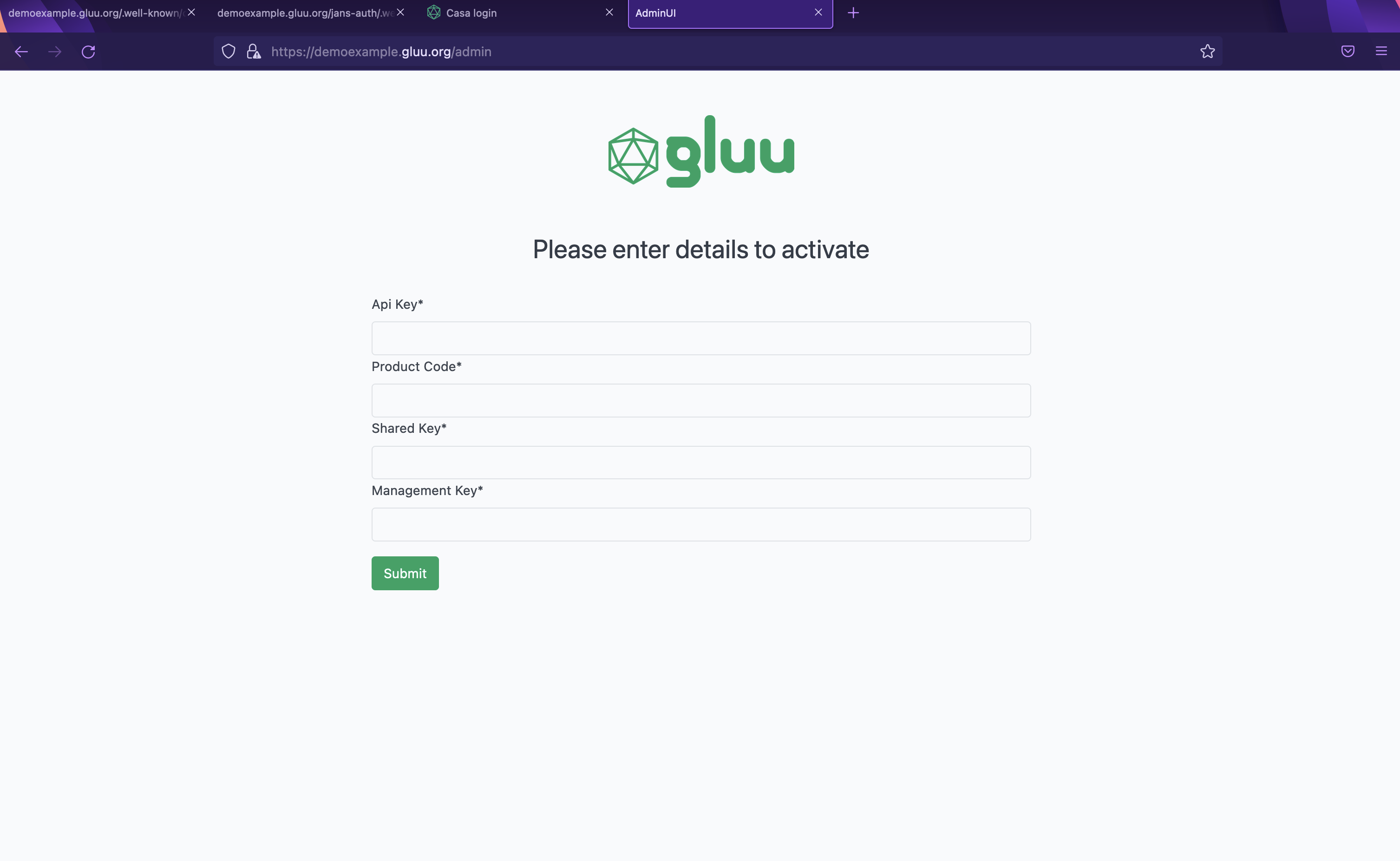

You need to provide four keys to log in to the Admin UI, as shown in the screenshot below:

- Api Key

- Product Code

- Shared Key

- Management Key

Log in to the Gluu Admin UI. To do this, you’ll have to create an SSH tunnel, because the Admin UI is not Internet-facing.

You should be able to log in as the user `admin`. Remember, the password was set during installation.

You should also add another test user, `foo`, via the Admin UI; use this user for testing Casa and 2FA.

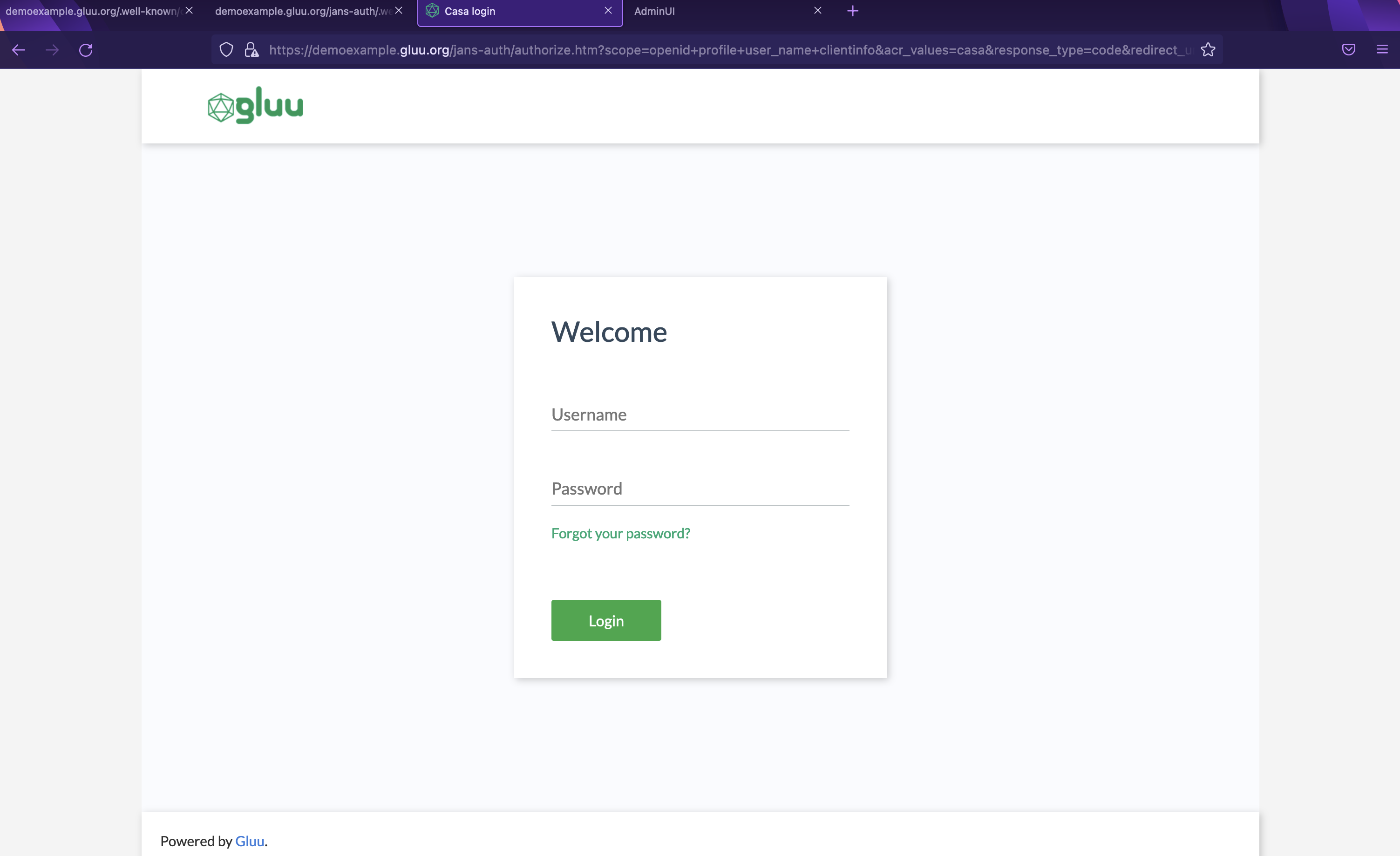

Although you have not enabled two-factor authentication yet, you should still be able to log in to Casa as the admin user. Point your browser to https://demoexample.gluu.org/casa and you should be welcomed by the Casa login page, as shown below.

In this part we are going to enable two standard authentication mechanisms: OTP and FIDO.

This is done through the Admin UI: first enable the corresponding scripts in the Auth Server (AS), then enable the OTP and FIDO components in the Casa admin UI.

After the scripts are enabled in the AS and the components are enabled in Casa, go back to Casa as an end user and register an OTP device (e.g., Google Authenticator) and a FIDO device.
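For context, authenticator apps such as Google Authenticator typically generate time-based one-time passwords (TOTP, RFC 6238), which are HOTP values (RFC 4226) computed over a time-step counter. The sketch below illustrates that algorithm with HMAC-SHA1, the common default; it is not Casa's implementation, and the 30-second step and 6 digits are assumptions that depend on how OTP is configured.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter, dynamically truncated."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte pick the 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, now: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with the counter set to floor(unix_time / step)."""
    if now is None:
        now = time.time()
    return hotp(key, int(now // step), digits)
```

Authenticator apps usually receive the key Base32-encoded (for example in a QR code), so decode it with `base64.b32decode` before calling `totp`. Because the server and the device derive the same counter from the clock, a device whose clock drifts will produce codes the server rejects.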

In this part we are going to configure the SUSE Rancher Admin UI to be an OpenID Connect relying party, and route to the Flex server for authentication.

- Create a client in Flex Admin UI with the correct response type and scopes

- Configure SUSE Rancher to use Gluu Flex for authentication
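To see what "the correct response type and scopes" means on the wire, the sketch below builds an OpenID Connect authorization code request similar to the one a relying party such as Rancher sends to Flex. It is an illustration only: the client ID is a placeholder, the authorization endpoint should be read from the `authorization_endpoint` field of `https://demoexample.gluu.org/.well-known/openid-configuration`, and the Rancher callback path `/verify-auth` is an assumption to confirm against your Rancher version's OIDC settings.

```python
import secrets
from urllib.parse import urlencode


def build_authorization_url(authorization_endpoint: str, client_id: str,
                            redirect_uri: str,
                            scopes: tuple = ("openid", "profile", "email")) -> tuple:
    """Return (url, state, nonce) for an authorization code flow request."""
    state = secrets.token_urlsafe(16)  # CSRF protection; the RP verifies it on callback
    nonce = secrets.token_urlsafe(16)  # binds the returned ID token to this request
    params = {
        "response_type": "code",       # authorization code flow
        "client_id": client_id,
        "redirect_uri": redirect_uri,  # must match a redirect URI registered for the client
        "scope": " ".join(scopes),     # "openid" is what makes this an OIDC request
        "state": state,
        "nonce": nonce,
    }
    return f"{authorization_endpoint}?{urlencode(params)}", state, nonce
```

When creating the client in the Flex Admin UI, the response type (`code`), the scopes, and the redirect URI in this request are the values that must be allowed for the client, or Flex will reject the request.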

In this part we are going to use Docker to configure an Apache web server locally, then install the mod_auth_openidc module and configure it accordingly.

Using local docker containers, our approach is to first register a client, then spin up two Apache containers, one serving static content (with server-side includes configured so we can display headers and environment information), and one acting as the OpenID Connect authenticating reverse proxy.

Register a new client on the Janssen server. You can do this in the Flex Admin UI or with the Janssen CLI, as in the steps below. There are two ways to register an OIDC client with the Janssen server: manual client registration and Dynamic Client Registration (DCR).

- Get the schema file using this command:

  ```bash
  /opt/jans/jans-cli/config-cli.py --schema /components/schemas/Client
  ```

- Add values for the required parameters and store this JSON in a text file. Take note of the following properties:

  ```text
  displayName: <name-of-choice>
  applicationType: web
  includeClaimsInIdToken [false]:
  Populate optional fields? y
  clientSecret: <secret-of-your-choice>
  subjectType: public
  tokenEndpointAuthMethod: client_secret_basic
  redirectUris: http://localhost:8111/oauth2callback
  scopes: email_,openid_,profile
  responseTypes: code
  grantTypes: authorization_code
  ```

- Use this text file as input to the command below to register your client:

  ```bash
  /opt/jans/jans-cli/config-cli.py --operation-id post-oauth-openid-clients --data <path>/schema-json-file.json
  ```

- After the client is successfully registered, the command returns data describing the newly registered client. Some of these values, such as `inum` and `clientSecret`, are required when we configure `mod_auth_openidc`, so keep them at hand; we will come back to them.
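Instead of typing the values by hand, you can generate the JSON file passed to `--data` with a short script. This sketch assumes the property names listed above map directly to the keys of the Janssen `Client` schema; compare its output with the schema file you fetched before using it, in particular the format expected for `scopes`. The function names are hypothetical.

```python
import json
from pathlib import Path


def build_client_payload(display_name: str, client_secret: str) -> dict:
    """Client registration body built from the values listed above (keys assumed)."""
    return {
        "displayName": display_name,
        "applicationType": "web",
        "includeClaimsInIdToken": False,
        "clientSecret": client_secret,
        "subjectType": "public",
        "tokenEndpointAuthMethod": "client_secret_basic",
        # Must match OIDCRedirectURI in the mod_auth_openidc configuration.
        "redirectUris": ["http://localhost:8111/oauth2callback"],
        # Assumption: some Janssen versions expect scope inums or DNs here
        # rather than scope names; check the schema you fetched.
        "scopes": ["openid", "email", "profile"],
        "responseTypes": ["code"],
        "grantTypes": ["authorization_code"],
    }


def write_client_file(path: str, payload: dict) -> None:
    """Write the payload as pretty-printed JSON for `config-cli.py --data`."""
    Path(path).write_text(json.dumps(payload, indent=2) + "\n", encoding="utf-8")
```

For example, `write_client_file("schema-json-file.json", build_client_payload("apache-rp", "<secret-of-your-choice>"))` produces a file you can pass to the registration command.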

An application Docker container will run locally and act as the protected resource (PR) / external application. The following files contain the code for this small application; we will create a local directory called `test` and add the files to it.

- First, create a project folder named `test` by running `mkdir test && cd test`, and add the following files with their content:

app.conf

```apache
ServerRoot "/usr/local/apache2"
Listen 80

LoadModule mpm_event_module modules/mod_mpm_event.so
LoadModule authz_core_module modules/mod_authz_core.so
LoadModule include_module modules/mod_include.so
LoadModule filter_module modules/mod_filter.so
LoadModule mime_module modules/mod_mime.so
LoadModule log_config_module modules/mod_log_config.so
LoadModule setenvif_module modules/mod_setenvif.so
LoadModule unixd_module modules/mod_unixd.so
LoadModule dir_module modules/mod_dir.so

User daemon
Group daemon

<Directory />
    AllowOverride none
    Require all denied
</Directory>

DocumentRoot "/usr/local/apache2/htdocs"
<Directory "/usr/local/apache2/htdocs">
    Options Indexes FollowSymLinks Includes
    AllowOverride None
    Require all granted
    SetEnvIf X-Remote-User "(.*)" REMOTE_USER=$0
    SetEnvIf X-Remote-User-Name "(.*)" REMOTE_USER_NAME=$0
    SetEnvIf X-Remote-User-Email "(.*)" REMOTE_USER_EMAIL=$0
</Directory>

DirectoryIndex index.html

<Files ".ht*">
    Require all denied
</Files>

ErrorLog /proc/self/fd/2
LogLevel warn
LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined
LogFormat "%h %l %u %t \"%r\" %>s %b" common
CustomLog /proc/self/fd/1 common

TypesConfig conf/mime.types
AddType application/x-compress .Z
AddType application/x-gzip .gz .tgz
AddType text/html .shtml
AddOutputFilter INCLUDES .shtml
```

user.shtml

```html
<html>
<head>
<title>Hello User</title>
</head>
<body>
<p>Hello <!--#echo var="REMOTE_USER_NAME" -->!</p>
<p>You authenticated as: <!--#echo var="REMOTE_USER" --></p>
<p>Your email address is: <!--#echo var="REMOTE_USER_EMAIL" --></p>
<p>Environment:</p>
<pre><!--#printenv --></pre>
</body>
</html>
```

index.html

```html
<html>
<head>
<title>Hello World</title>
</head>
<body>
<p>Hello world!</p>
</body>
</html>
```

Dockerfile

```dockerfile
FROM httpd:2.4.54@sha256:c9eba4494b9d856843b49eb897f9a583a0873b1c14c86d5ab77e5bdedd6ad05d
# "Created": "2022-06-08T18:45:46.260791323Z" , "Version":"2.4.54"

RUN apt-get update \
    && apt-get install -y --no-install-recommends wget ca-certificates libcjose0 libhiredis0.14 apache2-api-20120211 apache2-bin \
    && wget https://github.com/zmartzone/mod_auth_openidc/releases/download/v2.4.11.2/libapache2-mod-auth-openidc_2.4.11.2-1.buster+1_amd64.deb \
    && dpkg -i libapache2-mod-auth-openidc_2.4.11.2-1.buster+1_amd64.deb \
    && ln -s /usr/lib/apache2/modules/mod_auth_openidc.so /usr/local/apache2/modules/mod_auth_openidc.so \
    && rm -rf /var/log/dpkg.log /var/log/alternatives.log /var/log/apt \
    && touch /usr/local/apache2/conf/extra/secret.conf \
    && touch /usr/local/apache2/conf/extra/oidc.conf

RUN echo "\n\nLoadModule auth_openidc_module modules/mod_auth_openidc.so\n\nInclude conf/extra/secret.conf\nInclude conf/extra/oidc.conf\n" >> /usr/local/apache2/conf/httpd.conf
```

gluu.secret.conf

```apache
OIDCClientID <inum-as-received-in-client-registration-response>
OIDCCryptoPassphrase <crypto-passphrase-of-choice>
OIDCClientSecret <as-provided-in-client-registration-request>
OIDCResponseType code
OIDCScope "openid email profile"
OIDCProviderTokenEndpointAuth client_secret_basic
# Disables validation of the provider's TLS certificate; use only in test setups (e.g. self-signed certificates)
OIDCSSLValidateServer Off
# Must match the redirect URI registered for the client
OIDCRedirectURI http://localhost:8111/oauth2callback

<Location "/">
    Require valid-user
    AuthType openid-connect
</Location>
```

- Next, run an Apache container that plays the role of the application protected by the authenticating reverse proxy:

  ```bash
  docker run -dit -p 8110:80 \
    -v "$PWD/app.conf":/usr/local/apache2/conf/httpd.conf \
    -v "$PWD/index.html":/usr/local/apache2/htdocs/index.html \
    -v "$PWD/user.shtml":/usr/local/apache2/htdocs/user.shtml \
    --name apache-app httpd:2.4
  ```

  Note that this container uses the stock `httpd:2.4` image, running only the minimal set of modules loaded in `app.conf`. The image built from the Dockerfile above, which adds the mod_auth_openidc module for performing OpenID Connect authentication, is used later for the authenticating reverse proxy.

- Make a test curl call to ensure you get back some content, as shown in the screenshot below:

  ```bash
  curl http://localhost:8110/user.shtml
  ```
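Because `app.conf` copies the `X-Remote-User`, `X-Remote-User-Name`, and `X-Remote-User-Email` request headers into the variables that `user.shtml` prints, you can also check the application without the proxy by sending those headers yourself. The Python sketch below does that; the helper names and sample identity values are hypothetical. It also shows why the application port should only be reachable through the authenticating proxy: the app trusts whatever values arrive in those headers.

```python
import urllib.request

# Port published by `docker run -p 8110:80` for the apache-app container.
APP_URL = "http://localhost:8110/user.shtml"


def identity_headers(user: str, name: str, email: str) -> dict[str, str]:
    """Headers that app.conf maps to REMOTE_USER, REMOTE_USER_NAME and REMOTE_USER_EMAIL."""
    return {
        "X-Remote-User": user,
        "X-Remote-User-Name": name,
        "X-Remote-User-Email": email,
    }


def fetch_user_page(url: str, headers: dict[str, str]) -> str:
    """Request user.shtml with the given headers and return the rendered HTML."""
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, `print(fetch_user_page(APP_URL, identity_headers("foo", "Foo Test", "foo@example.com")))` should show the supplied name and email in the rendered page.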

We will use Apache again, but this time with a Docker image that has mod_auth_openidc installed and configured (built from the Dockerfile above). This proxy requires authentication, handles the authentication flow with redirects, and then forwards requests to the application.

To use it, you need to have registered a new OpenID Connect client on the Janssen server, which we did in the client registration step above.

- Add the following files to the `test` folder.

oidc.conf

```apache
# Unset to make sure clients can't control these
RequestHeader unset X-Remote-User
RequestHeader unset X-Remote-User-Name
RequestHeader unset X-Remote-User-Email

# If you want to see tons of logs for your experimentation
#LogLevel trace8

OIDCClientID <inum-as-received-in-client-registration-response>
OIDCProviderMetadataURL https://demoexample.gluu.org/.well-known/openid-configuration
OIDCRedirectURI http://localhost:8111/oauth2callback
OIDCScope "openid email profile"
# "sub" is the standard OpenID Connect subject identifier claim
OIDCRemoteUserClaim sub
OIDCPassClaimsAs environment

<Location />
    AuthType openid-connect
    Require valid-user
    ProxyPass http://app:80/
    ProxyPassReverse http://app:80/
    RequestHeader set X-Remote-User %{OIDC_CLAIM_sub}e
    RequestHeader set X-Remote-User-Name %{OIDC_CLAIM_name}e
    RequestHeader set X-Remote-User-Email %{OIDC_CLAIM_email}e
</Location>
```

proxy.conf

```apache
# This is the main Apache HTTP server configuration file. For documentation, see:
# http://httpd.apache.org/docs/2.4/
# http://httpd.apache.org/docs/2.4/mod/directives.html
#
# This is intended to be a hardened configuration, with minimal security surface area necessary
# to run mod_auth_openidc.

ServerRoot "/usr/local/apache2"
Listen 80

LoadModule mpm_event_module modules/mod_mpm_event.so
LoadModule authn_file_module modules/mod_authn_file.so
LoadModule authn_core_module modules/mod_authn_core.so
LoadModule authz_host_module modules/mod_authz_host.so
LoadModule authz_groupfile_module modules/mod_authz_groupfile.so
LoadModule authz_user_module modules/mod_authz_user.so
LoadModule authz_core_module modules/mod_authz_core.so
LoadModule access_compat_module modules/mod_access_compat.so
LoadModule auth_basic_module modules/mod_auth_basic.so
LoadModule reqtimeout_module modules/mod_reqtimeout.so
LoadModule filter_module modules/mod_filter.so
LoadModule mime_module modules/mod_mime.so
LoadModule log_config_module modules/mod_log_config.so
LoadModule env_module modules/mod_env.so
LoadModule headers_module modules/mod_headers.so
LoadModule setenvif_module modules/mod_setenvif.so
#LoadModule version_module modules/mod_version.so
LoadModule proxy_module modules/mod_proxy.so
LoadModule proxy_http_module modules/mod_proxy_http.so
LoadModule unixd_module modules/mod_unixd.so
#LoadModule status_module modules/mod_status.so
#LoadModule autoindex_module modules/mod_autoindex.so
LoadModule dir_module modules/mod_dir.so
LoadModule alias_module modules/mod_alias.so

<IfModule unixd_module>
    User daemon
    Group daemon
</IfModule>

# Placeholder address (the stock httpd.conf default); replace with your own
ServerAdmin you@example.com

<Directory />
    AllowOverride none
    Require all denied
</Directory>

DocumentRoot "/usr/local/apache2/htdocs"
<Directory "/usr/local/apache2/htdocs">
    Options Indexes FollowSymLinks
    AllowOverride None
    Require all granted
</Directory>

<IfModule dir_module>
    DirectoryIndex index.html
</IfModule>

<Directory /opt/apache/htdocs>
    Options None
    Require all denied
</Directory>

<Files ".ht*">
    Require all denied
</Files>

ErrorLog /proc/self/fd/2
LogLevel warn

<IfModule log_config_module>
    LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined
    LogFormat "%h %l %u %t \"%r\" %>s %b" common
    <IfModule logio_module>
        LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" %I %O" combinedio
    </IfModule>
    CustomLog /proc/self/fd/1 common
</IfModule>

<IfModule alias_module>
    ScriptAlias /cgi-bin/ "/usr/local/apache2/cgi-bin/"
</IfModule>

<Directory "/usr/local/apache2/cgi-bin">
    AllowOverride None
    Options None
    Require all granted
</Directory>

<IfModule headers_module>
    RequestHeader unset Proxy early
</IfModule>

<IfModule mime_module>
    TypesConfig conf/mime.types
    AddType application/x-compress .Z
    AddType application/x-gzip .gz .tgz
</IfModule>

<IfModule proxy_html_module>
    Include conf/extra/proxy-html.conf
</IfModule>

<IfModule ssl_module>
    SSLRandomSeed startup builtin
    SSLRandomSeed connect builtin
</IfModule>

TraceEnable off
ServerTokens Prod
ServerSignature Off

LoadModule auth_openidc_module modules/mod_auth_openidc.so
Include conf/extra/secret.conf
Include conf/extra/oidc.conf
```

- Copy the sample `gluu.secret.conf` file:

  ```bash
  cp gluu.secret.conf local.secret.conf
  ```

- Edit `local.secret.conf` to include the client ID (`inum`) and client secret of the client you registered earlier, and add a securely generated passphrase for the session keys. Then build the proxy image and start the proxy container. Because the container does not read your workstation's `/etc/hosts`, the command passes the same hostname mapping with `--add-host`:

  ```bash
  docker build --pull -t apache-oidc -f Dockerfile .
  docker run -dit -p 8111:80 \
    -v "$PWD/proxy.conf":/usr/local/apache2/conf/httpd.conf \
    -v "$PWD/local.secret.conf":/usr/local/apache2/conf/extra/secret.conf \
    -v "$PWD/oidc.conf":/usr/local/apache2/conf/extra/oidc.conf \
    --add-host demoexample.gluu.org:3.65.27.95 \
    --link apache-app:app \
    --name apache-proxy apache-oidc
  ```

- Now open a fresh web browser window in private (incognito) mode and go to this URL:

  ```text
  http://localhost:8111/user.shtml
  ```

- To check the proxy logs:

  ```bash
  docker logs -f apache-proxy
  ```

- To see the app logs:

  ```bash
  docker logs -f apache-app
  ```

- If you modify the configuration files, just restart the proxy:

  ```bash
  docker restart apache-proxy
  ```
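The editing step for `local.secret.conf` can also be scripted. The sketch below fills in the three placeholders from `gluu.secret.conf` and generates the passphrase with Python's `secrets` module; the helper names are hypothetical, and the client ID and secret must be the `inum` and `clientSecret` values from your own client registration.

```python
import secrets
from pathlib import Path

# Placeholders exactly as they appear in gluu.secret.conf.
CLIENT_ID_PLACEHOLDER = "<inum-as-received-in-client-registration-response>"
PASSPHRASE_PLACEHOLDER = "<crypto-passphrase-of-choice>"
CLIENT_SECRET_PLACEHOLDER = "<as-provided-in-client-registration-request>"


def render_secret_conf(template: str, client_id: str, client_secret: str) -> str:
    """Fill the placeholders; the passphrase is a fresh random 32-byte URL-safe token."""
    passphrase = secrets.token_urlsafe(32)
    return (template
            .replace(CLIENT_ID_PLACEHOLDER, client_id)
            .replace(PASSPHRASE_PLACEHOLDER, passphrase)
            .replace(CLIENT_SECRET_PLACEHOLDER, client_secret))


def write_local_secret_conf(client_id: str, client_secret: str,
                            src: str = "gluu.secret.conf",
                            dst: str = "local.secret.conf") -> None:
    """Read the sample file, fill it in, and write the copy mounted into the proxy."""
    template = Path(src).read_text(encoding="utf-8")
    Path(dst).write_text(render_secret_conf(template, client_id, client_secret),
                         encoding="utf-8")
```

`secrets.token_urlsafe` produces only letters, digits, `-` and `_`, so the passphrase can be written into the Apache configuration without quoting; if your client secret contains spaces, wrap it in double quotes in the resulting file.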
