This is a pytorch implementation of saliency-2016-cvpr

The prediction of salient areas in images has been traditionally addressed with hand-crafted features based on neuroscience principles. This paper, however, addresses the problem with a completely data-driven approach by training a convolutional neural network (convnet). The learning process is formulated as a minimization of a loss function that measures the Euclidean distance of the predicted saliency map with the provided ground truth. The recent publication of large datasets of saliency prediction has provided enough data to train end-to-end architectures that are both fast and accurate. Two designs are proposed: a shallow convnet trained from scratch, and another deeper solution whose first three layers are adapted from another network trained for classification. To the authors' knowledge, these are the first end-to-end CNNs trained and tested for the purpose of saliency prediction

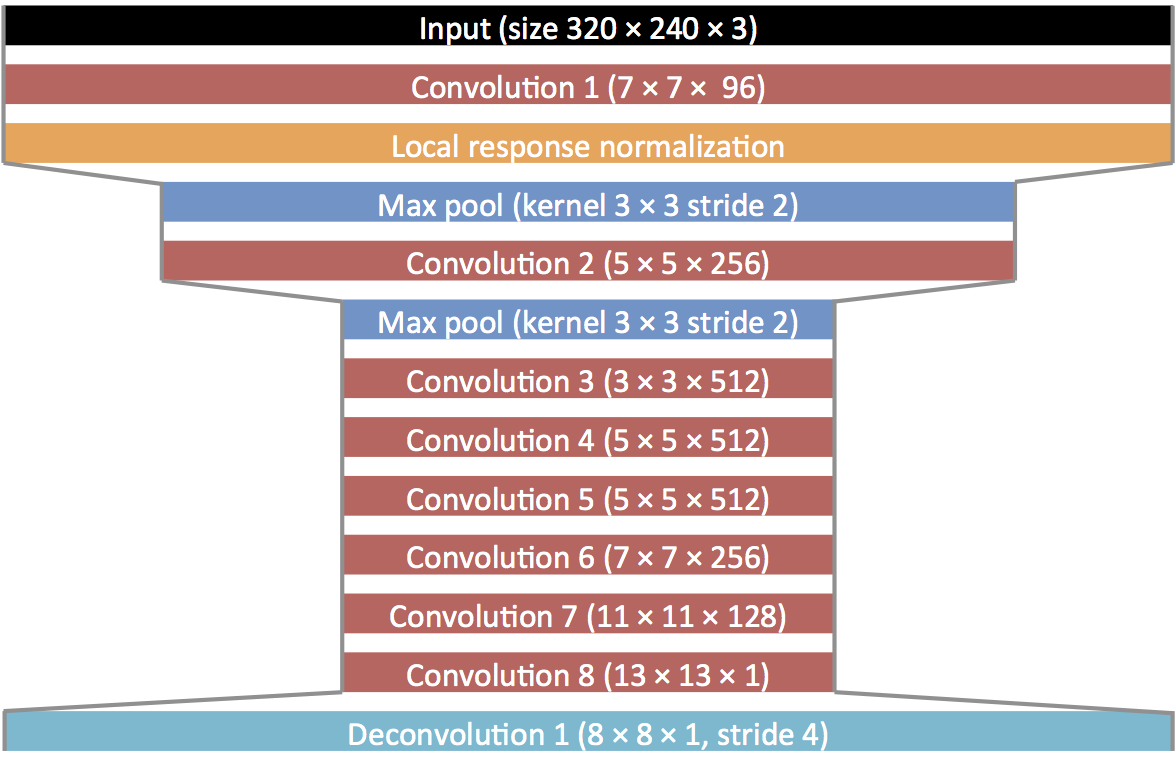

Two convnets are proposed in the paper, a shallow network and a deep one. here, I implemented the deep convnet with pytorch and reduced the channels of convolution layers by half to train the model faster. The main network architecture can be seen in the figure below.

Note that other results may not be as good as these. But probably you be able to generate more precise maps by increasing the number of channels to the main values. Spending more time tuning the model hyperparameters can also be effective.

Note that other results may not be as good as these. But probably you be able to generate more precise maps by increasing the number of channels to the main values. Spending more time tuning the model hyperparameters can also be effective.

CAT2000 dataset is used to train the model. You can use this link to download the dataset

http://saliency.mit.edu/trainSet.zip

This code draws lessons from:

https://github.com/Goutam-Kelam/Visual-Saliency/tree/master/Deep_Net

https://github.com/imatge-upc/saliency-2016-cvpr