diff --git a/.github/PULL_REQUEST_TEMPLATE.md b/.github/PULL_REQUEST_TEMPLATE.md

index 56cbd186..bb946ef2 100644

--- a/.github/PULL_REQUEST_TEMPLATE.md

+++ b/.github/PULL_REQUEST_TEMPLATE.md

@@ -45,4 +45,4 @@ Please describe the tests that you ran to verify your changes. Provide instructi

- [ ] My changes generate no new warnings

- [ ] I have added tests that prove my fix is effective or that my feature works

- [ ] New and existing unit tests pass locally with my changes

-- [ ] Any dependent changes have been merged and published in downstream modules

\ No newline at end of file

+- [ ] Any dependent changes have been merged and published in downstream modules

diff --git a/.gitignore b/.gitignore

index 26133bb2..fa94582a 100644

--- a/.gitignore

+++ b/.gitignore

@@ -104,7 +104,7 @@ ENV/

# Rope project settings

.ropeproject

-# DS Store

+# DS Store

.DS_Store

# for installation purposes

@@ -114,4 +114,4 @@ install-dev

/catboost_info/

# Data

-shapash/data/telco_customer_churn.csv

\ No newline at end of file

+shapash/data/telco_customer_churn.csv

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

new file mode 100644

index 00000000..29ae367f

--- /dev/null

+++ b/.pre-commit-config.yaml

@@ -0,0 +1,70 @@

+default_language_version:

+ python: python3

+repos:

+- repo: https://github.com/pre-commit/pre-commit-hooks

+ rev: v3.2.0

+ hooks:

+ - id: check-ast

+ - id: check-byte-order-marker

+ - id: check-case-conflict

+ - id: check-docstring-first

+ - id: check-executables-have-shebangs

+ - id: check-json

+ - id: check-yaml

+ exclude: ^chart/

+ - id: debug-statements

+ - id: end-of-file-fixer

+ exclude: ^(docs/|gdocs/)

+ - id: pretty-format-json

+ args: ['--autofix']

+ exclude: .ipynb

+ - id: trailing-whitespace

+ args: ['--markdown-linebreak-ext=md']

+ exclude: ^(docs/|gdocs/)

+ - id: mixed-line-ending

+ args: ['--fix=lf']

+ exclude: ^(docs/|gdocs/)

+ - id: check-added-large-files

+ args: ['--maxkb=500']

+ - id: no-commit-to-branch

+ args: ['--branch', 'master', '--branch', 'develop']

+- repo: https://github.com/psf/black

+ rev: 21.12b0

+ hooks:

+ - id: black

+ args: [--line-length=120]

+ additional_dependencies: ['click==8.0.4']

+#- repo: https://github.com/pre-commit/mirrors-mypy

+# rev: 'v0.931'

+# hooks:

+# - id: mypy

+# args: [--ignore-missing-imports, --disallow-untyped-defs, --show-error-codes, --no-site-packages]

+# files: src

+# - repo: https://github.com/PyCQA/flake8

+# rev: 6.0.0

+# hooks:

+# - id: flake8

+# exclude: ^tests/

+# args: ['--ignore=E501,D2,D3,D4,D104,D100,D106,D107,W503,D105,E203']

+# additional_dependencies: [ flake8-docstrings, "flake8-bugbear==22.8.23" ]

+- repo: https://github.com/pre-commit/mirrors-isort

+ rev: v5.4.2

+ hooks:

+ - id: isort

+ args: ["--profile", "black", "-l", "120"]

+- repo: https://github.com/asottile/pyupgrade

+ rev: v2.7.2

+ hooks:

+ - id: pyupgrade

+ args: [--py37-plus]

+- repo: https://github.com/asottile/blacken-docs

+ rev: v1.8.0

+ hooks:

+ - id: blacken-docs

+ additional_dependencies: [black==21.12b0]

+- repo: https://github.com/compilerla/conventional-pre-commit

+ rev: v2.1.1

+ hooks:

+ - id: conventional-pre-commit

+ stages: [commit-msg]

+ args: [] # optional: list of Conventional Commits types to allow e.g. [feat, fix, ci, chore, test]

diff --git a/.readthedocs.yml b/.readthedocs.yml

index f44b00d3..0c09bd5e 100644

--- a/.readthedocs.yml

+++ b/.readthedocs.yml

@@ -21,7 +21,7 @@ build:

os: ubuntu-20.04

tools:

python: "3.10"

-

+

# Optionally set the version of Python and requirements required to build your docs

python:

install:

diff --git a/CONTRIBUTING.md b/CONTRIBUTING.md

index 0e7bc96f..7a650ec8 100644

--- a/CONTRIBUTING.md

+++ b/CONTRIBUTING.md

@@ -58,7 +58,7 @@ To contribute to Shapash, you will need to create a personal branch.

```

git checkout -b feature/my-contribution-branch

```

-We recommand to use a convention of naming branch.

+We recommand to use a convention of naming branch.

- **feature/your_feature_name** if you are creating a feature

- **hotfix/your_bug_fix** if you are fixing a bug

@@ -70,7 +70,7 @@ Before committing your modifications, we have some recommendations :

```

pytest

```

-- Try to build Shapash

+- Try to build Shapash

```

python setup.py bdist_wheel

```

@@ -91,7 +91,7 @@ git commit -m ‘fixed a bug’

git push origin feature/my-contribution-branch

```

-Your branch is now available on your remote forked repository, with your changes.

+Your branch is now available on your remote forked repository, with your changes.

Next step is now to create a Pull Request so the Shapash Team can add your changes to the official repository.

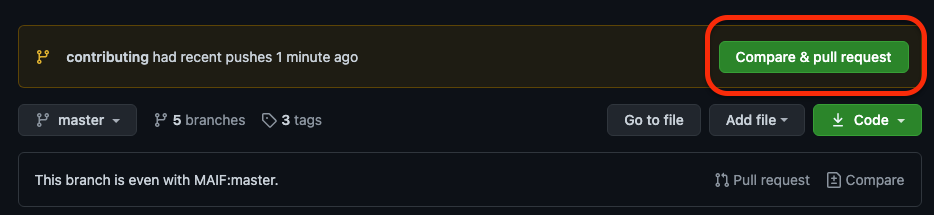

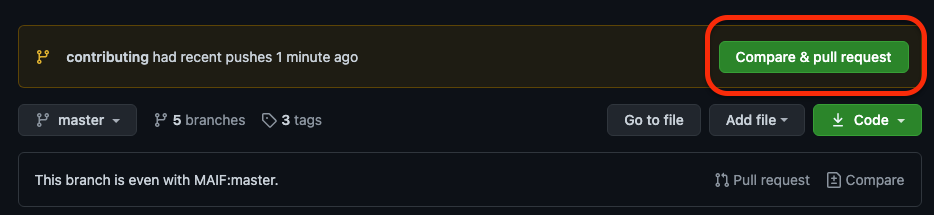

@@ -104,7 +104,7 @@ To create one, on the top of your forked repository, you will find a button "Com

-As you can see, you can select on the right side which branch of your forked repository you want to associate to the pull request.

+As you can see, you can select on the right side which branch of your forked repository you want to associate to the pull request.

On the left side, you will find the official Shapash repository.

@@ -130,4 +130,4 @@ Your pull request is now ready to be submitted. A member of the Shapash team wil

You have contributed to an Open source project, thank you and congratulations ! 🥳

-Show your contribution to Shapash in your curriculum, and share it on your social media. Be proud of yourself, you gave some code lines to the entire world !

\ No newline at end of file

+Show your contribution to Shapash in your curriculum, and share it on your social media. Be proud of yourself, you gave some code lines to the entire world !

diff --git a/LICENSE b/LICENSE

index 4947287f..f433b1a5 100644

--- a/LICENSE

+++ b/LICENSE

@@ -174,4 +174,4 @@

incurred by, or claims asserted against, such Contributor by reason

of your accepting any such warranty or additional liability.

- END OF TERMS AND CONDITIONS

\ No newline at end of file

+ END OF TERMS AND CONDITIONS

diff --git a/Makefile b/Makefile

index 720b6909..1f65e00e 100644

--- a/Makefile

+++ b/Makefile

@@ -84,4 +84,4 @@ dist: clean ## builds source and wheel package

ls -l dist

install: clean ## install the package to the active Python's site-packages

- python setup.py install

\ No newline at end of file

+ python setup.py install

diff --git a/README.md b/README.md

index 8a159b3a..aee9e717 100644

--- a/README.md

+++ b/README.md

@@ -38,7 +38,7 @@ With Shapash, you can generate a **Webapp** that simplifies the comprehension of

Additionally, Shapash contributes to data science auditing by **presenting valuable information** about any model and data **in a comprehensive report**.

-Shapash is suitable for Regression, Binary Classification, and Multiclass problems. It is **compatible with numerous models**, including Catboost, Xgboost, LightGBM, Sklearn Ensemble, Linear models, and SVM. For other models, solutions to integrate Shapash are available; more details can be found [here](#how_shapash_works).

+Shapash is suitable for Regression, Binary Classification and Multiclass problems. It is **compatible with numerous models**, including Catboost, Xgboost, LightGBM, Sklearn Ensemble, Linear models, and SVM. For other models, solutions to integrate Shapash are available; more details can be found [here](#how_shapash_works).

> [!NOTE]

> If you want to give us feedback : [Feedback form](https://framaforms.org/shapash-collecting-your-feedback-and-use-cases-1687456776)

@@ -72,9 +72,9 @@ Shapash is suitable for Regression, Binary Classification, and Multiclass proble

| 2.2.x | Dataset Filter

-As you can see, you can select on the right side which branch of your forked repository you want to associate to the pull request.

+As you can see, you can select on the right side which branch of your forked repository you want to associate to the pull request.

On the left side, you will find the official Shapash repository.

@@ -130,4 +130,4 @@ Your pull request is now ready to be submitted. A member of the Shapash team wil

You have contributed to an Open source project, thank you and congratulations ! 🥳

-Show your contribution to Shapash in your curriculum, and share it on your social media. Be proud of yourself, you gave some code lines to the entire world !

\ No newline at end of file

+Show your contribution to Shapash in your curriculum, and share it on your social media. Be proud of yourself, you gave some code lines to the entire world !

diff --git a/LICENSE b/LICENSE

index 4947287f..f433b1a5 100644

--- a/LICENSE

+++ b/LICENSE

@@ -174,4 +174,4 @@

incurred by, or claims asserted against, such Contributor by reason

of your accepting any such warranty or additional liability.

- END OF TERMS AND CONDITIONS

\ No newline at end of file

+ END OF TERMS AND CONDITIONS

diff --git a/Makefile b/Makefile

index 720b6909..1f65e00e 100644

--- a/Makefile

+++ b/Makefile

@@ -84,4 +84,4 @@ dist: clean ## builds source and wheel package

ls -l dist

install: clean ## install the package to the active Python's site-packages

- python setup.py install

\ No newline at end of file

+ python setup.py install

diff --git a/README.md b/README.md

index 8a159b3a..aee9e717 100644

--- a/README.md

+++ b/README.md

@@ -38,7 +38,7 @@ With Shapash, you can generate a **Webapp** that simplifies the comprehension of

Additionally, Shapash contributes to data science auditing by **presenting valuable information** about any model and data **in a comprehensive report**.

-Shapash is suitable for Regression, Binary Classification, and Multiclass problems. It is **compatible with numerous models**, including Catboost, Xgboost, LightGBM, Sklearn Ensemble, Linear models, and SVM. For other models, solutions to integrate Shapash are available; more details can be found [here](#how_shapash_works).

+Shapash is suitable for Regression, Binary Classification and Multiclass problems. It is **compatible with numerous models**, including Catboost, Xgboost, LightGBM, Sklearn Ensemble, Linear models, and SVM. For other models, solutions to integrate Shapash are available; more details can be found [here](#how_shapash_works).

> [!NOTE]

> If you want to give us feedback : [Feedback form](https://framaforms.org/shapash-collecting-your-feedback-and-use-cases-1687456776)

@@ -72,9 +72,9 @@ Shapash is suitable for Regression, Binary Classification, and Multiclass proble

| 2.2.x | Dataset Filter

| New tab in the webapp to filter data. And several improvements in the webapp: subtitles, labels, screen adjustments | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/tutorial01-Shapash-Overview-Launch-WebApp.ipynb)

| 2.0.x | Refactoring Shapash

](https://github.com/MAIF/shapash/blob/master/tutorial/tutorial01-Shapash-Overview-Launch-WebApp.ipynb)

| 2.0.x | Refactoring Shapash

| Refactoring attributes of compile methods and init. Refactoring implementation for new backends | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/explainer_and_backend/tuto-expl06-Shapash-custom-backend.ipynb)

| 1.7.x | Variabilize Colors

](https://github.com/MAIF/shapash/blob/master/tutorial/explainer_and_backend/tuto-expl06-Shapash-custom-backend.ipynb)

| 1.7.x | Variabilize Colors

| Giving possibility to have your own colour palette for outputs adapted to your design | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/common/tuto-common02-colors.ipynb)

-| 1.6.x | Explainability Quality Metrics

](https://github.com/MAIF/shapash/blob/master/tutorial/common/tuto-common02-colors.ipynb)

-| 1.6.x | Explainability Quality Metrics

[Article](https://towardsdatascience.com/building-confidence-on-explainability-methods-66b9ee575514) | To help increase confidence in explainability methods, you can evaluate the relevance of your explainability using 3 metrics: **Stability**, **Consistency** and **Compacity** | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/explainability_quality/tuto-quality01-Builing-confidence-explainability.ipynb)

-| 1.4.x | Groups of features

](https://github.com/MAIF/shapash/blob/master/tutorial/explainability_quality/tuto-quality01-Builing-confidence-explainability.ipynb)

-| 1.4.x | Groups of features

[Demo](https://shapash-demo2.ossbymaif.fr/) | You can now regroup features that share common properties together.

This option can be useful if your model has a lot of features. | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/common/tuto-common01-groups_of_features.ipynb) |

-| 1.3.x | Shapash Report

](https://github.com/MAIF/shapash/blob/master/tutorial/common/tuto-common01-groups_of_features.ipynb) |

-| 1.3.x | Shapash Report

[Demo](https://shapash.readthedocs.io/en/latest/report.html) | A standalone HTML report that constitutes a basis of an audit document. | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/generate_report/tuto-shapash-report01.ipynb) |

+| 1.6.x | Explainability Quality Metrics

](https://github.com/MAIF/shapash/blob/master/tutorial/generate_report/tuto-shapash-report01.ipynb) |

+| 1.6.x | Explainability Quality Metrics

[Article](https://towardsdatascience.com/building-confidence-on-explainability-methods-66b9ee575514) | To help increase confidence in explainability methods, you can evaluate the relevance of your explainability using 3 metrics: **Stability**, **Consistency** and **Compacity** | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/explainability_quality/tuto-quality01-Builing-confidence-explainability.ipynb)

+| 1.4.x | Groups of features

](https://github.com/MAIF/shapash/blob/master/tutorial/explainability_quality/tuto-quality01-Builing-confidence-explainability.ipynb)

+| 1.4.x | Groups of features

[Demo](https://shapash-demo2.ossbymaif.fr/) | You can now regroup features that share common properties together.

This option can be useful if your model has a lot of features. | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/common/tuto-common01-groups_of_features.ipynb) |

+| 1.3.x | Shapash Report

](https://github.com/MAIF/shapash/blob/master/tutorial/common/tuto-common01-groups_of_features.ipynb) |

+| 1.3.x | Shapash Report

[Demo](https://shapash.readthedocs.io/en/latest/report.html) | A standalone HTML report that constitutes a basis of an audit document. | [ ](https://github.com/MAIF/shapash/blob/master/tutorial/generate_report/tuto-shapash-report01.ipynb) |

## 🔥 Features

@@ -83,19 +83,19 @@ Shapash is suitable for Regression, Binary Classification, and Multiclass proble

](https://github.com/MAIF/shapash/blob/master/tutorial/generate_report/tuto-shapash-report01.ipynb) |

## 🔥 Features

@@ -83,19 +83,19 @@ Shapash is suitable for Regression, Binary Classification, and Multiclass proble

-

-  +

+

-

-  +

+

-

-  +

+

@@ -145,13 +145,13 @@ Shapash can use category-encoders object, sklearn ColumnTransformer or simply fe

Shapash is intended to work with Python versions 3.8 to 3.11. Installation can be done with pip:

-```python

+```bash

pip install shapash

```

In order to generate the Shapash Report some extra requirements are needed.

You can install these using the following command :

-```python

+```bash

pip install shapash[report]

```

@@ -167,24 +167,25 @@ The 4 steps to display results:

```python

from shapash import SmartExplainer

+

xpl = SmartExplainer(

- model=regressor,

- features_dict=house_dict, # Optional parameter

- preprocessing=encoder, # Optional: compile step can use inverse_transform method

- postprocessing=postprocess, # Optional: see tutorial postprocessing

+ model=regressor,

+ features_dict=house_dict, # Optional parameter

+ preprocessing=encoder, # Optional: compile step can use inverse_transform method

+ postprocessing=postprocess, # Optional: see tutorial postprocessing

)

```

- Step 2: Compile Dataset, ...

> There 1 mandatory parameter in compile method: Dataset

-

+

```python

xpl.compile(

- x=xtest,

- y_pred=y_pred, # Optional: for your own prediction (by default: model.predict)

- y_target=yTest, # Optional: allows to display True Values vs Predicted Values

- additional_data=xadditional, # Optional: additional dataset of features for Webapp

- additional_features_dict=features_dict_additional, # Optional: dict additional data

+ x=xtest,

+ y_pred=y_pred, # Optional: for your own prediction (by default: model.predict)

+ y_target=yTest, # Optional: allows to display True Values vs Predicted Values

+ additional_data=xadditional, # Optional: additional dataset of features for Webapp

+ additional_features_dict=features_dict_additional, # Optional: dict additional data

)

```

@@ -193,7 +194,7 @@ xpl.compile(

```python

app = xpl.run_app()

-```

+```

[Live Demo Shapash-Monitor](https://shapash-demo.ossbymaif.fr/)

@@ -203,15 +204,15 @@ app = xpl.run_app()

```python

xpl.generate_report(

- output_file='path/to/output/report.html',

- project_info_file='path/to/project_info.yml',

+ output_file="path/to/output/report.html",

+ project_info_file="path/to/project_info.yml",

x_train=xtrain,

y_train=ytrain,

y_test=ytest,

title_story="House prices report",

title_description="""This document is a data science report of the kaggle house prices tutorial project.

It was generated using the Shapash library.""",

- metrics=[{'name': 'MSE', 'path': 'sklearn.metrics.mean_squared_error'}]

+ metrics=[{"name": "MSE", "path": "sklearn.metrics.mean_squared_error"}],

)

```

@@ -220,9 +221,9 @@ xpl.generate_report(

- Step 5: From training to deployment : SmartPredictor Object

> Shapash provides a SmartPredictor object to deploy the summary of local explanation for the operational needs.

It is an object dedicated to deployment, lighter than SmartExplainer with additional consistency checks.

- SmartPredictor can be used with an API or in batch mode. It provides predictions, detailed or summarized local

+ SmartPredictor can be used with an API or in batch mode. It provides predictions, detailed or summarized local

explainability using appropriate wording.

-

+

```python

predictor = xpl.to_smartpredictor()

```

diff --git a/data/house_prices_labels.json b/data/house_prices_labels.json

index cda51b24..1f3e13da 100644

--- a/data/house_prices_labels.json

+++ b/data/house_prices_labels.json

@@ -1,74 +1,74 @@

{

- "MSSubClass": "Building Class",

- "MSZoning": "General zoning classification",

- "LotArea": "Lot size square feet",

- "Street": "Type of road access",

- "LotShape": "General shape of property",

- "LandContour": "Flatness of the property",

- "Utilities": "Type of utilities available",

- "LotConfig": "Lot configuration",

- "LandSlope": "Slope of property",

- "Neighborhood": "Physical locations within Ames city limits",

- "Condition1": "Proximity to various conditions",

- "Condition2": "Proximity to other various conditions",

- "BldgType": "Type of dwelling",

- "HouseStyle": "Style of dwelling",

- "OverallQual": "Overall material and finish of the house",

- "OverallCond": "Overall condition of the house",

- "YearBuilt": "Original construction date",

- "YearRemodAdd": "Remodel date",

- "RoofStyle": "Type of roof",

- "RoofMatl": "Roof material",

- "Exterior1st": "Exterior covering on house",

- "Exterior2nd": "Other exterior covering on house",

- "MasVnrType": "Masonry veneer type",

- "MasVnrArea": "Masonry veneer area in square feet",

- "ExterQual": "Exterior materials' quality",

- "ExterCond": "Exterior materials' condition",

- "Foundation": "Type of foundation",

- "BsmtQual": "Height of the basement",

- "BsmtCond": "General condition of the basement",

- "BsmtExposure": "Refers to walkout or garden level walls",

- "BsmtFinType1": "Rating of basement finished area",

- "BsmtFinSF1": "Type 1 finished square feet",

- "BsmtFinType2": "Rating of basement finished area (if present)",

- "BsmtFinSF2": "Type 2 finished square feet",

- "BsmtUnfSF": "Unfinished square feet of basement area",

- "TotalBsmtSF": "Total square feet of basement area",

- "Heating": "Type of heating",

- "HeatingQC": "Heating quality and condition",

- "CentralAir": "Central air conditioning",

- "Electrical": "Electrical system",

- "1stFlrSF": "First Floor square feet",

- "2ndFlrSF": "Second floor square feet",

- "LowQualFinSF": "Low quality finished square feet",

- "GrLivArea": "Ground living area square feet",

- "BsmtFullBath": "Basement full bathrooms",

- "BsmtHalfBath": "Basement half bathrooms",

- "FullBath": "Full bathrooms above grade",

- "HalfBath": "Half baths above grade",

- "BedroomAbvGr": "Bedrooms above grade",

- "KitchenAbvGr": "Kitchens above grade",

- "KitchenQual": "Kitchen quality",

- "TotRmsAbvGrd": "Total rooms above grade",

- "Functional": "Home functionality",

- "Fireplaces": "Number of fireplaces",

- "GarageType": "Garage location",

- "GarageYrBlt": "Year garage was built",

- "GarageFinish": "Interior finish of the garage?",

- "GarageArea": "Size of garage in square feet",

- "GarageQual": "Garage quality",

- "GarageCond": "Garage condition",

- "PavedDrive": "Paved driveway",

- "WoodDeckSF": "Wood deck area in square feet",

- "OpenPorchSF": "Open porch area in square feet",

- "EnclosedPorch": "Enclosed porch area in square feet",

- "3SsnPorch": "Three season porch area in square feet",

- "ScreenPorch": "Screen porch area in square feet",

- "PoolArea": "Pool area in square feet",

- "MiscVal": "$Value of miscellaneous feature",

- "MoSold": "Month Sold",

- "YrSold": "Year Sold",

- "SaleType": "Type of sale",

- "SaleCondition": "Condition of sale"

-}

\ No newline at end of file

+ "1stFlrSF": "First Floor square feet",

+ "2ndFlrSF": "Second floor square feet",

+ "3SsnPorch": "Three season porch area in square feet",

+ "BedroomAbvGr": "Bedrooms above grade",

+ "BldgType": "Type of dwelling",

+ "BsmtCond": "General condition of the basement",

+ "BsmtExposure": "Refers to walkout or garden level walls",

+ "BsmtFinSF1": "Type 1 finished square feet",

+ "BsmtFinSF2": "Type 2 finished square feet",

+ "BsmtFinType1": "Rating of basement finished area",

+ "BsmtFinType2": "Rating of basement finished area (if present)",

+ "BsmtFullBath": "Basement full bathrooms",

+ "BsmtHalfBath": "Basement half bathrooms",

+ "BsmtQual": "Height of the basement",

+ "BsmtUnfSF": "Unfinished square feet of basement area",

+ "CentralAir": "Central air conditioning",

+ "Condition1": "Proximity to various conditions",

+ "Condition2": "Proximity to other various conditions",

+ "Electrical": "Electrical system",

+ "EnclosedPorch": "Enclosed porch area in square feet",

+ "ExterCond": "Exterior materials' condition",

+ "ExterQual": "Exterior materials' quality",

+ "Exterior1st": "Exterior covering on house",

+ "Exterior2nd": "Other exterior covering on house",

+ "Fireplaces": "Number of fireplaces",

+ "Foundation": "Type of foundation",

+ "FullBath": "Full bathrooms above grade",

+ "Functional": "Home functionality",

+ "GarageArea": "Size of garage in square feet",

+ "GarageCond": "Garage condition",

+ "GarageFinish": "Interior finish of the garage?",

+ "GarageQual": "Garage quality",

+ "GarageType": "Garage location",

+ "GarageYrBlt": "Year garage was built",

+ "GrLivArea": "Ground living area square feet",

+ "HalfBath": "Half baths above grade",

+ "Heating": "Type of heating",

+ "HeatingQC": "Heating quality and condition",

+ "HouseStyle": "Style of dwelling",

+ "KitchenAbvGr": "Kitchens above grade",

+ "KitchenQual": "Kitchen quality",

+ "LandContour": "Flatness of the property",

+ "LandSlope": "Slope of property",

+ "LotArea": "Lot size square feet",

+ "LotConfig": "Lot configuration",

+ "LotShape": "General shape of property",

+ "LowQualFinSF": "Low quality finished square feet",

+ "MSSubClass": "Building Class",

+ "MSZoning": "General zoning classification",

+ "MasVnrArea": "Masonry veneer area in square feet",

+ "MasVnrType": "Masonry veneer type",

+ "MiscVal": "$Value of miscellaneous feature",

+ "MoSold": "Month Sold",

+ "Neighborhood": "Physical locations within Ames city limits",

+ "OpenPorchSF": "Open porch area in square feet",

+ "OverallCond": "Overall condition of the house",

+ "OverallQual": "Overall material and finish of the house",

+ "PavedDrive": "Paved driveway",

+ "PoolArea": "Pool area in square feet",

+ "RoofMatl": "Roof material",

+ "RoofStyle": "Type of roof",

+ "SaleCondition": "Condition of sale",

+ "SaleType": "Type of sale",

+ "ScreenPorch": "Screen porch area in square feet",

+ "Street": "Type of road access",

+ "TotRmsAbvGrd": "Total rooms above grade",

+ "TotalBsmtSF": "Total square feet of basement area",

+ "Utilities": "Type of utilities available",

+ "WoodDeckSF": "Wood deck area in square feet",

+ "YearBuilt": "Original construction date",

+ "YearRemodAdd": "Remodel date",

+ "YrSold": "Year Sold"

+}

diff --git a/data/titaniclabels.json b/data/titaniclabels.json

index 7a0c8e1e..7aff00fd 100644

--- a/data/titaniclabels.json

+++ b/data/titaniclabels.json

@@ -1 +1,13 @@

-{"PassengerID": "PassengerID", "Survival": "Has survived ?", "Pclass": "Ticket class", "Name": "Name, First name", "Sex": "Sex", "Age": "Age", "SibSp": "Relatives such as brother or wife", "Parch": "Relatives like children or parents", "Fare": "Passenger fare", "Embarked": "Port of embarkation", "Title": "Title of passenger"}

\ No newline at end of file

+{

+ "Age": "Age",

+ "Embarked": "Port of embarkation",

+ "Fare": "Passenger fare",

+ "Name": "Name, First name",

+ "Parch": "Relatives like children or parents",

+ "PassengerID": "PassengerID",

+ "Pclass": "Ticket class",

+ "Sex": "Sex",

+ "SibSp": "Relatives such as brother or wife",

+ "Survival": "Has survived ?",

+ "Title": "Title of passenger"

+}

diff --git a/docs/conf.py b/docs/conf.py

index b0f2c98c..6dfb8a60 100644

--- a/docs/conf.py

+++ b/docs/conf.py

@@ -12,15 +12,16 @@

#

import os

import sys

-sys.path.insert(0, '..')

+

+sys.path.insert(0, "..")

import shapash

# -- Project information -----------------------------------------------------

-project = 'Shapash'

-copyright = '2020, Maif'

-author = 'Maif'

+project = "Shapash"

+copyright = "2020, Maif"

+author = "Maif"

# The short X.Y version

version = shapash.__version__

@@ -35,30 +36,30 @@

# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

# ones.

extensions = [

- 'sphinx.ext.autodoc',

- 'sphinx.ext.viewcode',

- 'sphinx.ext.todo',

- 'sphinx.ext.napoleon',

- 'nbsphinx',

+ "sphinx.ext.autodoc",

+ "sphinx.ext.viewcode",

+ "sphinx.ext.todo",

+ "sphinx.ext.napoleon",

+ "nbsphinx",

]

-nbsphinx_execute = 'never'

-master_doc = 'index'

+nbsphinx_execute = "never"

+master_doc = "index"

# Add any paths that contain templates here, relative to this directory.

-templates_path = ['_templates']

+templates_path = ["_templates"]

# The language for content autogenerated by Sphinx. Refer to documentation

# for a list of supported languages.

#

# This is also used if you do content translation via gettext catalogs.

# Usually you set "language" from the command line for these cases.

-language = 'en'

+language = "en"

# List of patterns, relative to source directory, that match files and

# directories to ignore when looking for source files.

# This pattern also affects html_static_path and html_extra_path.

-exclude_patterns = ['_build', 'Thumbs.db', '.DS_Store']

+exclude_patterns = ["_build", "Thumbs.db", ".DS_Store"]

# -- Options for HTML output -------------------------------------------------

@@ -66,41 +67,35 @@

# The theme to use for HTML and HTML Help pages. See the documentation for

# a list of builtin themes.

#

-html_theme = 'sphinx_material'

-#html_logo = './assets/images/svg/shapash-github.svg'

+html_theme = "sphinx_material"

+# html_logo = './assets/images/svg/shapash-github.svg'

# Material theme options (see theme.conf for more information)

html_theme_options = {

-

# Set the name of the project to appear in the navigation.

- 'nav_title': 'Shapash',

+ "nav_title": "Shapash",

# Set the color and the accent color

- 'color_primary': 'amber',

- 'color_accent': 'deep-orange',

-

+ "color_primary": "amber",

+ "color_accent": "deep-orange",

# Set the repo location to get a badge with stats

- 'repo_url': 'https://github.com/MAIF/shapash',

- 'repo_name': 'shapash',

-

+ "repo_url": "https://github.com/MAIF/shapash",

+ "repo_name": "shapash",

# Icon of the navbar

- 'logo_icon': '',

-

+ "logo_icon": "",

# Visible levels of the global TOC; -1 means unlimited

- 'globaltoc_depth': 3,

+ "globaltoc_depth": 3,

# If False, expand all TOC entries

- 'globaltoc_collapse': True,

+ "globaltoc_collapse": True,

# If True, show hidden TOC entries

- 'globaltoc_includehidden': False,

+ "globaltoc_includehidden": False,

}

-html_sidebars = {

- "**": ["logo-text.html", "globaltoc.html", "localtoc.html", "searchbox.html"]

-}

+html_sidebars = {"**": ["logo-text.html", "globaltoc.html", "localtoc.html", "searchbox.html"]}

# Add any paths that contain custom static files (such as style sheets) here,

# relative to this directory. They are copied after the builtin static files,

# so a file named "default.css" will overwrite the builtin "default.css".

-html_static_path = ['_static']

+html_static_path = ["_static"]

# -- Extension configuration -------------------------------------------------

@@ -112,9 +107,10 @@

# -- Additional html pages -------------------------------------------------

import subprocess

+

# Generates the report example in the documentation

-subprocess.call(['python', '../tutorial/generate_report/shapash_report_example.py'])

-html_extra_path = ['../tutorial/report/output/report.html']

+subprocess.call(["python", "../tutorial/generate_report/shapash_report_example.py"])

+html_extra_path = ["../tutorial/report/output/report.html"]

def setup_tutorials():

@@ -123,33 +119,45 @@ def setup_tutorials():

def _copy_notebooks_and_create_rst(d_path, new_d_path, d_name):

os.makedirs(new_d_path, exist_ok=True)

- list_notebooks = [f for f in os.listdir(d_path) if os.path.splitext(f)[-1] == '.ipynb']

+ list_notebooks = [f for f in os.listdir(d_path) if os.path.splitext(f)[-1] == ".ipynb"]

for notebook_f_name in list_notebooks:

shutil.copyfile(os.path.join(d_path, notebook_f_name), os.path.join(new_d_path, notebook_f_name))

# RST file (see for example docs/overview.rst)

- rst_file = '\n'.join(

- [f'{d_name}', '======================', '', '.. toctree::',

- ' :maxdepth: 1', ' :glob:', '', ' *', '']

+ rst_file = "\n".join(

+ [

+ f"{d_name}",

+ "======================",

+ "",

+ ".. toctree::",

+ " :maxdepth: 1",

+ " :glob:",

+ "",

+ " *",

+ "",

+ ]

)

- with open(os.path.join(new_d_path, 'index.rst'), 'w') as f:

+ with open(os.path.join(new_d_path, "index.rst"), "w") as f:

f.write(rst_file)

docs_path = pathlib.Path(__file__).parent

- tutorials_path = os.path.join(docs_path.parent, 'tutorial')

- tutorials_doc_path = os.path.join(docs_path, 'tutorials')

+ tutorials_path = os.path.join(docs_path.parent, "tutorial")

+ tutorials_doc_path = os.path.join(docs_path, "tutorials")

# Create a directory in shapash/docs/tutorials for each directory of shapash/tutorial

# And copy each notebook file in it

- list_dir = [d for d in os.listdir(tutorials_path) if os.path.isdir(os.path.join(tutorials_path, d))

- and not d.startswith('.')]

+ list_dir = [

+ d

+ for d in os.listdir(tutorials_path)

+ if os.path.isdir(os.path.join(tutorials_path, d)) and not d.startswith(".")

+ ]

for d_name in list_dir:

d_path = os.path.join(tutorials_path, d_name)

new_d_path = os.path.join(tutorials_doc_path, d_name)

_copy_notebooks_and_create_rst(d_path, new_d_path, d_name)

# Also copying all the overview tutorials (shapash/tutorial/shapash-overview-in-jupyter.ipynb for example)

- _copy_notebooks_and_create_rst(tutorials_path, tutorials_doc_path, 'overview')

+ _copy_notebooks_and_create_rst(tutorials_path, tutorials_doc_path, "overview")

setup_tutorials()

diff --git a/requirements.dev.txt b/requirements.dev.txt

index 9dfe603e..6e89a7b2 100644

--- a/requirements.dev.txt

+++ b/requirements.dev.txt

@@ -25,7 +25,7 @@ nbsphinx==0.8.8

sphinx_material==0.0.35

pytest>=6.2.5

pytest-cov>=2.8.1

-scikit-learn>=1.0.1

+scikit-learn>=1.0.1,<1.4

xgboost>=1.0.0

nbformat>4.2.0

numba>=0.53.1

diff --git a/setup.py b/setup.py

index 11b1c462..76df371c 100644

--- a/setup.py

+++ b/setup.py

@@ -1,108 +1,121 @@

#!/usr/bin/env python

-# -*- coding: utf-8 -*-

"""The setup script."""

import os

+

from setuptools import setup

here = os.path.abspath(os.path.dirname(__file__))

-with open('README.md', encoding='utf8') as readme_file:

+with open("README.md", encoding="utf8") as readme_file:

long_description = readme_file.read()

# Load the package's __version__.py module as a dictionary.

version_d: dict = {}

-with open(os.path.join(here, 'shapash', "__version__.py")) as f:

+with open(os.path.join(here, "shapash", "__version__.py")) as f:

exec(f.read(), version_d)

requirements = [

- 'plotly>=5.0.0',

- 'matplotlib>=3.2.0',

- 'numpy>1.18.0',

- 'pandas>1.0.2',

- 'shap>=0.38.1',

- 'Flask<2.3.0',

- 'dash>=2.3.1',

- 'dash-bootstrap-components>=1.1.0',

- 'dash-core-components>=2.0.0',

- 'dash-daq>=0.5.0',

- 'dash-html-components>=2.0.0',

- 'dash-renderer==1.8.3',

- 'dash-table>=5.0.0',

- 'nbformat>4.2.0',

- 'numba>=0.53.1',

- 'scikit-learn>=1.0.1',

- 'category_encoders>=2.6.0',

- 'scipy>=0.19.1',

+ "plotly>=5.0.0",

+ "matplotlib>=3.2.0",

+ "numpy>1.18.0",

+ "pandas>1.0.2",

+ "shap>=0.38.1",

+ "Flask<2.3.0",

+ "dash>=2.3.1",

+ "dash-bootstrap-components>=1.1.0",

+ "dash-core-components>=2.0.0",

+ "dash-daq>=0.5.0",

+ "dash-html-components>=2.0.0",

+ "dash-renderer==1.8.3",

+ "dash-table>=5.0.0",

+ "nbformat>4.2.0",

+ "numba>=0.53.1",

+ "scikit-learn>=1.0.1,<1.4",

+ "category_encoders>=2.6.0",

+ "scipy>=0.19.1",

]

extras = dict()

# This list should be identical to the list in shapash/report/__init__.py

-extras['report'] = [

- 'nbconvert>=6.0.7',

- 'papermill>=2.0.0',

- 'jupyter-client>=7.4.0',

- 'seaborn==0.12.2',

- 'notebook',

- 'Jinja2>=2.11.0',

- 'phik'

+extras["report"] = [

+ "nbconvert>=6.0.7",

+ "papermill>=2.0.0",

+ "jupyter-client>=7.4.0",

+ "seaborn==0.12.2",

+ "notebook",

+ "Jinja2>=2.11.0",

+ "phik",

]

-extras['xgboost'] = ['xgboost>=1.0.0']

-extras['lightgbm'] = ['lightgbm>=2.3.0']

-extras['catboost'] = ['catboost>=1.0.1']

-extras['lime'] = ['lime>=0.2.0.0']

+extras["xgboost"] = ["xgboost>=1.0.0"]

+extras["lightgbm"] = ["lightgbm>=2.3.0"]

+extras["catboost"] = ["catboost>=1.0.1"]

+extras["lime"] = ["lime>=0.2.0.0"]

-setup_requirements = ['pytest-runner', ]

+setup_requirements = [

+ "pytest-runner",

+]

-test_requirements = ['pytest', ]

+test_requirements = [

+ "pytest",

+]

setup(

name="shapash",

- version=version_d['__version__'],

- python_requires='>3.7, <3.12',

- url='https://github.com/MAIF/shapash',

+ version=version_d["__version__"],

+ python_requires=">3.7, <3.12",

+ url="https://github.com/MAIF/shapash",

author="Yann Golhen, Sebastien Bidault, Yann Lagre, Maxime Gendre",

author_email="yann.golhen@maif.fr",

description="Shapash is a Python library which aims to make machine learning interpretable and understandable by everyone.",

long_description=long_description,

- long_description_content_type='text/markdown',

+ long_description_content_type="text/markdown",

classifiers=[

- 'Programming Language :: Python :: 3',

- 'Programming Language :: Python :: 3.8',

- 'Programming Language :: Python :: 3.9',

- 'Programming Language :: Python :: 3.10',

- 'Programming Language :: Python :: 3.11',

+ "Programming Language :: Python :: 3",

+ "Programming Language :: Python :: 3.8",

+ "Programming Language :: Python :: 3.9",

+ "Programming Language :: Python :: 3.10",

+ "Programming Language :: Python :: 3.11",

"License :: OSI Approved :: Apache Software License",

"Operating System :: OS Independent",

],

install_requires=requirements,

extras_require=extras,

license="Apache Software License 2.0",

- keywords='shapash',

+ keywords="shapash",

package_dir={

- 'shapash': 'shapash',

- 'shapash.data': 'shapash/data',

- 'shapash.decomposition': 'shapash/decomposition',

- 'shapash.explainer': 'shapash/explainer',

- 'shapash.backend': 'shapash/backend',

- 'shapash.manipulation': 'shapash/manipulation',

- 'shapash.report': 'shapash/report',

- 'shapash.utils': 'shapash/utils',

- 'shapash.webapp': 'shapash/webapp',

- 'shapash.webapp.utils': 'shapash/webapp/utils',

- 'shapash.style': 'shapash/style',

+ "shapash": "shapash",

+ "shapash.data": "shapash/data",

+ "shapash.decomposition": "shapash/decomposition",

+ "shapash.explainer": "shapash/explainer",

+ "shapash.backend": "shapash/backend",

+ "shapash.manipulation": "shapash/manipulation",

+ "shapash.report": "shapash/report",

+ "shapash.utils": "shapash/utils",

+ "shapash.webapp": "shapash/webapp",

+ "shapash.webapp.utils": "shapash/webapp/utils",

+ "shapash.style": "shapash/style",

},

- packages=['shapash', 'shapash.data', 'shapash.decomposition',

- 'shapash.explainer', 'shapash.backend', 'shapash.manipulation',

- 'shapash.utils', 'shapash.webapp', 'shapash.webapp.utils',

- 'shapash.report', 'shapash.style'],

- data_files=[('style', ['shapash/style/colors.json'])],

+ packages=[

+ "shapash",

+ "shapash.data",

+ "shapash.decomposition",

+ "shapash.explainer",

+ "shapash.backend",

+ "shapash.manipulation",

+ "shapash.utils",

+ "shapash.webapp",

+ "shapash.webapp.utils",

+ "shapash.report",

+ "shapash.style",

+ ],

+ data_files=[("style", ["shapash/style/colors.json"])],

include_package_data=True,

setup_requires=setup_requirements,

- test_suite='tests',

+ test_suite="tests",

tests_require=test_requirements,

zip_safe=False,

)

diff --git a/shapash/__init__.py b/shapash/__init__.py

index 52cbbfb2..59117772 100644

--- a/shapash/__init__.py

+++ b/shapash/__init__.py

@@ -1,8 +1,10 @@

-

"""Top-level package."""

-__author__ = """Yann Golhen, Yann Lagré, Sebastien Bidault, Maxime Gendre, Thomas Bouche, Johann Martin, Guillaume Vignal"""

-__email__ = 'yann.golhen@maif.fr, yann.lagre@maif.fr, sebabstien.bidault.marketing@maif.fr, thomas.bouche@maif.fr, guillaume.vignal@maif.fr'

+__author__ = (

+ """Yann Golhen, Yann Lagré, Sebastien Bidault, Maxime Gendre, Thomas Bouche, Johann Martin, Guillaume Vignal"""

+)

+__email__ = "yann.golhen@maif.fr, yann.lagre@maif.fr, sebabstien.bidault.marketing@maif.fr, thomas.bouche@maif.fr, guillaume.vignal@maif.fr"

-from .__version__ import __version__

from shapash.explainer.smart_explainer import SmartExplainer

+

+from .__version__ import __version__

diff --git a/shapash/backend/__init__.py b/shapash/backend/__init__.py

index b9d262ab..0627fb74 100644

--- a/shapash/backend/__init__.py

+++ b/shapash/backend/__init__.py

@@ -1,9 +1,9 @@

-import sys

import inspect

+import sys

from .base_backend import BaseBackend

-from .shap_backend import ShapBackend

from .lime_backend import LimeBackend

+from .shap_backend import ShapBackend

def get_backend_cls_from_name(name):

@@ -14,10 +14,10 @@ def get_backend_cls_from_name(name):

cls

for _, cls in inspect.getmembers(sys.modules[__name__])

if (

- inspect.isclass(cls)

- and issubclass(cls, BaseBackend)

- and cls.name.lower() == name.lower()

- and cls.name.lower() != 'base'

+ inspect.isclass(cls)

+ and issubclass(cls, BaseBackend)

+ and cls.name.lower() == name.lower()

+ and cls.name.lower() != "base"

)

]

diff --git a/shapash/backend/base_backend.py b/shapash/backend/base_backend.py

index cd7ef5b8..6e3699a3 100644

--- a/shapash/backend/base_backend.py

+++ b/shapash/backend/base_backend.py

@@ -1,9 +1,10 @@

from abc import ABC, abstractmethod

from typing import Any, List, Optional, Union

-import pandas as pd

+

import numpy as np

+import pandas as pd

-from shapash.utils.check import check_model, check_contribution_object

+from shapash.utils.check import check_contribution_object, check_model

from shapash.utils.transform import adapt_contributions, get_preprocessing_mapping

from shapash.utils.utils import choose_state

@@ -19,13 +20,13 @@ class BaseBackend(ABC):

# `column_aggregation` defines a way to aggregate local contributions.

# Default is sum, possible values are 'sum' or 'first'.

# It allows to compute (column-wise) aggregation of local contributions.

- column_aggregation = 'sum'

+ column_aggregation = "sum"

# `name` defines the string name of the backend allowing to identify and

# construct the backend from it.

- name = 'base'

+ name = "base"

support_groups = True

- supported_cases = ['classification', 'regression']

+ supported_cases = ["classification", "regression"]

def __init__(self, model: Any, preprocessing: Optional[Any] = None):

"""Create a backend instance using a given implementation.

@@ -43,7 +44,7 @@ def __init__(self, model: Any, preprocessing: Optional[Any] = None):

self.state = None

self._case, self._classes = check_model(model)

if self._case not in self.supported_cases:

- raise ValueError(f'Model not supported by the backend as it does not cover {self._case} case')

+ raise ValueError(f"Model not supported by the backend as it does not cover {self._case} case")

@abstractmethod

def run_explainer(self, x: pd.DataFrame) -> dict:

@@ -53,10 +54,7 @@ def run_explainer(self, x: pd.DataFrame) -> dict:

)

def get_local_contributions(

- self,

- x: pd.DataFrame,

- explain_data: Any,

- subset: Optional[List[int]] = None

+ self, x: pd.DataFrame, explain_data: Any, subset: Optional[List[int]] = None

) -> Union[pd.DataFrame, List[pd.DataFrame]]:

"""Get local contributions using the explainer data computed in the `run_explainer`

method.

@@ -81,24 +79,21 @@ def get_local_contributions(

The local contributions computed by the backend.

"""

assert isinstance(explain_data, dict), "The _run_explainer method should return a dict"

- if 'contributions' not in explain_data.keys():

+ if "contributions" not in explain_data.keys():

raise ValueError(

- 'The _run_explainer method should return a dict'

- ' with at least `contributions` key containing '

- 'the local contributions'

+ "The _run_explainer method should return a dict"

+ " with at least `contributions` key containing "

+ "the local contributions"

)

- local_contributions = explain_data['contributions']

+ local_contributions = explain_data["contributions"]

if subset is not None:

local_contributions = local_contributions.loc[subset]

local_contributions = self.format_and_aggregate_local_contributions(x, local_contributions)

return local_contributions

def get_global_features_importance(

- self,

- contributions: pd.DataFrame,

- explain_data: Optional[dict] = None,

- subset: Optional[List[int]] = None

+ self, contributions: pd.DataFrame, explain_data: Optional[dict] = None, subset: Optional[List[int]] = None

) -> Union[pd.Series, List[pd.Series]]:

"""Get global contributions using the explainer data computed in the `run_explainer`

method.

@@ -126,9 +121,9 @@ def get_global_features_importance(

return state.compute_features_import(contributions)

def format_and_aggregate_local_contributions(

- self,

- x: pd.DataFrame,

- contributions: Union[pd.DataFrame, np.array, List[pd.DataFrame], List[np.array]],

+ self,

+ x: pd.DataFrame,

+ contributions: Union[pd.DataFrame, np.array, List[pd.DataFrame], List[np.array]],

) -> Union[pd.DataFrame, List[pd.DataFrame]]:

"""

This function allows to format and aggregate contributions in the right format

@@ -153,7 +148,8 @@ def format_and_aggregate_local_contributions(

check_contribution_object(self._case, self._classes, contributions)

contributions = self.state.validate_contributions(contributions, x)

contributions_cols = (

- contributions.columns.to_list() if isinstance(contributions, pd.DataFrame)

+ contributions.columns.to_list()

+ if isinstance(contributions, pd.DataFrame)

else contributions[0].columns.to_list()

)

if _needs_preprocessing(contributions_cols, x, self.preprocessing):

@@ -161,8 +157,7 @@ def format_and_aggregate_local_contributions(

return contributions

def _apply_preprocessing(

- self,

- contributions: Union[pd.DataFrame, List[pd.DataFrame]]

+ self, contributions: Union[pd.DataFrame, List[pd.DataFrame]]

) -> Union[pd.DataFrame, List[pd.DataFrame]]:

"""

Reconstruct contributions for original features, taken into account a preprocessing.

@@ -179,9 +174,7 @@ def _apply_preprocessing(

"""

if self.preprocessing:

return self.state.inverse_transform_contributions(

- contributions,

- self.preprocessing,

- agg_columns=self.column_aggregation

+ contributions, self.preprocessing, agg_columns=self.column_aggregation

)

else:

return contributions

diff --git a/shapash/backend/lime_backend.py b/shapash/backend/lime_backend.py

index ada25113..3830ba21 100644

--- a/shapash/backend/lime_backend.py

+++ b/shapash/backend/lime_backend.py

@@ -1,10 +1,11 @@

try:

from lime import lime_tabular

+

is_lime_available = True

except ImportError:

is_lime_available = False

-from typing import Any, Optional, List, Union

+from typing import Any, List, Optional, Union

import pandas as pd

@@ -12,12 +13,12 @@

class LimeBackend(BaseBackend):

- column_aggregation = 'sum'

- name = 'lime'

+ column_aggregation = "sum"

+ name = "lime"

support_groups = False

def __init__(self, model, preprocessing=None, data=None, **kwargs):

- super(LimeBackend, self).__init__(model, preprocessing)

+ super().__init__(model, preprocessing)

self.explainer = None

self.data = data

@@ -36,11 +37,7 @@ def run_explainer(self, x: pd.DataFrame):

dict containing local contributions

"""

data = self.data if self.data is not None else x

- explainer = lime_tabular.LimeTabularExplainer(

- data.values,

- feature_names=x.columns,

- mode=self._case

- )

+ explainer = lime_tabular.LimeTabularExplainer(data.values, feature_names=x.columns, mode=self._case)

lime_contrib = []

for i in x.index:

@@ -49,8 +46,7 @@ def run_explainer(self, x: pd.DataFrame):

if num_classes <= 2:

exp = explainer.explain_instance(x.loc[i], self.model.predict_proba, num_features=x.shape[1])

- lime_contrib.append(

- dict([[_transform_name(var_name[0], x), var_name[1]] for var_name in exp.as_list()]))

+ lime_contrib.append({_transform_name(var_name[0], x): var_name[1] for var_name in exp.as_list()})

elif num_classes > 2:

contribution = []

@@ -59,11 +55,11 @@ def run_explainer(self, x: pd.DataFrame):

df_contrib = pd.DataFrame()

for i in x.index:

exp = explainer.explain_instance(

- x.loc[i], self.model.predict_proba, top_labels=num_classes,

- num_features=x.shape[1])

+ x.loc[i], self.model.predict_proba, top_labels=num_classes, num_features=x.shape[1]

+ )

list_contrib.append(

- dict([[_transform_name(var_name[0], x), var_name[1]] for var_name in

- exp.as_list(j)]))

+ {_transform_name(var_name[0], x): var_name[1] for var_name in exp.as_list(j)}

+ )

df_contrib = pd.DataFrame(list_contrib)

df_contrib = df_contrib[list(x.columns)]

contribution.append(df_contrib.values)

@@ -71,8 +67,7 @@ def run_explainer(self, x: pd.DataFrame):

else:

exp = explainer.explain_instance(x.loc[i], self.model.predict, num_features=x.shape[1])

- lime_contrib.append(

- dict([[_transform_name(var_name[0], x), var_name[1]] for var_name in exp.as_list()]))

+ lime_contrib.append({_transform_name(var_name[0], x): var_name[1] for var_name in exp.as_list()})

contributions = pd.DataFrame(lime_contrib, index=x.index)

contributions = contributions[list(x.columns)]

@@ -83,9 +78,8 @@ def run_explainer(self, x: pd.DataFrame):

def _transform_name(var_name, x_df):

- """Function for transform name of LIME contribution shape to a comprehensive name

- """

+ """Function for transform name of LIME contribution shape to a comprehensive name"""

for colname in list(x_df.columns):

- if f' {colname} ' in f' {var_name} ':

+ if f" {colname} " in f" {var_name} ":

col_rename = colname

return col_rename

diff --git a/shapash/backend/shap_backend.py b/shapash/backend/shap_backend.py

index 4771758c..716a304a 100644

--- a/shapash/backend/shap_backend.py

+++ b/shapash/backend/shap_backend.py

@@ -1,5 +1,5 @@

-import pandas as pd

import numpy as np

+import pandas as pd

import shap

from shapash.backend.base_backend import BaseBackend

@@ -8,18 +8,18 @@

class ShapBackend(BaseBackend):

# When grouping features contributions together, Shap uses the sum of the contributions

# of the features that belong to the group

- column_aggregation = 'sum'

- name = 'shap'

+ column_aggregation = "sum"

+ name = "shap"

def __init__(self, model, preprocessing=None, masker=None, explainer_args=None, explainer_compute_args=None):

- super(ShapBackend, self).__init__(model, preprocessing)

+ super().__init__(model, preprocessing)

self.masker = masker

self.explainer_args = explainer_args if explainer_args else {}

self.explainer_compute_args = explainer_compute_args if explainer_compute_args else {}

if self.explainer_args:

if "explainer" in self.explainer_args.keys():

- shap_parameters = {k: v for k, v in self.explainer_args.items() if k != 'explainer'}

+ shap_parameters = {k: v for k, v in self.explainer_args.items() if k != "explainer"}

self.explainer = self.explainer_args["explainer"](**shap_parameters)

else:

self.explainer = shap.Explainer(**self.explainer_args)

@@ -31,15 +31,14 @@ def __init__(self, model, preprocessing=None, masker=None, explainer_args=None,

elif shap.explainers.Additive.supports_model_with_masker(model, self.masker):

self.explainer = shap.Explainer(model=model, masker=self.masker)

# otherwise use a model agnostic method

- elif hasattr(model, 'predict_proba'):

+ elif hasattr(model, "predict_proba"):

self.explainer = shap.Explainer(model=model.predict_proba, masker=self.masker)

- elif hasattr(model, 'predict'):

+ elif hasattr(model, "predict"):

self.explainer = shap.Explainer(model=model.predict, masker=self.masker)

# if we get here then we don't know how to handle what was given to us

else:

raise ValueError("The model is not recognized by Shapash! Model: " + str(model))

-

def run_explainer(self, x: pd.DataFrame) -> dict:

"""

Computes and returns local contributions using Shap explainer

@@ -78,8 +77,10 @@ def get_shap_interaction_values(x_df, explainer):

Shap interaction values for each sample as an array of shape (# samples x # features x # features).

"""

if not isinstance(explainer, shap.TreeExplainer):

- raise ValueError(f"Explainer type ({type(explainer)}) is not a TreeExplainer. "

- f"Shap interaction values can only be computed for TreeExplainer types")

+ raise ValueError(

+ f"Explainer type ({type(explainer)}) is not a TreeExplainer. "

+ f"Shap interaction values can only be computed for TreeExplainer types"

+ )

shap_interaction_values = explainer.shap_interaction_values(x_df)

diff --git a/shapash/data/data_loader.py b/shapash/data/data_loader.py

index bf91ca20..e1ad6699 100644

--- a/shapash/data/data_loader.py

+++ b/shapash/data/data_loader.py

@@ -1,12 +1,13 @@

"""

Data loader module

"""

+import json

import os

from pathlib import Path

-import json

-import pandas as pd

-from urllib.request import urlopen

from urllib.error import URLError

+from urllib.request import urlopen

+

+import pandas as pd

def _find_file(data_path, github_data_url, filename):

@@ -29,7 +30,7 @@ def _find_file(data_path, github_data_url, filename):

"""

file = os.path.join(data_path, filename)

if os.path.isfile(file) is False:

- file = github_data_url+filename

+ file = github_data_url + filename

try:

urlopen(file)

except URLError:

@@ -63,42 +64,42 @@ def data_loading(dataset):

If exist, columns labels dictionnary associated to the dataset.

"""

data_path = str(Path(__file__).parents[2] / "data")

- if dataset == 'house_prices':

- github_data_url = 'https://github.com/MAIF/shapash/raw/master/data/'

+ if dataset == "house_prices":

+ github_data_url = "https://github.com/MAIF/shapash/raw/master/data/"

data_house_prices_path = _find_file(data_path, github_data_url, "house_prices_dataset.csv")

dict_house_prices_path = _find_file(data_path, github_data_url, "house_prices_labels.json")

- data = pd.read_csv(data_house_prices_path, header=0, index_col=0, engine='python')

+ data = pd.read_csv(data_house_prices_path, header=0, index_col=0, engine="python")

if github_data_url in dict_house_prices_path:

with urlopen(dict_house_prices_path) as openfile:

dic = json.load(openfile)

else:

- with open(dict_house_prices_path, 'r') as openfile:

+ with open(dict_house_prices_path) as openfile:

dic = json.load(openfile)

return data, dic

- elif dataset == 'titanic':

- github_data_url = 'https://github.com/MAIF/shapash/raw/master/data/'

+ elif dataset == "titanic":

+ github_data_url = "https://github.com/MAIF/shapash/raw/master/data/"

data_titanic_path = _find_file(data_path, github_data_url, "titanicdata.csv")

- dict_titanic_path = _find_file(data_path, github_data_url, 'titaniclabels.json')

- data = pd.read_csv(data_titanic_path, header=0, index_col=0, engine='python')

+ dict_titanic_path = _find_file(data_path, github_data_url, "titaniclabels.json")

+ data = pd.read_csv(data_titanic_path, header=0, index_col=0, engine="python")

if github_data_url in dict_titanic_path:

with urlopen(dict_titanic_path) as openfile:

dic = json.load(openfile)

else:

- with open(dict_titanic_path, 'r') as openfile:

+ with open(dict_titanic_path) as openfile:

dic = json.load(openfile)

return data, dic

- elif dataset == 'telco_customer_churn':

- github_data_url = 'https://github.com/IBM/telco-customer-churn-on-icp4d/raw/master/data/'

+ elif dataset == "telco_customer_churn":

+ github_data_url = "https://github.com/IBM/telco-customer-churn-on-icp4d/raw/master/data/"

data_telco_path = _find_file(data_path, github_data_url, "Telco-Customer-Churn.csv")

- data = pd.read_csv(data_telco_path, header=0, index_col=0, engine='python')

+ data = pd.read_csv(data_telco_path, header=0, index_col=0, engine="python")

return data

- elif dataset == 'us_car_accident':

- github_data_url = 'https://github.com/MAIF/shapash/raw/master/data/'

+ elif dataset == "us_car_accident":

+ github_data_url = "https://github.com/MAIF/shapash/raw/master/data/"

data_accidents_path = _find_file(data_path, github_data_url, "US_Accidents_extract.csv")

- data = pd.read_csv(data_accidents_path, header=0, engine='python')

+ data = pd.read_csv(data_accidents_path, header=0, engine="python")

return data

else:

diff --git a/shapash/decomposition/contributions.py b/shapash/decomposition/contributions.py

index 9820de2f..79f21409 100644

--- a/shapash/decomposition/contributions.py

+++ b/shapash/decomposition/contributions.py

@@ -2,14 +2,15 @@

Contributions

"""

-import pandas as pd

import numpy as np

-from shapash.utils.transform import preprocessing_tolist

-from shapash.utils.transform import check_transformers

+import pandas as pd

+

from shapash.utils.category_encoder_backend import calc_inv_contrib_ce

from shapash.utils.columntransformer_backend import calc_inv_contrib_ct

+from shapash.utils.transform import check_transformers, preprocessing_tolist

-def inverse_transform_contributions(contributions, preprocessing=None, agg_columns='sum'):

+

+def inverse_transform_contributions(contributions, preprocessing=None, agg_columns="sum"):

"""

Reverse contribution giving a preprocessing.

@@ -38,12 +39,12 @@ def inverse_transform_contributions(contributions, preprocessing=None, agg_colum

"""

if not isinstance(contributions, pd.DataFrame):

- raise Exception('Shap values must be a pandas dataframe.')

+ raise Exception("Shap values must be a pandas dataframe.")

if preprocessing is None:

return contributions

else:

- #Transform preprocessing into a list

+ # Transform preprocessing into a list

list_encoding = preprocessing_tolist(preprocessing)

# check supported inverse

@@ -59,6 +60,7 @@ def inverse_transform_contributions(contributions, preprocessing=None, agg_colum

x_contrib_invers = calc_inv_contrib_ce(x_contrib_invers, encoding, agg_columns)

return x_contrib_invers

+

def rank_contributions(s_df, x_df):

"""

Function to sort contributions and input features

@@ -85,14 +87,15 @@ def rank_contributions(s_df, x_df):

sorted_contrib = np.take_along_axis(s_df.values, argsort, axis=1)

sorted_features = np.take_along_axis(x_df.values, argsort, axis=1)

- contrib_col = ['contribution_' + str(i) for i in range(s_df.shape[1])]

- col = ['feature_' + str(i) for i in range(s_df.shape[1])]

+ contrib_col = ["contribution_" + str(i) for i in range(s_df.shape[1])]

+ col = ["feature_" + str(i) for i in range(s_df.shape[1])]

s_dict = pd.DataFrame(data=argsort, columns=col, index=x_df.index)

s_ord = pd.DataFrame(data=sorted_contrib, columns=contrib_col, index=x_df.index)

x_ord = pd.DataFrame(data=sorted_features, columns=col, index=x_df.index)

return [s_ord, x_ord, s_dict]

+

def assign_contributions(ranked):

"""

Turn a list of results into a dict.

@@ -114,11 +117,7 @@ def assign_contributions(ranked):

"""

if len(ranked) != 3:

raise ValueError(

- 'Expected lenght : 3, observed lenght : {},'

- 'please check the outputs of rank_contributions.'.format(len(ranked))

+ "Expected lenght : 3, observed lenght : {},"

+ "please check the outputs of rank_contributions.".format(len(ranked))

)

- return {

- 'contrib_sorted': ranked[0],

- 'x_sorted': ranked[1],

- 'var_dict': ranked[2]

- }

+ return {"contrib_sorted": ranked[0], "x_sorted": ranked[1], "var_dict": ranked[2]}

diff --git a/shapash/explainer/consistency.py b/shapash/explainer/consistency.py

index 0dd56cb3..631287c9 100644

--- a/shapash/explainer/consistency.py

+++ b/shapash/explainer/consistency.py

@@ -1,20 +1,20 @@

-from category_encoders import OrdinalEncoder

import copy

import itertools

+

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

+from category_encoders import OrdinalEncoder

from plotly import graph_objs as go

from plotly.offline import plot

from plotly.subplots import make_subplots

from sklearn.manifold import MDS

from shapash import SmartExplainer

-from shapash.style.style_utils import colors_loading, select_palette, define_style

-

+from shapash.style.style_utils import colors_loading, define_style, select_palette

-class Consistency():

+class Consistency:

def __init__(self):

self._palette_name = list(colors_loading().keys())[0]

self._style_dict = define_style(select_palette(colors_loading(), self._palette_name))

@@ -27,9 +27,8 @@ def tuning_colorscale(self, values):

values whose quantiles must be calculated

"""

desc_df = values.describe(percentiles=np.arange(0.1, 1, 0.1).tolist())

- min_pred, max_init = list(desc_df.loc[['min', 'max']].values)

- desc_pct_df = (desc_df.loc[~desc_df.index.isin(['count', 'mean', 'std'])] - min_pred) / \

- (max_init - min_pred)

+ min_pred, max_init = list(desc_df.loc[["min", "max"]].values)

+ desc_pct_df = (desc_df.loc[~desc_df.index.isin(["count", "mean", "std"])] - min_pred) / (max_init - min_pred)

color_scale = list(map(list, (zip(desc_pct_df.values.flatten(), self._style_dict["init_contrib_colorscale"]))))

return color_scale

@@ -59,7 +58,7 @@ def compile(self, contributions, x=None, preprocessing=None):

self.x = x

self.preprocessing = preprocessing

if not isinstance(contributions, dict):

- raise ValueError('Contributions must be a dictionary')

+ raise ValueError("Contributions must be a dictionary")

self.methods = list(contributions.keys())

self.weights = list(contributions.values())

@@ -77,15 +76,15 @@ def check_consistency_contributions(self, weights):

List of contributions from different methods

"""

if weights[0].ndim == 1:

- raise ValueError('Multiple datapoints are required to compute the metric')

+ raise ValueError("Multiple datapoints are required to compute the metric")

if not all(isinstance(x, pd.DataFrame) for x in weights):

- raise ValueError('Contributions must be pandas DataFrames')

+ raise ValueError("Contributions must be pandas DataFrames")

if not all(x.shape == weights[0].shape for x in weights):

- raise ValueError('Contributions must be of same shape')

+ raise ValueError("Contributions must be of same shape")

if not all(x.columns.tolist() == weights[0].columns.tolist() for x in weights):

- raise ValueError('Columns names are different between contributions')

+ raise ValueError("Columns names are different between contributions")

if not all(x.index.tolist() == weights[0].index.tolist() for x in weights):

- raise ValueError('Index names are different between contributions')

+ raise ValueError("Index names are different between contributions")

def consistency_plot(self, selection=None, max_features=20):

"""

@@ -113,16 +112,17 @@ def consistency_plot(self, selection=None, max_features=20):

weights = [weight.values for weight in self.weights]

elif isinstance(selection, list):

if len(selection) == 1:

- raise ValueError('Selection must include multiple points')

+ raise ValueError("Selection must include multiple points")

else:

weights = [weight.values[selection] for weight in self.weights]

else:

- raise ValueError('Parameter selection must be a list')

+ raise ValueError("Parameter selection must be a list")

all_comparisons, mean_distances = self.calculate_all_distances(self.methods, weights)

- method_1, method_2, l2, index, backend_name_1, backend_name_2 = \

- self.find_examples(mean_distances, all_comparisons, weights)

+ method_1, method_2, l2, index, backend_name_1, backend_name_2 = self.find_examples(

+ mean_distances, all_comparisons, weights

+ )

self.plot_comparison(mean_distances)

self.plot_examples(method_1, method_2, l2, index, backend_name_1, backend_name_2, max_features)

@@ -156,7 +156,12 @@ def calculate_all_distances(self, methods, weights):

l2_dist = self.calculate_pairwise_distances(weights, index_i, index_j)

# Populate the (n choose 2)x4 array

pairwise_comparison = np.column_stack(

- (np.repeat(index_i, len(l2_dist)), np.repeat(index_j, len(l2_dist)), np.arange(len(l2_dist)), l2_dist,)

+ (

+ np.repeat(index_i, len(l2_dist)),

+ np.repeat(index_j, len(l2_dist)),

+ np.arange(len(l2_dist)),

+ l2_dist,

+ )

)

all_comparisons = np.concatenate((all_comparisons, pairwise_comparison), axis=0)

@@ -249,7 +254,9 @@ def find_examples(self, mean_distances, all_comparisons, weights):

l2 = []

# Evenly split the scale of L2 distances (from min to max excluding 0)

- for i in np.linspace(start=mean_distances[mean_distances > 0].min().min(), stop=mean_distances.max().max(), num=5):

+ for i in np.linspace(

+ start=mean_distances[mean_distances > 0].min().min(), stop=mean_distances.max().max(), num=5

+ ):

# For each split, find the closest existing L2 distance

closest_l2 = all_comparisons[:, -1][np.abs(all_comparisons[:, -1] - i).argmin()]

# Return the row that contains this L2 distance

@@ -298,18 +305,25 @@ def plot_comparison(self, mean_distances):

mean_distances : DataFrame

DataFrame storing all pairwise distances between methods

"""

- font = {"color": '#{:02x}{:02x}{:02x}'.format(50, 50, 50)}

+ font = {"color": "#{:02x}{:02x}{:02x}".format(50, 50, 50)}

fig, ax = plt.subplots(ncols=1, figsize=(10, 6))

- ax.text(x=0.5, y=1.04, s="Consistency of explanations:", fontsize=24, ha="center", transform=fig.transFigure, **font)

- ax.text(x=0.5, y=0.98, s="How similar are explanations from different methods?",

- fontsize=18, ha="center", transform=fig.transFigure, **font)

-

- ax.set_title(

- "Average distances between the explanations", fontsize=14, pad=-60

+ ax.text(

+ x=0.5, y=1.04, s="Consistency of explanations:", fontsize=24, ha="center", transform=fig.transFigure, **font

+ )

+ ax.text(

+ x=0.5,

+ y=0.98,

+ s="How similar are explanations from different methods?",

+ fontsize=18,

+ ha="center",

+ transform=fig.transFigure,

+ **font,

)

+ ax.set_title("Average distances between the explanations", fontsize=14, pad=-60)

+

coords = self.calculate_coords(mean_distances)

ax.scatter(coords[:, 0], coords[:, 1], marker="o")

@@ -331,9 +345,9 @@ def plot_comparison(self, mean_distances):

)

# set gray background

- ax.set_facecolor('#F5F5F2')

+ ax.set_facecolor("#F5F5F2")

# draw solid white grid lines

- ax.grid(color='w', linestyle='solid')

+ ax.grid(color="w", linestyle="solid")

lim = (coords.min().min(), coords.max().max())

margin = 0.1 * (lim[1] - lim[0])

@@ -395,8 +409,8 @@ def plot_examples(self, method_1, method_2, l2, index, backend_name_1, backend_n

figure

"""

y = np.arange(method_1[0].shape[0])

- fig, axes = plt.subplots(ncols=len(l2), figsize=(3*len(l2), 4))

- fig.subplots_adjust(wspace=.3, top=.8)

+ fig, axes = plt.subplots(ncols=len(l2), figsize=(3 * len(l2), 4))

+ fig.subplots_adjust(wspace=0.3, top=0.8)

if len(l2) == 1:

axes = np.array([axes])

fig.suptitle("Examples of explanations' comparisons for various distances (L2 norm)")

@@ -411,27 +425,36 @@ def plot_examples(self, method_1, method_2, l2, index, backend_name_1, backend_n

idx = np.flip(i.argsort())

i, j = i[idx], j[idx]

- axes[n].barh(y, i, label='method 1', left=0, color='#{:02x}{:02x}{:02x}'.format(255, 166, 17))

- axes[n].barh(y, j, label='method 2', left=np.abs(np.max(i)) + np.abs(np.min(j)) + np.max(i)/3,

- color='#{:02x}{:02x}{:02x}'.format(117, 152, 189)) # /3 to add space

+ axes[n].barh(y, i, label="method 1", left=0, color="#{:02x}{:02x}{:02x}".format(255, 166, 17))

+ axes[n].barh(

+ y,

+ j,

+ label="method 2",

+ left=np.abs(np.max(i)) + np.abs(np.min(j)) + np.max(i) / 3,

+ color="#{:02x}{:02x}{:02x}".format(117, 152, 189),

+ ) # /3 to add space

# set gray background

- axes[n].set_facecolor('#F5F5F2')

+ axes[n].set_facecolor("#F5F5F2")

# draw solid white grid lines

- axes[n].grid(color='w', linestyle='solid')

+ axes[n].grid(color="w", linestyle="solid")

- axes[n].set(title="%s: %s" %

- (self.index.name if self.index.name is not None else "Id", l) + "\n$d_{L2}$ = " + str(round(k, 2)))

+ axes[n].set(

+ title="{}: {}".format(self.index.name if self.index.name is not None else "Id", l)

+ + "\n$d_{L2}$ = "

+ + str(round(k, 2))

+ )

axes[n].set_xlabel("Contributions")

axes[n].set_ylabel(f"Top {max_features} features")

- axes[n].set_xticks([0, np.abs(np.max(i)) + np.abs(np.min(j)) + np.max(i)/3])

+ axes[n].set_xticks([0, np.abs(np.max(i)) + np.abs(np.min(j)) + np.max(i) / 3])

axes[n].set_xticklabels([m, o])

axes[n].set_yticks([])

return fig

- def pairwise_consistency_plot(self, methods, selection=None,

- max_features=10, max_points=100, file_name=None, auto_open=False):

+ def pairwise_consistency_plot(

+ self, methods, selection=None, max_features=10, max_points=100, file_name=None, auto_open=False

+ ):

"""The Pairwise_Consistency_plot compares the difference of 2 explainability methods across each feature and each data point,

and plots the distribution of those differences.

@@ -463,11 +486,11 @@ def pairwise_consistency_plot(self, methods, selection=None,

figure

"""

if self.x is None:

- raise ValueError('x must be defined in the compile to display the plot')

+ raise ValueError("x must be defined in the compile to display the plot")

if not isinstance(self.x, pd.DataFrame):

- raise ValueError('x must be a pandas DataFrame')

+ raise ValueError("x must be a pandas DataFrame")

if len(methods) != 2:

- raise ValueError('Choose 2 methods among methods of the contributions')

+ raise ValueError("Choose 2 methods among methods of the contributions")

# Select contributions of input methods

pair_indices = [self.methods.index(x) for x in methods]

@@ -480,12 +503,12 @@ def pairwise_consistency_plot(self, methods, selection=None,

x = self.x.iloc[ind_max_points]

elif isinstance(selection, list):

if len(selection) == 1:

- raise ValueError('Selection must include multiple points')

+ raise ValueError("Selection must include multiple points")

else:

weights = [weight.iloc[selection] for weight in pair_weights]

x = self.x.iloc[selection]

else:

- raise ValueError('Parameter selection must be a list')

+ raise ValueError("Parameter selection must be a list")

# Remove constant columns

const_cols = x.loc[:, x.apply(pd.Series.nunique) == 1]

@@ -526,15 +549,17 @@ def plot_pairwise_consistency(self, weights, x, top_features, methods, file_name

if isinstance(self.preprocessing, OrdinalEncoder):

encoder = self.preprocessing

else:

- categorical_features = [col for col in x.columns if x[col].dtype == 'object']

- encoder = OrdinalEncoder(cols=categorical_features,

- handle_unknown='ignore',

- return_df=True).fit(x)

+ categorical_features = [col for col in x.columns if x[col].dtype == "object"]

+ encoder = OrdinalEncoder(cols=categorical_features, handle_unknown="ignore", return_df=True).fit(x)

x = encoder.transform(x)

- xaxis_title = "Difference of contributions between the 2 methods" \

- + f"

{methods[0]} - {methods[1]}"

- yaxis_title = "Top features

(Ordered by mean of absolute contributions)"

+ xaxis_title = (

+ "Difference of contributions between the 2 methods"

+ + f"

{methods[0]} - {methods[1]}"

+ )

+ yaxis_title = (

+ "Top features

(Ordered by mean of absolute contributions)"

+ )

fig = make_subplots(specs=[[{"secondary_y": True}]])

@@ -551,11 +576,15 @@ def plot_pairwise_consistency(self, weights, x, top_features, methods, file_name

inverse_mapping = {v: k for k, v in mapping.to_dict().items()}

feature_value = x[c].map(inverse_mapping)

- hv_text = [f"Feature value: {i}

{methods[0]}: {j}

{methods[1]}: {k}

Diff: {l}"

- for i, j, k, l in zip(feature_value if switch else x[c].round(3),

- weights[0][c].round(2),

- weights[1][c].round(2),

- (weights[0][c] - weights[1][c]).round(2))]

+ hv_text = [

+ f"Feature value: {i}

{methods[0]}: {j}

{methods[1]}: {k}

Diff: {l}"

+ for i, j, k, l in zip(

+ feature_value if switch else x[c].round(3),

+ weights[0][c].round(2),

+ weights[1][c].round(2),

+ (weights[0][c] - weights[1][c]).round(2),

+ )

+ ]

fig.add_trace(

go.Violin(

@@ -565,25 +594,23 @@ def plot_pairwise_consistency(self, weights, x, top_features, methods, file_name

fillcolor="rgba(255, 0, 0, 0.1)",

line={"color": "black", "width": 0.5},

showlegend=False,

- ), secondary_y=False

+ ),

+ secondary_y=False,

)

fig.add_trace(

go.Scatter(

x=(weights[0][c] - weights[1][c]).values,

- y=len(x)*[i] + np.random.normal(0, 0.1, len(x)),

- mode='markers',

- marker={"color": x[c].values,

- "colorscale": self.tuning_colorscale(x[c]),

- "opacity": 0.7},

+ y=len(x) * [i] + np.random.normal(0, 0.1, len(x)),

+ mode="markers",

+ marker={"color": x[c].values, "colorscale": self.tuning_colorscale(x[c]), "opacity": 0.7},

name=c,

- text=len(x)*[c],

+ text=len(x) * [c],

hovertext=hv_text,

- hovertemplate="%{text}

" +

- "%{hovertext}

" +

- "",

+ hovertemplate="%{text}

" + "%{hovertext}

" + "",

showlegend=False,

- ), secondary_y=True

+ ),

+ secondary_y=True,

)

# Dummy invisible plot to add the color scale

@@ -595,15 +622,17 @@ def plot_pairwise_consistency(self, weights, x, top_features, methods, file_name

size=1,