
This one is tricky because Triton doesn't have a datetime dtype. The column could be cast to an integer first, but that would currently need to happen in the Workflow.
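As a rough illustration of the integer cast mentioned above, here is a minimal pandas sketch. The frame and the `event_time` column name are hypothetical, not taken from the example notebook, and epoch seconds is just one possible integer encoding. In an NVTabular Workflow, something like this could presumably be wrapped in a `LambdaOp`, but that is an assumption and is not shown or tested here.

```python
import pandas as pd

# Hypothetical data; "event_time" is an illustrative column name.
df = pd.DataFrame(
    {
        "user_id": [1, 2],
        "event_time": pd.to_datetime(
            ["2022-12-01 00:00:00", "2022-12-01 00:01:00"]
        ),
    }
)


def datetime_to_epoch_seconds(col: pd.Series) -> pd.Series:
    """Convert a datetime column to whole seconds since the Unix epoch."""
    return (col - pd.Timestamp("1970-01-01")) // pd.Timedelta(seconds=1)


# After this step the column is a plain integer column, which maps onto
# an integer dtype that Triton supports (e.g. INT64).
df["event_time"] = datetime_to_epoch_seconds(df["event_time"])
print(df.dtypes)
```

The trade-off is that the model and any downstream consumers then see an integer rather than a timestamp, so the unit (seconds here) has to be agreed on wherever the value is decoded.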

Bug description

Steps/Code to reproduce bug

I ran this example from Merlin Models, up to and including the cell where I train the model:

Subsequently, I attempted to export the ensemble to perform inference on Triton using the following code:

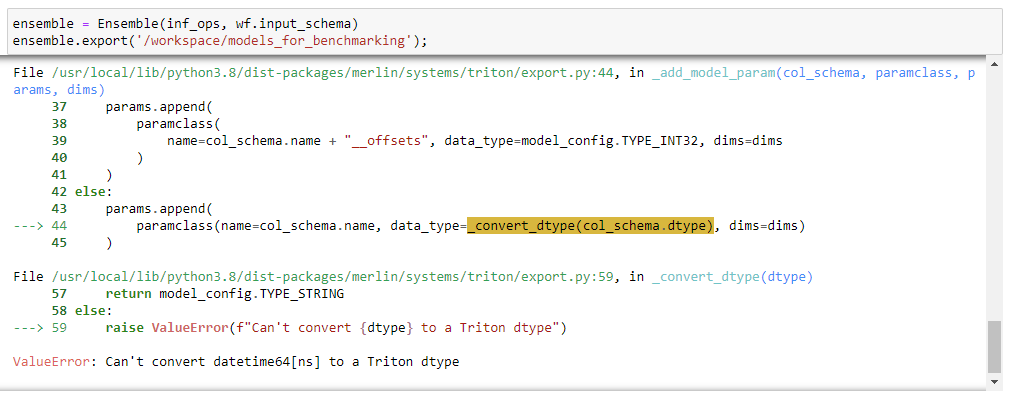

The following error occurs:

Expected behavior

The error doesn't occur and I am able to export the ensemble.

Environment details

I am running the 22.12 TensorFlow container with all repos updated to current main.

Additional context
