diff --git a/lab1/Part1_TensorFlow.ipynb b/lab1/Part1_TensorFlow.ipynb
index c1ec0165..c4130494 100644
--- a/lab1/Part1_TensorFlow.ipynb
+++ b/lab1/Part1_TensorFlow.ipynb
@@ -1,2 +1,2 @@
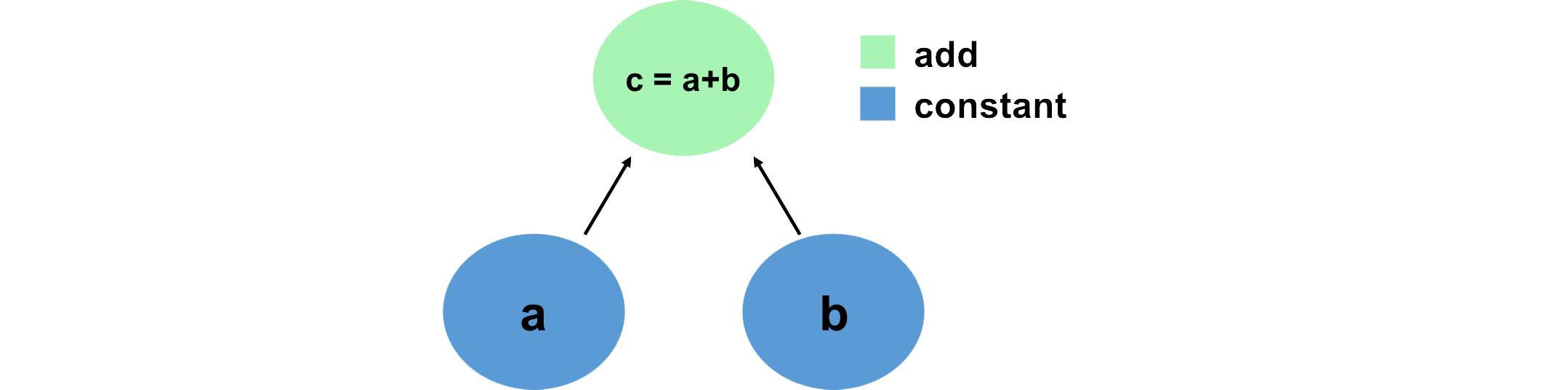

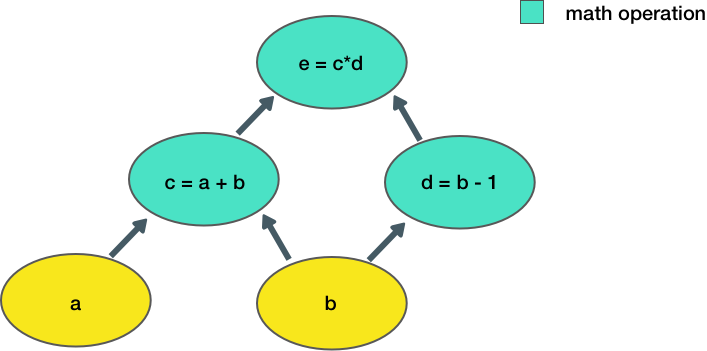

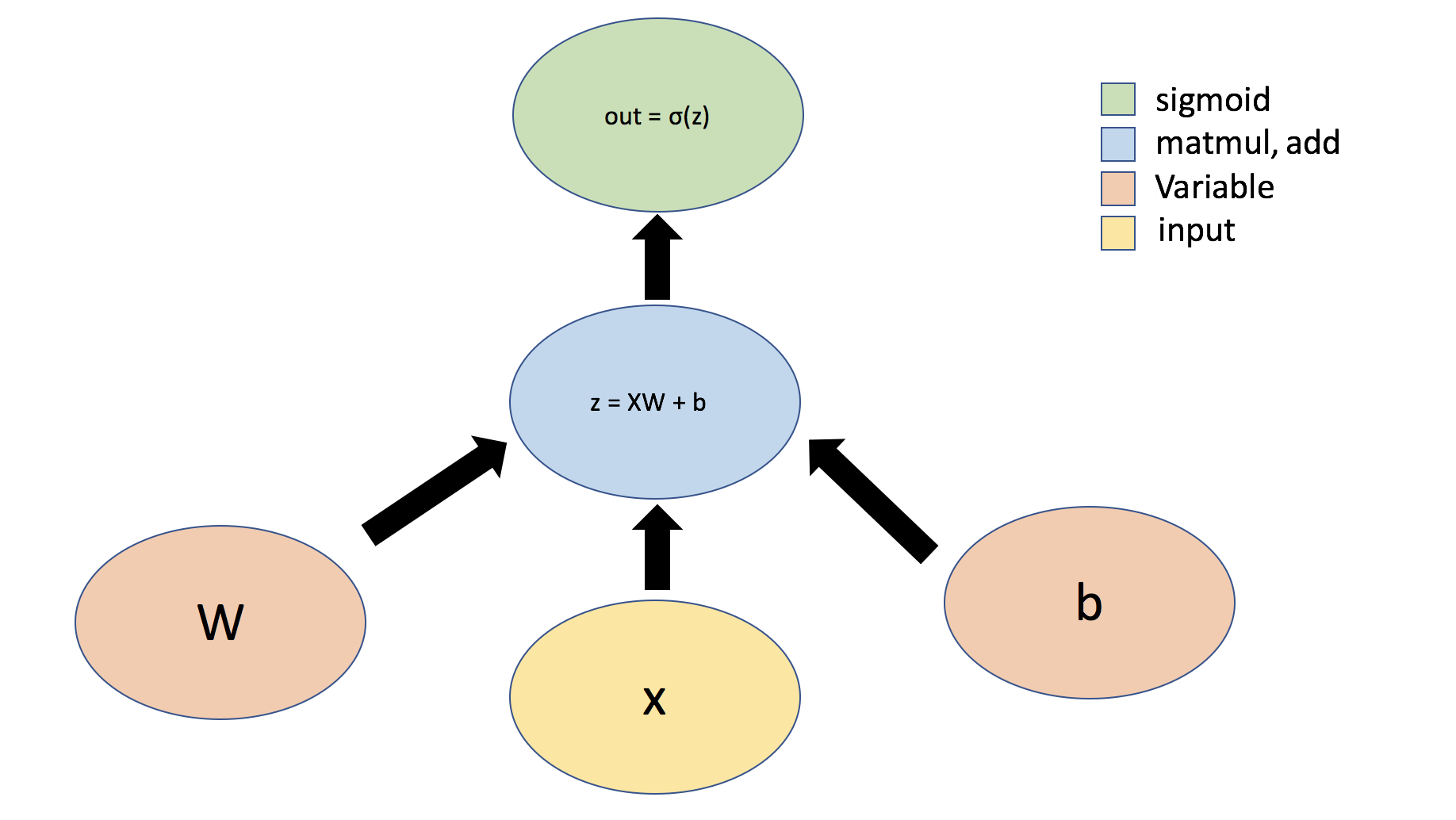

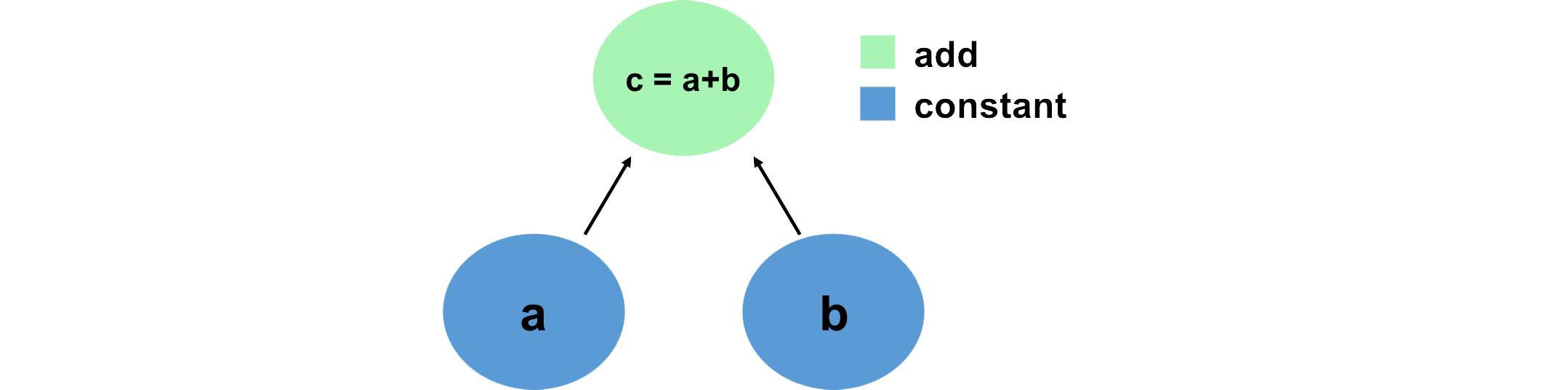

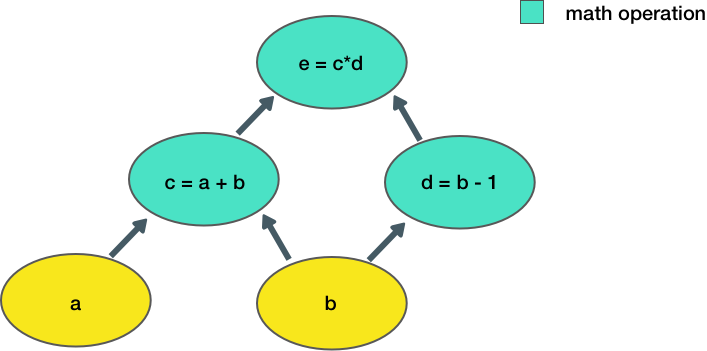

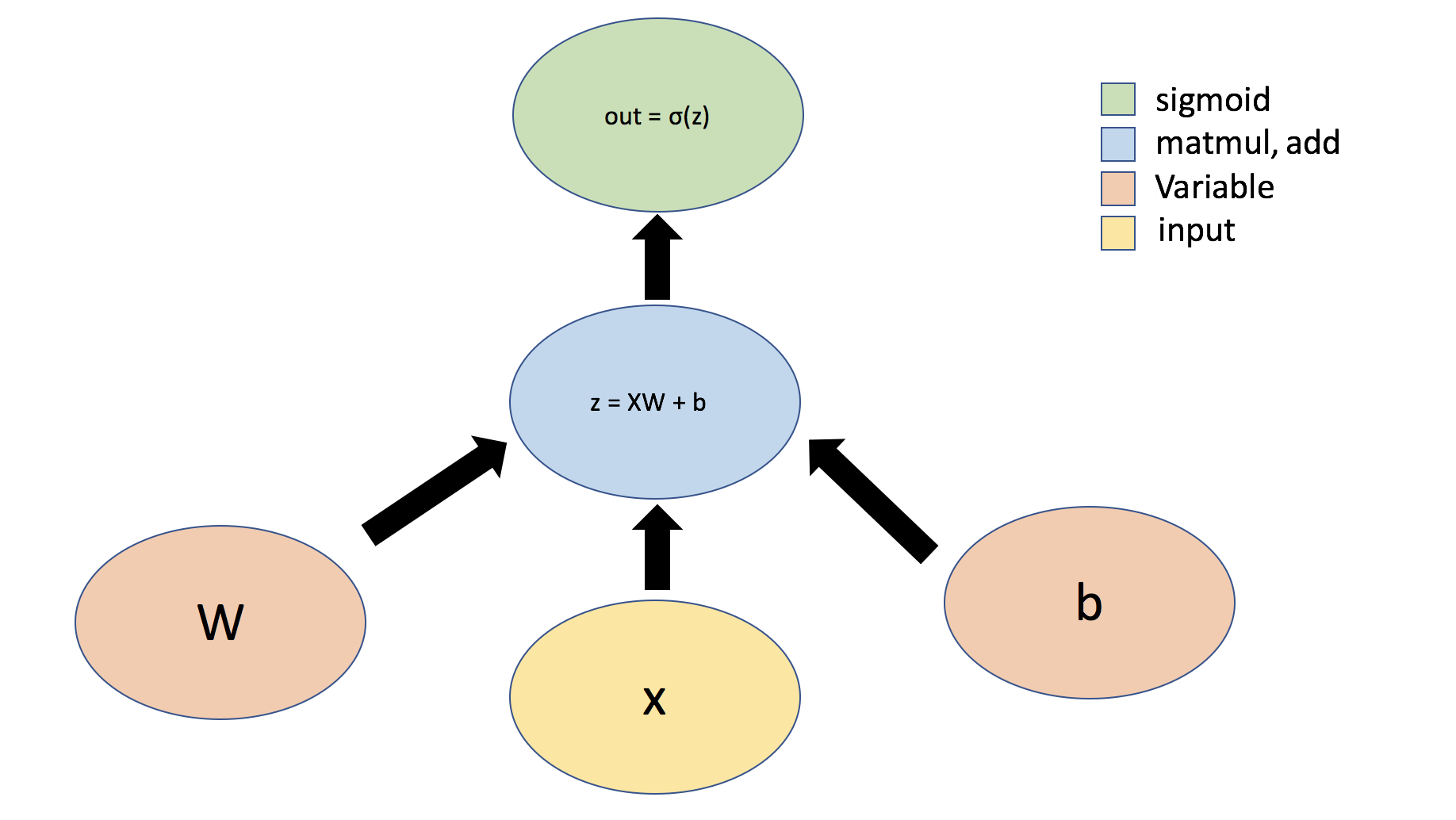

-{"nbformat":4,"nbformat_minor":0,"metadata":{"colab":{"name":"Part1_TensorFlow.ipynb","provenance":[{"file_id":"https://github.com/aamini/introtodeeplearning/blob/master/lab1/Part1_tensorflow_solution.ipynb","timestamp":1577671276005}],"collapsed_sections":["WBk0ZDWY-ff8"]},"kernelspec":{"name":"python3","display_name":"Python 3"},"accelerator":"GPU"},"cells":[{"cell_type":"markdown","metadata":{"id":"WBk0ZDWY-ff8","colab_type":"text"},"source":["\n","\n","# Copyright Information\n"]},{"cell_type":"code","metadata":{"id":"3eI6DUic-6jo","colab_type":"code","colab":{}},"source":["# Copyright 2020 MIT 6.S191 Introduction to Deep Learning. All Rights Reserved.\n","# \n","# Licensed under the MIT License. You may not use this file except in compliance\n","# with the License. Use and/or modification of this code outside of 6.S191 must\n","# reference:\n","#\n","# © MIT 6.S191: Introduction to Deep Learning\n","# http://introtodeeplearning.com\n","#"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"57knM8jrYZ2t","colab_type":"text"},"source":["# Lab 1: Intro to TensorFlow and Music Generation with RNNs\n","\n","In this lab, you'll get exposure to using TensorFlow and learn how it can be used for solving deep learning tasks. Go through the code and run each cell. Along the way, you'll encounter several ***TODO*** blocks -- follow the instructions to fill them out before running those cells and continuing.\n","\n","\n","# Part 1: Intro to TensorFlow\n","\n","## 0.1 Install TensorFlow\n","\n","TensorFlow is a software library extensively used in machine learning. Here we'll learn how computations are represented and how to define a simple neural network in TensorFlow. For all the labs in 6.S191 2020, we'll be using the latest version of TensorFlow, TensorFlow 2, which affords great flexibility and the ability to imperatively execute operations, just like in Python. 
You'll notice that TensorFlow 2 is quite similar to Python in its syntax and imperative execution. Let's install TensorFlow and a couple of dependencies.\n"]},{"cell_type":"code","metadata":{"id":"LkaimNJfYZ2w","colab_type":"code","colab":{}},"source":["%tensorflow_version 2.x\n","import tensorflow as tf\n","\n","# Download and import the MIT 6.S191 package\n","!pip install mitdeeplearning\n","import mitdeeplearning as mdl\n","\n","import numpy as np\n","import matplotlib.pyplot as plt"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"2QNMcdP4m3Vs","colab_type":"text"},"source":["## 1.1 Why is TensorFlow called TensorFlow?\n","\n","TensorFlow is called 'TensorFlow' because it handles the flow (node/mathematical operation) of Tensors, which are data structures that you can think of as multi-dimensional arrays. Tensors are represented as n-dimensional arrays of base dataypes such as a string or integer -- they provide a way to generalize vectors and matrices to higher dimensions.\n","\n","The ```shape``` of a Tensor defines its number of dimensions and the size of each dimension. 
The ```rank``` of a Tensor provides the number of dimensions (n-dimensions) -- you can also think of this as the Tensor's order or degree.\n","\n","Let's first look at 0-d Tensors, of which a scalar is an example:"]},{"cell_type":"code","metadata":{"id":"tFxztZQInlAB","colab_type":"code","colab":{}},"source":["sport = tf.constant(\"Tennis\", tf.string)\n","number = tf.constant(1.41421356237, tf.float64)\n","\n","print(\"`sport` is a {}-d Tensor\".format(tf.rank(sport).numpy()))\n","print(\"`number` is a {}-d Tensor\".format(tf.rank(number).numpy()))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"-dljcPUcoJZ6","colab_type":"text"},"source":["Vectors and lists can be used to create 1-d Tensors:"]},{"cell_type":"code","metadata":{"id":"oaHXABe8oPcO","colab_type":"code","colab":{}},"source":["sports = tf.constant([\"Tennis\", \"Basketball\"], tf.string)\n","numbers = tf.constant([3.141592, 1.414213, 2.71821], tf.float64)\n","\n","print(\"`sports` is a {}-d Tensor with shape: {}\".format(tf.rank(sports).numpy(), tf.shape(sports)))\n","print(\"`numbers` is a {}-d Tensor with shape: {}\".format(tf.rank(numbers).numpy(), tf.shape(numbers)))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"gvffwkvtodLP","colab_type":"text"},"source":["Next we consider creating 2-d (i.e., matrices) and higher-rank Tensors. For examples, in future labs involving image processing and computer vision, we will use 4-d Tensors. 
Here the dimensions correspond to the number of example images in our batch, image height, image width, and the number of color channels."]},{"cell_type":"code","metadata":{"id":"tFeBBe1IouS3","colab_type":"code","colab":{}},"source":["### Defining higher-order Tensors ###\n","\n","'''TODO: Define a 2-d Tensor'''\n","matrix = # TODO\n","\n","assert isinstance(matrix, tf.Tensor), \"matrix must be a tf Tensor object\"\n","assert tf.rank(matrix).numpy() == 2"],"execution_count":0,"outputs":[]},{"cell_type":"code","metadata":{"id":"Zv1fTn_Ya_cz","colab_type":"code","colab":{}},"source":["'''TODO: Define a 4-d Tensor.'''\n","# Use tf.zeros to initialize a 4-d Tensor of zeros with size 10 x 256 x 256 x 3. \n","# You can think of this as 10 images where each image is RGB 256 x 256.\n","images = # TODO\n","\n","assert isinstance(images, tf.Tensor), \"matrix must be a tf Tensor object\"\n","assert tf.rank(images).numpy() == 4, \"matrix must be of rank 4\"\n","assert tf.shape(images).numpy().tolist() == [10, 256, 256, 3], \"matrix is incorrect shape\""],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"wkaCDOGapMyl","colab_type":"text"},"source":["As you have seen, the ```shape``` of a Tensor provides the number of elements in each Tensor dimension. The ```shape``` is quite useful, and we'll use it often. 
You can also use slicing to access subtensors within a higher-rank Tensor:"]},{"cell_type":"code","metadata":{"id":"FhaufyObuLEG","colab_type":"code","colab":{}},"source":["row_vector = matrix[1]\n","column_vector = matrix[:,2]\n","scalar = matrix[1, 2]\n","\n","print(\"`row_vector`: {}\".format(row_vector.numpy()))\n","print(\"`column_vector`: {}\".format(column_vector.numpy()))\n","print(\"`scalar`: {}\".format(scalar.numpy()))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"iD3VO-LZYZ2z","colab_type":"text"},"source":["## 1.2 Computations on Tensors\n","\n","A convenient way to think about and visualize computations in TensorFlow is in terms of graphs. We can define this graph in terms of Tensors, which hold data, and the mathematical operations that act on these Tensors in some order. Let's look at a simple example, and define this computation using TensorFlow:\n","\n",""]},{"cell_type":"code","metadata":{"id":"X_YJrZsxYZ2z","colab_type":"code","colab":{}},"source":["# Create the nodes in the graph, and initialize values\n","a = tf.constant(15)\n","b = tf.constant(61)\n","\n","# Add them!\n","c1 = tf.add(a,b)\n","c2 = a + b # TensorFlow overrides the \"+\" operation so that it is able to act on Tensors\n","print(c1)\n","print(c2)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"Mbfv_QOiYZ23","colab_type":"text"},"source":["Notice how we've created a computation graph consisting of TensorFlow operations, and how the output is a Tensor with value 76 -- we've just created a computation graph consisting of operations, and it's executed them and given us back the result.\n","\n","Now let's consider a slightly more complicated example:\n","\n","\n","\n","Here, we take two inputs, `a, b`, and compute an output `e`. 
Each node in the graph represents an operation that takes some input, does some computation, and passes its output to another node.\n","\n","Let's define a simple function in TensorFlow to construct this computation function:"]},{"cell_type":"code","metadata":{"scrolled":true,"id":"PJnfzpWyYZ23","colab_type":"code","colab":{}},"source":["### Defining Tensor computations ###\n","\n","# Construct a simple computation function\n","def func(a,b):\n"," '''TODO: Define the operation for c, d, e (use tf.add, tf.subtract, tf.multiply).'''\n"," c = # TODO\n"," d = # TODO\n"," e = # TODO\n"," return e"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"AwrRfDMS2-oy","colab_type":"text"},"source":["Now, we can call this function to execute the computation graph given some inputs `a,b`:"]},{"cell_type":"code","metadata":{"id":"pnwsf8w2uF7p","colab_type":"code","colab":{}},"source":["# Consider example values for a,b\n","a, b = 1.5, 2.5\n","# Execute the computation\n","e_out = func(a,b)\n","print(e_out)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"6HqgUIUhYZ29","colab_type":"text"},"source":["Notice how our output is a Tensor with value defined by the output of the computation, and that the output has no shape as it is a single scalar value."]},{"cell_type":"markdown","metadata":{"id":"1h4o9Bb0YZ29","colab_type":"text"},"source":["## 1.3 Neural networks in TensorFlow\n","We can also define neural networks in TensorFlow. TensorFlow uses a high-level API called [Keras](https://www.tensorflow.org/guide/keras) that provides a powerful, intuitive framework for building and training deep learning models.\n","\n","Let's first consider the example of a simple perceptron defined by just one dense layer: $ y = \\sigma(Wx + b)$, where $W$ represents a matrix of weights, $b$ is a bias, $x$ is the input, $\\sigma$ is the sigmoid activation function, and $y$ is the output. 
We can also visualize this operation using a graph: \n","\n","\n","\n","Tensors can flow through abstract types called [```Layers```](https://www.tensorflow.org/api_docs/python/tf/keras/layers/Layer) -- the building blocks of neural networks. ```Layers``` implement common neural networks operations, and are used to update weights, compute losses, and define inter-layer connectivity. We will first define a ```Layer``` to implement the simple perceptron defined above."]},{"cell_type":"code","metadata":{"id":"HutbJk-1kHPh","colab_type":"code","colab":{}},"source":["### Defining a network Layer ###\n","\n","# n_output_nodes: number of output nodes\n","# input_shape: shape of the input\n","# x: input to the layer\n","\n","class OurDenseLayer(tf.keras.layers.Layer):\n"," def __init__(self, n_output_nodes):\n"," super(OurDenseLayer, self).__init__()\n"," self.n_output_nodes = n_output_nodes\n","\n"," def build(self, input_shape):\n"," d = int(input_shape[-1])\n"," # Define and initialize parameters: a weight matrix W and bias b\n"," # Note that parameter initialization is random!\n"," self.W = self.add_weight(\"weight\", shape=[d, self.n_output_nodes]) # note the dimensionality\n"," self.b = self.add_weight(\"bias\", shape=[1, self.n_output_nodes]) # note the dimensionality\n","\n"," def call(self, x):\n"," '''TODO: define the operation for z (hint: use tf.matmul)'''\n"," z = # TODO\n","\n"," '''TODO: define the operation for out (hint: use tf.sigmoid)'''\n"," y = # TODO\n"," return y\n","\n","# Since layer parameters are initialized randomly, we will set a random seed for reproducibility\n","tf.random.set_seed(1)\n","layer = OurDenseLayer(3)\n","layer.build((1,2))\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","y = layer.call(x_input)\n","\n","# test the output!\n","print(y.numpy())\n","mdl.lab1.test_custom_dense_layer_output(y)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"Jt1FgM7qYZ3D","colab_type":"text"},"source":["Conveniently, 
TensorFlow has defined a number of ```Layers``` that are commonly used in neural networks, for example a [```Dense```](https://www.tensorflow.org/api_docs/python/tf/keras/layers/Dense?version=stable). Now, instead of using a single ```Layer``` to define our simple neural network, we'll use the [`Sequential`](https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/keras/Sequential) model from Keras and a single [`Dense` ](https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/keras/layers/Dense) layer to define our network. With the `Sequential` API, you can readily create neural networks by stacking together layers like building blocks. "]},{"cell_type":"code","metadata":{"id":"7WXTpmoL6TDz","colab_type":"code","colab":{}},"source":["### Defining a neural network using the Sequential API ###\n","\n","# Import relevant packages\n","from tensorflow.keras import Sequential\n","from tensorflow.keras.layers import Dense\n","\n","# Define the number of outputs\n","n_output_nodes = 3\n","\n","# First define the model \n","model = Sequential()\n","\n","'''TODO: Define a dense (fully connected) layer to compute z'''\n","# Remember: dense layers are defined by the parameters W and b!\n","# You can read more about the initialization of W and b in the TF documentation :) \n","# https://www.tensorflow.org/api_docs/python/tf/keras/layers/Dense?version=stable\n","dense_layer = # TODO\n","\n","# Add the dense layer to the model\n","model.add(dense_layer)\n"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"HDGcwYfUyR-U","colab_type":"text"},"source":["That's it! We've defined our model using the Sequential API. 
Now, we can test it out using an example input:"]},{"cell_type":"code","metadata":{"id":"sg23OczByRDb","colab_type":"code","colab":{}},"source":["# Test model with example input\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","\n","'''TODO: feed input into the model and predict the output!'''\n","model_output = # TODO\n","print(model_output)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"596NvsOOtr9F","colab_type":"text"},"source":["In addition to defining models using the `Sequential` API, we can also define neural networks by directly subclassing the [`Model`](https://www.tensorflow.org/api_docs/python/tf/keras/Model?version=stable) class, which groups layers together to enable model training and inference. The `Model` class captures what we refer to as a \"model\" or as a \"network\". Using Subclassing, we can create a class for our model, and then define the forward pass through the network using the `call` function. Subclassing affords the flexibility to define custom layers, custom training loops, custom activation functions, and custom models. Let's define the same neural network as above now using Subclassing rather than the `Sequential` model."]},{"cell_type":"code","metadata":{"id":"K4aCflPVyViD","colab_type":"code","colab":{}},"source":["### Defining a model using subclassing ###\n","\n","from tensorflow.keras import Model\n","from tensorflow.keras.layers import Dense\n","\n","class SubclassModel(tf.keras.Model):\n","\n"," # In __init__, we define the Model's layers\n"," def __init__(self, n_output_nodes):\n"," super(SubclassModel, self).__init__()\n"," '''TODO: Our model consists of a single Dense layer. 
Define this layer.''' \n"," self.dense_layer = '''TODO: Dense Layer'''\n","\n"," # In the call function, we define the Model's forward pass.\n"," def call(self, inputs):\n"," return self.dense_layer(inputs)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"U0-lwHDk4irB","colab_type":"text"},"source":["Just like the model we built using the `Sequential` API, let's test out our `SubclassModel` using an example input.\n","\n"]},{"cell_type":"code","metadata":{"id":"LhB34RA-4gXb","colab_type":"code","colab":{}},"source":["n_output_nodes = 3\n","model = SubclassModel(n_output_nodes)\n","\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","\n","print(model.call(x_input))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"HTIFMJLAzsyE","colab_type":"text"},"source":["Importantly, Subclassing affords us a lot of flexibility to define custom models. For example, we can use boolean arguments in the `call` function to specify different network behaviors, for example different behaviors during training and inference. Let's suppose under some instances we want our network to simply output the input, without any perturbation. 
We define a boolean argument `isidentity` to control this behavior:"]},{"cell_type":"code","metadata":{"id":"P7jzGX5D1xT5","colab_type":"code","colab":{}},"source":["### Defining a model using subclassing and specifying custom behavior ###\n","\n","from tensorflow.keras import Model\n","from tensorflow.keras.layers import Dense\n","\n","class IdentityModel(tf.keras.Model):\n","\n"," # As before, in __init__ we define the Model's layers\n"," # Since our desired behavior involves the forward pass, this part is unchanged\n"," def __init__(self, n_output_nodes):\n"," super(IdentityModel, self).__init__()\n"," self.dense_layer = tf.keras.layers.Dense(n_output_nodes, activation='sigmoid')\n","\n"," '''TODO: Implement the behavior where the network outputs the input, unchanged, \n"," under control of the isidentity argument.'''\n"," def call(self, inputs, isidentity=False):\n"," x = self.dense_layer(inputs)\n"," '''TODO: Implement identity behavior'''"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"Ku4rcCGx5T3y","colab_type":"text"},"source":["Let's test this behavior:"]},{"cell_type":"code","metadata":{"id":"NzC0mgbk5dp2","colab_type":"code","colab":{}},"source":["n_output_nodes = 3\n","model = IdentityModel(n_output_nodes)\n","\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","'''TODO: pass the input into the model and call with and without the input identity option.'''\n","out_activate = # TODO\n","out_identity = # TODO\n","\n","print(\"Network output with activation: {}; network identity output: {}\".format(out_activate.numpy(), out_identity.numpy()))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"7V1dEqdk6VI5","colab_type":"text"},"source":["Now that we have learned how to define `Layers` as well as neural networks in TensorFlow using both the `Sequential` and Subclassing APIs, we're ready to turn our attention to how to actually implement network training with 
backpropagation."]},{"cell_type":"markdown","metadata":{"id":"dQwDhKn8kbO2","colab_type":"text"},"source":["## 1.4 Automatic differentiation in TensorFlow\n","\n","[Automatic differentiation](https://en.wikipedia.org/wiki/Automatic_differentiation)\n","is one of the most important parts of TensorFlow and is the backbone of training with \n","[backpropagation](https://en.wikipedia.org/wiki/Backpropagation). We will use the TensorFlow GradientTape [`tf.GradientTape`](https://www.tensorflow.org/api_docs/python/tf/GradientTape?version=stable) to trace operations for computing gradients later. \n","\n","When a forward pass is made through the network, all forward-pass operations get recorded to a \"tape\"; then, to compute the gradient, the tape is played backwards. By default, the tape is discarded after it is played backwards; this means that a particular `tf.GradientTape` can only\n","compute one gradient, and subsequent calls throw a runtime error. However, we can compute multiple gradients over the same computation by creating a ```persistent``` gradient tape. \n","\n","First, we will look at how we can compute gradients using GradientTape and access them for computation. We define the simple function $ y = x^2$ and compute the gradient:"]},{"cell_type":"code","metadata":{"id":"tdkqk8pw5yJM","colab_type":"code","colab":{}},"source":["### Gradient computation with GradientTape ###\n","\n","# y = x^2\n","# Example: x = 3.0\n","x = tf.Variable(3.0)\n","\n","# Initiate the gradient tape\n","with tf.GradientTape() as tape:\n"," # Define the function\n"," y = x * x\n","# Access the gradient -- derivative of y with respect to x\n","dy_dx = tape.gradient(y, x)\n","\n","assert dy_dx.numpy() == 6.0"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"JhU5metS5xF3","colab_type":"text"},"source":["In training neural networks, we use differentiation and stochastic gradient descent (SGD) to optimize a loss function. 
Now that we have a sense of how `GradientTape` can be used to compute and access derivatives, we will look at an example where we use automatic differentiation and SGD to find the minimum of $L=(x-x_f)^2$. Here $x_f$ is a variable for a desired value we are trying to optimize for; $L$ represents a loss that we are trying to minimize. While we can clearly solve this problem analytically ($x_{min}=x_f$), considering how we can compute this using `GradientTape` sets us up nicely for future labs where we use gradient descent to optimize entire neural network losses."]},{"cell_type":"code","metadata":{"attributes":{"classes":["py"],"id":""},"colab_type":"code","id":"7g1yWiSXqEf-","colab":{}},"source":["### Function minimization with automatic differentiation and SGD ###\n","\n","# Initialize a random value for our initial x\n","x = tf.Variable([tf.random.normal([1])])\n","print(\"Initializing x={}\".format(x.numpy()))\n","\n","learning_rate = 1e-2 # learning rate for SGD\n","history = []\n","# Define the target value\n","x_f = 4\n","\n","# We will run SGD for a number of iterations. 
At each iteration, we compute the loss, \n","# compute the derivative of the loss with respect to x, and perform the SGD update.\n","for i in range(500):\n"," with tf.GradientTape() as tape:\n"," '''TODO: define the loss as described above'''\n"," loss = # TODO\n","\n"," # loss minimization using gradient tape\n"," grad = tape.gradient(loss, x) # compute the derivative of the loss with respect to x\n"," new_x = x - learning_rate*grad # sgd update\n"," x.assign(new_x) # update the value of x\n"," history.append(x.numpy()[0])\n","\n","# Plot the evolution of x as we optimize towards x_f!\n","plt.plot(history)\n","plt.plot([0, 500],[x_f,x_f])\n","plt.legend(('Predicted', 'True'))\n","plt.xlabel('Iteration')\n","plt.ylabel('x value')"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"pC7czCwk3ceH","colab_type":"text"},"source":["`GradientTape` provides an extremely flexible framework for automatic differentiation. In order to back propagate errors through a neural network, we track forward passes on the Tape, use this information to determine the gradients, and then use these gradients for optimization using SGD."]}]}
+{"nbformat":4,"nbformat_minor":0,"metadata":{"colab":{"name":"Part1_TensorFlow.ipynb","provenance":[{"file_id":"https://github.com/aamini/introtodeeplearning/blob/master/lab1/Part1_tensorflow_solution.ipynb","timestamp":1577671276005}],"collapsed_sections":["WBk0ZDWY-ff8"]},"kernelspec":{"name":"python3","display_name":"Python 3"},"accelerator":"GPU"},"cells":[{"cell_type":"markdown","metadata":{"id":"WBk0ZDWY-ff8","colab_type":"text"},"source":["\n","\n","# Copyright Information\n"]},{"cell_type":"code","metadata":{"id":"3eI6DUic-6jo","colab_type":"code","colab":{}},"source":["# Copyright 2020 MIT 6.S191 Introduction to Deep Learning. All Rights Reserved.\n","# \n","# Licensed under the MIT License. You may not use this file except in compliance\n","# with the License. Use and/or modification of this code outside of 6.S191 must\n","# reference:\n","#\n","# © MIT 6.S191: Introduction to Deep Learning\n","# http://introtodeeplearning.com\n","#"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"57knM8jrYZ2t","colab_type":"text"},"source":["# Lab 1: Intro to TensorFlow and Music Generation with RNNs\n","\n","In this lab, you'll get exposure to using TensorFlow and learn how it can be used for solving deep learning tasks. Go through the code and run each cell. Along the way, you'll encounter several ***TODO*** blocks -- follow the instructions to fill them out before running those cells and continuing.\n","\n","\n","# Part 1: Intro to TensorFlow\n","\n","## 0.1 Install TensorFlow\n","\n","TensorFlow is a software library extensively used in machine learning. Here we'll learn how computations are represented and how to define a simple neural network in TensorFlow. For all the labs in 6.S191 2020, we'll be using the latest version of TensorFlow, TensorFlow 2, which affords great flexibility and the ability to imperatively execute operations, just like in Python. 
You'll notice that TensorFlow 2 is quite similar to Python in its syntax and imperative execution. Let's install TensorFlow and a couple of dependencies.\n"]},{"cell_type":"code","metadata":{"id":"LkaimNJfYZ2w","colab_type":"code","colab":{}},"source":["%tensorflow_version 2.x\n","import tensorflow as tf\n","\n","# Download and import the MIT 6.S191 package\n","!pip install mitdeeplearning\n","import mitdeeplearning as mdl\n","\n","import numpy as np\n","import matplotlib.pyplot as plt"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"2QNMcdP4m3Vs","colab_type":"text"},"source":["## 1.1 Why is TensorFlow called TensorFlow?\n","\n","TensorFlow is called 'TensorFlow' because it handles the flow (node/mathematical operation) of Tensors, which are data structures that you can think of as multi-dimensional arrays. Tensors are represented as n-dimensional arrays of base dataypes such as a string or integer -- they provide a way to generalize vectors and matrices to higher dimensions.\n","\n","The ```shape``` of a Tensor defines its number of dimensions and the size of each dimension. 
The ```rank``` of a Tensor provides the number of dimensions (n-dimensions) -- you can also think of this as the Tensor's order or degree.\n","\n","Let's first look at 0-d Tensors, of which a scalar is an example:"]},{"cell_type":"code","metadata":{"id":"tFxztZQInlAB","colab_type":"code","colab":{}},"source":["sport = tf.constant(\"Tennis\", tf.string)\n","number = tf.constant(1.41421356237, tf.float64)\n","\n","print(\"`sport` is a {}-d Tensor\".format(tf.rank(sport).numpy()))\n","print(\"`number` is a {}-d Tensor\".format(tf.rank(number).numpy()))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"-dljcPUcoJZ6","colab_type":"text"},"source":["Vectors and lists can be used to create 1-d Tensors:"]},{"cell_type":"code","metadata":{"id":"oaHXABe8oPcO","colab_type":"code","colab":{}},"source":["sports = tf.constant([\"Tennis\", \"Basketball\"], tf.string)\n","numbers = tf.constant([3.141592, 1.414213, 2.71821], tf.float64)\n","\n","print(\"`sports` is a {}-d Tensor with shape: {}\".format(tf.rank(sports).numpy(), tf.shape(sports)))\n","print(\"`numbers` is a {}-d Tensor with shape: {}\".format(tf.rank(numbers).numpy(), tf.shape(numbers)))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"gvffwkvtodLP","colab_type":"text"},"source":["Next we consider creating 2-d (i.e., matrices) and higher-rank Tensors. For examples, in future labs involving image processing and computer vision, we will use 4-d Tensors. 
Here the dimensions correspond to the number of example images in our batch, image height, image width, and the number of color channels."]},{"cell_type":"code","metadata":{"id":"tFeBBe1IouS3","colab_type":"code","colab":{}},"source":["### Defining higher-order Tensors ###\n","\n","'''TODO: Define a 2-d Tensor'''\n","matrix = # TODO\n","\n","assert isinstance(matrix, tf.Tensor), \"matrix must be a tf Tensor object\"\n","assert tf.rank(matrix).numpy() == 2"],"execution_count":0,"outputs":[]},{"cell_type":"code","metadata":{"id":"Zv1fTn_Ya_cz","colab_type":"code","colab":{}},"source":["'''TODO: Define a 4-d Tensor.'''\n","# Use tf.zeros to initialize a 4-d Tensor of zeros with size 10 x 256 x 256 x 3. \n","# You can think of this as 10 images where each image is RGB 256 x 256.\n","images = # TODO\n","\n","assert isinstance(images, tf.Tensor), \"matrix must be a tf Tensor object\"\n","assert tf.rank(images).numpy() == 4, \"matrix must be of rank 4\"\n","assert tf.shape(images).numpy().tolist() == [10, 256, 256, 3], \"matrix is incorrect shape\""],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"wkaCDOGapMyl","colab_type":"text"},"source":["As you have seen, the ```shape``` of a Tensor provides the number of elements in each Tensor dimension. The ```shape``` is quite useful, and we'll use it often. 
You can also use slicing to access subtensors within a higher-rank Tensor:"]},{"cell_type":"code","metadata":{"id":"FhaufyObuLEG","colab_type":"code","colab":{}},"source":["row_vector = images[1]\n","column_vector = images[:,2]\n","scalar = images[1, 2]\n","\n","print(\"`row_vector`: {}\".format(row_vector.numpy()))\n","print(\"`column_vector`: {}\".format(column_vector.numpy()))\n","print(\"`scalar`: {}\".format(scalar.numpy()))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"iD3VO-LZYZ2z","colab_type":"text"},"source":["## 1.2 Computations on Tensors\n","\n","A convenient way to think about and visualize computations in TensorFlow is in terms of graphs. We can define this graph in terms of Tensors, which hold data, and the mathematical operations that act on these Tensors in some order. Let's look at a simple example, and define this computation using TensorFlow:\n","\n",""]},{"cell_type":"code","metadata":{"id":"X_YJrZsxYZ2z","colab_type":"code","colab":{}},"source":["# Create the nodes in the graph, and initialize values\n","a = tf.constant(15)\n","b = tf.constant(61)\n","\n","# Add them!\n","c1 = tf.add(a,b)\n","c2 = a + b # TensorFlow overrides the \"+\" operation so that it is able to act on Tensors\n","print(c1)\n","print(c2)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"Mbfv_QOiYZ23","colab_type":"text"},"source":["Notice how we've created a computation graph consisting of TensorFlow operations, and how the output is a Tensor with value 76 -- we've just created a computation graph consisting of operations, and it's executed them and given us back the result.\n","\n","Now let's consider a slightly more complicated example:\n","\n","\n","\n","Here, we take two inputs, `a, b`, and compute an output `e`. 
Each node in the graph represents an operation that takes some input, does some computation, and passes its output to another node.\n","\n","Let's define a simple function in TensorFlow to construct this computation function:"]},{"cell_type":"code","metadata":{"scrolled":true,"id":"PJnfzpWyYZ23","colab_type":"code","colab":{}},"source":["### Defining Tensor computations ###\n","\n","# Construct a simple computation function\n","def func(a,b):\n"," '''TODO: Define the operation for c, d, e (use tf.add, tf.subtract, tf.multiply).'''\n"," c = # TODO\n"," d = # TODO\n"," e = # TODO\n"," return e"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"AwrRfDMS2-oy","colab_type":"text"},"source":["Now, we can call this function to execute the computation graph given some inputs `a,b`:"]},{"cell_type":"code","metadata":{"id":"pnwsf8w2uF7p","colab_type":"code","colab":{}},"source":["# Consider example values for a,b\n","a, b = 1.5, 2.5\n","# Execute the computation\n","e_out = func(a,b)\n","print(e_out)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"6HqgUIUhYZ29","colab_type":"text"},"source":["Notice how our output is a Tensor with value defined by the output of the computation, and that the output has no shape as it is a single scalar value."]},{"cell_type":"markdown","metadata":{"id":"1h4o9Bb0YZ29","colab_type":"text"},"source":["## 1.3 Neural networks in TensorFlow\n","We can also define neural networks in TensorFlow. TensorFlow uses a high-level API called [Keras](https://www.tensorflow.org/guide/keras) that provides a powerful, intuitive framework for building and training deep learning models.\n","\n","Let's first consider the example of a simple perceptron defined by just one dense layer: $ y = \\sigma(Wx + b)$, where $W$ represents a matrix of weights, $b$ is a bias, $x$ is the input, $\\sigma$ is the sigmoid activation function, and $y$ is the output. 
We can also visualize this operation using a graph: \n","\n","\n","\n","Tensors can flow through abstract types called [```Layers```](https://www.tensorflow.org/api_docs/python/tf/keras/layers/Layer) -- the building blocks of neural networks. ```Layers``` implement common neural networks operations, and are used to update weights, compute losses, and define inter-layer connectivity. We will first define a ```Layer``` to implement the simple perceptron defined above."]},{"cell_type":"code","metadata":{"id":"HutbJk-1kHPh","colab_type":"code","colab":{}},"source":["### Defining a network Layer ###\n","\n","# n_output_nodes: number of output nodes\n","# input_shape: shape of the input\n","# x: input to the layer\n","\n","class OurDenseLayer(tf.keras.layers.Layer):\n"," def __init__(self, n_output_nodes):\n"," super(OurDenseLayer, self).__init__()\n"," self.n_output_nodes = n_output_nodes\n","\n"," def build(self, input_shape):\n"," d = int(input_shape[-1])\n"," # Define and initialize parameters: a weight matrix W and bias b\n"," # Note that parameter initialization is random!\n"," self.W = self.add_weight(\"weight\", shape=[d, self.n_output_nodes]) # note the dimensionality\n"," self.b = self.add_weight(\"bias\", shape=[1, self.n_output_nodes]) # note the dimensionality\n","\n"," def call(self, x):\n"," '''TODO: define the operation for z (hint: use tf.matmul)'''\n"," z = # TODO\n","\n"," '''TODO: define the operation for out (hint: use tf.sigmoid)'''\n"," y = # TODO\n"," return y\n","\n","# Since layer parameters are initialized randomly, we will set a random seed for reproducibility\n","tf.random.set_seed(1)\n","layer = OurDenseLayer(3)\n","layer.build((1,2))\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","y = layer.call(x_input)\n","\n","# test the output!\n","print(y.numpy())\n","mdl.lab1.test_custom_dense_layer_output(y)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"Jt1FgM7qYZ3D","colab_type":"text"},"source":["Conveniently, 
TensorFlow has defined a number of ```Layers``` that are commonly used in neural networks, for example a [```Dense```](https://www.tensorflow.org/api_docs/python/tf/keras/layers/Dense?version=stable). Now, instead of using a single ```Layer``` to define our simple neural network, we'll use the [`Sequential`](https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/keras/Sequential) model from Keras and a single [`Dense` ](https://www.tensorflow.org/versions/r2.0/api_docs/python/tf/keras/layers/Dense) layer to define our network. With the `Sequential` API, you can readily create neural networks by stacking together layers like building blocks. "]},{"cell_type":"code","metadata":{"id":"7WXTpmoL6TDz","colab_type":"code","colab":{}},"source":["### Defining a neural network using the Sequential API ###\n","\n","# Import relevant packages\n","from tensorflow.keras import Sequential\n","from tensorflow.keras.layers import Dense\n","\n","# Define the number of outputs\n","n_output_nodes = 3\n","\n","# First define the model \n","model = Sequential()\n","\n","'''TODO: Define a dense (fully connected) layer to compute z'''\n","# Remember: dense layers are defined by the parameters W and b!\n","# You can read more about the initialization of W and b in the TF documentation :) \n","# https://www.tensorflow.org/api_docs/python/tf/keras/layers/Dense?version=stable\n","dense_layer = # TODO\n","\n","# Add the dense layer to the model\n","model.add(dense_layer)\n"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"HDGcwYfUyR-U","colab_type":"text"},"source":["That's it! We've defined our model using the Sequential API. 
Now, we can test it out using an example input:"]},{"cell_type":"code","metadata":{"id":"sg23OczByRDb","colab_type":"code","colab":{}},"source":["# Test model with example input\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","\n","'''TODO: feed input into the model and predict the output!'''\n","model_output = # TODO\n","print(model_output)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"596NvsOOtr9F","colab_type":"text"},"source":["In addition to defining models using the `Sequential` API, we can also define neural networks by directly subclassing the [`Model`](https://www.tensorflow.org/api_docs/python/tf/keras/Model?version=stable) class, which groups layers together to enable model training and inference. The `Model` class captures what we refer to as a \"model\" or as a \"network\". Using Subclassing, we can create a class for our model, and then define the forward pass through the network using the `call` function. Subclassing affords the flexibility to define custom layers, custom training loops, custom activation functions, and custom models. Let's define the same neural network as above now using Subclassing rather than the `Sequential` model."]},{"cell_type":"code","metadata":{"id":"K4aCflPVyViD","colab_type":"code","colab":{}},"source":["### Defining a model using subclassing ###\n","\n","from tensorflow.keras import Model\n","from tensorflow.keras.layers import Dense\n","\n","class SubclassModel(tf.keras.Model):\n","\n"," # In __init__, we define the Model's layers\n"," def __init__(self, n_output_nodes):\n"," super(SubclassModel, self).__init__()\n"," '''TODO: Our model consists of a single Dense layer. 
Define this layer.''' \n"," self.dense_layer = '''TODO: Dense Layer'''\n","\n"," # In the call function, we define the Model's forward pass.\n"," def call(self, inputs):\n"," return self.dense_layer(inputs)"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"U0-lwHDk4irB","colab_type":"text"},"source":["Just like the model we built using the `Sequential` API, let's test out our `SubclassModel` using an example input.\n","\n"]},{"cell_type":"code","metadata":{"id":"LhB34RA-4gXb","colab_type":"code","colab":{}},"source":["n_output_nodes = 3\n","model = SubclassModel(n_output_nodes)\n","\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","\n","print(model.call(x_input))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"HTIFMJLAzsyE","colab_type":"text"},"source":["Importantly, Subclassing affords us a lot of flexibility to define custom models. For example, we can use boolean arguments in the `call` function to specify different network behaviors, for example different behaviors during training and inference. Let's suppose under some instances we want our network to simply output the input, without any perturbation. 
We define a boolean argument `isidentity` to control this behavior:"]},{"cell_type":"code","metadata":{"id":"P7jzGX5D1xT5","colab_type":"code","colab":{}},"source":["### Defining a model using subclassing and specifying custom behavior ###\n","\n","from tensorflow.keras import Model\n","from tensorflow.keras.layers import Dense\n","\n","class IdentityModel(tf.keras.Model):\n","\n"," # As before, in __init__ we define the Model's layers\n"," # Since our desired behavior involves the forward pass, this part is unchanged\n"," def __init__(self, n_output_nodes):\n"," super(IdentityModel, self).__init__()\n"," self.dense_layer = tf.keras.layers.Dense(n_output_nodes, activation='sigmoid')\n","\n"," '''TODO: Implement the behavior where the network outputs the input, unchanged, \n"," under control of the isidentity argument.'''\n"," def call(self, inputs, isidentity=False):\n"," x = self.dense_layer(inputs)\n"," '''TODO: Implement identity behavior'''"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"Ku4rcCGx5T3y","colab_type":"text"},"source":["Let's test this behavior:"]},{"cell_type":"code","metadata":{"id":"NzC0mgbk5dp2","colab_type":"code","colab":{}},"source":["n_output_nodes = 3\n","model = IdentityModel(n_output_nodes)\n","\n","x_input = tf.constant([[1,2.]], shape=(1,2))\n","'''TODO: pass the input into the model and call with and without the input identity option.'''\n","out_activate = # TODO\n","out_identity = # TODO\n","\n","print(\"Network output with activation: {}; network identity output: {}\".format(out_activate.numpy(), out_identity.numpy()))"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"7V1dEqdk6VI5","colab_type":"text"},"source":["Now that we have learned how to define `Layers` as well as neural networks in TensorFlow using both the `Sequential` and Subclassing APIs, we're ready to turn our attention to how to actually implement network training with 
backpropagation."]},{"cell_type":"markdown","metadata":{"id":"dQwDhKn8kbO2","colab_type":"text"},"source":["## 1.4 Automatic differentiation in TensorFlow\n","\n","[Automatic differentiation](https://en.wikipedia.org/wiki/Automatic_differentiation)\n","is one of the most important parts of TensorFlow and is the backbone of training with \n","[backpropagation](https://en.wikipedia.org/wiki/Backpropagation). We will use the TensorFlow GradientTape [`tf.GradientTape`](https://www.tensorflow.org/api_docs/python/tf/GradientTape?version=stable) to trace operations for computing gradients later. \n","\n","When a forward pass is made through the network, all forward-pass operations get recorded to a \"tape\"; then, to compute the gradient, the tape is played backwards. By default, the tape is discarded after it is played backwards; this means that a particular `tf.GradientTape` can only\n","compute one gradient, and subsequent calls throw a runtime error. However, we can compute multiple gradients over the same computation by creating a ```persistent``` gradient tape. \n","\n","First, we will look at how we can compute gradients using GradientTape and access them for computation. We define the simple function $ y = x^2$ and compute the gradient:"]},{"cell_type":"code","metadata":{"id":"tdkqk8pw5yJM","colab_type":"code","colab":{}},"source":["### Gradient computation with GradientTape ###\n","\n","# y = x^2\n","# Example: x = 3.0\n","x = tf.Variable(3.0)\n","\n","# Initiate the gradient tape\n","with tf.GradientTape() as tape:\n"," # Define the function\n"," y = x * x\n","# Access the gradient -- derivative of y with respect to x\n","dy_dx = tape.gradient(y, x)\n","\n","assert dy_dx.numpy() == 6.0"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"JhU5metS5xF3","colab_type":"text"},"source":["In training neural networks, we use differentiation and stochastic gradient descent (SGD) to optimize a loss function. 
Now that we have a sense of how `GradientTape` can be used to compute and access derivatives, we will look at an example where we use automatic differentiation and SGD to find the minimum of $L=(x-x_f)^2$. Here $x_f$ is a variable for a desired value we are trying to optimize for; $L$ represents a loss that we are trying to minimize. While we can clearly solve this problem analytically ($x_{min}=x_f$), considering how we can compute this using `GradientTape` sets us up nicely for future labs where we use gradient descent to optimize entire neural network losses."]},{"cell_type":"code","metadata":{"attributes":{"classes":["py"],"id":""},"colab_type":"code","id":"7g1yWiSXqEf-","colab":{}},"source":["### Function minimization with automatic differentiation and SGD ###\n","\n","# Initialize a random value for our initial x\n","x = tf.Variable([tf.random.normal([1])])\n","print(\"Initializing x={}\".format(x.numpy()))\n","\n","learning_rate = 1e-2 # learning rate for SGD\n","history = []\n","# Define the target value\n","x_f = 4\n","\n","# We will run SGD for a number of iterations. 
At each iteration, we compute the loss, \n","# compute the derivative of the loss with respect to x, and perform the SGD update.\n","for i in range(500):\n"," with tf.GradientTape() as tape:\n"," '''TODO: define the loss as described above'''\n"," loss = # TODO\n","\n"," # loss minimization using gradient tape\n"," grad = tape.gradient(loss, x) # compute the derivative of the loss with respect to x\n"," new_x = x - learning_rate*grad # sgd update\n"," x.assign(new_x) # update the value of x\n"," history.append(x.numpy()[0])\n","\n","# Plot the evolution of x as we optimize towards x_f!\n","plt.plot(history)\n","plt.plot([0, 500],[x_f,x_f])\n","plt.legend(('Predicted', 'True'))\n","plt.xlabel('Iteration')\n","plt.ylabel('x value')"],"execution_count":0,"outputs":[]},{"cell_type":"markdown","metadata":{"id":"pC7czCwk3ceH","colab_type":"text"},"source":["`GradientTape` provides an extremely flexible framework for automatic differentiation. In order to back propagate errors through a neural network, we track forward passes on the Tape, use this information to determine the gradients, and then use these gradients for optimization using SGD."]}]}
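
The SGD loop in the final code cell above can be sketched without TensorFlow. This is a minimal illustration, not the lab's solution: it assumes the analytic derivative $dL/dx = 2(x - x_f)$ in place of `tape.gradient`, and the function name `minimize_quadratic` and its default arguments are hypothetical, chosen to mirror the notebook's `learning_rate`, `x_f`, and iteration count.

```python
# Plain-Python sketch of SGD on L = (x - x_f)^2.
# Assumption: the analytic derivative dL/dx = 2 * (x - x_f) stands in for
# the value that tape.gradient(loss, x) computes in the notebook.

def minimize_quadratic(x_init, x_f=4.0, learning_rate=1e-2, n_steps=500):
    """Run n_steps of gradient descent and return the final x and its history."""
    x = x_init
    history = []
    for _ in range(n_steps):
        grad = 2.0 * (x - x_f)          # derivative of the loss with respect to x
        x = x - learning_rate * grad    # SGD update
        history.append(x)               # record x after each update
    return x, history


x_final, history = minimize_quadratic(x_init=0.0)
print("final x = {:.4f}".format(x_final))
```

With these settings each update multiplies the distance to `x_f` by `1 - 2 * learning_rate = 0.98`, so `x` approaches the target geometrically. A larger learning rate (below 1.0 for this loss) would converge in fewer iterations.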