Welcome to Atlas! This is not just another AI research project; it's an adventure into the unknown territories of Artificial Intelligence. The mission? To push the boundaries of what AI can and cannot do 🤖. We're here to explore, experiment, and expand the knowledge frontier of AI, making sure the AI community keeps growing and learning from our discoveries.

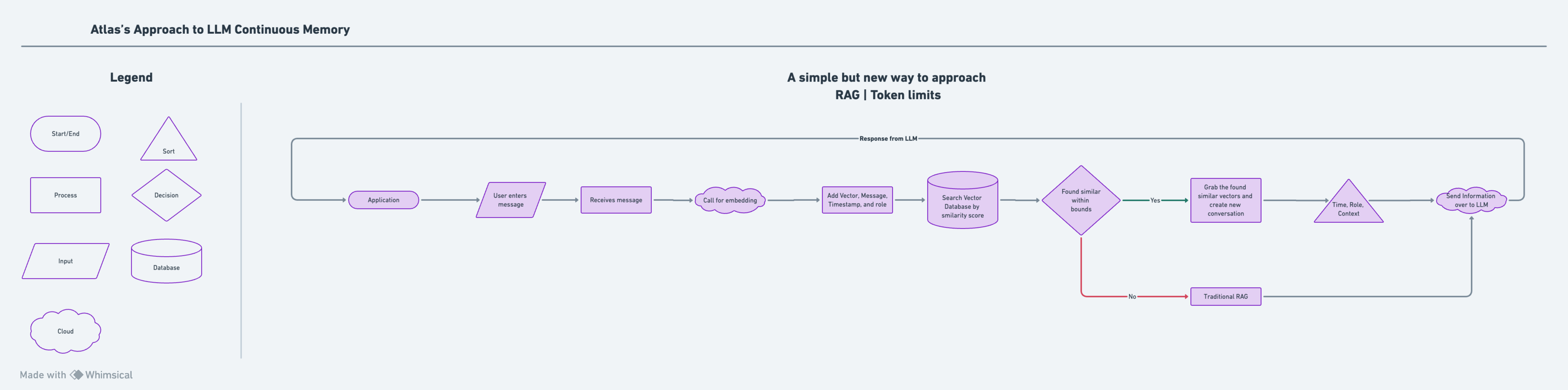

In the realm of AI, particularly with Large Language Models (LLMs), we've hit a snag: maintaining context beyond a model's token limit is like trying to remember a dream after you wake up. It fades. Atlas is here to change that. Imagine chats with AI that remember the start of the conversation, no matter how long it goes. That's the future we're building.

- Test Multiple LLMs: We're playing matchmaker with different Large Language Models to see which ones can hold a conversation without losing track. 🤹‍♂️

- Maintain Chat Context: Our goal is to keep the chat's context as sticky as honey, making sure nothing important slips through the cracks. 🍯

- Leverage Qdrant: Like a librarian with a photographic memory, Qdrant, a vector database, helps us store our chat data and search it efficiently. 📚

- Continuous R&D: We're on a never-ending quest for knowledge, always looking for better ways to break through AI's current limitations. 🔍

- Vector Database: With Qdrant, we're handling data like a pro, ensuring we can always find what we need when we need it.

- Large Language Models: It's a round-robin of AI models in our lab, testing each one's ability to keep up with our chats.
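The bullets above describe keeping chat context alive with a vector database. Atlas hasn't published its method yet, so what follows is only a minimal, hypothetical sketch of the general retrieval idea. Each chat turn is stored as a vector, and when a new message arrives, the most similar earlier turns are recalled so they can be placed back into the prompt. `ChatMemory`, `embed`, and `cosine` are illustrative names. The bag-of-words `embed` stands in for a real embedding model, and the plain Python list stands in for Qdrant. None of this is Atlas's actual approach or Qdrant's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding' (hypothetical stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ChatMemory:
    """Hypothetical in-memory store of past chat turns.

    A vector database such as Qdrant would play this role at scale;
    here a plain list of (turn_index, text, vector) tuples is used instead.
    """

    def __init__(self) -> None:
        self.turns: list[tuple[int, str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the turn together with its position and its vector.
        self.turns.append((len(self.turns), text, embed(text)))

    def recall(self, query: str, k: int = 2) -> list[str]:
        # Rank every stored turn by similarity to the new message.
        q = embed(query)
        ranked = sorted(self.turns, key=lambda t: cosine(q, t[2]), reverse=True)
        top = ranked[:k]
        # Restore chronological order so recalled turns read naturally in a prompt.
        return [text for _, text, _ in sorted(top, key=lambda t: t[0])]


# Usage: an early detail is recalled even though other turns came in between.
memory = ChatMemory()
memory.add("My name is Dana and I work on robotics.")
memory.add("The weather today is sunny.")
memory.add("I prefer Python for prototyping robots.")
memory.add("Let's talk about dinner plans.")
recalled = memory.recall("What language do I use for robots?", k=2)
print(recalled)
```

In a fuller setup, the vectors would presumably come from an embedding model and live in a Qdrant collection rather than a list, and the recalled turns would sit in the prompt alongside the most recent messages.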

Stay tuned! Once we release the primary dataset, you can expect:

- Datasets: A goldmine of time-stamped, context-rich chat logs.

- Methodologies: The secret recipes we used to keep our chats coherent and context-aware.

- Performance Metrics: A report on how each LLM fared in our tests.

- Technical Insights: Nuggets of wisdom from the journey through AI's context retention challenge.


After we release our first dataset, we'll be opening the doors wide to contributions and forks. Imagine being part of shaping the future of AI. Exciting, right? Keep an eye out for updates, and get ready to join us on this epic adventure!

Got questions? Suggestions? Just want to chat about AI? Reach out!