| Category | Metric Name | Definition | Data Required | Use Scenarios and Recommended Practices | Value |
|---|---|---|---|---|---|
| Delivery Velocity | Requirement Count | Number of issues with type "Requirement" | Issue/Task Management entities: Jira issues, GitHub issues, etc. | 1. Analyze the number of requirements and delivery rate of different time cycles to find the stability and trend of the development process. 2. Analyze and compare the number of requirements delivered and delivery rate of each project/team, and compare the scale of requirements of different projects. 3. Based on historical data, establish a baseline of the delivery capacity of a single iteration (optimistic, probable and pessimistic values) to provide a reference for iteration estimation. 4. Drill down to analyze the number and percentage of requirements in different phases of SDLC. Analyze rationality and identify the requirements stuck in the backlog. | 1. Based on historical data, establish a baseline of the delivery capacity of a single iteration to improve the organization and planning of R&D resources. 2. Evaluate whether the delivery capacity matches the business phase and demand scale. Identify key bottlenecks and reasonably allocate resources. |
| Delivery Velocity | Requirement Delivery Rate | Ratio of delivered requirements to all requirements | Issue/Task Management entities: Jira issues, GitHub issues, etc. | | |
| Delivery Velocity | Requirement Lead Time | Lead time of issues with type "Requirement" | Issue/Task Management entities: Jira issues, GitHub issues, etc. | 1. Analyze the trend of requirement lead time to observe if it has improved over time. 2. Analyze and compare the requirement lead time of each project/team to identify key projects with abnormal lead time. 3. Drill down to analyze the time a requirement stays in different phases of SDLC. Analyze the bottleneck of delivery velocity and improve the workflow. | 1. Analyze key projects and critical points, identify good/to-be-improved practices that affect requirement lead time, and reduce the risk of delays. 2. Focus on the end-to-end velocity of the value delivery process; coordinate different parts of R&D to avoid efficiency silos; make targeted improvements to bottlenecks. |
| Delivery Velocity | Requirement Granularity | Number of story points associated with an issue | Issue/Task Management entities: Jira issues, GitHub issues, etc. | 1. Analyze the story points/requirement lead time of requirements to evaluate whether the ticket size, i.e. requirement complexity, is optimal. 2. Compare the estimated requirement granularity with the actual situation and evaluate whether the difference is reasonable by combining more microscopic workload metrics (e.g. lines of code/code equivalents). | 1. Encourage product teams to split requirements carefully, improve requirements quality, help developers understand requirements clearly, deliver efficiently and with high quality, and improve the project management capability of the team. 2. Establish a data-supported workload estimation model to help R&D teams calibrate their estimation methods and more accurately assess the granularity of requirements, which is useful to achieve better issue planning in project management. |
| Delivery Velocity | Commit Count | Number of commits | Source Code Management entities: Git/GitHub/GitLab commits | 1. Through comparison, identify the main reasons for an unusual number of commits and their possible impact. 2. Evaluate whether the number of commits is reasonable in conjunction with more microscopic workload metrics (e.g. lines of code/code equivalents). | 1. Identify potential bottlenecks that may affect output. 2. Encourage R&D practices of small step submissions and develop excellent coding habits. |
| Delivery Velocity | Added Lines of Code | Accumulated number of added lines of code | Source Code Management entities: Git/GitHub/GitLab commits | 1. From the project/team dimension, observe the accumulated change in added lines to assess the team activity and code growth rate. 2. From the version cycle dimension, observe the active time distribution of code changes, and evaluate the effectiveness of the project development model. 3. From the member dimension, observe the trend and stability of code output of each member, and identify the key points that affect code output by comparison. | 1. Identify potential bottlenecks that may affect the output. 2. Encourage the team to implement a development model that matches the business requirements; develop excellent coding habits. |
| Delivery Velocity | Deleted Lines of Code | Accumulated number of deleted lines of code | Source Code Management entities: Git/GitHub/GitLab commits | | |
| Delivery Velocity | Pull Request Review Time | Time from Pull/Merge created time until merged | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | 1. Observe the mean and distribution of code review time from the project/team/individual dimension to assess the rationality of the review time. | 1. Take inventory of project/team code review resources to avoid lack of resources and backlog of review sessions, resulting in long waiting time. 2. Encourage teams to implement an efficient and responsive code review mechanism. |
| Delivery Velocity | Bug Age | Lead time of issues with type "Bug" | Issue/Task Management entities: Jira issues, GitHub issues, etc. | 1. Observe the trend of bug age and locate the key reasons. 2. Count and observe bug and incident age by severity level, type (business, functional classification), affected module, and source of bugs. | 1. Help the team to establish an effective hierarchical response mechanism for bugs and incidents. Focus on the resolution of important problems in the backlog. 2. Improve the team's and individuals' bug/incident fixing efficiency. Identify good/to-be-improved practices that affect bug age or incident age. |
| Delivery Velocity | Incident Age | Lead time of issues with type "Incident" | Issue/Task Management entities: Jira issues, GitHub issues, etc. | | |
| Delivery Quality | Pull Request Count | Number of Pull/Merge Requests | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | 1. From the developer dimension, we evaluate the code quality of developers by combining the task complexity with the metrics related to the number of review passes and review rounds. 2. From the reviewer dimension, we observe the reviewer's review style by taking into account the task complexity, the number of passes and the number of review rounds. 3. From the project/team dimension, we combine the project phase and team task complexity to aggregate the metrics related to the number of review passes and review rounds, and identify the modules with abnormal code review process and possible quality risks. | 1. Code review metrics are process indicators to provide quick feedback on developers' code quality. 2. Encourage the team to establish a unified coding specification and standardize the code review criteria. 3. Identify modules with low-quality risks in advance, optimize practices, and distill them into reusable knowledge and tools to avoid technical debt accumulation. |
| Delivery Quality | Pull Request Pass Rate | Ratio of merged Pull/Merge Requests to all Pull/Merge Requests | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | | |
| Delivery Quality | Pull Request Review Rounds | Number of cycles of commits followed by comments/final merge | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | | |
| Delivery Quality | Pull Request Review Count | Number of Pull/Merge Reviewers | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | 1. As a secondary indicator, assess the cost of labor invested in the code review process. | 1. Take inventory of project/team code review resources to avoid long waits for review sessions due to insufficient resource input. |
| Delivery Quality | Bug Count | Number of bugs found during testing | Issue/Task Management entities: Jira issues, GitHub issues, etc. | 1. From the project or team dimension, observe the statistics on the total number of defects, the distribution of the number of defects in each severity level/type/owner, the cumulative trend of defects, and the change trend of the defect rate in thousands of lines, etc. 2. From the version cycle dimension, observe the statistics on the cumulative trend of the number of defects/defect rate, which can be used to determine whether the growth rate of defects is slowing down and showing a flat convergence trend; this is an important reference for judging the stability of software version quality. 3. From the time dimension, analyze the trend of the number of test defects and the defect rate to locate the key items/key points. 4. Evaluate whether the software quality and test plan are reasonable by referring to CMMI standard values. | 1. Defect drill-down analysis to inform the development of design and code review strategies and to improve the internal QA process. 2. Assist teams to locate projects/modules with higher defect severity and density, and clean up technical debts. 3. Analyze critical points, identify good/to-be-improved practices that affect defect count or defect rate, to reduce the number of future defects. |
| Delivery Quality | Incident Count | Number of incidents found after shipping | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | | |
| Delivery Quality | Bugs Count per 1k Lines of Code | Number of bugs per 1,000 lines of code | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | | |
| Delivery Quality | Incidents Count per 1k Lines of Code | Number of incidents per 1,000 lines of code | Source Code Management entities: GitHub PRs, GitLab MRs, etc. | | |
| Delivery Cost | Commit Author Count | Number of contributors who have committed code | Source Code Management entities: Git/GitHub/GitLab commits | 1. As a secondary indicator, this helps assess the labor cost of participating in coding. | 1. Take inventory of project/team R&D resource inputs, assess input-output ratio, and rationalize resource deployment. |
| Delivery Capability | Build Count | The number of builds started | CI/CD entities: Jenkins jobs and builds, etc. | 1. From the project dimension, compare the number of builds and success rate by combining the project phase and the complexity of tasks. 2. From the time dimension, analyze the trend of the number of builds and success rate to see if it has improved over time. | 1. As a process indicator, it reflects the value flow efficiency of the upstream product and R&D stages. 2. Identify excellent/to-be-improved practices that impact the build, and drive the team to distill reusable tools and mechanisms to build infrastructure for fast and high-frequency delivery. |
| Delivery Capability | Build Duration | The duration of successful builds | CI/CD entities: Jenkins jobs and builds, etc. | | |
| Delivery Capability | Build Success Rate | The percentage of successful builds | CI/CD entities: Jenkins jobs and builds, etc. | | |
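As a rough illustration of how a few of the metric definitions above translate into arithmetic, here is a minimal Python sketch. The issue records and their field names (`type`, `created`, `resolved`) are hypothetical and are not DevLake's actual schema; lead time is assumed here to mean the number of days from creation to resolution.

```python
from datetime import datetime
from statistics import mean

# Hypothetical issue records; field names are illustrative, not DevLake's schema.
ISSUES = [
    {"type": "REQUIREMENT", "created": "2022-03-01", "resolved": "2022-03-05"},
    {"type": "REQUIREMENT", "created": "2022-03-02", "resolved": "2022-03-12"},
    {"type": "REQUIREMENT", "created": "2022-03-10", "resolved": None},
    {"type": "BUG", "created": "2022-03-04", "resolved": "2022-03-06"},
]


def lead_time_days(issue):
    """Days between creation and resolution (assumed definition of lead time)."""
    created = datetime.fromisoformat(issue["created"])
    resolved = datetime.fromisoformat(issue["resolved"])
    return (resolved - created).days


def requirement_metrics(issues):
    """Compute Requirement Count, Delivery Rate and average Lead Time."""
    reqs = [i for i in issues if i["type"] == "REQUIREMENT"]
    delivered = [i for i in reqs if i["resolved"] is not None]
    return {
        "requirement_count": len(reqs),
        # Ratio of delivered requirements to all requirements.
        "delivery_rate": len(delivered) / len(reqs) if reqs else 0.0,
        "avg_lead_time_days": (
            mean(lead_time_days(i) for i in delivered) if delivered else None
        ),
    }


def bugs_per_1k_lines(bug_count, lines_of_code):
    """Bug count normalized per 1,000 lines of code."""
    return bug_count / lines_of_code * 1000


metrics = requirement_metrics(ISSUES)
print(metrics)
```

In practice these numbers would come from SQL queries against the collected data rather than from in-memory lists; the sketch only makes the ratios explicit.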

DevLake Components

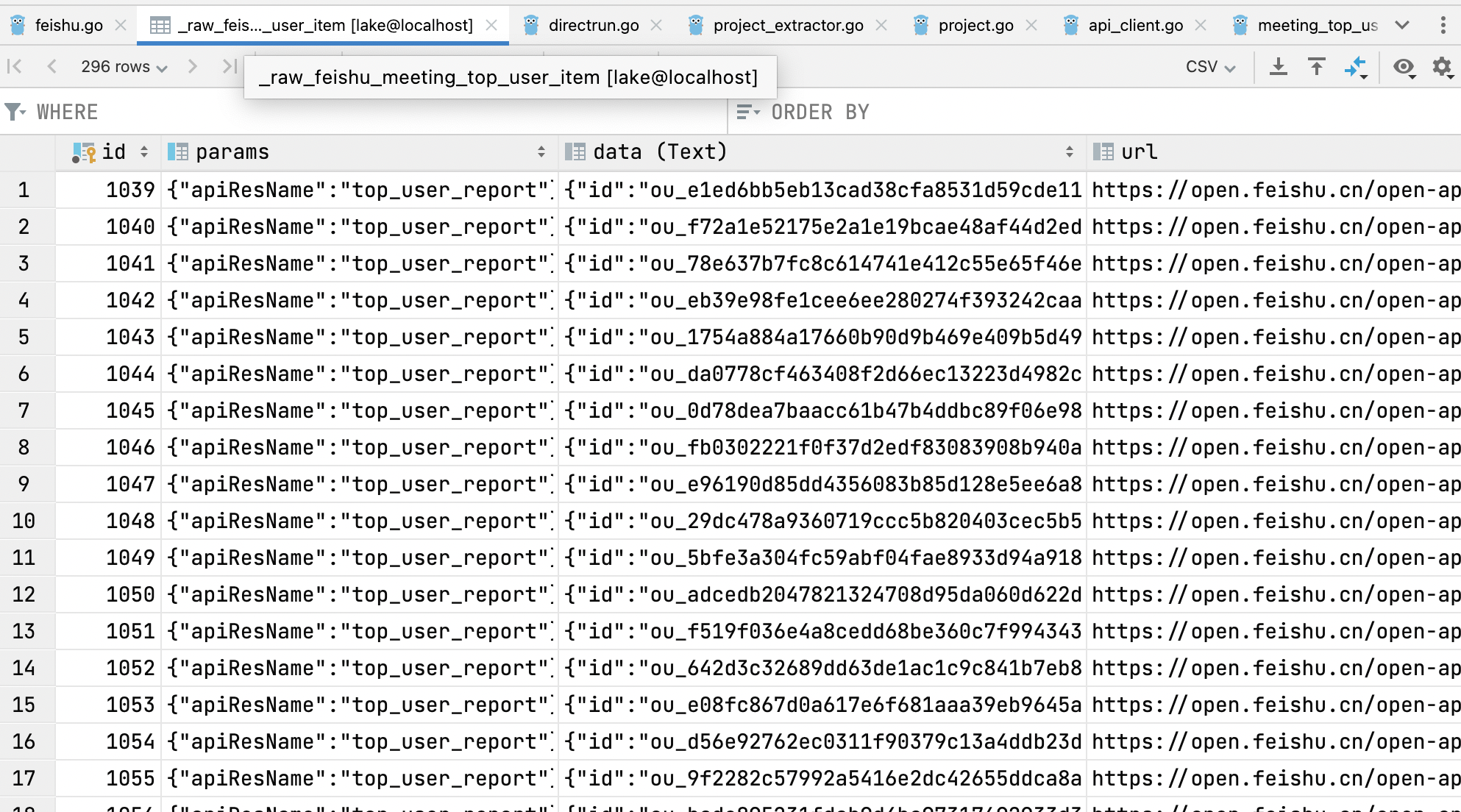

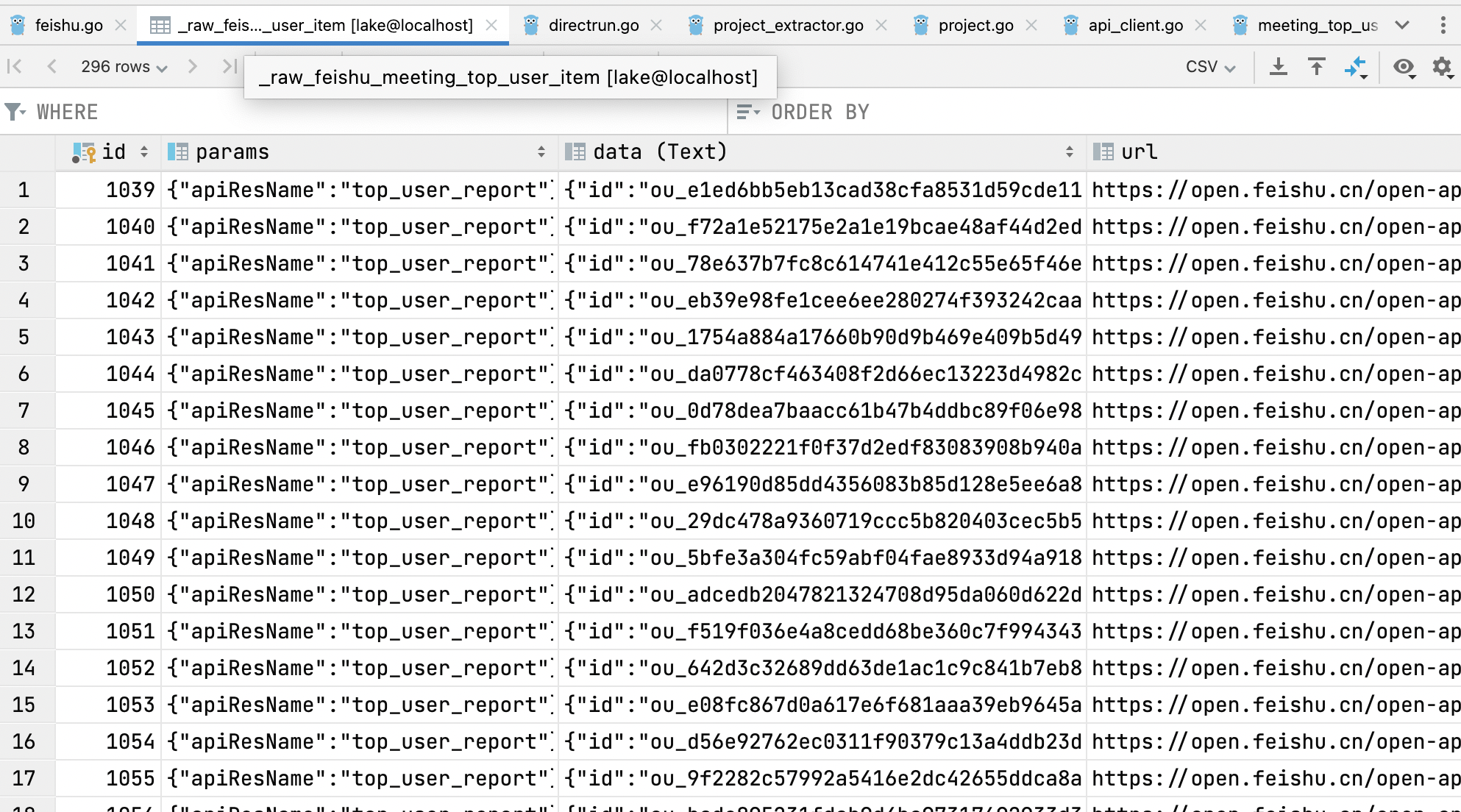

-A DevLake installation typically consists of the following components:
-
-- Config UI: A handy user interface to create, trigger, and debug data pipelines.
-- API Server: The main programmatic interface of DevLake.
-- Runner: The runner does all the heavy-lifting for executing tasks. In the default DevLake installation, it runs within the API Server, but DevLake provides a temporal-based runner (beta) for production environments.
-- Database: The database stores both DevLake's metadata and user data collected by data pipelines. DevLake supports MySQL and PostgreSQL as of v0.11.
-- Plugins: Plugins enable DevLake to collect and analyze dev data from any DevOps tools with an accessible API. DevLake community is actively adding plugins for popular DevOps tools, but if your preferred tool is not covered yet, feel free to open a GitHub issue to let us know or check out our doc on how to build a new plugin by yourself.
-- Dashboards: Dashboards deliver data and insights to DevLake users. A dashboard is simply a collection of SQL queries along with corresponding visualization configurations. DevLake's official dashboard tool is Grafana and pre-built dashboards are shipped in Grafana's JSON format. Users are welcome to swap for their own choice of dashboard/BI tool if desired.
-
-## Dataflow
-
-DevLake Dataflow

-A typical plugin's dataflow is illustrated below:
-
-1. The Raw layer stores the API responses from data sources (DevOps tools) in JSON. This saves developers' time if the raw data is to be transformed differently later on. Please note that communicating with data sources' APIs is usually the most time-consuming step.
-2. The Tool layer extracts raw data from JSONs into a relational schema that's easier to consume by analytical tasks. Each DevOps tool would have a schema that's tailored to their data structure, hence the name, the Tool layer.
-3. The Domain layer attempts to build a layer of abstraction on top of the Tool layer so that analytics logics can be re-used across different tools. For example, GitHub's Pull Request (PR) and GitLab's Merge Request (MR) are similar entities. They each have their own table name and schema in the Tool layer, but they're consolidated into a single entity in the Domain layer, so that developers only need to implement metrics like Cycle Time and Code Review Rounds once against the domain layer schema.
-
-## Principles
-
-1. Extensible: DevLake's plugin system allows users to integrate with any DevOps tool. DevLake also provides a dbt plugin that enables users to define their own data transformation and analysis workflows.
-2. Portable: DevLake has a modular design and provides multiple options for each module. Users of different setups can freely choose the right configuration for themselves.
-3. Robust: DevLake provides an SDK to help plugins efficiently and reliably collect data from data sources while respecting their API rate limits and constraints.
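The Raw, Tool and Domain layers described in the Dataflow section can be pictured with a small Python sketch. Everything here is illustrative: the JSON payloads, the class names and the `tool:number` id format are assumptions made for the example and do not reflect DevLake's actual tables or plugin SDK.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Raw layer: API responses kept verbatim as JSON (payloads are invented).
RAW_GITHUB_PR = '{"number": 7, "title": "Fix login", "merged_at": "2022-05-01T10:00:00Z"}'
RAW_GITLAB_MR = '{"iid": 3, "title": "Add cache", "merged_at": null}'


# Tool layer: one schema per tool, following that tool's own vocabulary.
@dataclass
class GithubPullRequest:
    number: int
    title: str
    merged_at: Optional[str]


@dataclass
class GitlabMergeRequest:
    iid: int
    title: str
    merged_at: Optional[str]


# Domain layer: a single tool-agnostic entity (hypothetical fields).
@dataclass
class PullRequest:
    id: str
    title: str
    merged: bool


def extract_github(raw: str) -> GithubPullRequest:
    data = json.loads(raw)
    return GithubPullRequest(data["number"], data["title"], data["merged_at"])


def extract_gitlab(raw: str) -> GitlabMergeRequest:
    data = json.loads(raw)
    return GitlabMergeRequest(data["iid"], data["title"], data["merged_at"])


def convert_github(pr: GithubPullRequest) -> PullRequest:
    # The composite id keeps entities from different tools distinct.
    return PullRequest(f"github:{pr.number}", pr.title, pr.merged_at is not None)


def convert_gitlab(mr: GitlabMergeRequest) -> PullRequest:
    return PullRequest(f"gitlab:{mr.iid}", mr.title, mr.merged_at is not None)


def merged_count(prs):
    """A metric written once against the domain entity, whatever the source tool."""
    return sum(1 for pr in prs if pr.merged)


domain_prs = [
    convert_github(extract_github(RAW_GITHUB_PR)),
    convert_gitlab(extract_gitlab(RAW_GITLAB_MR)),
]
print(merged_count(domain_prs))
```

Because the raw JSON is kept, the extract and convert steps can be rerun with different logic without calling the source APIs again, which is the time saving the Raw layer is meant to provide.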

-## Project Metrics This Covers

-

-| Metric Name | Description |

-|:------------------------------------|:--------------------------------------------------------------------------------------------------|

-| Requirement Count | Number of issues with type "Requirement" |

-| Requirement Lead Time | Lead time of issues with type "Requirement" |

-| Requirement Delivery Rate | Ratio of delivered requirements to all requirements |

-| Requirement Granularity | Number of story points associated with an issue |

-| Bug Count | Number of issues with type "Bug" |


-When first visiting Grafana, you will be provided with a sample dashboard with some basic charts setup from the database.

-

-## Contents

-

-Section | Link

-:------------ | :-------------

-Logging In | [View Section](#logging-in)

-Viewing All Dashboards | [View Section](#viewing-all-dashboards)

-Customizing a Dashboard | [View Section](#customizing-a-dashboard)

-Dashboard Settings | [View Section](#dashboard-settings)

-Provisioning a Dashboard | [View Section](#provisioning-a-dashboard)

-Troubleshooting DB Connection | [View Section](#troubleshooting-db-connection)

-

-## Logging In

-

-Once the app is up and running, visit `http://localhost:3002` to view the Grafana dashboard.

-

-Default login credentials are:

-

-- Username: `admin`

-- Password: `admin`

-

-## Viewing All Dashboards

-

-To see all dashboards created in Grafana visit `/dashboards`

-

-Or, use the sidebar and click on **Manage**:

-

-

-

-

-## Customizing a Dashboard

-

-When viewing a dashboard, click the top bar of a panel, and go to **edit**

-

-

-

-**Edit Dashboard Panel Page:**

-

-

-

-### 1. Preview Area

-- **Top Left** is the variable select area (custom dashboard variables, used for switching projects, or grouping data)

-- **Top Right** we have a toolbar with some buttons related to the display of the data:

- - View data results in a table

- - Time range selector

- - Refresh data button

-- **The Main Area** will display the chart and should update in real time

-

-> Note: Data should refresh automatically, but may require a refresh using the button in some cases

-

-### 2. Query Builder

-Here we form the SQL query to pull data into our chart, from our database

-- Ensure the **Data Source** is the correct database

-

-

-

-- Select **Format as Table**, and **Edit SQL** buttons to write/edit queries as SQL

-

-

-

-- The **Main Area** is where the queries are written, and in the top right is the **Query Inspector** button (to inspect returned data)

-

-

-

-### 3. Main Panel Toolbar

-In the top right of the window are buttons for:

-- Dashboard settings (regarding entire dashboard)

-- Save/apply changes (to specific panel)

-

-### 4. Grafana Parameter Sidebar

-- Change chart style (bar/line/pie chart etc)

-- Edit legends, chart parameters

-- Modify chart styling

-- Other Grafana specific settings

-

-## Dashboard Settings

-

-When viewing a dashboard click on the settings icon to view dashboard settings. Here are 2 important sections to use:

-

-

-

-- Variables

- - Create variables to use throughout the dashboard panels, that are also built on SQL queries

-

-

-

-- JSON Model

- - Copy `json` code here and save it to a new file in `/grafana/dashboards/` with a unique name in the `lake` repo. This will allow us to persist dashboards when we load the app

-

-

-

-## Provisioning a Dashboard

-

-To save a dashboard in the `lake` repo and load it:

-

-1. Create a dashboard in browser (visit `/dashboard/new`, or use sidebar)

-2. Save dashboard (in top right of screen)

-3. Go to dashboard settings (in top right of screen)

-4. Click on _JSON Model_ in sidebar

-5. Copy code into a new `.json` file in `/grafana/dashboards`

-

-## Troubleshooting DB Connection

-

-To ensure we have properly connected our database to the data source in Grafana, check database settings in `./grafana/datasources/datasource.yml`, specifically:

-- `database`

-- `user`

-- `secureJsonData/password`

diff --git a/versioned_docs/version-v0.11/UserManuals/RecurringPipelines.md b/versioned_docs/version-v0.11/UserManuals/RecurringPipelines.md

deleted file mode 100644

index ce82b1eb00d..00000000000

--- a/versioned_docs/version-v0.11/UserManuals/RecurringPipelines.md

+++ /dev/null

@@ -1,30 +0,0 @@

----

-title: "Recurring Pipelines"

-sidebar_position: 3

-description: >

- Recurring Pipelines

----

-

-## How to create recurring pipelines?

-

-Once you've verified that a pipeline works, you'll most likely want to run it periodically to keep the data fresh. DevLake's pipeline blueprint feature has you covered.

-

-

-1. Click 'Create Pipeline Run' and

- - Toggle the plugins you'd like to run, here we use GitHub and GitExtractor plugin as an example

- - Toggle on Automate Pipeline

-

-

-

-2. Click 'Add Blueprint'. Fill in the form and 'Save Blueprint'.

-

 - **NOTE**: The schedule uses standard Unix cron syntax; [Crontab.guru](https://crontab.guru/) is a useful reference.

 - **IMPORTANT**: The scheduler runs in the `UTC` timezone. If you want data collection to happen at 3 AM New York time (UTC-04:00) every day, use **Custom Schedule** and set it to `0 7 * * *`

-

-

-

-3. Click 'Save Blueprint'.

-

-4. Click 'Pipeline Blueprints' to view and edit the new blueprint in the blueprint list.
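The UTC conversion mentioned in step 2 can be sketched in a few lines of Python. The helper name `daily_cron_in_utc` is hypothetical and not part of DevLake; it assumes a fixed UTC offset, so it does not track daylight saving time changes.

```python
from datetime import datetime, timedelta, timezone


def daily_cron_in_utc(local_hour: int, local_minute: int, utc_offset_hours: float) -> str:
    """Return a daily cron expression in UTC for a local wall-clock time."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    # Any date works with a fixed offset; only the time of day matters here.
    local_dt = datetime(2022, 1, 1, local_hour, local_minute, tzinfo=local_tz)
    utc_dt = local_dt.astimezone(timezone.utc)
    return f"{utc_dt.minute} {utc_dt.hour} * * *"


# 3 AM at UTC-04:00, the example from the note above.
print(daily_cron_in_utc(3, 0, -4))  # prints "0 7 * * *"
```

Since the offset for a place like New York changes between summer and winter, a schedule computed this way may need to be adjusted when the clocks change.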

-

-

\ No newline at end of file

diff --git a/versioned_docs/version-v0.11/UserManuals/TemporalSetup.md b/versioned_docs/version-v0.11/UserManuals/TemporalSetup.md

deleted file mode 100644

index f893a830dfd..00000000000

--- a/versioned_docs/version-v0.11/UserManuals/TemporalSetup.md

+++ /dev/null

@@ -1,35 +0,0 @@

----

-title: "Temporal Setup"

-sidebar_position: 5

-description: >

- The steps to install DevLake in Temporal mode.

----

-

-

-Normally, DevLake executes pipelines on a local machine (we call it `local mode`), which is sufficient most of the time. However, when you have too many pipelines that need to be executed in parallel, this can be problematic, as the horsepower and throughput of a single machine are limited.

-

-`temporal mode` was added to support distributed pipeline execution. You can fire up any number of workers on multiple machines to carry out those pipelines in parallel and overcome the limitations of a single machine.

-

-But be careful: many API services such as Jira and GitHub enforce a request rate limit. Collecting data in parallel against the same API service with the same identity will most likely hit that limit.

-

-## How it works

-

-1. DevLake Server and Workers connect to the same temporal server by setting up `TEMPORAL_URL`

-2. DevLake Server sends a `pipeline` to the temporal server, and one of the Workers picks it up and executes it
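The dispatch pattern in the two steps above (a server enqueues pipelines, and whichever worker is free picks one up) can be pictured with a small Python sketch. This uses the standard library's `queue` and `threading` modules as a stand-in; it is not the Temporal SDK or DevLake's runner, and `run_pipeline` is a hypothetical placeholder for real work.

```python
import queue
import threading


def run_pipeline(name: str) -> str:
    """Hypothetical placeholder for executing one pipeline."""
    return f"{name}: done"


def worker(tasks: queue.Queue, results: queue.Queue) -> None:
    """Pull pipelines from the shared queue until a stop marker arrives."""
    while True:
        name = tasks.get()
        if name is None:  # stop marker
            break
        results.put(run_pipeline(name))


def dispatch(pipelines, worker_count: int = 2):
    """Play the server role: enqueue pipelines and collect the results."""
    tasks: queue.Queue = queue.Queue()
    results: queue.Queue = queue.Queue()
    threads = [
        threading.Thread(target=worker, args=(tasks, results))
        for _ in range(worker_count)
    ]
    for t in threads:
        t.start()
    for name in pipelines:
        tasks.put(name)
    for _ in threads:
        tasks.put(None)  # one stop marker per worker
    for t in threads:
        t.join()
    # Completion order depends on scheduling, so sort for a stable result.
    return sorted(results.get() for _ in range(len(pipelines)))


print(dispatch(["github", "jira", "jenkins"]))
```

In the real setup the queue lives in the temporal server and the workers may run on different machines; the sketch only shows why adding workers increases parallelism without the server needing to know which worker runs which pipeline.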

-

-

-**IMPORTANT: This feature is in an early stage of development. Please use with caution**

-

-

-## Temporal Demo

-

-### Requirements

-

-- [Docker](https://docs.docker.com/get-docker)

-- [docker-compose](https://docs.docker.com/compose/install/)

-- [temporalio](https://temporal.io/)

-

-### How to set up

-

-1. Clone and fire up [temporalio](https://temporal.io/) services

-2. Clone this repo, and fire up DevLake with command `docker-compose -f docker-compose-temporal.yml up -d`

\ No newline at end of file

diff --git a/versioned_docs/version-v0.11/UserManuals/_category_.json b/versioned_docs/version-v0.11/UserManuals/_category_.json

deleted file mode 100644

index b47bdfd7d09..00000000000

--- a/versioned_docs/version-v0.11/UserManuals/_category_.json

+++ /dev/null

@@ -1,4 +0,0 @@

-{

- "label": "User Manuals",

- "position": 3

-}

diff --git a/versioned_docs/version-v0.12/DataModels/DataSupport.md b/versioned_docs/version-v0.12/DataModels/DataSupport.md

deleted file mode 100644

index 4cb4b619131..00000000000

--- a/versioned_docs/version-v0.12/DataModels/DataSupport.md

+++ /dev/null

@@ -1,59 +0,0 @@

----

-title: "Data Support"

-description: >

- Data sources that DevLake supports

-sidebar_position: 1

----

-

-

-## Data Sources and Data Plugins

-DevLake supports the following data sources. The data from each data source is collected with one or more plugins. There are 9 data plugins in total: `ae`, `feishu`, `gitextractor`, `github`, `gitlab`, `jenkins`, `jira`, `refdiff` and `tapd`.

-

-

-| Data Source | Versions | Plugins |

-|-------------|--------------------------------------|-------- |

-| AE | | `ae` |

-| Feishu | Cloud |`feishu` |

-| GitHub | Cloud |`github`, `gitextractor`, `refdiff` |

-| Gitlab | Cloud, Community Edition 13.x+ |`gitlab`, `gitextractor`, `refdiff` |

-| Jenkins | 2.263.x+ |`jenkins` |

-| Jira | Cloud, Server 8.x+, Data Center 8.x+ |`jira` |

-| TAPD | Cloud | `tapd` |

-

-

-

-## Data Collection Scope By Each Plugin

-This table shows the entities collected by each plugin. Domain layer entities in this table are consistent with the entities [here](./DevLakeDomainLayerSchema.md).

-

-| Domain Layer Entities | ae | gitextractor | github | gitlab | jenkins | jira | refdiff | tapd |

-| --------------------- | -------------- | ------------ | -------------- | ------- | ------- | ------- | ------- | ------- |

-| commits | update commits | default | not-by-default | default | | | | |

-| commit_parents | | default | | | | | | |

-| commit_files | | default | | | | | | |

-| pull_requests | | | default | default | | | | |

-| pull_request_commits | | | default | default | | | | |

-| pull_request_comments | | | default | default | | | | |

-| pull_request_labels | | | default | | | | | |

-| refs | | default | | | | | | |

-| refs_commits_diffs | | | | | | | default | |

-| refs_issues_diffs | | | | | | | default | |

-| ref_pr_cherry_picks | | | | | | | default | |

-| repos | | | default | default | | | | |

-| repo_commits | | default | default | | | | | |

-| board_repos | | | | | | | | |

-| issue_commits | | | | | | | | |

-| issue_repo_commits | | | | | | | | |

-| pull_request_issues | | | | | | | | |

-| refs_issues_diffs | | | | | | | | |

-| boards | | | default | | | default | | default |

-| board_issues | | | default | | | default | | default |

-| issue_changelogs | | | | | | default | | default |

-| issues | | | default | | | default | | default |

-| issue_comments | | | | | | default | | default |

-| issue_labels | | | default | | | | | |

-| sprints | | | | | | default | | default |

-| issue_worklogs | | | | | | default | | default |

-| users | | | default | | | default | | default |

-| builds | | | | | default | | | |

-| jobs | | | | | default | | | |

-

diff --git a/versioned_docs/version-v0.12/DataModels/DevLakeDomainLayerSchema.md b/versioned_docs/version-v0.12/DataModels/DevLakeDomainLayerSchema.md

deleted file mode 100644

index 10c80d907a1..00000000000

--- a/versioned_docs/version-v0.12/DataModels/DevLakeDomainLayerSchema.md

+++ /dev/null

@@ -1,544 +0,0 @@

----

-title: "Domain Layer Schema"

-description: >

- DevLake Domain Layer Schema

-sidebar_position: 2

----

-

-## Summary

-

-This document describes the entities in DevLake's domain layer schema and their relationships.

-

-Data in the domain layer is transformed from the data in the tool layer. The tool layer schema is based on the data from specific tools such as Jira, GitHub, Gitlab, Jenkins, etc. The domain layer schema can be regarded as an abstraction of tool-layer schemas.

-

-Domain layer schema itself includes 2 logical layers: a `DWD` layer and a `DWM` layer. The DWD layer stores the detailed data points, while the DWM is the slight aggregation and operation of DWD to store more organized details or middle-level metrics.

-

-

-## Use Cases

-1. Users can make customized Grafana dashboards based on the domain layer schema.

-2. Contributors can complete the ETL logic when adding new data source plugins by referring to this data model.

-

-

-## Data Models

-

-This is the up-to-date domain layer schema for DevLake v0.10.x. Tables (entities) are categorized into 5 domains.

-1. Issue tracking domain entities: Jira issues, GitHub issues, GitLab issues, etc.

-2. Source code management domain entities: Git/GitHub/Gitlab commits and refs(tags and branches), etc.

-3. Code review domain entities: GitHub PRs, Gitlab MRs, etc.

-4. CI/CD domain entities: Jenkins jobs & builds, etc.

-5. Cross-domain entities: entities that map entities from different domains to break data isolation.

-

-

-### Schema Diagram

-

-

-When reading the schema, you'll notice that many tables' primary key is called `id`. Unlike auto-increment id or UUID, `id` is a string composed of several parts to uniquely identify similar entities (e.g. repo) from different platforms (e.g. Github/Gitlab) and allow them to co-exist in a single table.

-

-Tables that end with WIP are still under development.

-

-

-### Naming Conventions

-

-1. The name of a table is in plural form. Eg. boards, issues, etc.

-2. The name of a table that describes the relation between 2 entities is in the form of [BigEntity in singular form]\_[SmallEntity in plural form]. Eg. board_issues, sprint_issues, pull_request_comments, etc.

-3. Values of enum-type fields are in capital letters. Eg. [table.issues.type](https://merico.feishu.cn/docs/doccnvyuG9YpVc6lvmWkmmbZtUc#ZDCw9k) has 3 values: REQUIREMENT, BUG, INCIDENT. Values that are phrases, such as 'IN_PROGRESS' of [table.issues.status](https://merico.feishu.cn/docs/doccnvyuG9YpVc6lvmWkmmbZtUc#ZDCw9k), are separated with underscore '\_'.

-

-

-

-When first visiting Grafana, you will be provided with a sample dashboard with some basic charts setup from the database.

-

-## Contents

-

-Section | Link

-:------------ | :-------------

-Logging In | [View Section](#logging-in)

-Viewing All Dashboards | [View Section](#viewing-all-dashboards)

-Customizing a Dashboard | [View Section](#customizing-a-dashboard)

-Dashboard Settings | [View Section](#dashboard-settings)

-Provisioning a Dashboard | [View Section](#provisioning-a-dashboard)

-Troubleshooting DB Connection | [View Section](#troubleshooting-db-connection)

-

-## Logging In

-

-Once the app is up and running, visit `http://localhost:3002` to view the Grafana dashboard.

-

-Default login credentials are:

-

-- Username: `admin`

-- Password: `admin`

-

-## Viewing All Dashboards

-

-To see all dashboards created in Grafana visit `/dashboards`

-

-Or, use the sidebar and click on **Manage**:

-

-

-

-

-## Customizing a Dashboard

-

-When viewing a dashboard, click the top bar of a panel, and go to **edit**

-

-

-

-**Edit Dashboard Panel Page:**

-

-

-

-### 1. Preview Area

-- **Top Left** is the variable select area (custom dashboard variables, used for switching projects, or grouping data)

-- **Top Right** we have a toolbar with some buttons related to the display of the data:

- - View data results in a table

- - Time range selector

- - Refresh data button

-- **The Main Area** will display the chart and should update in real time

-

-> Note: Data should refresh automatically, but may require a refresh using the button in some cases

-

-### 2. Query Builder

-Here we form the SQL query to pull data into our chart, from our database

-- Ensure the **Data Source** is the correct database

-

-

-

-- Select **Format as Table**, and **Edit SQL** buttons to write/edit queries as SQL

-

-

-

-- The **Main Area** is where the queries are written, and in the top right is the **Query Inspector** button (to inspect returned data)

-

-

-

-### 3. Main Panel Toolbar

-In the top right of the window are buttons for:

-- Dashboard settings (regarding entire dashboard)

-- Save/apply changes (to specific panel)

-

-### 4. Grafana Parameter Sidebar

-- Change chart style (bar/line/pie chart etc)

-- Edit legends, chart parameters

-- Modify chart styling

-- Other Grafana specific settings

-

-## Dashboard Settings

-

-When viewing a dashboard click on the settings icon to view dashboard settings. Here are 2 important sections to use:

-

-

-

-- Variables

- - Create variables to use throughout the dashboard panels, that are also built on SQL queries

-

-

-

-- JSON Model

- - Copy `json` code here and save it to a new file in `/grafana/dashboards/` with a unique name in the `lake` repo. This will allow us to persist dashboards when we load the app

-

-

-

-## Provisioning a Dashboard

-

-To save a dashboard in the `lake` repo and load it:

-

-1. Create a dashboard in browser (visit `/dashboard/new`, or use sidebar)

-2. Save dashboard (in top right of screen)

-3. Go to dashboard settings (in top right of screen)

-4. Click on _JSON Model_ in sidebar

-5. Copy code into a new `.json` file in `/grafana/dashboards`

-

-## Troubleshooting DB Connection

-

-To ensure we have properly connected our database to the data source in Grafana, check database settings in `./grafana/datasources/datasource.yml`, specifically:

-- `database`

-- `user`

-- `secureJsonData/password`

diff --git a/versioned_docs/version-v0.11/UserManuals/RecurringPipelines.md b/versioned_docs/version-v0.11/UserManuals/RecurringPipelines.md

deleted file mode 100644

index ce82b1eb00d..00000000000

--- a/versioned_docs/version-v0.11/UserManuals/RecurringPipelines.md

+++ /dev/null

@@ -1,30 +0,0 @@

----

-title: "Recurring Pipelines"

-sidebar_position: 3

-description: >

- Recurring Pipelines

----

-

-## How to create recurring pipelines?

-

-Once you've verified that a pipeline works, you'll most likely want to run it periodically to keep data fresh. DevLake's pipeline blueprint feature has you covered.

-

-

-1. Click 'Create Pipeline Run' and

- - Toggle the plugins you'd like to run, here we use GitHub and GitExtractor plugin as an example

- - Toggle on Automate Pipeline

-

-

-

-2. Click 'Add Blueprint'. Fill in the form and 'Save Blueprint'.

-

- - **NOTE**: The schedule syntax is standard Unix cron syntax; [Crontab.guru](https://crontab.guru/) is a useful reference

- - **IMPORTANT**: The scheduler runs in the `UTC` timezone. If you want data collection to happen at 3 AM New York time (UTC-04:00) every day, use **Custom Schedule** and set it to `0 7 * * *`

-

-

-

-3. Click 'Save Blueprint'.

-

-4. Click 'Pipeline Blueprints', you can view and edit the new blueprint in the blueprint list.

-

-

\ No newline at end of file
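The UTC conversion described in the blueprint note above can be checked with a small script. This is a minimal sketch, not part of DevLake: the helper name `utc_cron_for_local_time` is hypothetical, it assumes a fixed UTC offset (so it does not follow daylight saving changes), and it only covers daily schedules.

```python
from datetime import datetime, timedelta, timezone


def utc_cron_for_local_time(hour: int, minute: int, utc_offset_hours: float) -> str:
    """Return a daily cron expression in UTC for a local wall-clock time.

    Hypothetical helper for illustration; assumes a fixed offset from UTC.
    """
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    # The calendar date is arbitrary: only the time of day matters for a daily job.
    local_time = datetime(2000, 1, 1, hour, minute, tzinfo=local_tz)
    utc_time = local_time.astimezone(timezone.utc)
    return f"{utc_time.minute} {utc_time.hour} * * *"


# 3 AM at UTC-04:00 is 7 AM UTC, matching the example in the note.
print(utc_cron_for_local_time(3, 0, -4))
```

Because the offset is fixed, the expression would need to be recomputed when the local region switches between standard and daylight saving time.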

diff --git a/versioned_docs/version-v0.11/UserManuals/TemporalSetup.md b/versioned_docs/version-v0.11/UserManuals/TemporalSetup.md

deleted file mode 100644

index f893a830dfd..00000000000

--- a/versioned_docs/version-v0.11/UserManuals/TemporalSetup.md

+++ /dev/null

@@ -1,35 +0,0 @@

----

-title: "Temporal Setup"

-sidebar_position: 5

-description: >

- The steps to install DevLake in Temporal mode.

----

-

-

-Normally, DevLake executes pipelines on a local machine (we call this `local mode`), which is sufficient most of the time. However, when too many pipelines need to run in parallel, this becomes a problem because the horsepower and throughput of a single machine are limited.

-

-`temporal mode` was added to support distributed pipeline execution. You can fire up any number of workers on multiple machines to carry out those pipelines in parallel and overcome the limitations of a single machine.

-

-But be careful: many API services, such as Jira and GitHub, enforce a request rate limit. Collecting data in parallel against the same API service with the same identity will most likely hit that limit.

-

-## How it works

-

-1. DevLake Server and Workers connect to the same temporal server by setting up `TEMPORAL_URL`

-2. DevLake Server sends a `pipeline` to the temporal server, and one of the Workers picks it up and executes it

-

-

-**IMPORTANT: This feature is in an early stage of development. Please use it with caution.**

-

-

-## Temporal Demo

-

-### Requirements

-

-- [Docker](https://docs.docker.com/get-docker)

-- [docker-compose](https://docs.docker.com/compose/install/)

-- [temporalio](https://temporal.io/)

-

-### How to set up

-

-1. Clone and fire up [temporalio](https://temporal.io/) services

-2. Clone this repo, and fire up DevLake with command `docker-compose -f docker-compose-temporal.yml up -d`

\ No newline at end of file

diff --git a/versioned_docs/version-v0.11/UserManuals/_category_.json b/versioned_docs/version-v0.11/UserManuals/_category_.json

deleted file mode 100644

index b47bdfd7d09..00000000000

--- a/versioned_docs/version-v0.11/UserManuals/_category_.json

+++ /dev/null

@@ -1,4 +0,0 @@

-{

- "label": "User Manuals",

- "position": 3

-}

diff --git a/versioned_docs/version-v0.12/DataModels/DataSupport.md b/versioned_docs/version-v0.12/DataModels/DataSupport.md

deleted file mode 100644

index 4cb4b619131..00000000000

--- a/versioned_docs/version-v0.12/DataModels/DataSupport.md

+++ /dev/null

@@ -1,59 +0,0 @@

----

-title: "Data Support"

-description: >

- Data sources that DevLake supports

-sidebar_position: 1

----

-

-

-## Data Sources and Data Plugins

-DevLake supports the following data sources. The data from each data source is collected with one or more plugins. There are 9 data plugins in total: `ae`, `feishu`, `gitextractor`, `github`, `gitlab`, `jenkins`, `jira`, `refdiff` and `tapd`.

-

-

-| Data Source | Versions | Plugins |

-|-------------|--------------------------------------|-------- |

-| AE | | `ae` |

-| Feishu | Cloud |`feishu` |

-| GitHub | Cloud |`github`, `gitextractor`, `refdiff` |

-| Gitlab | Cloud, Community Edition 13.x+ |`gitlab`, `gitextractor`, `refdiff` |

-| Jenkins | 2.263.x+ |`jenkins` |

-| Jira | Cloud, Server 8.x+, Data Center 8.x+ |`jira` |

-| TAPD | Cloud | `tapd` |

-

-

-

-## Data Collection Scope By Each Plugin

-This table shows the entities collected by each plugin. Domain layer entities in this table are consistent with the entities [here](./DevLakeDomainLayerSchema.md).

-

-| Domain Layer Entities | ae | gitextractor | github | gitlab | jenkins | jira | refdiff | tapd |

-| --------------------- | -------------- | ------------ | -------------- | ------- | ------- | ------- | ------- | ------- |

-| commits | update commits | default | not-by-default | default | | | | |

-| commit_parents | | default | | | | | | |

-| commit_files | | default | | | | | | |

-| pull_requests | | | default | default | | | | |

-| pull_request_commits | | | default | default | | | | |

-| pull_request_comments | | | default | default | | | | |

-| pull_request_labels | | | default | | | | | |

-| refs | | default | | | | | | |

-| refs_commits_diffs | | | | | | | default | |

-| refs_issues_diffs | | | | | | | default | |

-| ref_pr_cherry_picks | | | | | | | default | |

-| repos | | | default | default | | | | |

-| repo_commits | | default | default | | | | | |

-| board_repos | | | | | | | | |

-| issue_commits | | | | | | | | |

-| issue_repo_commits | | | | | | | | |

-| pull_request_issues | | | | | | | | |

-| refs_issues_diffs | | | | | | | | |

-| boards | | | default | | | default | | default |

-| board_issues | | | default | | | default | | default |

-| issue_changelogs | | | | | | default | | default |

-| issues | | | default | | | default | | default |

-| issue_comments | | | | | | default | | default |

-| issue_labels | | | default | | | | | |

-| sprints | | | | | | default | | default |

-| issue_worklogs | | | | | | default | | default |

-| users | | | default | | | default | | default |

-| builds | | | | | default | | | |

-| jobs | | | | | default | | | |

-

diff --git a/versioned_docs/version-v0.12/DataModels/DevLakeDomainLayerSchema.md b/versioned_docs/version-v0.12/DataModels/DevLakeDomainLayerSchema.md

deleted file mode 100644

index 10c80d907a1..00000000000

--- a/versioned_docs/version-v0.12/DataModels/DevLakeDomainLayerSchema.md

+++ /dev/null

@@ -1,544 +0,0 @@

----

-title: "Domain Layer Schema"

-description: >

- DevLake Domain Layer Schema

-sidebar_position: 2

----

-

-## Summary

-

-This document describes the entities in DevLake's domain layer schema and their relationships.

-

-Data in the domain layer is transformed from the data in the tool layer. The tool layer schema is based on the data from specific tools such as Jira, GitHub, Gitlab, Jenkins, etc. The domain layer schema can be regarded as an abstraction of tool-layer schemas.

-

-The domain layer schema itself includes 2 logical layers: a `DWD` layer and a `DWM` layer. The DWD layer stores detailed data points, while the DWM layer lightly aggregates and processes DWD data to store more organized details or middle-level metrics.

-

-

-## Use Cases

-1. Users can make customized Grafana dashboards based on the domain layer schema.

-2. Contributors can refer to this data model to complete the ETL logic when adding new data source plugins.

-

-

-## Data Models

-

-This is the up-to-date domain layer schema for DevLake v0.10.x. Tables (entities) are categorized into 5 domains.

-1. Issue tracking domain entities: Jira issues, GitHub issues, GitLab issues, etc.

-2. Source code management domain entities: Git/GitHub/Gitlab commits and refs(tags and branches), etc.

-3. Code review domain entities: GitHub PRs, Gitlab MRs, etc.

-4. CI/CD domain entities: Jenkins jobs & builds, etc.

-5. Cross-domain entities: entities that map entities from different domains to break data isolation.

-

-

-### Schema Diagram

-

-

-When reading the schema, you'll notice that many tables' primary key is called `id`. Unlike an auto-increment id or a UUID, `id` is a string composed of several parts that uniquely identifies similar entities (e.g. repos) from different platforms (e.g. GitHub/GitLab) and allows them to co-exist in a single table.
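To make the composite `id` concrete, here is a minimal sketch that builds and splits an id of the form `github:GithubAccount:1:1234`, a value that appears in sample data elsewhere in these docs. The function names are hypothetical, and the reading of the parts (plugin, entity type, then source-specific identifiers joined by `:`) is an assumption inferred from that single example, not a documented contract.

```python
from typing import List, Tuple


def build_domain_id(plugin: str, entity: str, *parts: object) -> str:
    """Join the parts of a domain-layer id with ':' (assumed separator)."""
    return ":".join([plugin, entity, *(str(p) for p in parts)])


def split_domain_id(domain_id: str) -> Tuple[str, str, List[str]]:
    """Split an id into (plugin, entity, remaining parts).

    Assumes at least three ':'-separated parts, as in the sample value.
    """
    plugin, entity, rest = domain_id.split(":", 2)
    return plugin, entity, rest.split(":")


account_id = build_domain_id("github", "GithubAccount", 1, 1234)
print(account_id)
print(split_domain_id(account_id))
```

Keeping the platform and entity type inside the key is what would let rows from different tools share one table without colliding on numeric ids.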

-

-Tables that end with WIP are still under development.

-

-

-### Naming Conventions

-

-1. The name of a table is in plural form, e.g. boards, issues, etc.

-2. The name of a table that describes the relation between 2 entities takes the form [BigEntity in singular form]\_[SmallEntity in plural form], e.g. board_issues, sprint_issues, pull_request_comments, etc.

-3. Values of enum-type fields are in capital letters. E.g. [table.issues.type](https://merico.feishu.cn/docs/doccnvyuG9YpVc6lvmWkmmbZtUc#ZDCw9k) has 3 values: REQUIREMENT, BUG, INCIDENT. Values that are phrases, such as 'IN_PROGRESS' of [table.issues.status](https://merico.feishu.cn/docs/doccnvyuG9YpVc6lvmWkmmbZtUc#ZDCw9k), are separated with underscore '\_'.

-

-[Figure: DevLake Components]

-

-A DevLake installation typically consists of the following components:

-

-- Config UI: A handy user interface to create, trigger, and debug Blueprints. A Blueprint specifies the where (data connection), what (data scope), how (transformation rule), and when (sync frequency) of a data pipeline.

-- API Server: The main programmatic interface of DevLake.

-- Runner: The runner does all the heavy-lifting for executing tasks. In the default DevLake installation, it runs within the API Server, but DevLake provides a temporal-based runner (beta) for production environments.

-- Database: The database stores both DevLake's metadata and user data collected by data pipelines. DevLake supports MySQL and PostgreSQL as of v0.11.

-- Plugins: Plugins enable DevLake to collect and analyze dev data from any DevOps tools with an accessible API. DevLake community is actively adding plugins for popular DevOps tools, but if your preferred tool is not covered yet, feel free to open a GitHub issue to let us know or check out our doc on how to build a new plugin by yourself.

-- Dashboards: Dashboards deliver data and insights to DevLake users. A dashboard is simply a collection of SQL queries along with corresponding visualization configurations. DevLake's official dashboard tool is Grafana and pre-built dashboards are shipped in Grafana's JSON format. Users are welcome to swap for their own choice of dashboard/BI tool if desired.

-

-## Dataflow

-

-[Figure: DevLake Dataflow]

-

-A typical plugin's dataflow is illustrated below:

-

-1. The Raw layer stores the API responses from data sources (DevOps tools) in JSON. This saves developers' time if the raw data is to be transformed differently later on. Please note that communicating with data sources' APIs is usually the most time-consuming step.

-2. The Tool layer extracts raw data from JSONs into a relational schema that's easier to consume by analytical tasks. Each DevOps tool would have a schema that's tailored to their data structure, hence the name, the Tool layer.

-3. The Domain layer attempts to build a layer of abstraction on top of the Tool layer so that analytics logics can be re-used across different tools. For example, GitHub's Pull Request (PR) and GitLab's Merge Request (MR) are similar entities. They each have their own table name and schema in the Tool layer, but they're consolidated into a single entity in the Domain layer, so that developers only need to implement metrics like Cycle Time and Code Review Rounds once against the domain layer schema.

-

-## Principles

-

-1. Extensible: DevLake's plugin system allows users to integrate with any DevOps tool. DevLake also provides a dbt plugin that enables users to define their own data transformation and analysis workflows.

-2. Portable: DevLake has a modular design and provides multiple options for each module. Users of different setups can freely choose the right configuration for themselves.

-3. Robust: DevLake provides an SDK to help plugins efficiently and reliably collect data from data sources while respecting their API rate limits and constraints.

-

-## Project Metrics This Covers

-

-| Metric Name | Description |

-|:------------------------------------|:--------------------------------------------------------------------------------------------------|

-| Requirement Count | Number of issues with type "Requirement" |

-| Requirement Lead Time | Lead time of issues with type "Requirement" |

-| Requirement Delivery Rate | Ratio of delivered requirements to all requirements |

-| Requirement Granularity | Number of story points associated with an issue |

-| Bug Count | Number of issues with type "Bug" |

-

-When first visiting Grafana, you will be provided with a sample dashboard with some basic charts set up from the database.

-

-## Contents

-

-Section | Link

-:------------ | :-------------

-Logging In | [View Section](#logging-in)

-Viewing All Dashboards | [View Section](#viewing-all-dashboards)

-Customizing a Dashboard | [View Section](#customizing-a-dashboard)

-Dashboard Settings | [View Section](#dashboard-settings)

-Provisioning a Dashboard | [View Section](#provisioning-a-dashboard)

-Troubleshooting DB Connection | [View Section](#troubleshooting-db-connection)

-

-## Logging In

-

-Once the app is up and running, visit `http://localhost:3002` to view the Grafana dashboard.

-

-Default login credentials are:

-

-- Username: `admin`

-- Password: `admin`

-

-## Viewing All Dashboards

-

-To see all dashboards created in Grafana visit `/dashboards`

-

-Or, use the sidebar and click on **Manage**:

-

-

-

-

-## Customizing a Dashboard

-

-When viewing a dashboard, click the top bar of a panel, and go to **edit**

-

-

-

-**Edit Dashboard Panel Page:**

-

-

-

-### 1. Preview Area

-- **Top Left** is the variable select area (custom dashboard variables, used for switching projects, or grouping data)

-- **Top Right** we have a toolbar with some buttons related to the display of the data:

- - View data results in a table

- - Time range selector

- - Refresh data button

-- **The Main Area** will display the chart and should update in real time

-

-> Note: Data should refresh automatically, but may require a refresh using the button in some cases

-

-### 2. Query Builder

-Here we form the SQL query to pull data into our chart, from our database

-- Ensure the **Data Source** is the correct database

-

-

-

-- Select **Format as Table**, and **Edit SQL** buttons to write/edit queries as SQL

-

-

-

-- The **Main Area** is where the queries are written, and in the top right is the **Query Inspector** button (to inspect returned data)

-

-

-

-### 3. Main Panel Toolbar

-In the top right of the window are buttons for:

-- Dashboard settings (regarding entire dashboard)

-- Save/apply changes (to specific panel)

-

-### 4. Grafana Parameter Sidebar

-- Change chart style (bar/line/pie chart etc)

-- Edit legends, chart parameters

-- Modify chart styling

-- Other Grafana specific settings

-

-## Dashboard Settings

-

-When viewing a dashboard, click the settings icon to open the dashboard settings. Two sections are especially important:

-

-

-

-- Variables

- - Create variables, which are also built on SQL queries, to use throughout the dashboard panels

-

-

-

-- JSON Model

- - Copy `json` code here and save it to a new file in `/grafana/dashboards/` with a unique name in the `lake` repo. This will allow us to persist dashboards when we load the app

-

-

-

-## Provisioning a Dashboard

-

-To save a dashboard in the `lake` repo and load it:

-

-1. Create a dashboard in browser (visit `/dashboard/new`, or use sidebar)

-2. Save dashboard (in top right of screen)

-3. Go to dashboard settings (in top right of screen)

-4. Click on _JSON Model_ in sidebar

-5. Copy code into a new `.json` file in `/grafana/dashboards`

-

-## Troubleshooting DB Connection

-

-To ensure we have properly connected our database to the data source in Grafana, check database settings in `./grafana/datasources/datasource.yml`, specifically:

-- `database`

-- `user`

-- `secureJsonData/password`

diff --git a/versioned_docs/version-v0.12/UserManuals/Dashboards/_category_.json b/versioned_docs/version-v0.12/UserManuals/Dashboards/_category_.json

deleted file mode 100644

index 0db83c6e9b8..00000000000

--- a/versioned_docs/version-v0.12/UserManuals/Dashboards/_category_.json

+++ /dev/null

@@ -1,4 +0,0 @@

-{

- "label": "Dashboards",

- "position": 5

-}

diff --git a/versioned_docs/version-v0.12/UserManuals/TeamConfiguration.md b/versioned_docs/version-v0.12/UserManuals/TeamConfiguration.md

deleted file mode 100644

index b81df20001b..00000000000

--- a/versioned_docs/version-v0.12/UserManuals/TeamConfiguration.md

+++ /dev/null

@@ -1,188 +0,0 @@

----

-title: "Team Configuration"

-sidebar_position: 7

-description: >

- Team Configuration

----

-## What is 'Team Configuration' and how does it work?

-

-To organize and display metrics by `team`, Apache DevLake needs to know about the team configuration in an organization, specifically:

-

-1. What are the teams?

-2. Who are the users (unified identities)?

-3. Which users belong to a team?

-4. Which accounts (identities in specific tools) belong to the same user?

-

-Each of the questions above corresponds to a table in DevLake's schema, illustrated below:

-

-

-

-1. `teams` table stores all the teams in the organization.

-2. `users` table stores the organization's roster. An entry in the `users` table corresponds to a person in the org.

-3. `team_users` table stores which users belong to a team.

-4. `user_accounts` table stores which accounts belong to a user. An `account` refers to an identity in a DevOps tool and is automatically created when importing data from that tool. For example, a `user` may have a GitHub `account` as well as a Jira `account`.

-

-Apache DevLake uses a simple heuristic algorithm based on emails and names to automatically map accounts to users and populate the `user_accounts` table.

-When Apache DevLake cannot confidently map an `account` to a `user` due to insufficient information, it allows DevLake users to manually configure the mapping to ensure accuracy and integrity.

-

-## A step-by-step guide

-

-In the following sections, we'll walk through how to configure teams and create the five tables involved (`teams`, `users`, `team_users`, `accounts`, and `user_accounts`).

-The overall workflow is:

-

-1. Create the `teams` table

-2. Create the `users` and `team_users` tables

-3. Populate the `accounts` table via data collection

-4. Run a heuristic algorithm to populate the `user_accounts` table

-5. Manually update `user_accounts` when the algorithm can't catch everything

-

-Note:

-

-1. Please replace `/path/to/*.csv` with the absolute path of the CSV file you'd like to upload.

-2. Please replace `127.0.0.1:8080` with your actual Apache DevLake service IP and port number.

-

-## Step 1 - Create the `teams` table

-

-You can create the `teams` table by sending a PUT request to `/plugins/org/teams.csv` with a `teams.csv` file. To jumpstart the process, you can download a template `teams.csv` from `/plugins/org/teams.csv?fake_data=true`. Below are the detailed instructions:

-

-a. Download the template `teams.csv` file

-

- i. GET http://127.0.0.1:8080/plugins/org/teams.csv?fake_data=true (pasting the URL into your browser will download the template)

-

- ii. If you prefer using curl:

- curl --location --request GET 'http://127.0.0.1:8080/plugins/org/teams.csv?fake_data=true'

-

-

-b. Fill out `teams.csv` file and upload it to DevLake

-

- i. Fill out `teams.csv` with your org data. Please don't modify the column headers or the file suffix.

-

- ii. Upload `teams.csv` to DevLake with the following curl command:

- curl --location --request PUT 'http://127.0.0.1:8080/plugins/org/teams.csv' --form 'file=@"/path/to/teams.csv"'

-

- iii. The PUT request populates the `teams` table with data from the `teams.csv` file.

- You can connect to the database and verify the data in the `teams` table.

- See Appendix for how to connect to the database.

-

-

-

-
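For readers who would rather script the upload than call curl, the PUT request above can be sketched with Python's standard library. This is an illustration under stated assumptions, not a DevLake client: the function names `build_multipart` and `upload_csv` are hypothetical, the form field name `file` and the endpoint path are taken from the curl command, and the `text/csv` part type is an assumption.

```python
import urllib.request
import uuid
from typing import Tuple


def build_multipart(field: str, filename: str, content: bytes) -> Tuple[bytes, str]:
    """Encode one file as a multipart/form-data body; return (body, content type)."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: text/csv\r\n\r\n",  # assumed part type
        content,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    return body, f"multipart/form-data; boundary={boundary}"


def upload_csv(base_url: str, table: str, csv_path: str) -> int:
    """PUT a CSV file to /plugins/org/<table>.csv and return the HTTP status."""
    with open(csv_path, "rb") as f:
        content = f.read()
    body, content_type = build_multipart("file", f"{table}.csv", content)
    request = urllib.request.Request(
        f"{base_url}/plugins/org/{table}.csv",
        data=body,
        method="PUT",
        headers={"Content-Type": content_type},
    )
    with urllib.request.urlopen(request) as response:
        return response.status
```

A call such as `upload_csv("http://127.0.0.1:8080", "teams", "/path/to/teams.csv")` would mirror the curl command; as with curl, replace the address and path with your own values.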

-## Step 2 - Create the `users` and `team_users` tables

-

-You can create the `users` and `team_users` tables by sending a single PUT request to `/plugins/org/users.csv` with a `users.csv` file. To jumpstart the process, you can download a template `users.csv` from `/plugins/org/users.csv?fake_data=true`. Below are the detailed instructions:

-

-a. Download the template `users.csv` file

-

- i. GET http://127.0.0.1:8080/plugins/org/users.csv?fake_data=true (pasting the URL into your browser will download the template)

-

- ii. If you prefer using curl:

- curl --location --request GET 'http://127.0.0.1:8080/plugins/org/users.csv?fake_data=true'

-

-

-b. Fill out `users.csv` and upload it to DevLake

-

- i. Fill out `users.csv` with your org data. Please don't modify the column headers or the file suffix.

-

- ii. Upload `users.csv` to DevLake with the following curl command:

- curl --location --request PUT 'http://127.0.0.1:8080/plugins/org/users.csv' --form 'file=@"/path/to/users.csv"'

-

- iii. The PUT request populates the `users` table, along with the `team_users` table, with data from the `users.csv` file.

- You can connect to the database and verify these two tables.

-

-

-

-

-

-c. If you ever want to update the `team_users` or `users` table, simply upload the updated `users.csv` to DevLake again following step b.

-

-## Step 3 - Populate the `accounts` table via data collection

-

-The `accounts` table is automatically populated when you collect data from data sources like GitHub and Jira through DevLake.

-

-For example, the GitHub plugin creates one entry in the `accounts` table for each GitHub user involved in your repository.

-For demo purposes, we'll insert some mock data into the `accounts` table using SQL:

-

-```

-INSERT INTO `accounts` (`id`, `created_at`, `updated_at`, `_raw_data_params`, `_raw_data_table`, `_raw_data_id`, `_raw_data_remark`, `email`, `full_name`, `user_name`, `avatar_url`, `organization`, `created_date`, `status`)

-VALUES

- ('github:GithubAccount:1:1234', '2022-07-12 10:54:09.632', '2022-07-12 10:54:09.632', '{\"ConnectionId\":1,\"Owner\":\"apache\",\"Repo\":\"incubator-devlake\"}', '_raw_github_api_pull_request_reviews', 28, '', 'TyroneKCummings@teleworm.us', '', 'Tyrone K. Cummings', 'https://avatars.githubusercontent.com/u/101256042?u=a6e460fbaffce7514cbd65ac739a985f5158dabc&v=4', '', NULL, 0),

- ('jira:JiraAccount:1:629cdf', '2022-07-12 10:54:09.632', '2022-07-12 10:54:09.632', '{\"ConnectionId\":1,\"BoardId\":\"76\"}', '_raw_jira_api_users', 5, '', 'DorothyRUpdegraff@dayrep.com', '', 'Dorothy R. Updegraff', 'https://avatars.jiraxxxx158dabc&v=4', '', NULL, 0);

-

-```

-

-

-

-## Step 4 - Run a heuristic algorithm to populate `user_accounts` table

-

-Now that we have data in both the `users` and `accounts` tables, we can tell DevLake to infer the mappings between `users` and `accounts` with a simple heuristic algorithm based on names and emails.
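To make the idea of a name-and-email heuristic concrete, here is a minimal sketch of exact matching. It is an illustration only and not DevLake's actual implementation: the function names and the `id`, `name`, and `email` fields on users are assumptions, while the account fields `id`, `email`, `full_name`, and `user_name` follow the mock `accounts` rows shown in Step 3.

```python
from typing import Dict, List, Optional


def normalize(value: Optional[str]) -> str:
    """Trim and lowercase a value so comparisons ignore case and padding."""
    return (value or "").strip().lower()


def match_accounts_to_users(users: List[dict], accounts: List[dict]) -> Dict[str, str]:
    """Map account id -> user id by exact email first, then by exact name."""
    by_email = {normalize(u.get("email")): u["id"] for u in users if normalize(u.get("email"))}
    by_name = {normalize(u.get("name")): u["id"] for u in users if normalize(u.get("name"))}
    mapping: Dict[str, str] = {}
    for account in accounts:
        email = normalize(account.get("email"))
        if email in by_email:
            mapping[account["id"]] = by_email[email]
            continue
        # Fall back to either name field carried by the account.
        for field in ("full_name", "user_name"):
            name = normalize(account.get(field))
            if name in by_name:
                mapping[account["id"]] = by_name[name]
                break
    return mapping


# Hypothetical users; the accounts mirror the mock data from Step 3.
users = [
    {"id": "1", "name": "Tyrone K. Cummings", "email": "TyroneKCummings@teleworm.us"},
    {"id": "2", "name": "Dorothy R. Updegraff", "email": "someone.else@example.com"},
]
accounts = [
    {"id": "github:GithubAccount:1:1234", "email": "TyroneKCummings@teleworm.us",
     "full_name": "", "user_name": "Tyrone K. Cummings"},
    {"id": "jira:JiraAccount:1:629cdf", "email": "DorothyRUpdegraff@dayrep.com",
     "full_name": "", "user_name": "Dorothy R. Updegraff"},
]
mapping = match_accounts_to_users(users, accounts)
print(mapping)
```

The first account matches on email and the second only on name. Accounts that match neither are left unmapped.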

-

-a. Send an API request to DevLake to run the mapping algorithm

-

-```

-curl --location --request POST '127.0.0.1:8080/pipelines' \
---header 'Content-Type: application/json' \
---data-raw '{
-    "name": "test",
-    "plan": [
-        [
-            {
-                "plugin": "org",
-                "subtasks": ["connectUserAccountsExact"],
-                "options": {
-                    "connectionId": 1
-                }
-            }
-        ]
-    ]
-}'

-```

-

-b. After successful execution, you can verify the data in `user_accounts` in the database.
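If you trigger the mapping from a script rather than from the shell, the same request body can be assembled programmatically. The sketch below only builds and serializes the payload from the curl example above. The helper name `build_org_pipeline` is hypothetical, and sending the request is left to whichever HTTP client you prefer.

```python
import json


def build_org_pipeline(name: str, connection_id: int) -> dict:
    """Build the pipeline body from the curl example: one stage, one org task."""
    return {
        "name": name,
        "plan": [
            [
                {
                    "plugin": "org",
                    "subtasks": ["connectUserAccountsExact"],
                    "options": {"connectionId": connection_id},
                }
            ]
        ],
    }


payload = build_org_pipeline("test", 1)
body = json.dumps(payload, indent=2)
print(body)
```

The serialized `body` is what you would POST to `/pipelines` with a `Content-Type: application/json` header.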

-

-

-

-## Step 5 - Manually update `user_accounts` when the algorithm can't catch everything

-

-It is recommended to examine the generated `user_accounts` table after running the algorithm.

-We'll demonstrate how to manually update `user_accounts` when the mapping is inaccurate/incomplete in this section.

-To make manual verification easier, DevLake provides an API for users to download `user_accounts` as a CSV file.

-Alternatively, you can verify and modify `user_accounts` entirely with SQL; see Appendix for more info.

-

-a. GET http://127.0.0.1:8080/plugins/org/user_account_mapping.csv (pasting the URL into your browser will download the file). If you prefer using curl:

-```

-curl --location --request GET 'http://127.0.0.1:8080/plugins/org/user_account_mapping.csv'

-```

-

-

-

-b. If you find the mapping inaccurate or incomplete, you can modify the `user_account_mapping.csv` file and then upload it to DevLake.

-For example, here we change the `UserId` of row 'Id=github:GithubAccount:1:1234' in the `user_account_mapping.csv` file to 2.

-Then we upload the updated `user_account_mapping.csv` file with the following curl command:

-

-```

-curl --location --request PUT 'http://127.0.0.1:8080/plugins/org/user_account_mapping.csv' --form 'file=@"/path/to/user_account_mapping.csv"'

-```

-

-c. You can verify the data in the `user_accounts` table has been updated.
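The manual edit described in step b can also be scripted. This is a minimal sketch that rewrites the `UserId` of one row identified by its `Id`. The column names `Id` and `UserId` come from the example above, while the function name and the two-column sample content are assumptions; any other columns in a real export are passed through unchanged.

```python
import csv
import io


def set_user_id(csv_text: str, account_id: str, user_id: str) -> str:
    """Return CSV text with UserId replaced on the row whose Id matches."""
    reader = csv.DictReader(io.StringIO(csv_text))
    fieldnames = reader.fieldnames or []
    rows = list(reader)
    for row in rows:
        if row["Id"] == account_id:
            row["UserId"] = user_id
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


# Sample content (assumption): a real export may carry more columns.
original = "Id,UserId\ngithub:GithubAccount:1:1234,1\njira:JiraAccount:1:629cdf,2\n"
updated = set_user_id(original, "github:GithubAccount:1:1234", "2")
print(updated)
```

Write the returned text back to `user_account_mapping.csv` before uploading it with the PUT request shown in step b.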

-

-

-

-## Appendix A: How to connect to the database

-

-Here we use MySQL as an example. You can install database management tools like Sequel Ace, DataGrip, MySQL Workbench, etc.

-

-

-Or through the command line:

-

-```

-mysql -h

-

-When first visiting Grafana, you will be provided with a sample dashboard with some basic charts setup from the database.

-

-## Contents

-

-Section | Link

-:------------ | :-------------

-Logging In | [View Section](#logging-in)

-Viewing All Dashboards | [View Section](#viewing-all-dashboards)

-Customizing a Dashboard | [View Section](#customizing-a-dashboard)

-Dashboard Settings | [View Section](#dashboard-settings)

-Provisioning a Dashboard | [View Section](#provisioning-a-dashboard)

-Troubleshooting DB Connection | [View Section](#troubleshooting-db-connection)

-

-## Logging In

-

-Once the app is up and running, visit `http://localhost:3002` to view the Grafana dashboard.

-

-Default login credentials are:

-

-- Username: `admin`

-- Password: `admin`

-

-## Viewing All Dashboards

-

-To see all dashboards created in Grafana, visit `/dashboards`.

-

-Or, use the sidebar and click on **Manage**:

-

-

-

-

-## Customizing a Dashboard

-

-When viewing a dashboard, click the top bar of a panel and go to **edit**.

-

-

-

-**Edit Dashboard Panel Page:**

-

-

-

-### 1. Preview Area

-- **Top Left** is the variable select area (custom dashboard variables, used for switching projects, or grouping data)

-- **Top Right** is a toolbar with buttons related to the display of the data:

- - View data results in a table

- - Time range selector

- - Refresh data button

-- **The Main Area** will display the chart and should update in real time

-

-> Note: Data should refresh automatically, but in some cases you may need to click the refresh button.

-

-### 2. Query Builder

-Here we write the SQL query that pulls data from our database into the chart.

-- Ensure the **Data Source** is the correct database

-

-

-

-- Select the **Format as Table** and **Edit SQL** buttons to write or edit queries as SQL

-

-

-

-- The **Main Area** is where the queries are written, and in the top right is the **Query Inspector** button (to inspect returned data)

-

-

-

-### 3. Main Panel Toolbar

-In the top right of the window are buttons for:

-- Dashboard settings (regarding entire dashboard)

-- Save/apply changes (to specific panel)

-

-### 4. Grafana Parameter Sidebar

-- Change chart style (bar/line/pie chart etc)

-- Edit legends, chart parameters

-- Modify chart styling

-- Other Grafana specific settings

-

-## Dashboard Settings

-

-When viewing a dashboard, click on the settings icon to view the dashboard settings. Two sections are especially important:

-

-

-

-- Variables

- - Create variables, which are also built on SQL queries, to use throughout the dashboard panels

-

-

-

-- JSON Model

- - Copy the `json` code here and save it to a new file in `/grafana/dashboards/` with a unique name in the `lake` repo. This allows us to persist dashboards when we load the app

-

-

-

-## Provisioning a Dashboard

-

-To save a dashboard in the `lake` repo and load it:

-

-1. Create a dashboard in browser (visit `/dashboard/new`, or use sidebar)

-2. Save dashboard (in top right of screen)

-3. Go to dashboard settings (in top right of screen)

-4. Click on _JSON Model_ in sidebar

-5. Copy code into a new `.json` file in `/grafana/dashboards`
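Step 5 can be scripted once the JSON model has been copied out of Grafana. The following is a minimal sketch, assuming the model is already available as a Python dict. The helper name `save_dashboard` and the `-2`, `-3` suffix scheme for keeping file names unique are illustrative choices, not a convention of the `lake` repo.

```python
import json
import tempfile
from pathlib import Path


def save_dashboard(model: dict, dashboards_dir: Path, base_name: str) -> Path:
    """Write the JSON model to a new, uniquely named .json file and return its path."""
    dashboards_dir.mkdir(parents=True, exist_ok=True)
    candidate = dashboards_dir / f"{base_name}.json"
    suffix = 1
    # Never overwrite an existing dashboard: append -2, -3, ... until the name is free.
    while candidate.exists():
        suffix += 1
        candidate = dashboards_dir / f"{base_name}-{suffix}.json"
    candidate.write_text(json.dumps(model, indent=2), encoding="utf-8")
    return candidate


# Demonstration in a temporary directory standing in for the repo checkout.
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "grafana" / "dashboards"
    first = save_dashboard({"title": "Example"}, target, "example")
    second = save_dashboard({"title": "Example"}, target, "example")
    first_name, second_name = first.name, second.name
    saved_title = json.loads(first.read_text(encoding="utf-8"))["title"]

print(first_name, second_name)
```

Pointing `dashboards_dir` at the repo's `grafana/dashboards` directory would place the file where step 5 expects it.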

-

-## Troubleshooting DB Connection

-

-To ensure we have properly connected our database to the data source in Grafana, check database settings in `./grafana/datasources/datasource.yml`, specifically:

-- `database`

-- `user`

-- `secureJsonData/password`

diff --git a/versioned_docs/version-v0.12/UserManuals/Dashboards/_category_.json b/versioned_docs/version-v0.12/UserManuals/Dashboards/_category_.json

deleted file mode 100644

index 0db83c6e9b8..00000000000

--- a/versioned_docs/version-v0.12/UserManuals/Dashboards/_category_.json

+++ /dev/null

@@ -1,4 +0,0 @@

-{

- "label": "Dashboards",

- "position": 5

-}

diff --git a/versioned_docs/version-v0.12/UserManuals/TeamConfiguration.md b/versioned_docs/version-v0.12/UserManuals/TeamConfiguration.md

deleted file mode 100644

index b81df20001b..00000000000

--- a/versioned_docs/version-v0.12/UserManuals/TeamConfiguration.md

+++ /dev/null

@@ -1,188 +0,0 @@

----

-title: "Team Configuration"

-sidebar_position: 7

-description: >

- Team Configuration

----

-## What is 'Team Configuration' and how does it work?

-

-To organize and display metrics by `team`, Apache DevLake needs to know about the team configuration in an organization, specifically:

-

-1. What are the teams?

-2. Who are the users (unified identities)?

-3. Which users belong to a team?

-4. Which accounts (identities in specific tools) belong to the same user?

-

-Each of the questions above corresponds to a table in DevLake's schema, illustrated below:

-

-

-