Frigate in Proxmox LXC - Unprivileged with Intel iGPU (11th gen), USB Coral and Network share #5773

Replies: 31 comments 76 replies

---

Good work figuring this out, especially the iGPU hardware acceleration! I started going down the same rabbit hole trying to get HW acceleration working on my bare-metal Debian install with a Core i9-12900H and never got it working. Eventually I tried the beta version of Frigate and everything worked as it should. My best guess is that the Frigate 0.11 image was built before these newer Intel chips were fully supported. If you continue to have issues, try the beta. I have since migrated from bare metal to Proxmox, and I didn't have to do anything special to pass through the iGPU; I just looked at a few examples from the other discussions here to get it set up. Even H.265 is working.

---

Hi @Dougiebabe and @akmolina28, have you tried running 0.12.0 in an unprivileged LXC? I'm getting this error: For some reason (see #6075), 0.12 needs to change the ownership of these files to nobody, and it can't do that in an unprivileged environment.

---

Thanks for the guide @Dougiebabe. Would you happen to know if it is possible to store recordings on the host? I have tried mapping the group in the LXC (media) to a group on the host (media), to no avail. I think my problem is that the root user in the LXC, which Frigate uses, can't be mapped to my user 'media' on the host. Any suggestions would be much appreciated.

---

I created a big ZFS pool in Proxmox. That pool (pooldino, for the dinosaur spinning drives) holds the root volume for my Frigate container.

---

Thanks for this guide. I followed it carefully and got really far with my Frigate journey. I'm really new to the Frigate and Home Assistant world, and this is exactly what I needed. After following the steps for iGPU passthrough, it looks like it is working. I can even confirm that the OpenVINO install worked, as my inference speed is around 12 ms (vs. around 280 ms on the CPU). This is really good so far. Moving on: after adding the line below to the camera section of config.yml, `hwaccel_args: preset-vaapi`, it does seem to work, but I'm getting an error (screenshot below) on the Frigate System page. In the logs I see this:

Like I mentioned, I'm really new to this, and so far it took me 4 days just to get a decent config.yml that works well (it still needs more improvements!). Is this expected behavior? Can it be safely ignored? Is there a way to check what this error impacts in terms of hardware acceleration in the LXC container? TIA!

---

Hello, I've used this guide and it worked well with iGPU detectors. Now I just received an M.2 PCIe Coral, which I confirmed is detected by the Proxmox host. It looks like this guide only covers USB Corals, not M.2 PCIe ones. Does anyone know of a guide for Proxmox LXC with M.2 PCIe Coral passthrough? I'm still new and learning this stuff. Thank you!

---

The iGPU passthrough works with idmap. I also encountered a similar issue where my guest would show the render file mapped with group render and vainfo worked, but no apps/services could access it. The problem was that the render file needs to be mounted together with its directory; after mounting that, it works without any issues. Hope this helps. Oddly enough, I found many guides on iGPU passthrough for privileged containers that only mount the render file without the directory, and I remember it didn't need it before. I don't know when things changed, but I can confirm it works by mounting both the directory and the render file in the LXC conf.

---

I've updated the guide slightly, as there was a mistake in the NAS storage part where the group didn't actually have access to the NAS storage mounted on the host; the fix is in the fstab part. I had been using local storage in my own setup but have now swapped over to NAS storage for the recordings. I have also updated the Docker Compose part to the default Docker Compose, as I had missed the newer go2rtc parts for the newer 0.12 version. Everything has been running rock solid for me since moving this over to the unprivileged LXC, and I am also able to use the GPU for Plex transcoding in another LXC, which wasn't possible when using VMs.

---

First off, thanks for the great guide! But... I have been at this all day, and I absolutely cannot get the Coral USB to be recognised by Frigate unless I switch to a privileged container. The trouble is that if I do that, it breaks everything else Docker-related, including Portainer (agent). I'm fairly new to all this (5 days!?), but I have managed to get a few other VMs running in Proxmox, including OMV, Plex, Sonarr, Radarr, SABnzbd etc., and Frigate running fine with hardware decoding. It just WILL NOT find the Coral in an unprivileged container!! What info do you need!? TIA

---

Very good guide. One issue I keep having is that every time I reboot the Proxmox server, the USB bus assignment for the Coral device changes, usually between bus 004 and 003, which means I have to check and edit the conf file for the passthrough. Does anyone else get the same, and if so, what do you do to combat it?

---

I have one more issue that is perplexing me, and that is storing my Frigate recordings on a shared drive. I have been unable to get this to work, and I am not sure if it's due to an incorrect setup or possibly a permissions issue. Following the steps above, I added this line to my fstab file:

```
//192.168.1.110/frigate_network_storage /mnt/lxc_shares/frigate_network_storage cifs _netdev,noserverino,x-systemd.automount,noatime,uid=100000,gid=110000,dir_mode=0770,file_mode=0770,username=xxxxxx><frigate,password=xxxxxxxx 0 0
```

I have this in my container conf file:

```
mp0: /mnt/lxc_shares/frigate_network_storage/,mp=/opt/frigate/media
```

I added this volume to the Portainer stack:

```
- /opt/frigate/media:/media/frigate
```

In the frigate.yml file I added this so that the database is local and not on the network storage:

When I did all this, Frigate appeared to be running within Docker; however, I couldn't get the UI page to come up, it just gave me a spinning circle. For anyone with more Docker/Proxmox knowledge than me: is there a way to determine whether my config setup is incorrect or there's a permissions error somewhere? Any guidance or ideas would be appreciated.

---

After `username` you have a `><frigate` part; that doesn't look right. Is that in your fstab, or an error with copy/paste?

---

Still struggling to get my shared folder mounted; however, looking at this, wouldn't it suggest that it is mounted? What I am having trouble understanding is that the full path to the shared folder is //192.168.1.110/mnt/HomePool/Frigate/frigate_network_storage. How does it know that //192.168.1.110/frigate_network_storage would map to that? What would I need to change to make that work? I tried entering the full path, but it didn't like that and gave me an error message.

---

Great! This will come in useful for me in a week. I've been running Frigate in an LXC on Proxmox for a while now as a backup to BI. By any chance, do we know when the Frigate+ Docker images will be released? Thanks!

---

Hi, any suggestions? [EDIT] SOLVED!

---

Honestly, I can't thank you enough for this very helpful guide. It fixed every issue I had stumbled upon, much appreciated. 👍🏼

---

Hi. I'm new to Frigate and just installed it on Proxmox 8.1 in an LXC container with Docker. Everything works fine and I'm able to see the camera etc. BUT... once I enable recording I get this error message and I'm unable to record. I'm running Proxmox on a Dell OptiPlex with an Intel(R) Core(TM) i5-6500 CPU @ 3.20GHz. My camera is Dahua compatible (Asecam); it's a 2K IP PoE camera. This is the GPU that I have: I'm able to see the same display info inside the container as well. This is my config.yml: This is my docker compose: Any help in getting recording to work would be really helpful.

---

Things got a lot easier. This is not needed anymore. The following works right away:

---

Hi all, I've followed this guide (thanks!) to install Frigate on Proxmox. What should I do to upgrade Frigate from 0.13.1 to 0.13.2? AFAIK, I didn't use docker-compose. Thanks!

---

I am not sure where I went wrong. I followed the guide verbatim, but I get an error that the Frigate config file cannot be found: My Portainer stack config file: Config file (config.yml):

---

Did anybody try to pass through an AMD APU (integrated GPU) using this method? Is it even possible?

---

I have a Threadripper Pro, which does not have an iGPU. Do I pass nothing through and let software handle it?

---

Great tutorial! I wanted to add that there's now a script in tteck's Proxmox helper scripts to automate the Frigate LXC installation. See this discussion: tteck/Proxmox#2711

---

This has been very helpful. Since there have been a lot of bug reports with the 6.8 kernels, I would like to add that it is working with the Proxmox 8.2 kernel in both Ubuntu 22.04 and 24.04. However, in order to use the iGPU for inferencing, you will need to install the Intel Compute Runtime files into the Frigate container after bringing it up:

```
docker exec -ti frigate bash
cd "$(mktemp -d)"
wget https://github.com/intel/intel-graphics-compiler/releases/download/igc-1.0.16900.23/intel-igc-core_1.0.16900.23_amd64.deb
wget https://github.com/intel/intel-graphics-compiler/releases/download/igc-1.0.16900.23/intel-igc-opencl_1.0.16900.23_amd64.deb
wget https://github.com/intel/compute-runtime/releases/download/24.22.29735.20/intel-level-zero-gpu_1.3.29735.20_amd64.deb
wget https://github.com/intel/compute-runtime/releases/download/24.22.29735.20/intel-opencl-icd_24.22.29735.20_amd64.deb
wget https://github.com/intel/compute-runtime/releases/download/24.22.29735.20/libigdgmm12_22.3.19_amd64.deb
dpkg -i *.deb
```

Some warnings will show, but they can be ignored. It will work, somehow. Keep in mind that the latest Proxmox, the latest Ubuntu and the latest Frigate are all changing rapidly, so by the time you read this, it may not be necessary anymore. Try without it first. For now it seems to be an outdated dependency: #12260. This is on slightly different hardware (Intel Core Ultra

@Dougiebabe the format for setting this in the LXC configuration is new and confusing to me, but ChatGPT-4o explained it to me and helped me create a configuration that works for me. Just paste in one of the examples and explain to ChatGPT which IDs you need to map instead. Use these commands inside the LXC container to find out where to map from, and again outside the LXC container (i.e. on the Proxmox host) to find out where to map to:

```
getent group render
getent group video
```

In my case it would end up like this. It's a bit weird, but you need to map all 65536 IDs (0 to 65535) in order; that's why you need all those extra lines that seem illogical at first, instead of just the two you recognize.

The idmap is recommended because when your GPU is readable to all, it means everyone, even guests at any level inside or outside any container on the system, including unwanted guests from all over the world, can potentially spy on your screen without having to do some kind of privilege escalation exploit. I realize I'm responding to an old post, but this is still very relevant.
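The gap-filling described above can be sketched as a small POSIX shell function: it maps each chosen container GID 1:1 onto a host GID and covers every other ID with the default offset, so the whole 0 to 65535 range stays mapped. It assumes the Proxmox default of 65536 IDs starting at host ID 100000, and the GID pairs used with it (44, 104, 110) are placeholders only; use the numbers `getent group video` and `getent group render` print inside the CT and on the host. `gen_idmap` is a hypothetical helper, not the configuration the poster actually used.

```sh
# Hypothetical helper: print lxc.idmap lines that pass selected container GIDs
# through to chosen host GIDs. Arguments are CT_GID:HOST_GID pairs.
# Assumes the Proxmox default range: CT IDs 0-65535 -> host IDs 100000-165535.
gen_idmap() {
  base=100000
  size=65536
  cursor=0
  # UIDs stay on the default mapping
  echo "lxc.idmap: u 0 $base $size"
  # Walk the requested GIDs in ascending container-GID order
  for pair in $(printf '%s\n' "$@" | sort -t: -k1,1n); do
    ct_gid=${pair%%:*}
    host_gid=${pair##*:}
    # Cover the gap before this GID with the default offset mapping
    if [ "$ct_gid" -gt "$cursor" ]; then
      echo "lxc.idmap: g $cursor $((base + cursor)) $((ct_gid - cursor))"
    fi
    # Map this one GID directly onto the host GID
    echo "lxc.idmap: g $ct_gid $host_gid 1"
    cursor=$((ct_gid + 1))
  done
  # Cover everything left up to the end of the 65536-ID range
  if [ "$cursor" -lt "$size" ]; then
    echo "lxc.idmap: g $cursor $((base + cursor)) $((size - cursor))"
  fi
}
```

For example, `gen_idmap 44:44 104:104 >> /etc/pve/lxc/114.conf` (114 being a placeholder CT ID) would append six lines. That is not enough on its own: the host must also allow root to map those host GIDs, which on Proxmox means adding lines such as `root:44:1` and `root:104:1` to `/etc/subgid`.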

---

I would rather not chmod `renderD128`, but I cannot get it to work with idmaps either. Any help is appreciated!

---

First step is done: Frigate runs on Proxmox in Portainer, but I have some questions/problems:

System: 0.14.0-da913d8

---

Hoping someone can help. I'm following this guide, and when I get to the part about mounting an external share, I get the following error when I try to manually mount the share:

```
root@pve:~# mount /mnt/lxc_shares/frigate_network_storage
```

dmesg outputs the following:

I went through and was able to finish the rest of the install, and the mount point shows up under the container resources, but when I'm in Frigate there's no reference to the external Samba storage; it just shows the storage allocated from the root disk where it was installed. The external storage is a Samba CIFS share on OpenMediaVault, which is running as a VM on the same Proxmox server as Frigate.

---

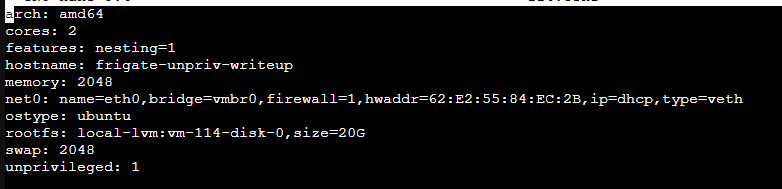

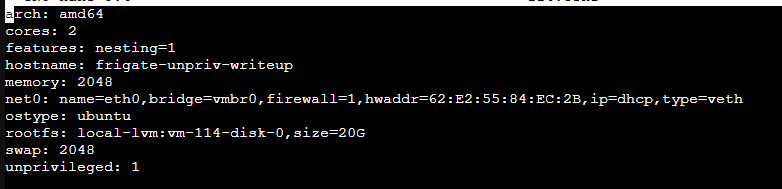

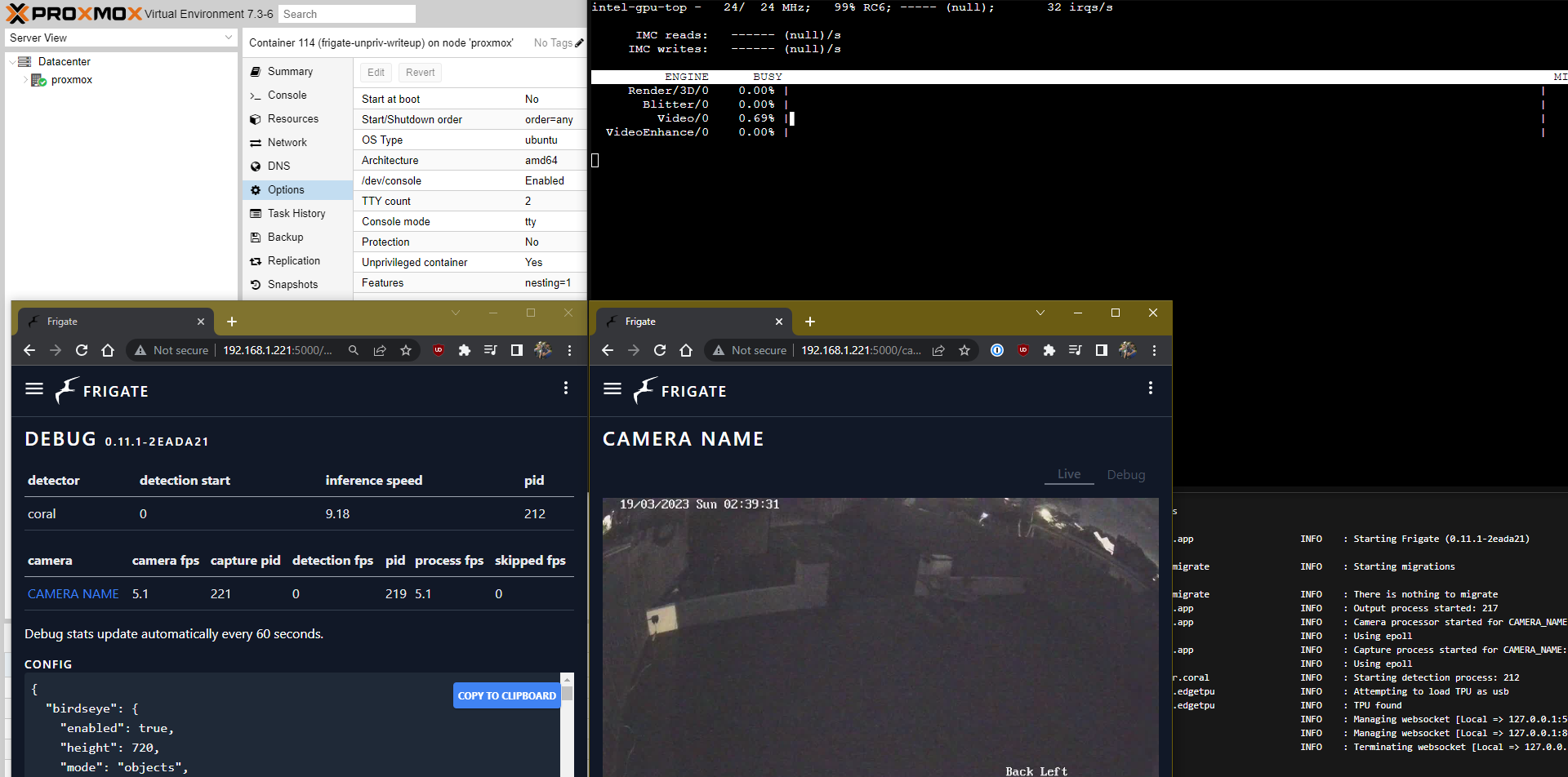

So this has taken me a little while to get working, and I'm not sure that it's 100% correct, so I'm open to feedback on this.

The reason I have tried to do this is that I want to use the iGPU for both Frigate and Plex on this newer 11th gen CPU. First I tried to do this in a VM with the iGPU passed through, and while this seemed to work OK for Frigate (although I did have some random crashes and /tmp filling up), as soon as I started transcoding on the iGPU it would freeze the GPU and I would have to restart the VM to get things working again.

From what I've read, this could be an issue with the newer 11th+ gen iGPUs not passing through properly? I'm not sure what the reason was, but I couldn't get Plex transcoding properly on this GPU.

I started reading up on LXC containers and found that you can share resources across containers, and that the container talks directly to the GPU without a virtual abstraction layer. Great!

First I got Frigate working in privileged containers and then Plex, and all was good: Frigate was working with HW accel and Plex was transcoding using HW accel as well. At this point I did some reading on privileged vs. unprivileged containers and saw that it's recommended to use unprivileged containers where possible, and with Plex being open to the Internet I was keen to do so.

So that is WHY I spent the last week or so trying to get this working. Here is the HOW.

!!! I give absolutely no warranty/deep support on what I write below. This write up is a mix of all kinds of information from all over the internet !!!

I'll split this up into a few sections:

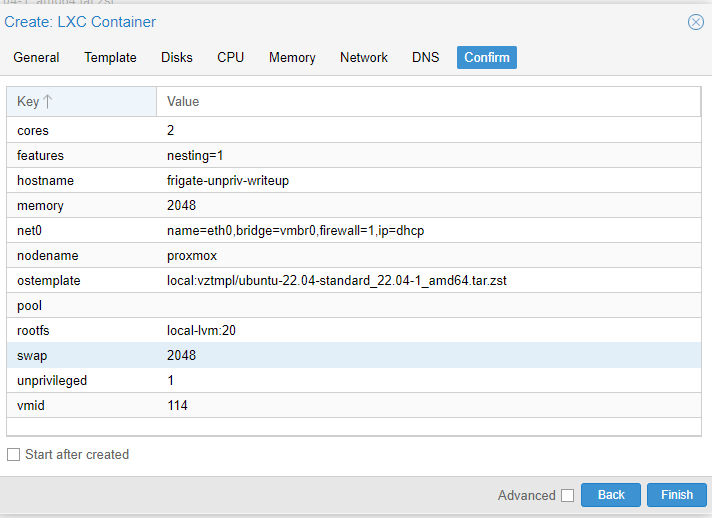

1) Setting up a Proxmox LXC Unpriv Container

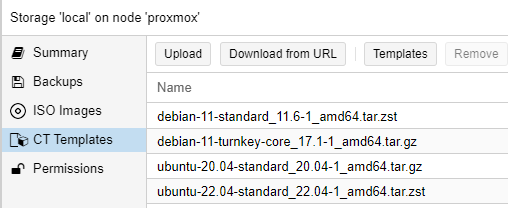

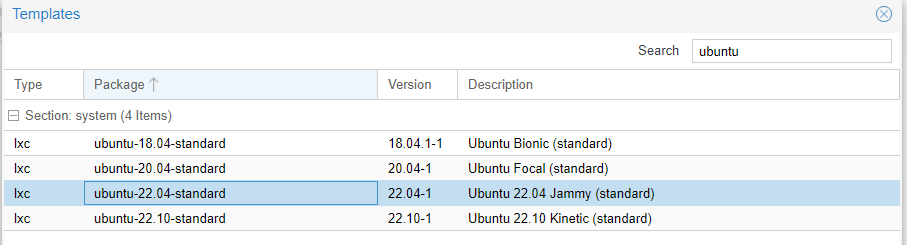

Log on to the Proxmox host --> go to 'Local' in the left-hand pane --> CT Templates --> Templates

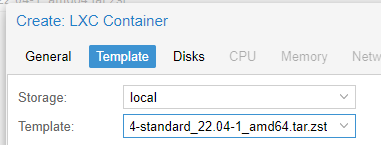

I prefer Ubuntu, so in this guide I will be using Ubuntu 22.04. I have also tested this on Debian 11 TurnKey Core and it worked, so other distributions should work too.

Click Templates --> search 'Ubuntu' --> download 22.04

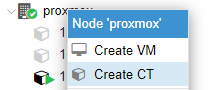

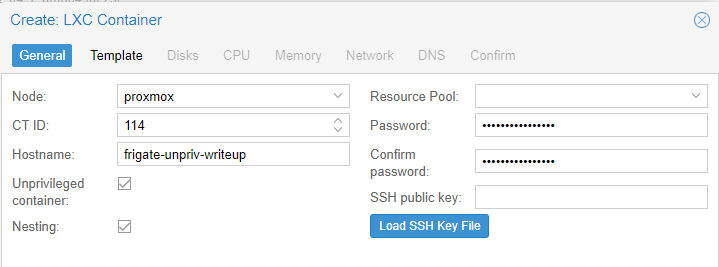

Create a new CT (LXC Container) by right clicking on your host

Give the CT a name

Leave Unpriv and Nesting ticked

Type a strong password (The password you use here is what you will use to logon/SSH to the CT)

Choose the template

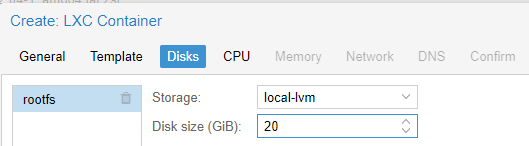

Give the CT a disk (this is used for the OS and any clips/recordings if you are NOT using a network share)

Assign the number of CPU cores and the amount of RAM you wish to use

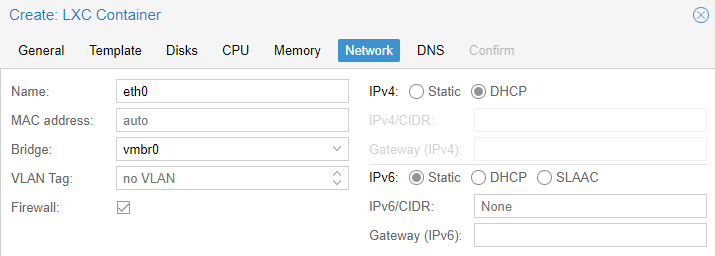

Assign the network (I always forget to change the network to DHCP)

Choose DNS settings

Confirm the CT

You now have a CT ready for us to configure

2) Passing through the USB Coral

If you don't have a USB coral you can skip this part. If you have an M.2/PCIe coral then these instructions will probably not work for you.

Console onto your Proxmox host

Type

```
lsusb
```

and you should see something like this. Note down the USB bus that the Coral is present on.

Type the following into the Proxmox console:

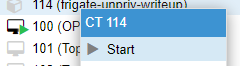

You should now be in the lxc folder and see a list of CT conf files. We need to edit the CT conf file that corresponds to the CT we made above; you can find the CT ID in Proxmox.

So I need to then type

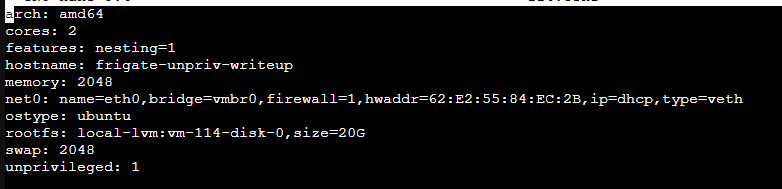

```
nano 114.conf
```

to edit the conf file for the CT I am working on (YOURS MAY DIFFER). We need to add the below to the conf file. REPLACE THE BUS NUMBER ON THE LAST LINE WITH YOUR OWN.

My understanding of the above lines:

`usb0: host=1a6e:089a,usb3=1`

`usb1: host=18d1:9302,usb3=1` - These are (I believe) the device IDs that the Coral can have, and I think they lock the device to the LXC

`lxc.cgroup2.devices.allow: c 189:* rwm` - This allows USB devices (character device major number 189) to be passed through to the LXC

`lxc.mount.entry: /dev/bus/usb/004 dev/bus/usb/004 none bind,optional,create=dir` - This mounts the device's bus directory into the LXC at the path dev/bus/usb/004
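A minimal sketch that ties these steps together: `coral_bus` reads `lsusb` output and prints the bus of the first device matching either Coral ID listed above, and `coral_lxc_conf` prints the four conf lines for a given bus. Both function names are hypothetical helpers, not part of Proxmox or Frigate, and the conf lines are exactly the ones this guide uses.

```sh
# Hypothetical helper: read lsusb output on stdin and print the bus number
# of the first device matching either Coral vendor:product ID.
coral_bus() {
  awk '/ID (1a6e:089a|18d1:9302)/ { print $2; exit }'
}

# Hypothetical helper: print this guide's Coral passthrough lines for BUS.
coral_lxc_conf() {
  bus="$1"
  # Refuse to print a half-filled mount entry if no bus was found
  if [ -z "$bus" ]; then
    echo "usage: coral_lxc_conf BUS" >&2
    return 1
  fi
  echo "usb0: host=1a6e:089a,usb3=1"
  echo "usb1: host=18d1:9302,usb3=1"
  echo "lxc.cgroup2.devices.allow: c 189:* rwm"
  echo "lxc.mount.entry: /dev/bus/usb/$bus dev/bus/usb/$bus none bind,optional,create=dir"
}
```

For example, `coral_lxc_conf "$(lsusb | coral_bus)" >> /etc/pve/lxc/114.conf` (114 being this guide's CT ID) would append the lines in one go. Re-running `lsusb | coral_bus` after a reboot is also a quick way to see whether the bus number has changed.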

Press Ctrl-X (and confirm with Y) to save the file. That's it! If you don't need the iGPU or network shares, you can move on to the Installing Frigate step!

3) Passing through the iGPU

This is the bit that got me for days and I'm still not sure that the way I am suggesting here is 100% correct.

I have tried using idmaps to map the video and render groups to the groups in the LXC, but it just would NOT work. Then, while looking into using udev (which worked in a priv but not an unpriv CT), I found a post on Reddit which fixed the problem.

So I suggest you read the below and understand what is happening before following the steps. Hopefully someone can tell me whether the chmod command below is OK? I think it allows everyone to use the renderD128 device, which should be a lower risk than using a priv CT?

The guide:

Console onto your Proxmox host

type

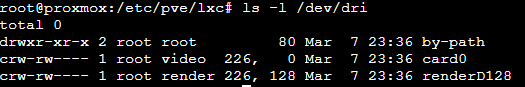

```
lspci -nnv | grep VGA
```

and confirm your GPU is detected. Type

```
ls -l /dev/dri
```

and you should get something like the below. Here you can see that the device 'card0' has a major number of 226 and a minor number of 0.

The renderD128 has a major number of 226 and a minor number of 128

We need to tell the container that it is allowed to use these devices.
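As a sketch, the allow lines can be derived straight from the `ls -l /dev/dri` listing, so the major and minor numbers always match your host. It assumes GNU `ls` output, where a device's major number is printed with a trailing comma (e.g. `226,`); `dri_allow_lines` is a hypothetical helper name.

```sh
# Hypothetical helper: turn `ls -l /dev/dri` output (stdin) into
# lxc.cgroup2.devices.allow lines. Only character devices (mode starting
# with "c") are used; the "total" line and the by-path directory are skipped.
dri_allow_lines() {
  awk '$1 ~ /^c/ {
    major = $5
    sub(/,$/, "", major)    # ls prints the major number as "226,"
    print "lxc.cgroup2.devices.allow: c " major ":" $6 " rwm"
  }'
}
```

Running `ls -l /dev/dri | dri_allow_lines` on the Proxmox host prints one `lxc.cgroup2.devices.allow` line per character device it finds, which also catches hosts where the card shows up as `card1` rather than `card0`.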

Type the following into the Proxmox console

You should now be in the lxc folder and see a list of CT conf files. We need to edit the CT conf file that corresponds to the CT we made above; you can find the CT ID in Proxmox.

So I need to then type

```
nano 114.conf
```

to edit the conf file for the CT I am working on (YOURS MAY DIFFER). We need to add the below to the conf file. YOURS COULD BE DIFFERENT TO THIS DEPENDING ON THE `ls -l /dev/dri` OUTPUT.

```
lxc.cgroup2.devices.allow: c 226:0 rwm
lxc.cgroup2.devices.allow: c 226:128 rwm
lxc.mount.entry: /dev/dri/renderD128 dev/dri/renderD128 none bind,optional,create=file 0 0
lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir
```

My understanding of the above:

`lxc.cgroup2.devices.allow: c 226:0 rwm`

`lxc.cgroup2.devices.allow: c 226:128 rwm` - These two are the devices we want the LXC to be allowed to use

`lxc.mount.entry: /dev/dri/renderD128 dev/dri/renderD128 none bind,optional,create=file 0 0`

`lxc.mount.entry: /dev/dri dev/dri none bind,optional,create=dir` - These two are the mount points within the LXC container that the devices will be mounted to

Press Ctrl-X (and confirm with Y) to save the file. Now, at this point the LXC should have the device mounted, but it won't have the correct permissions. There are a lot of guides that will then say you need to add `lxc.idmap:` entries to your conf file. I tried and tried and tried to get this working that way but failed every time. The device would show the correct group (render) and vainfo would show the device, but nothing within the CT could use it. Yes, I made sure the maps were correct, and yes, I added the required lines to the `/etc/subgid` file. It just didn't work. I would LOVE to get this working, so if someone has a suggestion please let me know. Anyway, this guide will be using the following command to get access to the GPU within the CT.

On the Proxmox host console type

```
chmod 666 /dev/dri/renderD128
```

This will give rw access to the renderD128 device for ALL users.

Now, I haven't tested this next part, as my host hasn't been rebooted yet, but the Reddit post above explains that the chmod won't persist across reboots and that the following is needed to reset it after a reboot:

and put the following in it

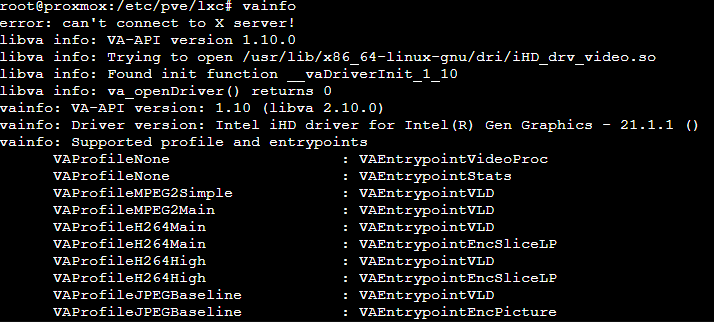
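The exact file contents the author used for the reboot fix are not preserved in the text here. As an alternative sketch (an assumption, not what the author tested), a udev rule on the host can apply the same 0666 mode every time the render node is created. The helper below only prints such a rule; `render_udev_rule` is a hypothetical name, and the rule carries the same security trade-off as the `chmod 666` above.

```sh
# Hypothetical helper: print a udev rule that gives the DRM render node
# (default renderD128) mode 0666, the same effect as chmod 666, at every boot.
render_udev_rule() {
  node="${1:-renderD128}"
  printf 'KERNEL=="%s", SUBSYSTEM=="drm", MODE="0666"\n' "$node"
}
```

For example, `render_udev_rule > /etc/udev/rules.d/99-render-node.rules` (the file name is arbitrary), followed by `udevadm control --reload-rules && udevadm trigger`, should apply it without waiting for a reboot.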

Now, I also have the i965 drivers installed on my Proxmox host. I can't confirm whether this is 100% needed, so I would ask that people skip this next command and see if it works; if not, you can come back and install them.

On the Proxmox host console

```
apt install vainfo
```

This should install the required drivers, and once installed, running

```
vainfo
```

should return something like this. That's it: at this point you should have the iGPU working in the CT. If you don't need network shares, you can move on to installing Frigate!
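To check from inside the CT that the render node is usable by the current user (root, in this guide), a small permission check like the one below can help. It only tests file permissions: the `lxc.cgroup2.devices.allow` lines are enforced when the device is actually opened, so `vainfo` remains the real test. `check_rw` is a hypothetical helper name.

```sh
# Hypothetical helper: report whether the current user can read and write
# the given path (e.g. /dev/dri/renderD128). Returns non-zero if not.
check_rw() {
  dev="$1"
  if [ -e "$dev" ] && [ -r "$dev" ] && [ -w "$dev" ]; then
    echo "ok: $dev"
  else
    echo "no access: $dev" >&2
    return 1
  fi
}
```

Inside the CT, `check_rw /dev/dri/renderD128` should print `ok: /dev/dri/renderD128` once the chmod (or a working idmap) is in place.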

4) Passing through the network share

This part was taken from this forum post

The post does a good job of explaining the issue of mounting a share in an unpriv container and how we are going to get around that in this instance.

Start the CT

Open the console to the LXC and do the following

Create the group "lxc_shares" with GID=10000 in the LXC which will match the GID=110000 on the PVE host.

```
groupadd -g 10000 lxc_shares
```

Add the user(s) that need access to the CIFS share to the group "lxc_shares".

(Frigate uses root as the user)

```
usermod -aG lxc_shares root
```

Shut down the LXC.
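The IDs in this section follow from how Proxmox maps IDs for unprivileged containers by default: ID N inside the CT appears as 100000 + N on the host. That is why the fstab entry in the next step uses `uid=100000` (root in the CT) and `gid=110000` (the `lxc_shares` group, GID 10000). A one-line sketch of the arithmetic, assuming the default offset; `host_id` is a hypothetical helper name.

```sh
# Hypothetical helper: host-side ID for a CT-side UID/GID under the default
# unprivileged mapping (offset 100000; check /etc/subuid and /etc/subgid).
host_id() {
  ct_id="$1"
  offset="${2:-100000}"
  echo $((offset + ct_id))
}
```

So `host_id 10000` gives the `gid=110000` used for the share, and `host_id 0` gives the `uid=100000` that container root is seen as.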

On Proxmox console

Create the mount point on the PVE host (this can be whatever you prefer)

```
mkdir -p /mnt/lxc_shares/frigate_network_storage
```

Add the NAS CIFS share to `/etc/fstab`:

```
nano /etc/fstab
```

!!!You will need to edit this line to include your own NAS path, username and password!!!

```
//IP_ADDRESS/SHARENAME /mnt/lxc_shares/frigate_network_storage cifs _netdev,noserverino,x-systemd.automount,noatime,uid=100000,gid=110000,dir_mode=0770,file_mode=0770,username=USERNAME,password=PASSWORD 0 0
```

This will then mount the share on the Proxmox host at startup.

To mount the share on the host NOW, type

```
mount /mnt/lxc_shares/frigate_network_storage
```

Type the following into the Proxmox console:

You should now be in the lxc folder and see a list of CT conf files. We need to edit the CT conf file that corresponds to the CT we made above; you can find the CT ID in Proxmox.

So I need to then type

```
nano 114.conf
```

to edit the conf file for the CT I am working on (YOURS MAY DIFFER). I then need to add the following to my conf file (YOURS MAY DIFFER DEPENDING ON THE PATH YOU CHOSE FOR THE FOLDER):

```
mp0: /mnt/lxc_shares/frigate_network_storage/,mp=/opt/frigate/media
```

At this point you should have some network storage mounted to the LXC container! If you followed along with everything else, you should also have a USB Coral and iGPU connected to the LXC container!
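Before starting the CT, it is worth confirming on the host that the CIFS share is really mounted; if it is not, the `mp0` bind mount just exposes the empty local directory, and recordings end up on the host disk instead. With `x-systemd.automount`, the path first appears in `/proc/mounts` as an `autofs` entry, so the sketch below also checks the filesystem type. It assumes the mount path contains no spaces (`/proc/mounts` escapes them as `\040`); `is_mounted` is a hypothetical helper name.

```sh
# Hypothetical helper: succeed only if PATH is mounted with filesystem type
# FSTYPE (default cifs) according to a mounts table (default /proc/mounts).
is_mounted() {
  target="${1%/}"              # drop a trailing slash so it matches the table
  fstype="${2:-cifs}"
  table="${3:-/proc/mounts}"
  awk -v t="$target" -v f="$fstype" \
    '$2 == t && $3 == f { found = 1 } END { exit !found }' "$table"
}
```

For example, `ls /mnt/lxc_shares/frigate_network_storage >/dev/null && is_mounted /mnt/lxc_shares/frigate_network_storage && echo mounted` (the `ls` triggers the automount first) should print `mounted` before you start the CT.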

5) Installing Frigate

I'm just going to copy and paste from my blog post about how to install Frigate and get it configured. If you already know how, you can skip this part, but if this is your first time trying, it might help you get it up and running. Inspiration for my blog post came from this blog post.

Start the container within Proxmox and open the 'Console' to the container

Once at the command prompt let's do some updates

Now let's get Docker installed

Once the above finishes, you can test Docker by running this command

I personally use and love Portainer, so we will be installing that to manage Docker and the Frigate container. If you wish to do this on the command line then that is fine, but the instructions from here on assume you are using Portainer.

Now we can open up another browser tab and head over to the Portainer GUI, the address is the same as your container but ending in :9000. In my example this is 192.168.1.59:9000 - You can get your IP address by typing

```
ip addr
```

If all went well, you should be greeted with the below new installation screen. Create a new password and note it down.

Once in Portainer, navigate around and check out the Containers List. Here you will see Portainer itself and the hello world test we ran earlier, here is where you will see all future Docker containers like Frigate once installed, feel free to delete the hello world container.

We now have Docker installed and running in an LXC container under Proxmox, managed via a web-based GUI.

Installing Frigate (Pre Reqs)

First we need to set up the folder structure that we will use for Frigate. This is how I set mine up; you are welcome to use your own folder structure.

On the container console type the following:

We are going to use the media folder for storing clips and recordings

We are going to use the storage folder for the db
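The exact commands for this step are not preserved here. A minimal sketch, assuming everything lives under `/opt/frigate` (the path this guide uses for `config.yml` and as the `mp0` mount target) and that the database folder is simply called `storage`; `make_frigate_dirs` is a hypothetical helper name.

```sh
# Hypothetical helper: create the media (clips/recordings) and storage (db)
# folders under a Frigate root directory, /opt/frigate by default.
make_frigate_dirs() {
  root="${1:-/opt/frigate}"
  # mkdir -p is a no-op for folders that already exist, e.g. the mp0 mount
  mkdir -p "$root/media" "$root/storage" || return 1
}
```

Running `make_frigate_dirs` inside the CT and then `cd /opt/frigate` leaves you in the right place for the config.yml step.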

While we are here we are going to make a basic config.yml file - this file contains all the settings for Frigate and you will spend a bit of time in here editing this file to suit your own setup

Make sure you are in `/opt/frigate` and type

```
nano config.yml
```

Edit the following in a text editor so that it suits your setup. Pay attention to the MQTT section and the camera section! Also, if you have an older CPU (<10th gen), pay attention to the HW accel section! Paste the edited config into the `config.yml` file and press Ctrl-X (and confirm with Y) to save the file. Now we have a config file located at `/opt/frigate/config.yml`.

Installing the Frigate Docker container

Go back to Portainer and click on 'Stacks' --> Add Stack

Give the stack a name and then copy the below into the text box

Edit the RTSP Password and hit Deploy

At this point you should now have Frigate up and running. You can go back to the Containers section in Portainer and see the Frigate container running. From here you can open the logs and check that you have no errors.

If you now go to the container http://IPADDRESS:5000 then you should be greeted with your new Frigate container!

That's it... I have followed along as I wrote the doc/guide, and I now have Frigate running in an LXC with a Coral, iGPU and network share.

I'd welcome any feedback or suggestions. I **THINK** this is more secure than running in a full priv LXC CT, but I welcome advice on that.
