diff --git a/CODE_OF_CONDUCT.md b/CODE_OF_CONDUCT.md

new file mode 100644

index 00000000..f19b8049

--- /dev/null

+++ b/CODE_OF_CONDUCT.md

@@ -0,0 +1,13 @@

+---

+title: "Contributor Code of Conduct"

+---

+

+As contributors and maintainers of this project,

+we pledge to follow [The Carpentries Code of Conduct][coc].

+

+Instances of abusive, harassing, or otherwise unacceptable behavior

+may be reported by following our [reporting guidelines][coc-reporting].

+

+

+[coc-reporting]: https://docs.carpentries.org/topic_folders/policies/incident-reporting.html

+[coc]: https://docs.carpentries.org/topic_folders/policies/code-of-conduct.html

diff --git a/LICENSE.md b/LICENSE.md

new file mode 100644

index 00000000..7632871f

--- /dev/null

+++ b/LICENSE.md

@@ -0,0 +1,79 @@

+---

+title: "Licenses"

+---

+

+## Instructional Material

+

+All Carpentries (Software Carpentry, Data Carpentry, and Library Carpentry)

+instructional material is made available under the [Creative Commons

+Attribution license][cc-by-human]. The following is a human-readable summary of

+(and not a substitute for) the [full legal text of the CC BY 4.0

+license][cc-by-legal].

+

+You are free:

+

+- to **Share**---copy and redistribute the material in any medium or format

+- to **Adapt**---remix, transform, and build upon the material

+

+for any purpose, even commercially.

+

+The licensor cannot revoke these freedoms as long as you follow the license

+terms.

+

+Under the following terms:

+

+- **Attribution**---You must give appropriate credit (mentioning that your work

+ is derived from work that is Copyright (c) The Carpentries and, where

+ practical, linking to ), provide a [link to the

+ license][cc-by-human], and indicate if changes were made. You may do so in

+ any reasonable manner, but not in any way that suggests the licensor endorses

+ you or your use.

+

+- **No additional restrictions**---You may not apply legal terms or

+ technological measures that legally restrict others from doing anything the

+ license permits. With the understanding that:

+

+Notices:

+

+* You do not have to comply with the license for elements of the material in

+ the public domain or where your use is permitted by an applicable exception

+ or limitation.

+* No warranties are given. The license may not give you all of the permissions

+ necessary for your intended use. For example, other rights such as publicity,

+ privacy, or moral rights may limit how you use the material.

+

+## Software

+

+Except where otherwise noted, the example programs and other software provided

+by The Carpentries are made available under the [OSI][osi]-approved [MIT

+license][mit-license].

+

+Permission is hereby granted, free of charge, to any person obtaining a copy of

+this software and associated documentation files (the "Software"), to deal in

+the Software without restriction, including without limitation the rights to

+use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies

+of the Software, and to permit persons to whom the Software is furnished to do

+so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

+

+## Trademark

+

+"The Carpentries", "Software Carpentry", "Data Carpentry", and "Library

+Carpentry" and their respective logos are registered trademarks of [Community

+Initiatives][ci].

+

+[cc-by-human]: https://creativecommons.org/licenses/by/4.0/

+[cc-by-legal]: https://creativecommons.org/licenses/by/4.0/legalcode

+[mit-license]: https://opensource.org/licenses/mit-license.html

+[ci]: https://communityin.org/

+[osi]: https://opensource.org

diff --git a/basic-targets.md b/basic-targets.md

new file mode 100644

index 00000000..1b0e1820

--- /dev/null

+++ b/basic-targets.md

@@ -0,0 +1,298 @@

+---

+title: 'First targets Workflow'

+teaching: 10

+exercises: 2

+---

+

+:::::::::::::::::::::::::::::::::::::: questions

+

+- What are best practices for organizing analyses?

+- What is a `_targets.R` file for?

+- What is the content of the `_targets.R` file?

+- How do you run a workflow?

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: objectives

+

+- Create a project in RStudio

+- Explain the purpose of the `_targets.R` file

+- Write a basic `_targets.R` file

+- Use a `_targets.R` file to run a workflow

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: {.instructor}

+

+Episode summary: First chance to get hands dirty by writing a very simple workflow

+

+:::::::::::::::::::::::::::::::::::::

+

+

+

+## Create a project

+

+### About projects

+

+`targets` uses the "project" concept for organizing analyses: all of the files needed for a given project are put in a single folder, the project folder.

+The project folder has additional subfolders for organization, such as folders for data, code, and results.

+
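+As a rough sketch of such a layout (the folder names `data`, `R`, and `results` and the project name `example-project` are made up for illustration, and the project is built in a temporary folder so nothing on your Desktop is touched), the subfolders could be created from R like this:
+
+``` r
+# Build an example project layout inside a temporary folder
+project_dir <- file.path(tempdir(), "example-project")
+
+# Hypothetical subfolders for data, code, and results
+subfolders <- c("data", "R", "results")
+
+for (folder in subfolders) {
+  dir.create(
+    file.path(project_dir, folder),
+    recursive = TRUE,
+    showWarnings = FALSE
+  )
+}
+
+# List the subfolders that now exist in the project folder
+list.files(project_dir)
+```
+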

+Using projects makes it straightforward to re-orient yourself if you return to an analysis after time spent elsewhere.

+This wouldn't be a problem if we only ever worked on one thing at a time until completion, but that is almost never the case.

+It is hard to remember what you were doing when you come back to a project after working on something else (a phenomenon called "context switching").

+By using a standardized organization system, you will reduce confusion and lost time... in other words, you are increasing reproducibility!

+

+This workshop will use RStudio, since it also works well with the project organization concept.

+

+### Create a project in RStudio

+

+Let's start a new project using RStudio.

+

+Click "File", then select "New Project".

+

+This will open the New Project Wizard, a set of menus to help you set up the project.

+

+{alt="Screenshot of RStudio New Project Wizard menu"}

+

+In the Wizard, click the first option, "New Directory", since we are making a brand-new project from scratch.

+Click "New Project" in the next menu.

+In "Directory name", enter a name that helps you remember the purpose of the project, such as "targets-demo" (follow best practices for naming files and folders).

+Under "Create project as a subdirectory of...", click the "Browse" button to select the directory where the project should go.

+We recommend putting it on your Desktop so you can easily find it.

+

+You can leave "Create a git repository" and "Use renv with this project" unchecked, but these are both excellent tools to improve reproducibility, and you should consider learning them and using them in the future, if you don't already.

+They can be enabled at any later time, so you don't need to worry about trying to use them immediately.

+

+Once you work through these steps, your RStudio session should look like this:

+

+{alt="Screenshot of RStudio with a newly created project called 'targets-demo' open containing a single file, 'targets-demo.Rproj'"}

+

+Our project now contains a single file, created by RStudio: `targets-demo.Rproj`. You should not edit this file by hand. Its purpose is to tell RStudio that this is a project folder and to store some RStudio settings (if you use version-control software, it is OK to commit this file). Also, you can open the project by double clicking on the `.Rproj` file in your file explorer (try it by quitting RStudio then navigating in your file browser to your Desktop, opening the "targets-demo" folder, and double clicking `targets-demo.Rproj`).

+

+OK, now that our project is set up, we are ready to start using `targets`!

+

+## Create a `_targets.R` file

+

+Every `targets` project must include a special file called `_targets.R` in the main project folder (the "project root").

+The `_targets.R` file includes the specification of the workflow: directions for R to run your analysis, kind of like a recipe.

+By using the `_targets.R` file, you won't have to remember to run specific scripts in a certain order.

+Instead, R will do it for you (more reproducibility points)!

+

+### Anatomy of a `_targets.R` file

+

+We will now start to write a `_targets.R` file. Fortunately, `targets` comes with a function to help us do this.

+

+In the R console, first load the `targets` package with `library(targets)`, then run the command `tar_script()`.

+

+

+``` r

+library(targets)

+tar_script()

+```

+

+Nothing will happen in the console, but in the file viewer, you should see a new file, `_targets.R`, appear. Open it using the File menu or by clicking on it.

+

+We can see this default `_targets.R` file includes three main parts:

+

+- Loading packages with `library()`

+- Defining a custom function with `function()`

+- Defining a list with `list()`.

+

+The last part, the list, is the most important part of the `_targets.R` file.

+It defines the steps in the workflow.

+The `_targets.R` file must always end with this list.

+

+Furthermore, each item in the list is a call of the `tar_target()` function.

+The first argument of `tar_target()` is the name of the target to build, and the second argument is the command used to build it.

+Note that the name of the target is **unquoted**, that is, it is written without any surrounding quotation marks.

+
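+As a minimal sketch of that structure (the target names `numbers` and `total` are made up for illustration, and this is not the file that `tar_script()` generates), a `_targets.R` file could look something like this:
+
+``` r
+library(targets)
+
+# The file ends with a list of targets
+list(
+  # First argument: the unquoted target name; second argument: the command
+  tar_target(numbers, c(1, 2, 3)),
+  # A command can use an earlier target by referring to its name
+  tar_target(total, sum(numbers))
+)
+```
+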

+## Set up `_targets.R` file to run example analysis

+

+### Background: non-`targets` version

+

+We will use this template to start building our analysis of bill shape in penguins.

+First though, to get familiar with the functions and packages we'll use, let's run the code like you would in a "normal" R script without using `targets`.

+

+Recall that we are using the `palmerpenguins` R package to obtain the data.

+This package actually includes two variations of the dataset: one is an external CSV file with the raw data, and another is the cleaned data loaded into R.

+In real life you probably have externally stored raw data, so **let's use the raw penguin data** as the starting point for our analysis too.

+

+The `path_to_file()` function in `palmerpenguins` provides the path to the raw data CSV file (it is inside the `palmerpenguins` R package source code that you downloaded to your computer when you installed the package).

+

+

+``` r

+library(palmerpenguins)

+

+# Get path to CSV file

+penguins_csv_file <- path_to_file("penguins_raw.csv")

+

+penguins_csv_file

+```

+

+``` output

+[1] "/home/runner/.local/share/renv/cache/v5/linux-ubuntu-jammy/R-4.4/x86_64-pc-linux-gnu/palmerpenguins/0.1.1/6c6861efbc13c1d543749e9c7be4a592/palmerpenguins/extdata/penguins_raw.csv"

+```

+

+We will use the `tidyverse` set of packages for loading and manipulating the data. We don't have time to cover all the details about using `tidyverse` now, but if you want to learn more about it, please see the ["Manipulating, analyzing and exporting data with tidyverse" lesson](https://datacarpentry.org/R-ecology-lesson/03-dplyr.html).

+

+Let's load the data with `read_csv()`.

+

+

+``` r

+library(tidyverse)

+

+# Read CSV file into R

+penguins_data_raw <- read_csv(penguins_csv_file)

+

+penguins_data_raw

+```

+

+

+``` output

+Rows: 344 Columns: 17

+── Column specification ────────────────────────────────────────────────────────

+Delimiter: ","

+chr (9): studyName, Species, Region, Island, Stage, Individual ID, Clutch C...

+dbl (7): Sample Number, Culmen Length (mm), Culmen Depth (mm), Flipper Leng...

+date (1): Date Egg

+

+ℹ Use `spec()` to retrieve the full column specification for this data.

+ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

+```

+

+``` output

+# A tibble: 344 × 17

+ studyName `Sample Number` Species Region Island Stage `Individual ID`

+

+ 1 PAL0708 1 Adelie Penguin… Anvers Torge… Adul… N1A1

+ 2 PAL0708 2 Adelie Penguin… Anvers Torge… Adul… N1A2

+ 3 PAL0708 3 Adelie Penguin… Anvers Torge… Adul… N2A1

+ 4 PAL0708 4 Adelie Penguin… Anvers Torge… Adul… N2A2

+ 5 PAL0708 5 Adelie Penguin… Anvers Torge… Adul… N3A1

+ 6 PAL0708 6 Adelie Penguin… Anvers Torge… Adul… N3A2

+ 7 PAL0708 7 Adelie Penguin… Anvers Torge… Adul… N4A1

+ 8 PAL0708 8 Adelie Penguin… Anvers Torge… Adul… N4A2

+ 9 PAL0708 9 Adelie Penguin… Anvers Torge… Adul… N5A1

+10 PAL0708 10 Adelie Penguin… Anvers Torge… Adul… N5A2

+# ℹ 334 more rows

+# ℹ 10 more variables: `Clutch Completion` , `Date Egg` ,

+# `Culmen Length (mm)` , `Culmen Depth (mm)` ,

+# `Flipper Length (mm)` , `Body Mass (g)` , Sex ,

+# `Delta 15 N (o/oo)` , `Delta 13 C (o/oo)` , Comments

+```

+

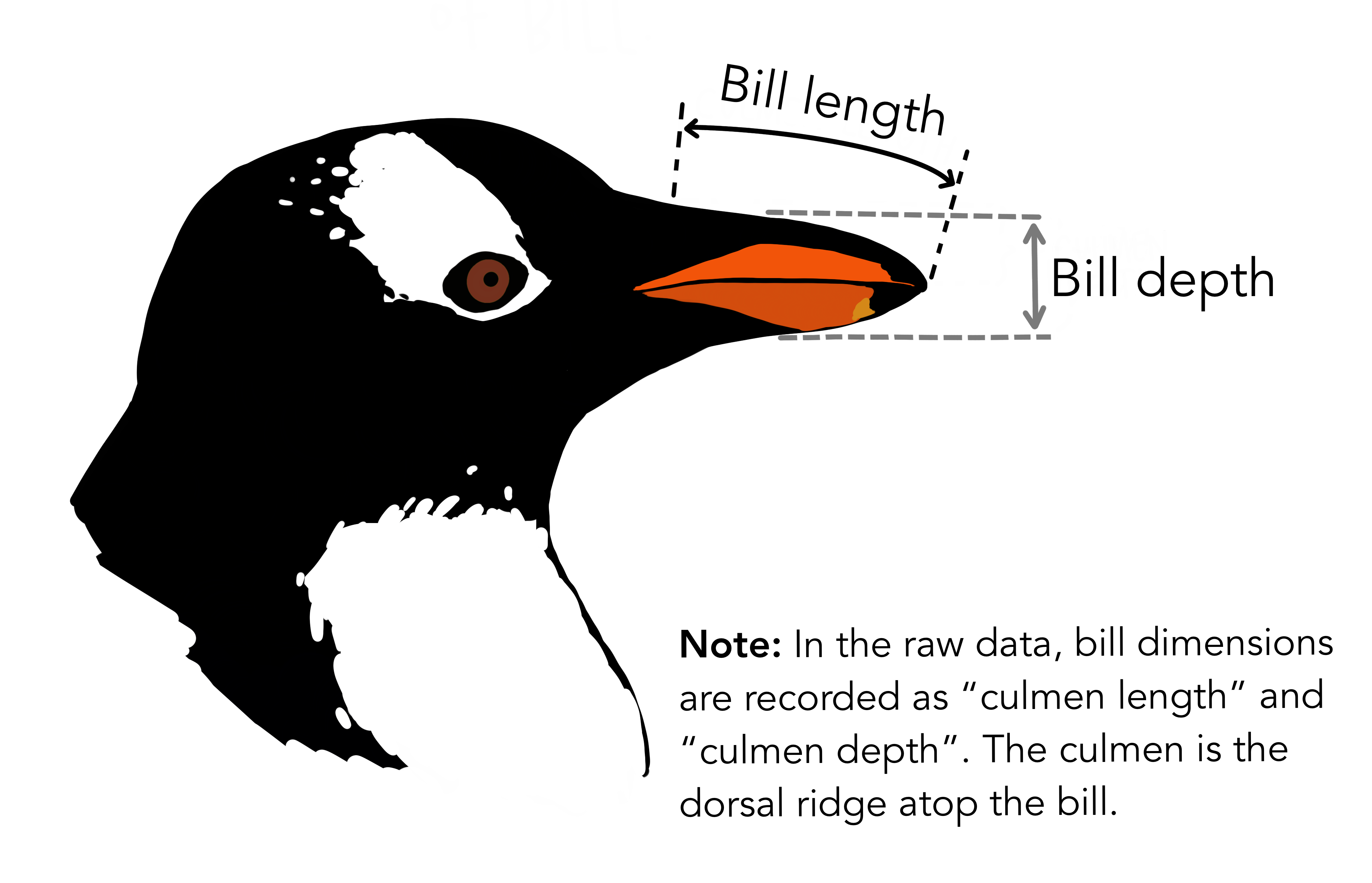

+We see the raw data has some awkward column names with spaces (these are hard to type out and can easily lead to mistakes in the code), and far more columns than we need.

+For the purposes of this analysis, we only need species name, bill length, and bill depth.

+In the raw data, the rather technical term "culmen" is used to refer to the bill.

+

+

+

+Let's clean up the data to make it easier to use for downstream analyses.

+We will also remove any rows with missing data, because this could cause errors for some functions later.

+

+

+``` r

+# Clean up raw data

+penguins_data <- penguins_data_raw |>

+ # Rename columns for easier typing and

+ # subset to only the columns needed for analysis

+ select(

+ species = Species,

+ bill_length_mm = `Culmen Length (mm)`,

+ bill_depth_mm = `Culmen Depth (mm)`

+ ) |>

+ # Delete rows with missing data

+ remove_missing(na.rm = TRUE)

+

+penguins_data

+```

+

+``` output

+# A tibble: 342 × 3

+ species bill_length_mm bill_depth_mm

+

+ 1 Adelie Penguin (Pygoscelis adeliae) 39.1 18.7

+ 2 Adelie Penguin (Pygoscelis adeliae) 39.5 17.4

+ 3 Adelie Penguin (Pygoscelis adeliae) 40.3 18

+ 4 Adelie Penguin (Pygoscelis adeliae) 36.7 19.3

+ 5 Adelie Penguin (Pygoscelis adeliae) 39.3 20.6

+ 6 Adelie Penguin (Pygoscelis adeliae) 38.9 17.8

+ 7 Adelie Penguin (Pygoscelis adeliae) 39.2 19.6

+ 8 Adelie Penguin (Pygoscelis adeliae) 34.1 18.1

+ 9 Adelie Penguin (Pygoscelis adeliae) 42 20.2

+10 Adelie Penguin (Pygoscelis adeliae) 37.8 17.1

+# ℹ 332 more rows

+```

+

+That's better!

+

+### `targets` version

+

+What does this look like using `targets`?

+

+The biggest difference is that we need to **put each step of the workflow into the list at the end**.

+

+We also define a custom function for the data cleaning step.

+That is because the list of targets at the end **should look like a high-level summary of your analysis**.

+You want to avoid lengthy chunks of code when defining the targets; instead, put that code in the custom functions.

+The other steps (setting the file path and loading the data) are each just one function call so there's not much point in putting those into their own custom functions.

+

+Finally, each step in the workflow is defined with the `tar_target()` function.

+

+

+``` r

+library(targets)

+library(tidyverse)

+library(palmerpenguins)

+

+clean_penguin_data <- function(penguins_data_raw) {

+ penguins_data_raw |>

+ select(

+ species = Species,

+ bill_length_mm = `Culmen Length (mm)`,

+ bill_depth_mm = `Culmen Depth (mm)`

+ ) |>

+ remove_missing(na.rm = TRUE)

+}

+

+list(

+ tar_target(penguins_csv_file, path_to_file("penguins_raw.csv")),

+ tar_target(penguins_data_raw, read_csv(

+ penguins_csv_file, show_col_types = FALSE)),

+ tar_target(penguins_data, clean_penguin_data(penguins_data_raw))

+)

+```

+

+We set `show_col_types = FALSE` in `read_csv()` because we know from the earlier code that the column types were set correctly by default (character for species and numeric for bill length and depth), so we don't need to see the message it would otherwise print.

+

+## Run the workflow

+

+Now that we have a workflow, we can run it with the `tar_make()` function.

+Try running it, and you should see something like this:

+

+

+``` r

+tar_make()

+```

+

+``` output

+▶ dispatched target penguins_csv_file

+● completed target penguins_csv_file [0.001 seconds, 190 bytes]

+▶ dispatched target penguins_data_raw

+● completed target penguins_data_raw [0.188 seconds, 10.403 kilobytes]

+▶ dispatched target penguins_data

+● completed target penguins_data [0.007 seconds, 1.609 kilobytes]

+▶ ended pipeline [0.341 seconds]

+```

+

+Congratulations, you've run your first workflow with `targets`!

+
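+To check what the workflow produced, one option (a sketch, assuming the pipeline above has just finished running in this project) is to read a finished target back into the R session with `tar_read()`:
+
+``` r
+library(targets)
+
+# Return the stored value of the penguins_data target
+tar_read(penguins_data)
+```
+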

+::::::::::::::::::::::::::::::::::::: keypoints

+

+- Projects help keep our analyses organized so we can easily re-run them later

+- Use the RStudio Project Wizard to create projects

+- The `_targets.R` file is a special file that must be included in all `targets` projects, and defines the workflow

+- Use `tar_script()` to create a default `_targets.R` file

+- Use `tar_make()` to run the workflow

+

+::::::::::::::::::::::::::::::::::::::::::::::::

diff --git a/branch.md b/branch.md

new file mode 100644

index 00000000..e982fd81

--- /dev/null

+++ b/branch.md

@@ -0,0 +1,524 @@

+---

+title: 'Branching'

+teaching: 10

+exercises: 2

+---

+

+:::::::::::::::::::::::::::::::::::::: questions

+

+- How can we specify many targets without typing everything out?

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: objectives

+

+- Be able to specify targets using branching

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: instructor

+

+Episode summary: Show how to use branching

+

+:::::::::::::::::::::::::::::::::::::

+

+

+

+## Why branching?

+

+One of the major strengths of `targets` is the ability to define many targets from a single line of code ("branching").

+This not only saves you typing, it also **reduces the risk of errors** since there is less chance of making a typo.

+

+## Types of branching

+

+There are two types of branching, **dynamic branching** and **static branching**.

+"Branching" refers to the idea that you can provide a single specification for how to make targets (the "pattern"), and `targets` generates multiple targets from it ("branches").

+"Dynamic" means that the branches that result from the pattern do not have to be defined ahead of time---they are a dynamic result of the code.

+

+In this workshop, we will only cover dynamic branching since it is generally easier to write (static branching requires use of [meta-programming](https://books.ropensci.org/targets/static.html#metaprogramming), an advanced topic). For more information about each and when you might want to use one or the other (or some combination of the two), [see the `targets` package manual](https://books.ropensci.org/targets/dynamic.html).

+

+## Example without branching

+

+To see how this works, let's continue our analysis of the `palmerpenguins` dataset.

+

+**Our hypothesis is that bill depth decreases with bill length.**

+We will test this hypothesis with a linear model.

+

+For example, this is a model of bill depth dependent on bill length:

+

+

+``` r

+lm(bill_depth_mm ~ bill_length_mm, data = penguins_data)

+```

+

+We can add this to our pipeline. We will call it the `combined_model` because it combines all the species together without distinction:

+

+

+``` r

+source("R/packages.R")

+source("R/functions.R")

+

+tar_plan(

+ # Load raw data

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),

+ read_csv(!!.x, show_col_types = FALSE)

+ ),

+ # Clean data

+ penguins_data = clean_penguin_data(penguins_data_raw),

+ # Build model

+ combined_model = lm(

+ bill_depth_mm ~ bill_length_mm,

+ data = penguins_data

+ )

+)

+```

+

+

+``` output

+✔ skipped target penguins_data_raw_file

+✔ skipped target penguins_data_raw

+✔ skipped target penguins_data

+▶ dispatched target combined_model

+● completed target combined_model [0.024 seconds, 11.201 kilobytes]

+▶ ended pipeline [0.273 seconds]

+```

+

+Let's have a look at the model. We will use the `glance()` function from the `broom` package. Unlike base R `summary()`, this function returns output as a tibble (the tidyverse equivalent of a dataframe), which as we will see later is quite useful for downstream analyses.

+

+

+``` r

+library(broom)

+tar_load(combined_model)

+glance(combined_model)

+```

+

+``` output

+# A tibble: 1 × 12

+ r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance df.residual nobs

+

+1 0.0552 0.0525 1.92 19.9 0.0000112 1 -708. 1422. 1433. 1256. 340 342

+```

+

+Notice the small *P*-value.

+This seems to indicate that the model is highly significant.

+

+But wait a moment... is this really an appropriate model? Recall that there are three species of penguins in the dataset. It is possible that the relationship between bill depth and length **varies by species**.

+

+We should probably test some alternative models.

+These could include models that add a parameter for species, or add an interaction effect between species and bill length.

+

+Now our workflow is getting more complicated. This is what a workflow for such an analysis might look like **without branching** (make sure to add `library(broom)` to `packages.R`):

+

+

+``` r

+source("R/packages.R")

+source("R/functions.R")

+

+tar_plan(

+ # Load raw data

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),

+ read_csv(!!.x, show_col_types = FALSE)

+ ),

+ # Clean data

+ penguins_data = clean_penguin_data(penguins_data_raw),

+ # Build models

+ combined_model = lm(

+ bill_depth_mm ~ bill_length_mm,

+ data = penguins_data

+ ),

+ species_model = lm(

+ bill_depth_mm ~ bill_length_mm + species,

+ data = penguins_data

+ ),

+ interaction_model = lm(

+ bill_depth_mm ~ bill_length_mm * species,

+ data = penguins_data

+ ),

+ # Get model summaries

+ combined_summary = glance(combined_model),

+ species_summary = glance(species_model),

+ interaction_summary = glance(interaction_model)

+)

+```

+

+

+``` output

+✔ skipped target penguins_data_raw_file

+✔ skipped target penguins_data_raw

+✔ skipped target penguins_data

+✔ skipped target combined_model

+▶ dispatched target interaction_model

+● completed target interaction_model [0.003 seconds, 19.283 kilobytes]

+▶ dispatched target species_model

+● completed target species_model [0.001 seconds, 15.439 kilobytes]

+▶ dispatched target combined_summary

+● completed target combined_summary [0.006 seconds, 348 bytes]

+▶ dispatched target interaction_summary

+● completed target interaction_summary [0.003 seconds, 348 bytes]

+▶ dispatched target species_summary

+● completed target species_summary [0.003 seconds, 347 bytes]

+▶ ended pipeline [0.28 seconds]

+```

+

+Let's look at the summary of one of the models:

+

+

+``` r

+tar_read(species_summary)

+```

+

+``` output

+# A tibble: 1 × 12

+ r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance df.residual nobs

+

+1 0.769 0.767 0.953 375. 3.65e-107 3 -467. 944. 963. 307. 338 342

+```

+

+So this way of writing the pipeline works, but is repetitive: we have to call `glance()` separately for every model we want summary statistics for.

+Furthermore, each summary target (`combined_summary`, etc.) is explicitly named and typed out manually.

+It would be fairly easy to make a typo and end up with the wrong model being summarized.

+

+## Example with branching

+

+### First attempt

+

+Let's see how to write the same plan using **dynamic branching**:

+

+

+``` r

+source("R/packages.R")

+source("R/functions.R")

+

+tar_plan(

+ # Load raw data

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),

+ read_csv(!!.x, show_col_types = FALSE)

+ ),

+ # Clean data

+ penguins_data = clean_penguin_data(penguins_data_raw),

+ # Build models

+ models = list(

+ combined_model = lm(

+ bill_depth_mm ~ bill_length_mm, data = penguins_data),

+ species_model = lm(

+ bill_depth_mm ~ bill_length_mm + species, data = penguins_data),

+ interaction_model = lm(

+ bill_depth_mm ~ bill_length_mm * species, data = penguins_data)

+ ),

+ # Get model summaries

+ tar_target(

+ model_summaries,

+ glance(models[[1]]),

+ pattern = map(models)

+ )

+)

+```

+

+What is going on here?

+

+First, let's look at the messages provided by `tar_make()`.

+

+

+``` output

+✔ skipped target penguins_data_raw_file

+✔ skipped target penguins_data_raw

+✔ skipped target penguins_data

+▶ dispatched target models

+● completed target models [0.005 seconds, 43.009 kilobytes]

+▶ dispatched branch model_summaries_812e3af782bee03f

+● completed branch model_summaries_812e3af782bee03f [0.006 seconds, 348 bytes]

+▶ dispatched branch model_summaries_2b8108839427c135

+● completed branch model_summaries_2b8108839427c135 [0.003 seconds, 347 bytes]

+▶ dispatched branch model_summaries_533cd9a636c3e05b

+● completed branch model_summaries_533cd9a636c3e05b [0.003 seconds, 348 bytes]

+● completed pattern model_summaries

+▶ ended pipeline [0.302 seconds]

+```

+

+There is a series of smaller targets (branches) that are each named like `model_summaries_812e3af782bee03f`, then one overall `model_summaries` target.

+That is the result of specifying targets using branching: each of the smaller targets is a "branch", and together the branches make up the overall target.

+Since `targets` has no way of knowing ahead of time how many branches there will be or what they represent, it names each one using this series of numbers and letters (the "hash").

+`targets` builds each branch one at a time, then combines them into the overall target.

+

+Next, let's look in more detail at how the workflow is set up, starting with how we defined the models:

+

+

+``` r

+ # Build models

+ models = list(

+ combined_model = lm(

+ bill_depth_mm ~ bill_length_mm, data = penguins_data),

+ species_model = lm(

+ bill_depth_mm ~ bill_length_mm + species, data = penguins_data),

+ interaction_model = lm(

+ bill_depth_mm ~ bill_length_mm * species, data = penguins_data)

+ ),

+```

+

+Unlike the non-branching version, we defined the models **in a list** (instead of one target per model).

+This is because dynamic branching is similar to the `base::lapply()` or [`purrr::map()`](https://purrr.tidyverse.org/reference/map.html) method of looping: it applies a function to each element of a list.

+So we need to prepare the input for looping as a list.

+

+Next, take a look at the command to build the target `model_summaries`.

+

+

+``` r

+ # Get model summaries

+ tar_target(

+ model_summaries,

+ glance(models[[1]]),

+ pattern = map(models)

+ )

+```

+

+As before, the first argument is the name of the target to build, and the second is the command to build it.

+

+Here, we apply the `glance()` function to each element of `models` (the `[[1]]` is necessary because when the function gets applied, each element is actually a nested list, and we need to remove one layer of nesting).

+

+Finally, there is an argument we haven't seen before, `pattern`, which indicates that this target should be built using dynamic branching.

+`map` means to apply the command to each element of the input list (`models`) sequentially.

+
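+To see why the `[[1]]` is needed, here is a small base R sketch that does not use `targets` at all. It only imitates the idea of handing over one-element slices of a list, and the toy models (fitted on the built-in `mtcars` data) and their names are made up for illustration:
+
+``` r
+# Toy stand-ins for the penguin models, fitted on the built-in mtcars data
+models <- list(
+  simple_model = lm(mpg ~ wt, data = mtcars),
+  bigger_model = lm(mpg ~ wt + hp, data = mtcars)
+)
+
+# Single brackets keep the list wrapper (and the name) ...
+one_branch <- models[1]
+class(one_branch)       # "list"
+names(one_branch)       # "simple_model"
+
+# ... so one layer of nesting has to be removed to reach the model itself
+class(one_branch[[1]])  # "lm"
+
+# Loop over one-element slices, similar in spirit to pattern = map(models)
+r_squared <- lapply(seq_along(models), function(i) {
+  branch <- models[i]
+  summary(branch[[1]])$r.squared
+})
+```
+
+Because each slice is still a named list of length one, the name of the model travels along with it.
+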

+Now that we understand how the branching workflow is constructed, let's inspect the output:

+

+

+``` r

+tar_read(model_summaries)

+```

+

+

+``` output

+# A tibble: 3 × 12

+ r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance df.residual nobs

+

+1 0.0552 0.0525 1.92 19.9 1.12e- 5 1 -708. 1422. 1433. 1256. 340 342

+2 0.769 0.767 0.953 375. 3.65e-107 3 -467. 944. 963. 307. 338 342

+3 0.770 0.766 0.955 225. 8.52e-105 5 -466. 947. 974. 306. 336 342

+```

+

+The model summary statistics are all included in a single dataframe.

+

+But there's one problem: **we can't tell which row came from which model!** It would be unwise to assume that they are in the same order as the list of models.

+

+This is due to the way dynamic branching works: by default, there is no information about the provenance of each target preserved in the output.

+

+How can we fix this?

+

+### Second attempt

+

+The key to obtaining useful output from branching pipelines is to include the necessary information in the output of each individual branch.

+Here, we want to know the kind of model that corresponds to each row of the model summaries.

+To do that, we need to write a **custom function**.

+You will need to write custom functions frequently when using `targets`, so it's good to get used to it!

+

+Here is the function. Save this in `R/functions.R`:

+

+

+``` r

+glance_with_mod_name <- function(model_in_list) {

+ model_name <- names(model_in_list)

+ model <- model_in_list[[1]]

+ glance(model) |>

+ mutate(model_name = model_name)

+}

+```

+
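+Before adding the function to the pipeline, it can be tried out on its own. The following is only a sketch: it assumes the `broom` and `dplyr` packages are installed, repeats the function definition from above so that it is self-contained, and uses a made-up one-element list called `toy` with a model fitted on the built-in `mtcars` data:
+
+``` r
+library(broom)
+library(dplyr)
+
+# Same function as above, repeated so this example runs on its own
+glance_with_mod_name <- function(model_in_list) {
+  model_name <- names(model_in_list)
+  model <- model_in_list[[1]]
+  glance(model) |>
+    mutate(model_name = model_name)
+}
+
+# A named list of length one, like the slice each branch receives
+toy <- list(toy_model = lm(mpg ~ wt, data = mtcars))
+
+result <- glance_with_mod_name(toy)
+
+# The name of the list element ends up in the model_name column
+result$model_name
+```
+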

+Our new pipeline looks almost the same as before, but this time we use the custom function instead of `glance()`.

+

+

+``` r

+source("R/functions.R")

+source("R/packages.R")

+

+tar_plan(

+ # Load raw data

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),

+ read_csv(!!.x, show_col_types = FALSE)

+ ),

+ # Clean data

+ penguins_data = clean_penguin_data(penguins_data_raw),

+ # Build models

+ models = list(

+ combined_model = lm(

+ bill_depth_mm ~ bill_length_mm, data = penguins_data),

+ species_model = lm(

+ bill_depth_mm ~ bill_length_mm + species, data = penguins_data),

+ interaction_model = lm(

+ bill_depth_mm ~ bill_length_mm * species, data = penguins_data)

+ ),

+ # Get model summaries

+ tar_target(

+ model_summaries,

+ glance_with_mod_name(models),

+ pattern = map(models)

+ )

+)

+```

+

+

+``` output

+✔ skipped target penguins_data_raw_file

+✔ skipped target penguins_data_raw

+✔ skipped target penguins_data

+✔ skipped target models

+▶ dispatched branch model_summaries_812e3af782bee03f

+● completed branch model_summaries_812e3af782bee03f [0.012 seconds, 374 bytes]

+▶ dispatched branch model_summaries_2b8108839427c135

+● completed branch model_summaries_2b8108839427c135 [0.007 seconds, 371 bytes]

+▶ dispatched branch model_summaries_533cd9a636c3e05b

+● completed branch model_summaries_533cd9a636c3e05b [0.004 seconds, 377 bytes]

+● completed pattern model_summaries

+▶ ended pipeline [0.281 seconds]

+```

+

+And this time, when we load the `model_summaries`, we can tell which model corresponds to which row (you may need to scroll to the right to see it).

+

+

+``` r

+tar_read(model_summaries)

+```

+

+``` output

+# A tibble: 3 × 13

+ r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC deviance df.residual nobs model_name

+

+1 0.0552 0.0525 1.92 19.9 1.12e- 5 1 -708. 1422. 1433. 1256. 340 342 combined_model

+2 0.769 0.767 0.953 375. 3.65e-107 3 -467. 944. 963. 307. 338 342 species_model

+3 0.770 0.766 0.955 225. 8.52e-105 5 -466. 947. 974. 306. 336 342 interaction_model

+```

+

+Next we will add one more target, a prediction of bill depth based on each model. These will be needed for plotting the models in the report.

+Such a prediction can be obtained with the `augment()` function of the `broom` package.

+

+

+``` r

+tar_load(models)

+augment(models[[1]])

+```

+

+``` output

+# A tibble: 342 × 8

+ bill_depth_mm bill_length_mm .fitted .resid .hat .sigma .cooksd .std.resid

+

+ 1 18.7 39.1 17.6 1.14 0.00521 1.92 0.000924 0.594

+ 2 17.4 39.5 17.5 -0.127 0.00485 1.93 0.0000107 -0.0663

+ 3 18 40.3 17.5 0.541 0.00421 1.92 0.000168 0.282

+ 4 19.3 36.7 17.8 1.53 0.00806 1.92 0.00261 0.802

+ 5 20.6 39.3 17.5 3.06 0.00503 1.92 0.00641 1.59

+ 6 17.8 38.9 17.6 0.222 0.00541 1.93 0.0000364 0.116

+ 7 19.6 39.2 17.6 2.05 0.00512 1.92 0.00293 1.07

+ 8 18.1 34.1 18.0 0.114 0.0124 1.93 0.0000223 0.0595

+ 9 20.2 42 17.3 2.89 0.00329 1.92 0.00373 1.50

+10 17.1 37.8 17.7 -0.572 0.00661 1.92 0.000296 -0.298

+# ℹ 332 more rows

+```

+

+::::::::::::::::::::::::::::::::::::: {.challenge}

+

+## Challenge: Add model predictions to the workflow

+

+Can you add the model predictions using `augment()`? You will need to define a custom function just like we did for `glance()`.

+

+:::::::::::::::::::::::::::::::::: {.solution}

+

+Define the new function as `augment_with_mod_name()`. It is the same as `glance_with_mod_name()`, but uses `augment()` instead of `glance()`:

+

+

+``` r

+augment_with_mod_name <- function(model_in_list) {

+ model_name <- names(model_in_list)

+ model <- model_in_list[[1]]

+ augment(model) |>

+ mutate(model_name = model_name)

+}

+```

+

+Add the step to the workflow:

+

+

+``` r

+source("R/functions.R")

+source("R/packages.R")

+

+tar_plan(

+ # Load raw data

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),

+ read_csv(!!.x, show_col_types = FALSE)

+ ),

+ # Clean data

+ penguins_data = clean_penguin_data(penguins_data_raw),

+ # Build models

+ models = list(

+ combined_model = lm(

+ bill_depth_mm ~ bill_length_mm, data = penguins_data),

+ species_model = lm(

+ bill_depth_mm ~ bill_length_mm + species, data = penguins_data),

+ interaction_model = lm(

+ bill_depth_mm ~ bill_length_mm * species, data = penguins_data)

+ ),

+ # Get model summaries

+ tar_target(

+ model_summaries,

+ glance_with_mod_name(models),

+ pattern = map(models)

+ ),

+ # Get model predictions

+ tar_target(

+ model_predictions,

+ augment_with_mod_name(models),

+ pattern = map(models)

+ )

+)

+```

+

+::::::::::::::::::::::::::::::::::

+

+:::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: {.callout}

+

+## Best practices for branching

+

+Dynamic branching is designed to work well with **dataframes** (tibbles).

+

+So if possible, write your custom functions to accept dataframes as input and return them as output, and always include any necessary metadata as a column or columns.

+

+:::::::::::::::::::::::::::::::::::::
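+
+As a rough illustration of the advice above, here is a minimal sketch of a function that takes a dataframe and returns a dataframe, keeping the group label as a column. The function name `summarize_bill_depth()` and the toy data are hypothetical and are not part of the penguins workflow.
+
+``` r
+library(dplyr)
+
+# Hypothetical helper: expects a dataframe holding a single species and
+# returns a one-row dataframe that keeps the species name as metadata
+summarize_bill_depth <- function(data) {
+  data |>
+    summarize(
+      species = unique(species),
+      mean_bill_depth_mm = mean(bill_depth_mm),
+      n_rows = n()
+    )
+}
+
+# Toy input standing in for one branch of data
+toy_data <- data.frame(
+  species = c("Adelie", "Adelie", "Adelie"),
+  bill_depth_mm = c(18, 19, 20)
+)
+
+toy_summary <- summarize_bill_depth(toy_data)
+toy_summary
+```
+
+Because the label travels with the result as a column, the rows can still be told apart after the outputs of several branches are combined.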

+

+::::::::::::::::::::::::::::::::::::: {.challenge}

+

+## Challenge: What other kinds of patterns are there?

+

+So far, the only function we have used with the `pattern` argument is `map()`, which creates one branch for each element of its input, in sequence.

+

+Can you think of any other ways you might want to apply a branching pattern?

+

+:::::::::::::::::::::::::::::::::: {.solution}

+

+Some other ways of applying branching patterns include:

+

+- crossing: one branch per combination of elements (`cross()` function)

+- slicing: one branch for each of a manually selected set of elements (`slice()` function)

+- sampling: one branch for each of a randomly selected set of elements (`sample()` function)

+

+You can [find out more about different branching patterns in the `targets` manual](https://books.ropensci.org/targets/dynamic.html#patterns).
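+
+One way to compare patterns without running a pipeline is `tar_pattern()`, which emulates the branches a pattern would create. The sketch below assumes two hypothetical upstream targets, `x` with 2 elements and `y` with 3 elements; neither exists in the penguins workflow.
+
+``` r
+library(targets)
+
+# map(): one branch per element of x
+map_branches <- tar_pattern(map(x), x = 2)
+
+# cross(): one branch per combination of an element of x and an element of y
+cross_branches <- tar_pattern(cross(x, y), x = 2, y = 3)
+
+nrow(map_branches)
+nrow(cross_branches)
+```
+
+Each row of the returned tibble describes one branch, so the row counts show how many branches each pattern would produce.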

+

+::::::::::::::::::::::::::::::::::

+

+:::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: keypoints

+

+- Dynamic branching creates multiple targets with a single command

+- You usually need to write custom functions so that the output of the branches includes necessary metadata

+

+::::::::::::::::::::::::::::::::::::::::::::::::

diff --git a/cache.md b/cache.md

new file mode 100644

index 00000000..437d429e

--- /dev/null

+++ b/cache.md

@@ -0,0 +1,145 @@

+---

+title: 'Loading Workflow Objects'

+teaching: 10

+exercises: 2

+---

+

+

+

+:::::::::::::::::::::::::::::::::::::: questions

+

+- Where does the workflow happen?

+- How can we inspect the objects built by the workflow?

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: objectives

+

+- Explain where `targets` runs the workflow and why

+- Be able to load objects built by the workflow into your R session

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: instructor

+

+Episode summary: Show how to get at the objects that we built

+

+:::::::::::::::::::::::::::::::::::::

+

+## Where does the workflow happen?

+

+So we just finished running our first workflow.

+Now you probably want to look at its output.

+But, if we just call the name of the object (for example, `penguins_data`), we get an error.

+

+``` r

+penguins_data

+```

+

+``` error

+Error: object 'penguins_data' not found

+```

+

+Where are the results of our workflow?

+

+::::::::::::::::::::::::::::::::::::: instructor

+

+- To reinforce the concept of `targets` running in a separate R session, you may want to try running `penguins_data`, feign surprise when it doesn't work, and use the error as a teaching moment (errors are pedagogy!).

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+We don't see the workflow results because `targets` **runs the workflow in a separate R session** that we can't interact with.

+This is for reproducibility---the objects built by the workflow should only depend on the code in your project, not any commands you may have interactively given to R.

+

+Fortunately, `targets` has two functions that can be used to load objects built by the workflow into our current session, `tar_load()` and `tar_read()`.

+Let's see how these work.

+

+## tar_load()

+

+`tar_load()` loads an object built by the workflow into the current session.

+Its first argument is the name of the object you want to load.

+Let's use this to load `penguins_data` and get an overview of the data with `summary()`.

+

+

+

+

+``` r

+tar_load(penguins_data)

+summary(penguins_data)

+```

+

+``` output

+ species bill_length_mm bill_depth_mm

+ Length:342 Min. :32.10 Min. :13.10

+ Class :character 1st Qu.:39.23 1st Qu.:15.60

+ Mode :character Median :44.45 Median :17.30

+ Mean :43.92 Mean :17.15

+ 3rd Qu.:48.50 3rd Qu.:18.70

+ Max. :59.60 Max. :21.50

+```

+

+Note that `tar_load()` is used for its **side-effect**---loading the desired object into the current R session.

+It doesn't actually return a value.

+

+## tar_read()

+

+`tar_read()` is similar to `tar_load()` in that it is used to retrieve objects built by the workflow, but unlike `tar_load()`, it returns them directly as output.

+

+Let's try it with `penguins_csv_file`.

+

+

+``` r

+tar_read(penguins_csv_file)

+```

+

+``` output

+[1] "/home/runner/.local/share/renv/cache/v5/linux-ubuntu-jammy/R-4.4/x86_64-pc-linux-gnu/palmerpenguins/0.1.1/6c6861efbc13c1d543749e9c7be4a592/palmerpenguins/extdata/penguins_raw.csv"

+```

+

+We immediately see the contents of `penguins_csv_file`.

+But it has not been loaded into the environment.

+If you try to run `penguins_csv_file` now, you will get an error:

+

+

+``` r

+penguins_csv_file

+```

+

+``` error

+Error: object 'penguins_csv_file' not found

+```

+

+## When to use which function

+

+`tar_load()` tends to be more useful when you want to load objects and do things with them.

+`tar_read()` is more useful when you just want to immediately inspect an object.
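+
+The difference can be sketched with a throwaway pipeline. The example below is an illustration under assumptions: it uses `tar_dir()` and `tar_script()` to build a hypothetical one-target pipeline (`numbers`) in a temporary directory, so it does not touch your penguins project.
+
+``` r
+library(targets)
+
+tar_dir({
+  # Hypothetical pipeline with a single target, written to a temporary _targets.R
+  tar_script({
+    list(tar_target(numbers, c(1, 2, 3)))
+  }, ask = FALSE)
+  tar_make()
+
+  # tar_read() returns the value, so it can be used directly in an expression
+  total <- sum(tar_read(numbers))
+
+  # tar_load() creates an object called `numbers` in the current session
+  tar_load(numbers)
+  mean_value <- mean(numbers)
+})
+
+total
+mean_value
+```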

+

+## The targets cache

+

+If you close your R session, then re-start it and use `tar_load()` or `tar_read()`, you will notice that it can still load the workflow objects.

+In other words, the workflow output is **saved across R sessions**.

+How is this possible?

+

+You may have noticed a new folder has appeared in your project, called `_targets`.

+This is the **targets cache**.

+It contains all of the workflow output; that is how we can load the targets built by the workflow even after quitting then restarting R.

+

+**You should not edit the contents of the cache by hand** (with one exception).

+Doing so would make your analysis non-reproducible.

+

+The one exception to this rule is a special subfolder called `_targets/user`.

+This folder does not exist by default.

+You can create it if you want, and put whatever you want inside.

+

+Generally, `_targets/user` is a good place to store files that are not code, like data and output.

+

+Note that if you don't have anything in `_targets/user` that you need to keep around, it is possible to "reset" your workflow by simply deleting the entire `_targets` folder. Of course, this means you will need to run everything over again, so don't do this lightly!

+

+::::::::::::::::::::::::::::::::::::: keypoints

+

+- `targets` workflows are run in a separate, non-interactive R session

+- `tar_load()` loads a workflow object into the current R session

+- `tar_read()` reads a workflow object and returns its value

+- The `_targets` folder is the cache and generally should not be edited by hand

+

+::::::::::::::::::::::::::::::::::::::::::::::::

diff --git a/config.yaml b/config.yaml

new file mode 100644

index 00000000..63382b36

--- /dev/null

+++ b/config.yaml

@@ -0,0 +1,87 @@

+#------------------------------------------------------------

+# Values for this lesson.

+#------------------------------------------------------------

+

+# Which carpentry is this (swc, dc, lc, or cp)?

+# swc: Software Carpentry

+# dc: Data Carpentry

+# lc: Library Carpentry

+# cp: Carpentries (to use for instructor training for instance)

+# incubator: The Carpentries Incubator

+carpentry: 'incubator'

+

+# Overall title for pages.

+title: 'Introduction to targets'

+

+# Date the lesson was created (YYYY-MM-DD, this is empty by default)

+created: ~

+

+# Comma-separated list of keywords for the lesson

+keywords: 'reproducibility, data, targets, R'

+

+# Life cycle stage of the lesson

+# possible values: pre-alpha, alpha, beta, stable

+life_cycle: 'pre-alpha'

+

+# License of the lesson

+license: 'CC-BY 4.0'

+

+# Link to the source repository for this lesson

+source: 'https://github.com/carpentries-incubator/targets-workshop'

+

+# Default branch of your lesson

+branch: 'main'

+

+# Who to contact if there are any issues

+contact: 'joelnitta@gmail.com'

+

+# Navigation ------------------------------------------------

+#

+# Use the following menu items to specify the order of

+# individual pages in each dropdown section. Leave blank to

+# include all pages in the folder.

+#

+# Example -------------

+#

+# episodes:

+# - introduction.md

+# - first-steps.md

+#

+# learners:

+# - setup.md

+#

+# instructors:

+# - instructor-notes.md

+#

+# profiles:

+# - one-learner.md

+# - another-learner.md

+

+# Order of episodes in your lesson

+episodes:

+- introduction.Rmd

+- basic-targets.Rmd

+- cache.Rmd

+- lifecycle.Rmd

+- organization.Rmd

+- packages.Rmd

+- files.Rmd

+- branch.Rmd

+- parallel.Rmd

+- quarto.Rmd

+

+# Information for Learners

+learners:

+

+# Information for Instructors

+instructors:

+

+# Learner Profiles

+profiles:

+

+# Customisation ---------------------------------------------

+#

+# This space below is where custom yaml items (e.g. pinning

+# sandpaper and varnish versions) should live

+

+

diff --git a/fig/basic-rstudio-project.png b/fig/basic-rstudio-project.png

new file mode 100644

index 00000000..335768fc

Binary files /dev/null and b/fig/basic-rstudio-project.png differ

diff --git a/fig/basic-rstudio-wizard.png b/fig/basic-rstudio-wizard.png

new file mode 100644

index 00000000..f3c6d5ce

Binary files /dev/null and b/fig/basic-rstudio-wizard.png differ

diff --git a/fig/lifecycle-visnetwork.png b/fig/lifecycle-visnetwork.png

new file mode 100644

index 00000000..5187a62f

Binary files /dev/null and b/fig/lifecycle-visnetwork.png differ

diff --git a/files.md b/files.md

new file mode 100644

index 00000000..d4544f9c

--- /dev/null

+++ b/files.md

@@ -0,0 +1,301 @@

+---

+title: 'Working with External Files'

+teaching: 10

+exercises: 2

+---

+

+:::::::::::::::::::::::::::::::::::::: questions

+

+- How can we load external data?

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: objectives

+

+- Be able to load external data into a workflow

+- Configure the workflow to rerun if the contents of the external data change

+

+::::::::::::::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: instructor

+

+Episode summary: Show how to read and write external files

+

+:::::::::::::::::::::::::::::::::::::

+

+

+

+## Treating external files as a dependency

+

+Almost all workflows will start by importing data, which is typically stored as an external file.

+

+As a simple example, let's create an external data file in RStudio with the "New File" menu option. Enter a single line of text, "Hello World", and save it as a text file named "hello.txt" in `_targets/user/data/`.

+

+We will read in the contents of this file and store it as `some_data` in the workflow by writing the following plan and running `tar_make()`:

+

+::::::::::::::::::::::::::::::::::::: {.callout}

+

+## Save your progress

+

+You can only have one active `_targets.R` file at a time in a given project.

+

+We are about to create a new `_targets.R` file, but you probably don't want to lose your progress in the one we have been working on so far (the penguins bill analysis). You can temporarily rename that one to something like `_targets_old.R` so that you don't overwrite it with the new example `_targets.R` file below. Then, rename it back to `_targets.R` when you are ready to work on it again.

+

+:::::::::::::::::::::::::::::::::::::

+

+

+``` r

+library(targets)

+library(tarchetypes)

+

+tar_plan(

+ some_data = readLines("_targets/user/data/hello.txt")

+)

+```

+

+

+``` output

+▶ dispatched target some_data

+● completed target some_data [0 seconds, 64 bytes]

+▶ ended pipeline [0.089 seconds]

+```

+

+If we inspect the contents of `some_data` with `tar_read(some_data)`, it will contain the string `"Hello World"` as expected.

+

+Now say we edit "hello.txt", perhaps add some text: "Hello World. How are you?". Edit this in the RStudio text editor and save it. Now run the pipeline again.

+

+

+``` r

+library(targets)

+library(tarchetypes)

+

+tar_plan(

+ some_data = readLines("_targets/user/data/hello.txt")

+)

+```

+

+

+``` output

+✔ skipped target some_data

+✔ skipped pipeline [0.087 seconds]

+```

+

+The target `some_data` was skipped, even though the contents of the file changed.

+

+That is because right now, `targets` is only tracking the **name** of the file, not its contents. To track the contents, we need to use a special function, `tar_file()` from the `tarchetypes` package. `tar_file()` will calculate the "hash" of a file---a unique digital signature that is determined by the file's contents. If the contents change, the hash will change, and this will be detected by `targets`.

+

+

+``` r

+library(targets)

+library(tarchetypes)

+

+tar_plan(

+ tar_file(data_file, "_targets/user/data/hello.txt"),

+ some_data = readLines(data_file)

+)

+```

+

+

+``` output

+▶ dispatched target data_file

+● completed target data_file [0.001 seconds, 26 bytes]

+▶ dispatched target some_data

+● completed target some_data [0 seconds, 78 bytes]

+▶ ended pipeline [0.109 seconds]

+```

+

+This time we see that `targets` does successfully re-build `some_data` as expected.
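+
+To make the idea of a content hash more concrete, here is a small sketch using base R's `tools::md5sum()`. This is only an illustration: it is an assumption-free stand-in for the concept, and `targets` uses its own hashing internally, which is not necessarily MD5.
+
+``` r
+# Write a file, hash it, change its contents, and hash it again
+tmp <- tempfile(fileext = ".txt")
+
+writeLines("Hello World", tmp)
+hash_before <- unname(tools::md5sum(tmp))
+
+writeLines("Hello World. How are you?", tmp)
+hash_after <- unname(tools::md5sum(tmp))
+
+# The file name did not change, but the hash did
+hash_before
+hash_after
+```
+
+Comparing hashes like this is what lets a tool notice that a file has changed even though its path is the same.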

+

+## A shortcut (or, About target factories)

+

+However, also notice that this means we need to write two targets instead of one: one target to track the contents of the file (`data_file`), and one target to store what we load from the file (`some_data`).

+

+It turns out that this is a common pattern in `targets` workflows, so `tarchetypes` provides a shortcut to express this more concisely, `tar_file_read()`.

+

+

+``` r

+library(targets)

+library(tarchetypes)

+

+tar_plan(

+ tar_file_read(

+ hello,

+ "_targets/user/data/hello.txt",

+ readLines(!!.x)

+ )

+)

+```

+

+Let's inspect this pipeline with `tar_manifest()`:

+

+

+``` r

+tar_manifest()

+```

+

+

+``` output

+# A tibble: 2 × 2

+ name command

+

+1 hello_file "\"_targets/user/data/hello.txt\""

+2 hello "readLines(hello_file)"

+```

+

+Notice that even though we only specified one target in the pipeline (`hello`, with `tar_file_read()`), the pipeline actually includes **two** targets, `hello_file` and `hello`.

+

+That is because `tar_file_read()` is a special function called a **target factory**, so-called because it makes **multiple** targets at once. One of the main purposes of the `tarchetypes` package is to provide target factories to make writing pipelines easier and less error-prone.

+

+## Non-standard evaluation

+

+What is the deal with the `!!.x`? That may look unfamiliar even if you are used to using R. It is known as "non-standard evaluation," and gets used in some special contexts. We don't have time to go into the details now, but just remember that you will need to use this special notation with `tar_file_read()`. If you forget how to write it (this happens frequently!) look at the examples in the help file by running `?tar_file_read`.
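+
+If you are curious, the following sketch gives a rough feel for what `!!` does, using the `rlang` package. It is an analogy under assumptions rather than a description of how `tar_file_read()` is implemented: here `.x` is defined by hand as a stand-in for the name of the file target.
+
+``` r
+library(rlang)
+
+# Stand-in for the name of the file target (hypothetical, defined by hand here)
+.x <- quote(hello_file)
+
+# `!!` substitutes the symbol stored in `.x` into the expression
+# before the expression is captured
+command <- expr(readLines(!!.x))
+command
+```
+
+The captured expression has the same form as the command that `tar_manifest()` reported for the `hello` target above.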

+

+## Other data loading functions

+

+Although we used `readLines()` as an example here, you can use the same pattern for other functions that load data from external files, such as `readr::read_csv()`, `readxl::read_excel()`, and others (for example, `read_csv(!!.x)`, `read_excel(!!.x)`, etc.).

+

+This is generally recommended so that your pipeline stays up to date with your input data.

+

+::::::::::::::::::::::::::::::::::::: {.challenge}

+

+## Challenge: Use `tar_file_read()` with the penguins example

+

+We didn't know about `tar_file_read()` yet when we started on the penguins bill analysis.

+

+How can you use `tar_file_read()` to load the CSV file while tracking its contents?

+

+:::::::::::::::::::::::::::::::::: {.solution}

+

+

+``` r

+source("R/packages.R")

+source("R/functions.R")

+

+tar_plan(

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),

+ read_csv(!!.x, show_col_types = FALSE)

+ ),

+ penguins_data = clean_penguin_data(penguins_data_raw)

+)

+```

+

+

+``` output

+▶ dispatched target penguins_data_raw_file

+● completed target penguins_data_raw_file [0.001 seconds, 53.098 kilobytes]

+▶ dispatched target penguins_data_raw

+● completed target penguins_data_raw [0.099 seconds, 10.403 kilobytes]

+▶ dispatched target penguins_data

+● completed target penguins_data [0.015 seconds, 1.495 kilobytes]

+▶ ended pipeline [0.369 seconds]

+```

+

+::::::::::::::::::::::::::::::::::

+

+:::::::::::::::::::::::::::::::::::::

+

+## Writing out data

+

+Writing to files is similar to loading in files: we will use the `tar_file()` function. There is one important caveat: in this case, the second argument of `tar_file()` (the command to build the target) **must return the path to the file**. Not all functions that write files do this (some return nothing; these treat the output file as a side effect of running the function), so you may need to define a custom function that writes out the file and then returns its path.

+

+Let's do this for `writeLines()`, the R function that writes character data to a file. Normally, its output would be `NULL` (nothing), as we can see here:

+

+

+``` r

+x <- writeLines("some text", "test.txt")

+x

+```

+

+

+``` output

+NULL

+```

+

+Here is our modified function that writes character data to a file and returns the name of the file (the `...` means "pass the rest of these arguments to `writeLines()`"):

+

+

+``` r

+write_lines_file <- function(text, file, ...) {

+ writeLines(text = text, con = file, ...)

+ file

+}

+```

+

+Let's try it out:

+

+

+``` r

+x <- write_lines_file("some text", "test.txt")

+x

+```

+

+

+``` output

+[1] "test.txt"

+```

+

+We can now use this in a pipeline. For example let's change the text to upper case then write it out again:

+

+

+``` r

+library(targets)

+library(tarchetypes)

+

+source("R/functions.R")

+

+tar_plan(

+ tar_file_read(

+ hello,

+ "_targets/user/data/hello.txt",

+ readLines(!!.x)

+ ),

+ hello_caps = toupper(hello),

+ tar_file(

+ hello_caps_out,

+ write_lines_file(hello_caps, "_targets/user/results/hello_caps.txt")

+ )

+)

+```

+

+

+``` output

+▶ dispatched target hello_file

+● completed target hello_file [0 seconds, 26 bytes]

+▶ dispatched target hello

+● completed target hello [0 seconds, 78 bytes]

+▶ dispatched target hello_caps

+● completed target hello_caps [0.001 seconds, 78 bytes]

+▶ dispatched target hello_caps_out

+● completed target hello_caps_out [0 seconds, 26 bytes]

+▶ ended pipeline [0.111 seconds]

+```

+

+Take a look at `hello_caps.txt` in the `results` folder and verify it is as you expect.
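+
+The same wrapper pattern should carry over to other functions that write files but do not return a path. As a minimal sketch, here is a hypothetical `write_csv_file()` helper built around base R's `write.csv()`; the name and the toy data are assumptions for illustration only.
+
+``` r
+# Hypothetical helper following the same pattern as write_lines_file():
+# write the file, then return its path so that tar_file() can track it
+write_csv_file <- function(x, file, ...) {
+  utils::write.csv(x, file = file, ...)
+  file
+}
+
+out_path <- write_csv_file(
+  data.frame(a = 1:2),
+  tempfile(fileext = ".csv"),
+  row.names = FALSE
+)
+
+file.exists(out_path)
+```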

+

+::::::::::::::::::::::::::::::::::::: {.challenge}

+

+## Challenge: What happens to file output if it is modified?

+

+Delete or change the contents of `hello_caps.txt` in the `results` folder.

+What do you think will happen when you run `tar_make()` again?

+Try it and see.

+

+:::::::::::::::::::::::::::::::::: {.solution}

+

+`targets` detects that `hello_caps_out` has changed (is "invalidated"), and re-runs the code to make it, thus writing out `hello_caps.txt` to `results` again.

+

+So this way of writing out results makes your pipeline more robust: we have a guarantee that the contents of the file in `results` are generated solely by the code in your plan.

+

+::::::::::::::::::::::::::::::::::

+

+:::::::::::::::::::::::::::::::::::::

+

+::::::::::::::::::::::::::::::::::::: keypoints

+

+- `tarchetypes::tar_file()` tracks the contents of a file

+- Use `tarchetypes::tar_file_read()` in combination with data loading functions like `read_csv()` to keep the pipeline in sync with your input data

+- Use `tarchetypes::tar_file()` in combination with a function that writes to a file and returns its path to write out data

+

+::::::::::::::::::::::::::::::::::::::::::::::::

diff --git a/files/functions.R b/files/functions.R

new file mode 100644

index 00000000..c063edc2

--- /dev/null

+++ b/files/functions.R

@@ -0,0 +1,357 @@

+#' Write an example targets plan

+#'

+#' To save on repetition and errors when repeatedly running examples

+#'

+#' @param plan_select Plan template to choose from; an integer from 1 to 9.

+#'

+#' @return Writes out the plan to _targets.R in the working directory.

+#' WILL OVERWRITE ANY EXISTING FILE WITH THE SAME NAME

+#' @examples

+#' library(targets)

+#' tar_dir({

+#' write_example_plan(1)

+#' tar_make()

+#' })

+#'

+write_example_plan <- function(plan_select) {

+ # functions

+ glance_with_mod_name_func <- c(

+ "glance_with_mod_name <- function(model_in_list) {",

+ "model_name <- names(model_in_list)",

+ "model <- model_in_list[[1]]",

+ "broom::glance(model) |>",

+ " mutate(model_name = model_name)",

+ "}"

+ )

+ augment_with_mod_name_func <- c(

+ "augment_with_mod_name <- function(model_in_list) {",

+ "model_name <- names(model_in_list)",

+ "model <- model_in_list[[1]]",

+ "broom::augment(model) |>",

+ " mutate(model_name = model_name)",

+ "}"

+ )

+ glance_slow_func <- c(

+ "glance_with_mod_name_slow <- function(model_in_list) {",

+ "Sys.sleep(4)",

+ "model_name <- names(model_in_list)",

+ "model <- model_in_list[[1]]",

+ "broom::glance(model) |>",

+ " mutate(model_name = model_name)",

+ "}"

+ )

+ augment_slow_func <- c(

+ "augment_with_mod_name_slow <- function(model_in_list) {",

+ "Sys.sleep(4)",

+ "model_name <- names(model_in_list)",

+ "model <- model_in_list[[1]]",

+ "broom::augment(model) |>",

+ " mutate(model_name = model_name)",

+ "}"

+ )

+ clean_penguin_data_func <- c(

+ "clean_penguin_data <- function(penguins_data_raw) {",

+ " penguins_data_raw |>",

+ " select(",

+ " species = Species,",

+ " bill_length_mm = `Culmen Length (mm)`,",

+ " bill_depth_mm = `Culmen Depth (mm)`",

+ " ) |>",

+ " remove_missing(na.rm = TRUE) |>",

+ " separate(species, into = 'species', extra = 'drop')",

+ "}"

+ )

+ # original plan

+ plan_1 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ "clean_penguin_data <- function(penguins_data_raw) {",

+ " penguins_data_raw |>",

+ " select(",

+ " species = Species,",

+ " bill_length_mm = `Culmen Length (mm)`,",

+ " bill_depth_mm = `Culmen Depth (mm)`",

+ " ) |>",

+ " remove_missing(na.rm = TRUE)",

+ "}",

+ "list(",

+ " tar_target(penguins_csv_file, path_to_file('penguins_raw.csv')),",

+ " tar_target(penguins_data_raw, read_csv(",

+ " penguins_csv_file, show_col_types = FALSE)),",

+ " tar_target(penguins_data, clean_penguin_data(penguins_data_raw))",

+ ")"

+ )

+ # separate species names

+ plan_2 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ "clean_penguin_data <- function(penguins_data_raw) {",

+ " penguins_data_raw |>",

+ " select(",

+ " species = Species,",

+ " bill_length_mm = `Culmen Length (mm)`,",

+ " bill_depth_mm = `Culmen Depth (mm)`",

+ " ) |>",

+ " remove_missing(na.rm = TRUE) |>",

+ " separate(species, into = 'species', extra = 'drop')",

+ "}",

+ "list(",

+ " tar_target(penguins_csv_file, path_to_file('penguins_raw.csv')),",

+ " tar_target(penguins_data_raw, read_csv(",

+ " penguins_csv_file, show_col_types = FALSE)),",

+ " tar_target(penguins_data, clean_penguin_data(penguins_data_raw))",

+ ")"

+ )

+ # tar_file_read

+ plan_3 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ "clean_penguin_data <- function(penguins_data_raw) {",

+ " penguins_data_raw |>",

+ " select(",

+ " species = Species,",

+ " bill_length_mm = `Culmen Length (mm)`,",

+ " bill_depth_mm = `Culmen Depth (mm)`",

+ " ) |>",

+ " remove_missing(na.rm = TRUE) |>",

+ " separate(species, into = 'species', extra = 'drop')",

+ "}",

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw)",

+ ")"

+ )

+ # add one model

+ plan_4 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ "clean_penguin_data <- function(penguins_data_raw) {",

+ " penguins_data_raw |>",

+ " select(",

+ " species = Species,",

+ " bill_length_mm = `Culmen Length (mm)`,",

+ " bill_depth_mm = `Culmen Depth (mm)`",

+ " ) |>",

+ " remove_missing(na.rm = TRUE) |>",

+ " separate(species, into = 'species', extra = 'drop')",

+ "}",

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw),",

+ " combined_model = lm(",

+ " bill_depth_mm ~ bill_length_mm, data = penguins_data)",

+ ")"

+ )

+ # add multiple models

+ plan_5 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "library(broom)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ "clean_penguin_data <- function(penguins_data_raw) {",

+ " penguins_data_raw |>",

+ " select(",

+ " species = Species,",

+ " bill_length_mm = `Culmen Length (mm)`,",

+ " bill_depth_mm = `Culmen Depth (mm)`",

+ " ) |>",

+ " remove_missing(na.rm = TRUE) |>",

+ " separate(species, into = 'species', extra = 'drop')",

+ "}",

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw),",

+ " combined_model = lm(",

+ " bill_depth_mm ~ bill_length_mm, data = penguins_data),",

+ " species_model = lm(",

+ " bill_depth_mm ~ bill_length_mm + species, data = penguins_data),",

+ " interaction_model = lm(",

+ " bill_depth_mm ~ bill_length_mm * species, data = penguins_data),",

+ " combined_summary = glance(combined_model),",

+ " species_summary = glance(species_model),",

+ " interaction_summary = glance(interaction_model)",

+ ")"

+ )

+ # add multiple models with branching

+ plan_6 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "library(broom)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ clean_penguin_data_func,

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw),",

+ " models = list(",

+ " combined_model = lm(",

+ " bill_depth_mm ~ bill_length_mm, data = penguins_data),",

+ " species_model = lm(",

+ " bill_depth_mm ~ bill_length_mm + species, data = penguins_data),",

+ " interaction_model = lm(",

+ " bill_depth_mm ~ bill_length_mm * species, data = penguins_data)",

+ " ),",

+ " tar_target(",

+ " model_summaries,",

+ " glance(models[[1]]),",

+ " pattern = map(models)",

+ " )",

+ ")"

+ )

+ # add multiple models with branching, custom glance func

+ plan_7 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "library(broom)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ glance_with_mod_name_func,

+ clean_penguin_data_func,

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw),",

+ " models = list(",

+ " combined_model = lm(",

+ " bill_depth_mm ~ bill_length_mm, data = penguins_data),",

+ " species_model = lm(",

+ " bill_depth_mm ~ bill_length_mm + species, data = penguins_data),",

+ " interaction_model = lm(",

+ " bill_depth_mm ~ bill_length_mm * species, data = penguins_data)",

+ " ),",

+ " tar_target(",

+ " model_summaries,",

+ " glance_with_mod_name(models),",

+ " pattern = map(models)",

+ " )",

+ ")"

+ )

+ # adds parallel workers (crew) and predictions

+ plan_8 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "library(broom)",

+ "library(crew)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ glance_slow_func,

+ augment_slow_func,

+ clean_penguin_data_func,

+ "tar_option_set(controller = crew_controller_local(workers = 2))",

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw),",

+ " models = list(",

+ " combined_model = lm(",

+ " bill_depth_mm ~ bill_length_mm, data = penguins_data),",

+ " species_model = lm(",

+ " bill_depth_mm ~ bill_length_mm + species, data = penguins_data),",

+ " interaction_model = lm(",

+ " bill_depth_mm ~ bill_length_mm * species, data = penguins_data)",

+ " ),",

+ " tar_target(",

+ " model_summaries,",

+ " glance_with_mod_name_slow(models),",

+ " pattern = map(models)",

+ " ),",

+ " tar_target(",

+ " model_predictions,",

+ " augment_with_mod_name_slow(models),",

+ " pattern = map(models)",

+ " )",

+ ")"

+ )

+ # adds report

+ plan_9 <- c(

+ "library(targets)",

+ "library(palmerpenguins)",

+ "library(tarchetypes)",

+ "library(broom)",

+ "suppressPackageStartupMessages(library(tidyverse))",

+ glance_with_mod_name_func,

+ augment_with_mod_name_func,

+ clean_penguin_data_func,

+ "tar_plan(",

+ " tar_file_read(",

+ " penguins_data_raw,",

+ " path_to_file('penguins_raw.csv'),",

+ " read_csv(!!.x, show_col_types = FALSE)",

+ " ),",

+ " penguins_data = clean_penguin_data(penguins_data_raw),",

+ " models = list(",

+ " combined_model = lm(",

+ " bill_depth_mm ~ bill_length_mm, data = penguins_data),",

+ " species_model = lm(",

+ " bill_depth_mm ~ bill_length_mm + species, data = penguins_data),",

+ " interaction_model = lm(",

+ " bill_depth_mm ~ bill_length_mm * species, data = penguins_data)",

+ " ),",

+ " tar_target(",

+ " model_summaries,",

+ " glance_with_mod_name(models),",

+ " pattern = map(models)",

+ " ),",

+ " tar_target(",

+ " model_predictions,",

+ " augment_with_mod_name(models),",

+ " pattern = map(models)",

+ " ),",

+ " tar_quarto(",

+ " penguin_report,",

+ " path = 'penguin_report.qmd',",

+ " quiet = FALSE,",

+ " packages = c('targets', 'tidyverse')",

+ " )",

+ ")"

+ )

+ switch(

+ as.character(plan_select),

+ "1" = readr::write_lines(plan_1, "_targets.R"),

+ "2" = readr::write_lines(plan_2, "_targets.R"),

+ "3" = readr::write_lines(plan_3, "_targets.R"),

+ "4" = readr::write_lines(plan_4, "_targets.R"),

+ "5" = readr::write_lines(plan_5, "_targets.R"),

+ "6" = readr::write_lines(plan_6, "_targets.R"),

+ "7" = readr::write_lines(plan_7, "_targets.R"),

+ "8" = readr::write_lines(plan_8, "_targets.R"),

+ "9" = readr::write_lines(plan_9, "_targets.R"),

+ stop("plan_select must be 1, 2, 3, 4, 5, 6, 7, 8, or 9")

+ )

+}

+

+glance_with_mod_name <- function(model_in_list) {

+ model_name <- names(model_in_list)

+ model <- model_in_list[[1]]

+ broom::glance(model) |>

+ mutate(model_name = model_name)

+}

diff --git a/files/lesson_functions.R b/files/lesson_functions.R

new file mode 100644

index 00000000..5e903b7a

--- /dev/null

+++ b/files/lesson_functions.R

@@ -0,0 +1,59 @@

+# Helper functions used in the lesson `.Rmd` files. Learners are not

+# exposed to them, and they are not used inside the targets pipelines.

+

+make_tempdir <- function() {

+ x <- tempfile()

+ dir.create(x, showWarnings = FALSE)

+ x

+}

+

+files_root <- normalizePath("files")

+plan_root <- file.path(files_root, "plans")

+utility_funcs <- file.path(files_root, "tar_functions") |>

+ list.files(full.names = TRUE, pattern = "\\.R$") |>

+ lapply(readLines) |>

+ unlist()

+package_script <- file.path(files_root, "packages.R")

+

+#' @param file Name of a plan file in `files/plans/` to copy to `_targets.R`

+#' @param chunk Name of a knitr chunk whose code is written to `_targets.R`

+write_example_plan <- function(file = NULL, chunk = NULL) {

+ # Set up the R/ directory with the utility functions (skipped if R/ exists)

+

+ if (!dir.exists("R")) {

+ dir.create("R")

+

+ # Write the functions.R script

+ file.path("R", "functions.R") |>

+ writeLines(utility_funcs, con = _)

+

+ # Copy the packages.R script

+ file.path("R", "packages.R") |>

+ file.copy(from = package_script, to = _)

+ }

+

+ # Write the workflow

+ if (!is.null(file)) {

+ file.path(plan_root, file) |>

+ file.copy(from = _, to = "_targets.R", overwrite = TRUE)

+ }

+ if (!is.null(chunk)) {

+ writeLines(text = knitr::knit_code$get(chunk), con = "_targets.R")

+ }

+

+ invisible()

+}

+

+directory_stack <- getwd()

+

+pushd <- function(dir) {

+ directory_stack <<- c(normalizePath(dir), directory_stack)

+ setwd(directory_stack[1])

+ invisible()

+}

+

+popd <- function() {

+ if (length(directory_stack) > 1) directory_stack <<- directory_stack[-1]

+ setwd(directory_stack[1])

+ invisible()

+}

diff --git a/files/packages.R b/files/packages.R

new file mode 100644

index 00000000..6f8bcc90

--- /dev/null

+++ b/files/packages.R

@@ -0,0 +1,6 @@

+library(targets)

+library(tarchetypes)

+library(palmerpenguins)

+library(tidyverse)

+library(broom)

+library(htmlwidgets)

diff --git a/files/plans/README.md b/files/plans/README.md

new file mode 100644

index 00000000..a19d96ea

--- /dev/null

+++ b/files/plans/README.md

@@ -0,0 +1 @@

+Plans that are reused across multiple episodes are placed here.

\ No newline at end of file

diff --git a/files/plans/plan_1.R b/files/plans/plan_1.R

new file mode 100644

index 00000000..7c5575e3

--- /dev/null

+++ b/files/plans/plan_1.R

@@ -0,0 +1,21 @@

+options(tidyverse.quiet = TRUE)

+library(targets)

+library(tidyverse)

+library(palmerpenguins)

+

+clean_penguin_data <- function(penguins_data_raw) {

+ penguins_data_raw |>

+ select(

+ species = Species,

+ bill_length_mm = `Culmen Length (mm)`,

+ bill_depth_mm = `Culmen Depth (mm)`

+ ) |>

+ remove_missing(na.rm = TRUE)

+}

+

+list(

+ tar_target(penguins_csv_file, path_to_file("penguins_raw.csv")),

+ tar_target(penguins_data_raw, read_csv(

+ penguins_csv_file, show_col_types = FALSE)),

+ tar_target(penguins_data, clean_penguin_data(penguins_data_raw))

+)

diff --git a/files/plans/plan_10.R b/files/plans/plan_10.R

new file mode 100644

index 00000000..be92fd01

--- /dev/null

+++ b/files/plans/plan_10.R

@@ -0,0 +1,42 @@

+options(tidyverse.quiet = TRUE)

+suppressPackageStartupMessages(library(crew))

+source("R/functions.R")

+source("R/packages.R")

+

+# Set up parallelization

+library(crew)

+tar_option_set(

+ controller = crew_controller_local(workers = 2)

+)

+

+tar_plan(

+ # Load raw data

+ tar_file_read(

+ penguins_data_raw,

+ path_to_file("penguins_raw.csv"),