diff --git a/_posts/complementing-python-with-rust.mdx b/_posts/complementing-python-with-rust.mdx

index 53c6e73..0bd6c93 100644

--- a/_posts/complementing-python-with-rust.mdx

+++ b/_posts/complementing-python-with-rust.mdx

@@ -121,7 +121,17 @@ pub extern fn double(n: i32) -> i32 {

}

```

-Finally, to show you the difference in performance, I will consider the following problem — I want to read a text file, split it into words on whitespace, and find the most common words in that file. This is useful, for example, in writing a [spelling-corrector](http://norvig.com/spell-correct.html). It is simple to do this in Python since it has a built in data structure. `collections.Counter` is a kind of dictionary and has a method called `most_common`, that returns the common words with their count. Rust doesn’t have this method. So we will write an implementation of `most_common` that takes a dictionary (HashMap in Rust) as input and returns the most common key after aggregating.

+Finally, to show you the difference in performance,

+I will consider the following problem — I want to read a text file,

+split it into words on whitespace, and find the most common words in

+that file. This is useful, for example,

+in writing a [spelling-corrector](http://norvig.com/spell-correct.html). It

+is simple to do this in Python since it has a built-in

+data structure. collections.Counter is a kind of dictionary and

+has a method called most_common that returns the most common words

+with their counts. Rust doesn’t have this method. So we will write an

+implementation of most_common that takes a dictionary (HashMap in Rust)

+as input and returns the most common key after aggregating.

```rust,python,shell

$ cargo new words

@@ -246,7 +256,12 @@ fn n_most_common(

)> where T: Eq + Hash + Clone

```

-So the `n_most_common` method takes a HashMap (dictionary) as the first argument. The key in this HashMap is a generic type T but the where clause specifies that this generic type T has to implement 3 traits — Eq, Hash and Clone. This is exactly same in a Python dictionary, where the keys have be hashable. But in the case of Rust, this is checked at compile type and doesn’t need unit-tests for this behaviour to hold.

+So the n_most_common method takes a HashMap (dictionary) as the

+first argument. The key in this HashMap is a generic type T but the where

+clause specifies that this generic type T has to implement 3 traits — Eq, Hash

+and Clone. This is the same as in a Python dictionary, where the keys have to be

+hashable. But in the case of Rust, this is checked at compile time and doesn’t

+need unit tests for this behaviour to hold.

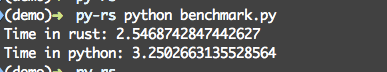

_Time taken in Rust and Python (lower is better)_

diff --git a/_posts/look-ma-kubernetes-objects.mdx b/_posts/look-ma-kubernetes-objects.mdx

index 7eb458c..7793513 100644

--- a/_posts/look-ma-kubernetes-objects.mdx

+++ b/_posts/look-ma-kubernetes-objects.mdx

@@ -24,9 +24,10 @@ Today I work as a Site Reliability Engineer. The goal of our team is to provide

There is one fundamental abstraction in Kubernetes applied uniformly from how I think about it. That of a [Kubernetes Resource Model (KRM)](https://github.com/kubernetes/community/blob/master/contributors/design-proposals/architecture/resource-management.md). When you represent something as a KRM, it means two things —

-`Declarative`: We focus on specifying the intent/what.

+Declarative: We focus on specifying the intent/what.

-`Reconciliation loop` There is a controller that will reconcile the intent with the observed state constantly.

+Reconciliation loop: There is a controller that constantly reconciles the intent

+with the observed state.

There are already projects like [config-connector](https://cloud.google.com/config-connector/docs/overview) and [crossplane](https://github.com/crossplane/crossplane) that allow us to manage infrastructure with this approach to varying degrees. In this design, there is no distinction between an infrastructure component and an application component. Everything is represented uniformly as a KRM (which means declarative and reconciliation) and the same tools are applied to manage infrastructure and applications. So perhaps a modern way to provision infrastructure is to provision a Kubernetes cluster which will provision your infrastructure and your Kubernetes clusters which will run applications.

diff --git a/_posts/python-concurrent-futures.mdx b/_posts/python-concurrent-futures.mdx

index bddecaa..d892597 100644

--- a/_posts/python-concurrent-futures.mdx

+++ b/_posts/python-concurrent-futures.mdx

@@ -10,7 +10,10 @@ ogImage:

url: 'https://user-images.githubusercontent.com/222507/109425295-e87f5f80-79e7-11eb-8cc8-2d73a5dd3982.png'

---

-I had been wanting to try some new features of Python 3 for a while now. I recently found some free time and tried concurrent.futures. Concurrent.futures allows us to write code that runs in parallel in Python without resorting to creating threads or forking processes.

+I had been wanting to try some new features of Python 3 for a while now.

+I recently found some free time and tried concurrent.futures.

+The concurrent.futures module allows us to write code that runs in parallel in

+Python without manually creating threads or forking processes.

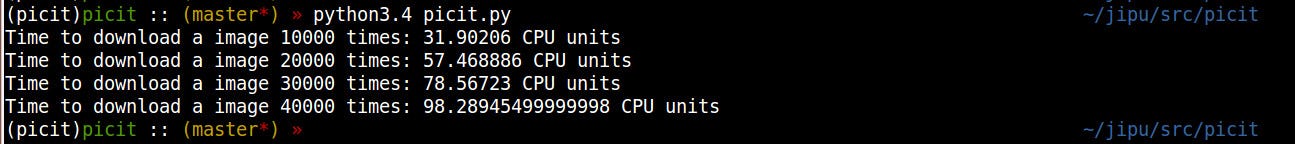

We will use the following problem to write some code — write a script that downloads images over the internet to a local directory.

@@ -38,10 +41,16 @@ Now let us look at a version that uses concurrent.futures. It is surprisingly ea

Only the download_ntimes method has changed to download images asynchronously using [ProcessPoolExecutor](https://docs.python.org/3/library/concurrent.futures.html#concurrent.futures.ProcessPoolExecutor). From the documentation -

-> The ProcessPoolExecutor class uses a pool of processes to execute calls asynchronously. ProcessPoolExecutor uses the multiprocessing module, which allows it to side-step the

+> The ProcessPoolExecutor class uses a pool of processes to execute calls asynchronously.

+> ProcessPoolExecutor uses the multiprocessing module, which allows it to side-step the

> [global interpreter lock](https://docs.python.org/3/glossary.html#term-global-interpreter-lock)

-Note also that we don’t specify how many workers to use. That is because, *if max_workers is None or not given, it will default to the number of processors on the machine. *The download times now are -

+Note also that we don’t specify how many workers to use.

+That is because

+

+> if max_workers is None or not given, it will default to the number of processors on the machine.

+

+The download times now are -

diff --git a/_posts/secret-sauce.mdx b/_posts/secret-sauce.mdx

index 84044aa..65fdae0 100644

--- a/_posts/secret-sauce.mdx

+++ b/_posts/secret-sauce.mdx

@@ -31,7 +31,7 @@ publishing to Rabbitmq failed, rendering templates from local disk failed, etc.

In fact, we will **always** have some service failing occasionally in a

distributed system. Which is why we need **error-reporting** to be front and center.

-I want to clarify what I mean by `error-reporting`. To me, it means -

+I want to clarify what I mean by _error-reporting_. To me, it means -

- All stakeholders get **real-time/instant** notifications of errors in the system.

- The errors are actionable. I.e. there is enough information in error reports to

diff --git a/components/post-body.tsx b/components/post-body.tsx

index c05cba6..888c6b5 100644

--- a/components/post-body.tsx

+++ b/components/post-body.tsx

@@ -1,4 +1,6 @@

import { MDXRemote, MDXRemoteSerializeResult } from 'next-mdx-remote'

+import { Code } from '@nextui-org/react'

+

import Quotation from './quotation'

import YoutubeEmbed from './youtube-embed'

@@ -9,6 +11,7 @@ type PostBodyProps = {

const components = {

Quotation,

YoutubeEmbed,

+ Code,

}

const PostBody: React.FC = ({ source }) => {