diff --git a/.gitignore b/.gitignore

index 7daa4363..7f89125e 100644

--- a/.gitignore

+++ b/.gitignore

@@ -3,3 +3,4 @@ __pycache__/

*.pth

*.tif

examples/data/*

+*.out

diff --git a/README.md b/README.md

index aa1c6f56..be7b6331 100644

--- a/README.md

+++ b/README.md

@@ -16,31 +16,26 @@ We implement napari applications for:

-**Beta version**

-

-This is an advanced beta version. While many features are still under development, we aim to keep the user interface and python library stable.

-Any feedback is welcome, but please be aware that the functionality is under active development and that some features may not be thoroughly tested yet.

-We will soon provide a stand-alone application for running the `micro_sam` annotation tools, and plan to also release it as [napari plugin](https://napari.org/stable/plugins/index.html) in the future.

-

-If you run into any problems or have questions please open an issue on Github or reach out via [image.sc](https://forum.image.sc/) using the tag `micro-sam` and tagging @constantinpape.

+If you run into any problems or have questions regarding our tool please open an issue on Github or reach out via [image.sc](https://forum.image.sc/) using the tag `micro-sam` and tagging @constantinpape.

## Installation and Usage

You can install `micro_sam` via conda:

```

-conda install -c conda-forge micro_sam

+conda install -c conda-forge micro_sam napari pyqt

```

You can then start the `micro_sam` tools by running `$ micro_sam.annotator` in the command line.

+For an introduction in how to use the napari based annotation tools check out [the video tutorials](https://www.youtube.com/watch?v=ket7bDUP9tI&list=PLwYZXQJ3f36GQPpKCrSbHjGiH39X4XjSO&pp=gAQBiAQB).

Please check out [the documentation](https://computational-cell-analytics.github.io/micro-sam/) for more details on the installation and usage of `micro_sam`.

## Citation

If you are using this repository in your research please cite

-- [SegmentAnything](https://arxiv.org/abs/2304.02643)

-- and our repository on [zenodo](https://doi.org/10.5281/zenodo.7919746) (we are working on a publication)

+- Our [preprint](https://doi.org/10.1101/2023.08.21.554208)

+- and the original [Segment Anything publication](https://arxiv.org/abs/2304.02643)

## Related Projects

@@ -56,6 +51,17 @@ Compared to these we support more applications (2d, 3d and tracking), and provid

## Release Overview

+**New in version 0.2.1 and 0.2.2**

+

+- Several bugfixes for the newly introduced functionality in 0.2.0.

+

+**New in version 0.2.0**

+

+- Functionality for training / finetuning and evaluation of Segment Anything Models

+- Full support for our finetuned segment anything models

+- Improvements of the automated instance segmentation functionality in the 2d annotator

+- And several other small improvements

+

**New in version 0.1.1**

- Fine-tuned segment anything models for microscopy (experimental)

diff --git a/deployment/construct.yaml b/deployment/construct.yaml

index 5231b738..d9db54df 100644

--- a/deployment/construct.yaml

+++ b/deployment/construct.yaml

@@ -8,8 +8,6 @@ header_image: ../doc/images/micro-sam-logo.png

icon_image: ../doc/images/micro-sam-logo.png

channels:

- conda-forge

-welcome_text: Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod

- tempor incididunt ut labore et dolore magna aliqua.

-conclusion_text: Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris

- nisi ut aliquip ex ea commodo consequat.

-initialize_by_default: false

\ No newline at end of file

+welcome_text: Install Segment Anything for Microscopy.

+conclusion_text: Segment Anything for Microscopy has been installed.

+initialize_by_default: false

diff --git a/doc/annotation_tools.md b/doc/annotation_tools.md

index 921e1112..7d6a4027 100644

--- a/doc/annotation_tools.md

+++ b/doc/annotation_tools.md

@@ -13,9 +13,22 @@ The annotation tools can be started from the `micro_sam` GUI, the command line o

$ micro_sam.annotator

```

-They are built with [napari](https://napari.org/stable/) to implement the viewer and user interaction.

+They are built using [napari](https://napari.org/stable/) and [magicgui](https://pyapp-kit.github.io/magicgui/) to provide the viewer and user interface.

If you are not familiar with napari yet, [start here](https://napari.org/stable/tutorials/fundamentals/quick_start.html).

-The `micro_sam` applications are mainly based on [the point layer](https://napari.org/stable/howtos/layers/points.html), [the shape layer](https://napari.org/stable/howtos/layers/shapes.html) and [the label layer](https://napari.org/stable/howtos/layers/labels.html).

+The `micro_sam` tools use [the point layer](https://napari.org/stable/howtos/layers/points.html), [shape layer](https://napari.org/stable/howtos/layers/shapes.html) and [label layer](https://napari.org/stable/howtos/layers/labels.html).

+

+The annotation tools are explained in detail below. In addition to the documentation here we also provide [video tutorials](https://www.youtube.com/watch?v=ket7bDUP9tI&list=PLwYZXQJ3f36GQPpKCrSbHjGiH39X4XjSO).

+

+

+## Starting via GUI

+

+The annotation toools can be started from a central GUI, which can be started with the command `$ micro_sam.annotator` or using the executable [from an installer](#from-installer).

+

+In the GUI you can select with of the four annotation tools you want to use:

+

-**Beta version**

-

-This is an advanced beta version. While many features are still under development, we aim to keep the user interface and python library stable.

-Any feedback is welcome, but please be aware that the functionality is under active development and that some features may not be thoroughly tested yet.

-We will soon provide a stand-alone application for running the `micro_sam` annotation tools, and plan to also release it as [napari plugin](https://napari.org/stable/plugins/index.html) in the future.

-

-If you run into any problems or have questions please open an issue on Github or reach out via [image.sc](https://forum.image.sc/) using the tag `micro-sam` and tagging @constantinpape.

+If you run into any problems or have questions regarding our tool please open an issue on Github or reach out via [image.sc](https://forum.image.sc/) using the tag `micro-sam` and tagging @constantinpape.

## Installation and Usage

You can install `micro_sam` via conda:

```

-conda install -c conda-forge micro_sam

+conda install -c conda-forge micro_sam napari pyqt

```

You can then start the `micro_sam` tools by running `$ micro_sam.annotator` in the command line.

+For an introduction in how to use the napari based annotation tools check out [the video tutorials](https://www.youtube.com/watch?v=ket7bDUP9tI&list=PLwYZXQJ3f36GQPpKCrSbHjGiH39X4XjSO&pp=gAQBiAQB).

Please check out [the documentation](https://computational-cell-analytics.github.io/micro-sam/) for more details on the installation and usage of `micro_sam`.

## Citation

If you are using this repository in your research please cite

-- [SegmentAnything](https://arxiv.org/abs/2304.02643)

-- and our repository on [zenodo](https://doi.org/10.5281/zenodo.7919746) (we are working on a publication)

+- Our [preprint](https://doi.org/10.1101/2023.08.21.554208)

+- and the original [Segment Anything publication](https://arxiv.org/abs/2304.02643)

## Related Projects

@@ -56,6 +51,17 @@ Compared to these we support more applications (2d, 3d and tracking), and provid

## Release Overview

+**New in version 0.2.1 and 0.2.2**

+

+- Several bugfixes for the newly introduced functionality in 0.2.0.

+

+**New in version 0.2.0**

+

+- Functionality for training / finetuning and evaluation of Segment Anything Models

+- Full support for our finetuned segment anything models

+- Improvements of the automated instance segmentation functionality in the 2d annotator

+- And several other small improvements

+

**New in version 0.1.1**

- Fine-tuned segment anything models for microscopy (experimental)

diff --git a/deployment/construct.yaml b/deployment/construct.yaml

index 5231b738..d9db54df 100644

--- a/deployment/construct.yaml

+++ b/deployment/construct.yaml

@@ -8,8 +8,6 @@ header_image: ../doc/images/micro-sam-logo.png

icon_image: ../doc/images/micro-sam-logo.png

channels:

- conda-forge

-welcome_text: Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod

- tempor incididunt ut labore et dolore magna aliqua.

-conclusion_text: Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris

- nisi ut aliquip ex ea commodo consequat.

-initialize_by_default: false

\ No newline at end of file

+welcome_text: Install Segment Anything for Microscopy.

+conclusion_text: Segment Anything for Microscopy has been installed.

+initialize_by_default: false

diff --git a/doc/annotation_tools.md b/doc/annotation_tools.md

index 921e1112..7d6a4027 100644

--- a/doc/annotation_tools.md

+++ b/doc/annotation_tools.md

@@ -13,9 +13,22 @@ The annotation tools can be started from the `micro_sam` GUI, the command line o

$ micro_sam.annotator

```

-They are built with [napari](https://napari.org/stable/) to implement the viewer and user interaction.

+They are built using [napari](https://napari.org/stable/) and [magicgui](https://pyapp-kit.github.io/magicgui/) to provide the viewer and user interface.

If you are not familiar with napari yet, [start here](https://napari.org/stable/tutorials/fundamentals/quick_start.html).

-The `micro_sam` applications are mainly based on [the point layer](https://napari.org/stable/howtos/layers/points.html), [the shape layer](https://napari.org/stable/howtos/layers/shapes.html) and [the label layer](https://napari.org/stable/howtos/layers/labels.html).

+The `micro_sam` tools use [the point layer](https://napari.org/stable/howtos/layers/points.html), [shape layer](https://napari.org/stable/howtos/layers/shapes.html) and [label layer](https://napari.org/stable/howtos/layers/labels.html).

+

+The annotation tools are explained in detail below. In addition to the documentation here we also provide [video tutorials](https://www.youtube.com/watch?v=ket7bDUP9tI&list=PLwYZXQJ3f36GQPpKCrSbHjGiH39X4XjSO).

+

+

+## Starting via GUI

+

+The annotation toools can be started from a central GUI, which can be started with the command `$ micro_sam.annotator` or using the executable [from an installer](#from-installer).

+

+In the GUI you can select with of the four annotation tools you want to use:

+ +

+And after selecting them a new window will open where you can select the input file path and other optional parameter. Then click the top button to start the tool. **Note: If you are not starting the annotation tool with a path to pre-computed embeddings then it can take several minutes to open napari after pressing the button because the embeddings are being computed.**

+

## Annotator 2D

@@ -44,7 +57,7 @@ It contains the following elements:

Note that point prompts and box prompts can be combined. When you're using point prompts you can only segment one object at a time. With box prompts you can segment several objects at once.

-Check out [this video](https://youtu.be/DfWE_XRcqN8) for an example of how to use the interactive 2d annotator.

+Check out [this video](https://youtu.be/ket7bDUP9tI) for a tutorial for the 2d annotation tool.

We also provide the `image series annotator`, which can be used for running the 2d annotator for several images in a folder. You can start by clicking `Image series annotator` in the GUI, running `micro_sam.image_series_annotator` in the command line or from a [python script](https://github.com/computational-cell-analytics/micro-sam/blob/master/examples/image_series_annotator.py).

@@ -69,7 +82,7 @@ Most elements are the same as in [the 2d annotator](#annotator-2d):

Note that you can only segment one object at a time with the 3d annotator.

-Check out [this video](https://youtu.be/5Jo_CtIefTM) for an overview of the interactive 3d segmentation functionality.

+Check out [this video](https://youtu.be/PEy9-rTCdS4) for a tutorial for the 3d annotation tool.

## Annotator Tracking

@@ -93,7 +106,7 @@ Most elements are the same as in [the 2d annotator](#annotator-2d):

Note that the tracking annotator only supports 2d image data, volumetric data is not supported.

-Check out [this video](https://youtu.be/PBPW0rDOn9w) for an overview of the interactive tracking functionality.

+Check out [this video](https://youtu.be/Xi5pRWMO6_w) for a tutorial for how to use the tracking annotation tool.

## Tips & Tricks

@@ -105,7 +118,7 @@ You can activate tiling by passing the parameters `tile_shape`, which determines

- If you're using the command line functions you can pass them via the options `--tile_shape 1024 1024 --halo 128 128`

- Note that prediction with tiling only works when the embeddings are cached to file, so you must specify an `embedding_path` (`-e` in the CLI).

- You should choose the `halo` such that it is larger than half of the maximal radius of the objects your segmenting.

-- The applications pre-compute the image embeddings produced by SegmentAnything and (optionally) store them on disc. If you are using a CPU this step can take a while for 3d data or timeseries (you will see a progress bar with a time estimate). If you have access to a GPU without graphical interface (e.g. via a local computer cluster or a cloud provider), you can also pre-compute the embeddings there and then copy them to your laptop / local machine to speed this up. You can use the command `micro_sam.precompute_embeddings` for this (it is installed with the rest of the applications). You can specify the location of the precomputed embeddings via the `embedding_path` argument.

+- The applications pre-compute the image embeddings produced by SegmentAnything and (optionally) store them on disc. If you are using a CPU this step can take a while for 3d data or timeseries (you will see a progress bar with a time estimate). If you have access to a GPU without graphical interface (e.g. via a local computer cluster or a cloud provider), you can also pre-compute the embeddings there and then copy them to your laptop / local machine to speed this up. You can use the command `micro_sam.precompute_state` for this (it is installed with the rest of the applications). You can specify the location of the precomputed embeddings via the `embedding_path` argument.

- Most other processing steps are very fast even on a CPU, so interactive annotation is possible. An exception is the automatic segmentation step (2d segmentation), which takes several minutes without a GPU (depending on the image size). For large volumes and timeseries segmenting an object in 3d / tracking across time can take a couple settings with a CPU (it is very fast with a GPU).

- You can also try using a smaller version of the SegmentAnything model to speed up the computations. For this you can pass the `model_type` argument and either set it to `vit_b` or to `vit_l` (default is `vit_h`). However, this may lead to worse results.

- You can save and load the results from the `committed_objects` / `committed_tracks` layer to correct segmentations you obtained from another tool (e.g. CellPose) or to save intermediate annotation results. The results can be saved via `File -> Save Selected Layer(s) ...` in the napari menu (see the tutorial videos for details). They can be loaded again by specifying the corresponding location via the `segmentation_result` (2d and 3d segmentation) or `tracking_result` (tracking) argument.

diff --git a/doc/finetuned_models.md b/doc/finetuned_models.md

new file mode 100644

index 00000000..4fc9fb13

--- /dev/null

+++ b/doc/finetuned_models.md

@@ -0,0 +1,36 @@

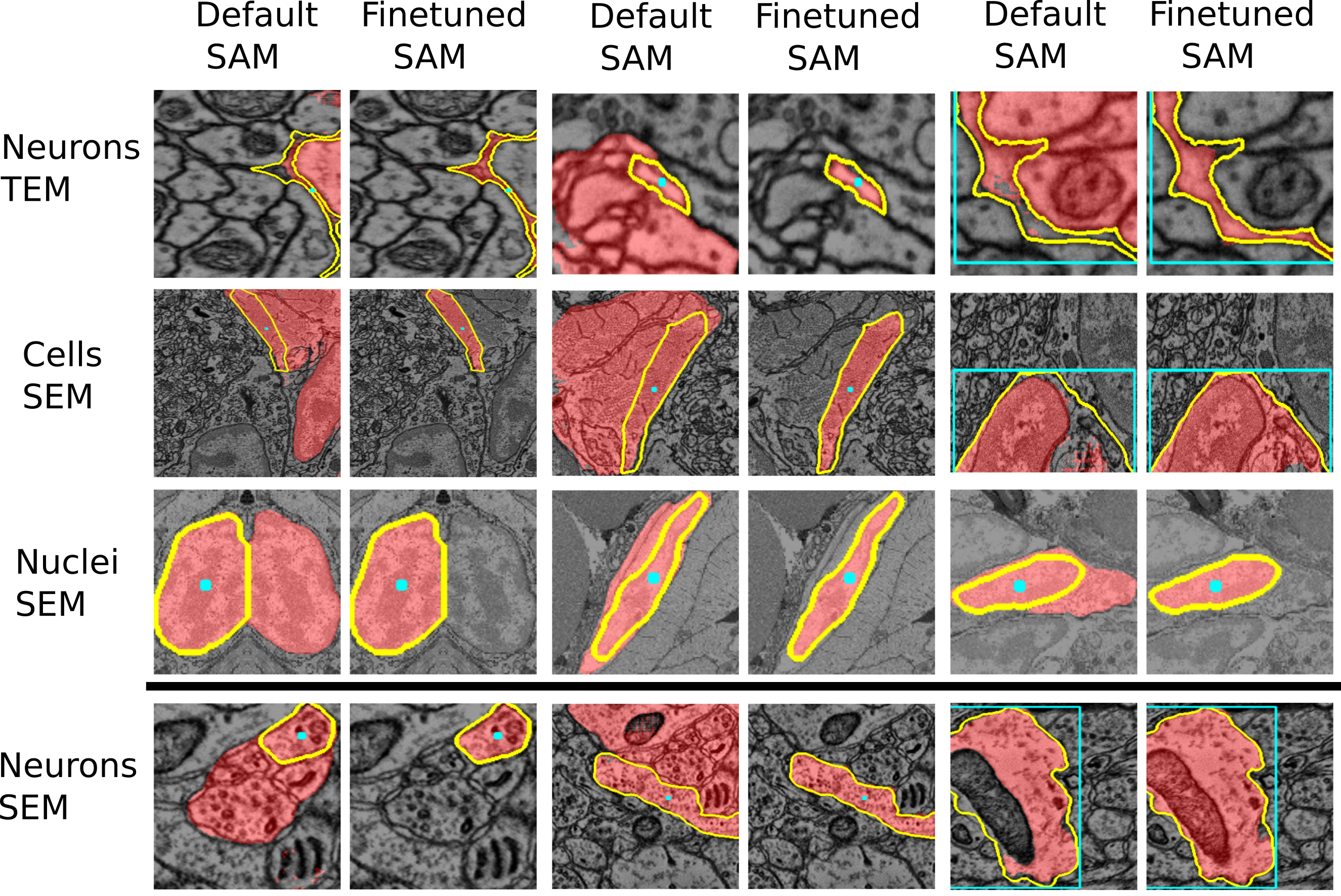

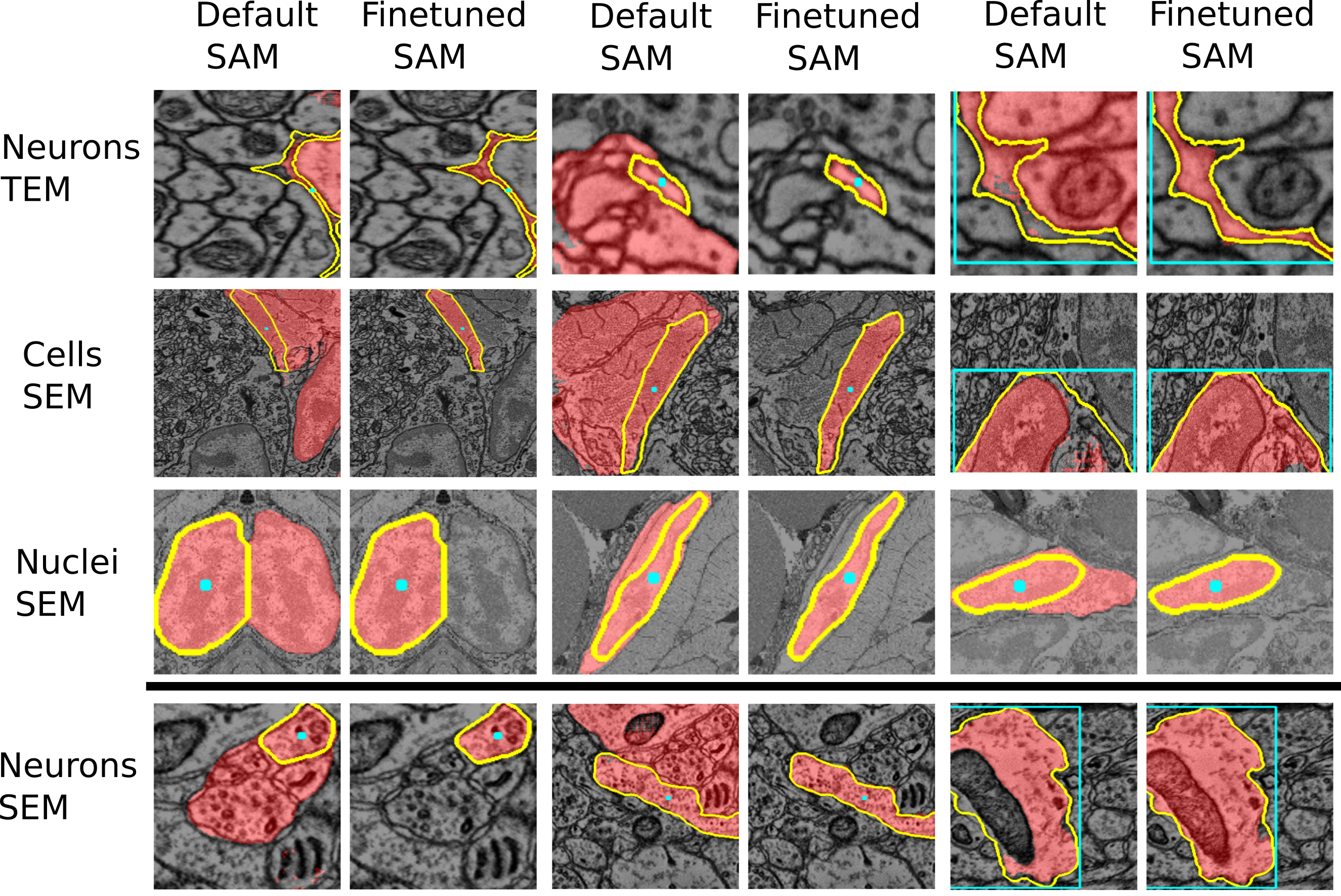

+# Finetuned models

+

+We provide models that were finetuned on microscopy data using `micro_sam.training`. They are hosted on zenodo. We currently offer the following models:

+- `vit_h`: Default Segment Anything model with vit-h backbone.

+- `vit_l`: Default Segment Anything model with vit-l backbone.

+- `vit_b`: Default Segment Anything model with vit-b backbone.

+- `vit_h_lm`: Finetuned Segment Anything model for cells and nuclei in light microscopy data with vit-h backbone.

+- `vit_b_lm`: Finetuned Segment Anything model for cells and nuclei in light microscopy data with vit-b backbone.

+- `vit_h_em`: Finetuned Segment Anything model for neurites and cells in electron microscopy data with vit-h backbone.

+- `vit_b_em`: Finetuned Segment Anything model for neurites and cells in electron microscopy data with vit-b backbone.

+

+See the two figures below of the improvements through the finetuned model for LM and EM data.

+

+

+

+And after selecting them a new window will open where you can select the input file path and other optional parameter. Then click the top button to start the tool. **Note: If you are not starting the annotation tool with a path to pre-computed embeddings then it can take several minutes to open napari after pressing the button because the embeddings are being computed.**

+

## Annotator 2D

@@ -44,7 +57,7 @@ It contains the following elements:

Note that point prompts and box prompts can be combined. When you're using point prompts you can only segment one object at a time. With box prompts you can segment several objects at once.

-Check out [this video](https://youtu.be/DfWE_XRcqN8) for an example of how to use the interactive 2d annotator.

+Check out [this video](https://youtu.be/ket7bDUP9tI) for a tutorial for the 2d annotation tool.

We also provide the `image series annotator`, which can be used for running the 2d annotator for several images in a folder. You can start by clicking `Image series annotator` in the GUI, running `micro_sam.image_series_annotator` in the command line or from a [python script](https://github.com/computational-cell-analytics/micro-sam/blob/master/examples/image_series_annotator.py).

@@ -69,7 +82,7 @@ Most elements are the same as in [the 2d annotator](#annotator-2d):

Note that you can only segment one object at a time with the 3d annotator.

-Check out [this video](https://youtu.be/5Jo_CtIefTM) for an overview of the interactive 3d segmentation functionality.

+Check out [this video](https://youtu.be/PEy9-rTCdS4) for a tutorial for the 3d annotation tool.

## Annotator Tracking

@@ -93,7 +106,7 @@ Most elements are the same as in [the 2d annotator](#annotator-2d):

Note that the tracking annotator only supports 2d image data, volumetric data is not supported.

-Check out [this video](https://youtu.be/PBPW0rDOn9w) for an overview of the interactive tracking functionality.

+Check out [this video](https://youtu.be/Xi5pRWMO6_w) for a tutorial for how to use the tracking annotation tool.

## Tips & Tricks

@@ -105,7 +118,7 @@ You can activate tiling by passing the parameters `tile_shape`, which determines

- If you're using the command line functions you can pass them via the options `--tile_shape 1024 1024 --halo 128 128`

- Note that prediction with tiling only works when the embeddings are cached to file, so you must specify an `embedding_path` (`-e` in the CLI).

- You should choose the `halo` such that it is larger than half of the maximal radius of the objects your segmenting.

-- The applications pre-compute the image embeddings produced by SegmentAnything and (optionally) store them on disc. If you are using a CPU this step can take a while for 3d data or timeseries (you will see a progress bar with a time estimate). If you have access to a GPU without graphical interface (e.g. via a local computer cluster or a cloud provider), you can also pre-compute the embeddings there and then copy them to your laptop / local machine to speed this up. You can use the command `micro_sam.precompute_embeddings` for this (it is installed with the rest of the applications). You can specify the location of the precomputed embeddings via the `embedding_path` argument.

+- The applications pre-compute the image embeddings produced by SegmentAnything and (optionally) store them on disc. If you are using a CPU this step can take a while for 3d data or timeseries (you will see a progress bar with a time estimate). If you have access to a GPU without graphical interface (e.g. via a local computer cluster or a cloud provider), you can also pre-compute the embeddings there and then copy them to your laptop / local machine to speed this up. You can use the command `micro_sam.precompute_state` for this (it is installed with the rest of the applications). You can specify the location of the precomputed embeddings via the `embedding_path` argument.

- Most other processing steps are very fast even on a CPU, so interactive annotation is possible. An exception is the automatic segmentation step (2d segmentation), which takes several minutes without a GPU (depending on the image size). For large volumes and timeseries segmenting an object in 3d / tracking across time can take a couple settings with a CPU (it is very fast with a GPU).

- You can also try using a smaller version of the SegmentAnything model to speed up the computations. For this you can pass the `model_type` argument and either set it to `vit_b` or to `vit_l` (default is `vit_h`). However, this may lead to worse results.

- You can save and load the results from the `committed_objects` / `committed_tracks` layer to correct segmentations you obtained from another tool (e.g. CellPose) or to save intermediate annotation results. The results can be saved via `File -> Save Selected Layer(s) ...` in the napari menu (see the tutorial videos for details). They can be loaded again by specifying the corresponding location via the `segmentation_result` (2d and 3d segmentation) or `tracking_result` (tracking) argument.

diff --git a/doc/finetuned_models.md b/doc/finetuned_models.md

new file mode 100644

index 00000000..4fc9fb13

--- /dev/null

+++ b/doc/finetuned_models.md

@@ -0,0 +1,36 @@

+# Finetuned models

+

+We provide models that were finetuned on microscopy data using `micro_sam.training`. They are hosted on zenodo. We currently offer the following models:

+- `vit_h`: Default Segment Anything model with vit-h backbone.

+- `vit_l`: Default Segment Anything model with vit-l backbone.

+- `vit_b`: Default Segment Anything model with vit-b backbone.

+- `vit_h_lm`: Finetuned Segment Anything model for cells and nuclei in light microscopy data with vit-h backbone.

+- `vit_b_lm`: Finetuned Segment Anything model for cells and nuclei in light microscopy data with vit-b backbone.

+- `vit_h_em`: Finetuned Segment Anything model for neurites and cells in electron microscopy data with vit-h backbone.

+- `vit_b_em`: Finetuned Segment Anything model for neurites and cells in electron microscopy data with vit-b backbone.

+

+See the two figures below of the improvements through the finetuned model for LM and EM data.

+

+ +

+

+

+ +

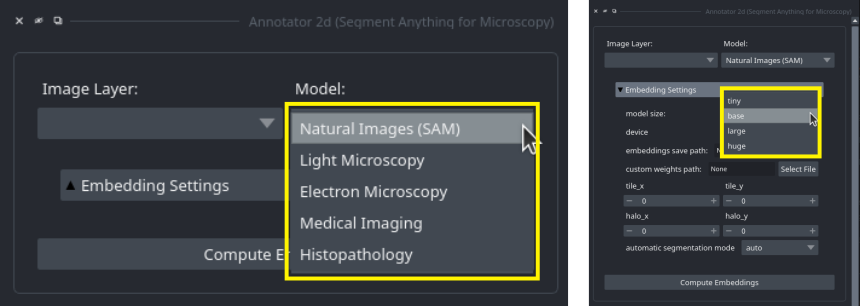

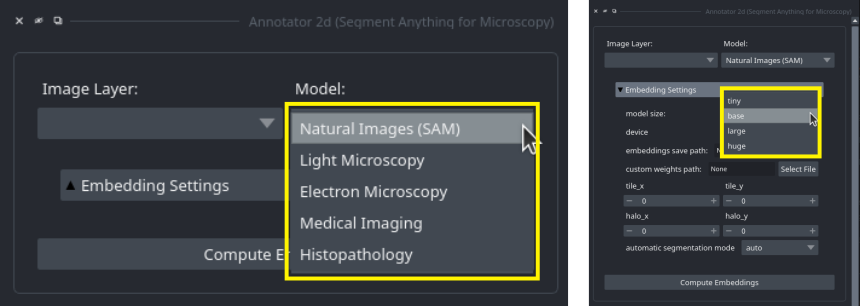

+You can select which of the models is used in the annotation tools by selecting the corresponding name from the `Model Type` menu:

+

+

+

+You can select which of the models is used in the annotation tools by selecting the corresponding name from the `Model Type` menu:

+

+ +

+To use a specific model in the python library you need to pass the corresponding name as value to the `model_type` parameter exposed by all relevant functions.

+See for example the [2d annotator example](https://github.com/computational-cell-analytics/micro-sam/blob/master/examples/annotator_2d.py#L62) where `use_finetuned_model` can be set to `True` to use the `vit_h_lm` model.

+

+## Which model should I choose?

+

+As a rule of thumb:

+- Use the `_lm` models for segmenting cells or nuclei in light microscopy.

+- Use the `_em` models for segmenting ceells or neurites in electron microscopy.

+ - Note that this model does not work well for segmenting mitochondria or other organelles becuase it is biased towards segmenting the full cell / cellular compartment.

+- For other cases use the default models.

+

+See also the figures above for examples where the finetuned models work better than the vanilla models.

+Currently the model `vit_h` is used by default.

+

+We are working on releasing more fine-tuned models, in particular for mitochondria and other organelles in EM.

diff --git a/doc/images/em_comparison.png b/doc/images/em_comparison.png

new file mode 100644

index 00000000..86b2d66c

Binary files /dev/null and b/doc/images/em_comparison.png differ

diff --git a/doc/images/lm_comparison.png b/doc/images/lm_comparison.png

new file mode 100644

index 00000000..4e115d61

Binary files /dev/null and b/doc/images/lm_comparison.png differ

diff --git a/doc/images/micro-sam-gui.png b/doc/images/micro-sam-gui.png

new file mode 100644

index 00000000..26254490

Binary files /dev/null and b/doc/images/micro-sam-gui.png differ

diff --git a/doc/images/model-type-selector.png b/doc/images/model-type-selector.png

new file mode 100644

index 00000000..cab3b077

Binary files /dev/null and b/doc/images/model-type-selector.png differ

diff --git a/doc/images/vanilla-v-finetuned.png b/doc/images/vanilla-v-finetuned.png

deleted file mode 100644

index a72446a4..00000000

Binary files a/doc/images/vanilla-v-finetuned.png and /dev/null differ

diff --git a/doc/installation.md b/doc/installation.md

index 12bda71e..0b49edc5 100644

--- a/doc/installation.md

+++ b/doc/installation.md

@@ -1,16 +1,38 @@

# Installation

-`micro_sam` requires the following dependencies:

+We provide three different ways of installing `micro_sam`:

+- [From conda](#from-conda) is the recommended way if you want to use all functionality.

+- [From source](#from-source) for setting up a development environment to change and potentially contribute to our software.

+- [From installer](#from-installer) to install without having to use conda. This mode of installation is still experimental! It only provides the annotation tools and does not enable model finetuning.

+

+Our software requires the following dependencies:

- [PyTorch](https://pytorch.org/get-started/locally/)

- [SegmentAnything](https://github.com/facebookresearch/segment-anything#installation)

-- [napari](https://napari.org/stable/)

- [elf](https://github.com/constantinpape/elf)

+- [napari](https://napari.org/stable/) (for the interactive annotation tools)

+- [torch_em](https://github.com/constantinpape/torch-em) (for the training functionality)

+

+## From conda

-It is available as a conda package and can be installed via

+`micro_sam` is available as a conda package and can be installed via

```

$ conda install -c conda-forge micro_sam

```

+This command will not install the required dependencies for the annotation tools and for training / finetuning.

+To use the annotation functionality you also need to install `napari`:

+```

+$ conda install -c conda-forge napari pyqt

+```

+And to use the training functionality `torch_em`:

+```

+$ conda install -c conda-forge torch_em

+```

+

+In case the installation via conda takes too long consider using [mamba](https://mamba.readthedocs.io/en/latest/).

+Once you have it installed you can simply replace the `conda` commands with `mamba`.

+

+

## From source

To install `micro_sam` from source, we recommend to first set up a conda environment with the necessary requirements:

@@ -54,3 +76,48 @@ $ pip install -e .

- Install `micro_sam` by running `pip install -e .` in this folder.

- **Note:** we have seen many issues with the pytorch installation on MAC. If a wrong pytorch version is installed for you (which will cause pytorch errors once you run the application) please try again with a clean `mambaforge` installation. Please install the `OS X, arm64` version from [here](https://github.com/conda-forge/miniforge#mambaforge).

- Some MACs require a specific installation order of packages. If the steps layed out above don't work for you please check out the procedure described [in this github issue](https://github.com/computational-cell-analytics/micro-sam/issues/77).

+

+

+## From installer

+

+We also provide installers for Linuxand Windows:

+- [Linux](https://owncloud.gwdg.de/index.php/s/Cw9RmA3BlyqKJeU)

+- [Windows](https://owncloud.gwdg.de/index.php/s/1iD1eIcMZvEyE6d)

+

+

+**The installers are stil experimental and not fully tested.** Mac is not supported yet, but we are working on also providing an installer for it.

+

+If you encounter problems with them then please consider installing `micro_sam` via [conda](#from-conda) instead.

+

+**Linux Installer:**

+

+To use the installer:

+- Unpack the zip file you have downloaded.

+- Make the installer executable: `$ chmod +x micro_sam-0.2.0post1-Linux-x86_64.sh`

+- Run the installer: `$./micro_sam-0.2.0post1-Linux-x86_64.sh$`

+ - You can select where to install `micro_sam` during the installation. By default it will be installed in `$HOME/micro_sam`.

+ - The installer will unpack all `micro_sam` files to the installation directory.

+- After the installation you can start the annotator with the command `.../micro_sam/bin/micro_sam.annotator`.

+ - To make it easier to run the annotation tool you can add `.../micro_sam/bin` to your `PATH` or set a softlink to `.../micro_sam/bin/micro_sam.annotator`.

+

+

+

+**Windows Installer:**

+

+- Unpack the zip file you have downloaded.

+- Run the installer by double clicking on it.

+- Choose installation type: `Just Me(recommended)` or `All Users(requires admin privileges)`.

+- Choose installation path. By default it will be installed in `C:\Users\\micro_sam` for `Just Me` installation or in `C:\ProgramData\micro_sam` for `All Users`.

+ - The installer will unpack all micro_sam files to the installation directory.

+- After the installation you can start the annotator by double clicking on `.\micro_sam\Scripts\micro_sam.annotator.exe` or with the command `.\micro_sam\Scripts\micro_sam.annotator.exe` from the Command Prompt.

diff --git a/doc/python_library.md b/doc/python_library.md

index a34b1966..dbab4b0a 100644

--- a/doc/python_library.md

+++ b/doc/python_library.md

@@ -5,24 +5,25 @@ The python library can be imported via

import micro_sam

```

-It implements functionality for running Segment Anything for 2d and 3d data, provides more instance segmentation functionality and several other helpful functions for using Segment Anything.

-This functionality is used to implement the `micro_sam` annotation tools, but you can also use it as a standalone python library. Check out the documentation under `Submodules` for more details on the python library.

+The library

+- implements function to apply Segment Anything to 2d and 3d data more conviently in `micro_sam.prompt_based_segmentation`.

+- provides more and imporoved automatic instance segmentation functionality in `micro_sam.instance_segmentation`.

+- implements training functionality that can be used for finetuning on your own data in `micro_sam.training`.

+- provides functionality for quantitative and qualitative evaluation of Segment Anything models in `micro_sam.evaluation`.

-## Finetuned models

+This functionality is used to implement the interactive annotation tools and can also be used as a standalone python library.

+Some preliminary examples for how to use the python library can be found [here](https://github.com/computational-cell-analytics/micro-sam/tree/master/examples/use_as_library). Check out the `Submodules` documentation for more details.

-We provide finetuned Segment Anything models for microscopy data. They are still in an experimental stage and we will upload more and better models soon, as well as the code for fine-tuning.

-For using the preliminary models, check out the [2d annotator example](https://github.com/computational-cell-analytics/micro-sam/blob/master/examples/annotator_2d.py#L62) and set `use_finetuned_model` to `True`.

+## Training your own model

-We currently provide support for the following models:

-- `vit_h`: The default Segment Anything model with vit-h backbone.

-- `vit_l`: The default Segment Anything model with vit-l backbone.

-- `vit_b`: The default Segment Anything model with vit-b backbone.

-- `vit_h_lm`: The preliminary finetuned Segment Anything model for light microscopy data with vit-h backbone.

-- `vit_b_lm`: The preliminary finetuned Segment Anything model for light microscopy data with vit-b backbone.

+We reimplement the training logic described in the [Segment Anything publication](https://arxiv.org/abs/2304.02643) to enable finetuning on custom data.

+We use this functionality to provide the [finetuned microscopy models](#finetuned-models) and it can also be used to finetune models on your own data.

+In fact the best results can be expected when finetuning on your own data, and we found that it does not require much annotated training data to get siginficant improvements in model performance.

+So a good strategy is to annotate a few images with one of the provided models using one of the interactive annotation tools and, if the annotation is not working as good as expected yet, finetune on the annotated data.

+

-These are also the valid names for the `model_type` parameter in `micro_sam`. The library will automatically choose and if necessary download the corresponding model.

-

-See the difference between the normal and finetuned Segment Anything ViT-h model on an image from [LiveCELL](https://sartorius-research.github.io/LIVECell/):

-

-

+

+To use a specific model in the python library you need to pass the corresponding name as value to the `model_type` parameter exposed by all relevant functions.

+See for example the [2d annotator example](https://github.com/computational-cell-analytics/micro-sam/blob/master/examples/annotator_2d.py#L62) where `use_finetuned_model` can be set to `True` to use the `vit_h_lm` model.

+

+## Which model should I choose?

+

+As a rule of thumb:

+- Use the `_lm` models for segmenting cells or nuclei in light microscopy.

+- Use the `_em` models for segmenting ceells or neurites in electron microscopy.

+ - Note that this model does not work well for segmenting mitochondria or other organelles becuase it is biased towards segmenting the full cell / cellular compartment.

+- For other cases use the default models.

+

+See also the figures above for examples where the finetuned models work better than the vanilla models.

+Currently the model `vit_h` is used by default.

+

+We are working on releasing more fine-tuned models, in particular for mitochondria and other organelles in EM.

diff --git a/doc/images/em_comparison.png b/doc/images/em_comparison.png

new file mode 100644

index 00000000..86b2d66c

Binary files /dev/null and b/doc/images/em_comparison.png differ

diff --git a/doc/images/lm_comparison.png b/doc/images/lm_comparison.png

new file mode 100644

index 00000000..4e115d61

Binary files /dev/null and b/doc/images/lm_comparison.png differ

diff --git a/doc/images/micro-sam-gui.png b/doc/images/micro-sam-gui.png

new file mode 100644

index 00000000..26254490

Binary files /dev/null and b/doc/images/micro-sam-gui.png differ

diff --git a/doc/images/model-type-selector.png b/doc/images/model-type-selector.png

new file mode 100644

index 00000000..cab3b077

Binary files /dev/null and b/doc/images/model-type-selector.png differ

diff --git a/doc/images/vanilla-v-finetuned.png b/doc/images/vanilla-v-finetuned.png

deleted file mode 100644

index a72446a4..00000000

Binary files a/doc/images/vanilla-v-finetuned.png and /dev/null differ

diff --git a/doc/installation.md b/doc/installation.md

index 12bda71e..0b49edc5 100644

--- a/doc/installation.md

+++ b/doc/installation.md

@@ -1,16 +1,38 @@

# Installation

-`micro_sam` requires the following dependencies:

+We provide three different ways of installing `micro_sam`:

+- [From conda](#from-conda) is the recommended way if you want to use all functionality.

+- [From source](#from-source) for setting up a development environment to change and potentially contribute to our software.

+- [From installer](#from-installer) to install without having to use conda. This mode of installation is still experimental! It only provides the annotation tools and does not enable model finetuning.

+

+Our software requires the following dependencies:

- [PyTorch](https://pytorch.org/get-started/locally/)

- [SegmentAnything](https://github.com/facebookresearch/segment-anything#installation)

-- [napari](https://napari.org/stable/)

- [elf](https://github.com/constantinpape/elf)

+- [napari](https://napari.org/stable/) (for the interactive annotation tools)

+- [torch_em](https://github.com/constantinpape/torch-em) (for the training functionality)

+

+## From conda

-It is available as a conda package and can be installed via

+`micro_sam` is available as a conda package and can be installed via

```

$ conda install -c conda-forge micro_sam

```

+This command will not install the required dependencies for the annotation tools and for training / finetuning.

+To use the annotation functionality you also need to install `napari`:

+```

+$ conda install -c conda-forge napari pyqt

+```

+And to use the training functionality `torch_em`:

+```

+$ conda install -c conda-forge torch_em

+```

+

+In case the installation via conda takes too long consider using [mamba](https://mamba.readthedocs.io/en/latest/).

+Once you have it installed you can simply replace the `conda` commands with `mamba`.

+

+

## From source

To install `micro_sam` from source, we recommend to first set up a conda environment with the necessary requirements:

@@ -54,3 +76,48 @@ $ pip install -e .

- Install `micro_sam` by running `pip install -e .` in this folder.

- **Note:** we have seen many issues with the pytorch installation on MAC. If a wrong pytorch version is installed for you (which will cause pytorch errors once you run the application) please try again with a clean `mambaforge` installation. Please install the `OS X, arm64` version from [here](https://github.com/conda-forge/miniforge#mambaforge).

- Some MACs require a specific installation order of packages. If the steps layed out above don't work for you please check out the procedure described [in this github issue](https://github.com/computational-cell-analytics/micro-sam/issues/77).

+

+

+## From installer

+

+We also provide installers for Linuxand Windows:

+- [Linux](https://owncloud.gwdg.de/index.php/s/Cw9RmA3BlyqKJeU)

+- [Windows](https://owncloud.gwdg.de/index.php/s/1iD1eIcMZvEyE6d)

+

+

+**The installers are stil experimental and not fully tested.** Mac is not supported yet, but we are working on also providing an installer for it.

+

+If you encounter problems with them then please consider installing `micro_sam` via [conda](#from-conda) instead.

+

+**Linux Installer:**

+

+To use the installer:

+- Unpack the zip file you have downloaded.

+- Make the installer executable: `$ chmod +x micro_sam-0.2.0post1-Linux-x86_64.sh`

+- Run the installer: `$./micro_sam-0.2.0post1-Linux-x86_64.sh$`

+ - You can select where to install `micro_sam` during the installation. By default it will be installed in `$HOME/micro_sam`.

+ - The installer will unpack all `micro_sam` files to the installation directory.

+- After the installation you can start the annotator with the command `.../micro_sam/bin/micro_sam.annotator`.

+ - To make it easier to run the annotation tool you can add `.../micro_sam/bin` to your `PATH` or set a softlink to `.../micro_sam/bin/micro_sam.annotator`.

+

+

+

+**Windows Installer:**

+

+- Unpack the zip file you have downloaded.

+- Run the installer by double clicking on it.

+- Choose installation type: `Just Me(recommended)` or `All Users(requires admin privileges)`.

+- Choose installation path. By default it will be installed in `C:\Users\\micro_sam` for `Just Me` installation or in `C:\ProgramData\micro_sam` for `All Users`.

+ - The installer will unpack all micro_sam files to the installation directory.

+- After the installation you can start the annotator by double clicking on `.\micro_sam\Scripts\micro_sam.annotator.exe` or with the command `.\micro_sam\Scripts\micro_sam.annotator.exe` from the Command Prompt.

diff --git a/doc/python_library.md b/doc/python_library.md

index a34b1966..dbab4b0a 100644

--- a/doc/python_library.md

+++ b/doc/python_library.md

@@ -5,24 +5,25 @@ The python library can be imported via

import micro_sam

```

-It implements functionality for running Segment Anything for 2d and 3d data, provides more instance segmentation functionality and several other helpful functions for using Segment Anything.

-This functionality is used to implement the `micro_sam` annotation tools, but you can also use it as a standalone python library. Check out the documentation under `Submodules` for more details on the python library.

+The library

+- implements function to apply Segment Anything to 2d and 3d data more conviently in `micro_sam.prompt_based_segmentation`.

+- provides more and imporoved automatic instance segmentation functionality in `micro_sam.instance_segmentation`.

+- implements training functionality that can be used for finetuning on your own data in `micro_sam.training`.

+- provides functionality for quantitative and qualitative evaluation of Segment Anything models in `micro_sam.evaluation`.

-## Finetuned models

+This functionality is used to implement the interactive annotation tools and can also be used as a standalone python library.

+Some preliminary examples for how to use the python library can be found [here](https://github.com/computational-cell-analytics/micro-sam/tree/master/examples/use_as_library). Check out the `Submodules` documentation for more details.

-We provide finetuned Segment Anything models for microscopy data. They are still in an experimental stage and we will upload more and better models soon, as well as the code for fine-tuning.

-For using the preliminary models, check out the [2d annotator example](https://github.com/computational-cell-analytics/micro-sam/blob/master/examples/annotator_2d.py#L62) and set `use_finetuned_model` to `True`.

+## Training your own model

-We currently provide support for the following models:

-- `vit_h`: The default Segment Anything model with vit-h backbone.

-- `vit_l`: The default Segment Anything model with vit-l backbone.

-- `vit_b`: The default Segment Anything model with vit-b backbone.

-- `vit_h_lm`: The preliminary finetuned Segment Anything model for light microscopy data with vit-h backbone.

-- `vit_b_lm`: The preliminary finetuned Segment Anything model for light microscopy data with vit-b backbone.

+We reimplement the training logic described in the [Segment Anything publication](https://arxiv.org/abs/2304.02643) to enable finetuning on custom data.

+We use this functionality to provide the [finetuned microscopy models](#finetuned-models) and it can also be used to finetune models on your own data.

+In fact the best results can be expected when finetuning on your own data, and we found that it does not require much annotated training data to get siginficant improvements in model performance.

+So a good strategy is to annotate a few images with one of the provided models using one of the interactive annotation tools and, if the annotation is not working as good as expected yet, finetune on the annotated data.

+

-These are also the valid names for the `model_type` parameter in `micro_sam`. The library will automatically choose and if necessary download the corresponding model.

-

-See the difference between the normal and finetuned Segment Anything ViT-h model on an image from [LiveCELL](https://sartorius-research.github.io/LIVECell/):

-

- +The training logic is implemented in `micro_sam.training` and is based on [torch-em](https://github.com/constantinpape/torch-em). Please check out [examples/finetuning](https://github.com/computational-cell-analytics/micro-sam/tree/master/examples/finetuning) to see how you can finetune on your own data with it. The script `finetune_hela.py` contains an example for finetuning on a small custom microscopy dataset and `use_finetuned_model.py` shows how this model can then be used in the interactive annotatin tools.

+More advanced examples, including quantitative and qualitative evaluation, of finetuned models can be found in [finetuning](https://github.com/computational-cell-analytics/micro-sam/tree/master/finetuning), which contains the code for training and evaluating our microscopy models.

diff --git a/doc/start_page.md b/doc/start_page.md

index b5fab2a9..840a650b 100644

--- a/doc/start_page.md

+++ b/doc/start_page.md

@@ -2,7 +2,7 @@

Segment Anything for Microscopy implements automatic and interactive annotation for microscopy data. It is built on top of [Segment Anything](https://segment-anything.com/) by Meta AI and specializes it for microscopy and other bio-imaging data.

Its core components are:

-- The `micro_sam` annotator tools for interactive data annotation with [napari](https://napari.org/stable/).

+- The `micro_sam` tools for interactive data annotation with [napari](https://napari.org/stable/).

- The `micro_sam` library to apply Segment Anything to 2d and 3d data or fine-tune it on your data.

- The `micro_sam` models that are fine-tuned on publicly available microscopy data.

@@ -19,20 +19,18 @@ On our roadmap for more functionality are:

If you run into any problems or have questions please open an issue on Github or reach out via [image.sc](https://forum.image.sc/) using the tag `micro-sam` and tagging @constantinpape.

-

## Quickstart

You can install `micro_sam` via conda:

```

-$ conda install -c conda-forge micro_sam

+$ conda install -c conda-forge micro_sam napari pyqt

```

-For more installation options check out [Installation](#installation)

+We also provide experimental installers for all operating systems.

+For more details on the available installation options check out [the installation section](#installation).

After installing `micro_sam` you can run the annotation tool via `$ micro_sam.annotator`, which opens a menu for selecting the annotation tool and its inputs.

-See [Annotation Tools](#annotation-tools) for an overview and explanation of the annotation functionality.

+See [the annotation tool section](#annotation-tools) for an overview and explanation of the annotation functionality.

The `micro_sam` python library can be used via

```python

@@ -47,7 +45,5 @@ For now, check out [the example script for the 2d annotator](https://github.com/

## Citation

If you are using `micro_sam` in your research please cite

-- [SegmentAnything](https://arxiv.org/abs/2304.02643)

-- and our repository on [zenodo](https://doi.org/10.5281/zenodo.7919746)

-

-We will release a preprint soon that describes this work and can be cited instead of zenodo.

+- Our [preprint](https://doi.org/10.1101/2023.08.21.554208)

+- and the original [Segment Anything publication](https://arxiv.org/abs/2304.02643)

diff --git a/environment_cpu.yaml b/environment_cpu.yaml

index 4d5f8ea7..ad91dab9 100644

--- a/environment_cpu.yaml

+++ b/environment_cpu.yaml

@@ -11,6 +11,7 @@ dependencies:

- pytorch

- segment-anything

- torchvision

+ - torch_em >=0.5.1

- tqdm

# - pip:

# - git+https://github.com/facebookresearch/segment-anything.git

diff --git a/environment_gpu.yaml b/environment_gpu.yaml

index 21d5df53..57700759 100644

--- a/environment_gpu.yaml

+++ b/environment_gpu.yaml

@@ -12,6 +12,7 @@ dependencies:

- pytorch-cuda>=11.7 # you may need to update the cuda version to match your system

- segment-anything

- torchvision

+ - torch_em >=0.5.1

- tqdm

# - pip:

# - git+https://github.com/facebookresearch/segment-anything.git

diff --git a/examples/README.md b/examples/README.md

index 87b3a963..4844167f 100644

--- a/examples/README.md

+++ b/examples/README.md

@@ -6,5 +6,8 @@ Examples for using the micro_sam annotation tools:

- `annotator_tracking.py`: run the interactive tracking annotation tool

- `image_series_annotator.py`: run the annotation tool for a series of images

+The folder `finetuning` contains example scripts that show how a Segment Anything model can be fine-tuned

+on custom data with the `micro_sam.train` library, and how the finetuned models can then be used within the annotatin tools.

+

The folder `use_as_library` contains example scripts that show how `micro_sam` can be used as a python

-library to apply Segment Anything on mult-dimensional data.

+library to apply Segment Anything to mult-dimensional data.

diff --git a/examples/annotator_2d.py b/examples/annotator_2d.py

index f0d6d44b..8f4930f2 100644

--- a/examples/annotator_2d.py

+++ b/examples/annotator_2d.py

@@ -34,7 +34,7 @@ def hela_2d_annotator(use_finetuned_model):

embedding_path = "./embeddings/embeddings-hela2d.zarr"

model_type = "vit_h"

- annotator_2d(image, embedding_path, show_embeddings=False, model_type=model_type)

+ annotator_2d(image, embedding_path, show_embeddings=False, model_type=model_type, precompute_amg_state=True)

def wholeslide_annotator(use_finetuned_model):

diff --git a/examples/annotator_with_custom_model.py b/examples/annotator_with_custom_model.py

new file mode 100644

index 00000000..ceb8b2cb

--- /dev/null

+++ b/examples/annotator_with_custom_model.py

@@ -0,0 +1,23 @@

+import h5py

+import micro_sam.sam_annotator as annotator

+from micro_sam.util import get_sam_model

+

+# TODO add an example for the 2d annotator with a custom model

+

+

+def annotator_3d_with_custom_model():

+ with h5py.File("./data/gut1_block_1.h5") as f:

+ raw = f["raw"][:]

+

+ custom_model = "/home/pape/Work/data/models/sam/user-study/vit_h_nuclei_em_finetuned.pt"

+ embedding_path = "./embeddings/nuclei3d-custom-vit-h.zarr"

+ predictor = get_sam_model(checkpoint_path=custom_model, model_type="vit_h")

+ annotator.annotator_3d(raw, embedding_path, predictor=predictor)

+

+

+def main():

+ annotator_3d_with_custom_model()

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/finetuning/.gitignore b/examples/finetuning/.gitignore

new file mode 100644

index 00000000..ec28c5ea

--- /dev/null

+++ b/examples/finetuning/.gitignore

@@ -0,0 +1,2 @@

+checkpoints/

+logs/

diff --git a/examples/finetuning/finetune_hela.py b/examples/finetuning/finetune_hela.py

new file mode 100644

index 00000000..f83bd8cb

--- /dev/null

+++ b/examples/finetuning/finetune_hela.py

@@ -0,0 +1,140 @@

+import os

+

+import numpy as np

+import torch

+import torch_em

+

+import micro_sam.training as sam_training

+from micro_sam.sample_data import fetch_tracking_example_data, fetch_tracking_segmentation_data

+from micro_sam.util import export_custom_sam_model

+

+DATA_FOLDER = "data"

+

+

+def get_dataloader(split, patch_shape, batch_size):

+ """Return train or val data loader for finetuning SAM.

+

+ The data loader must be a torch data loader that retuns `x, y` tensors,

+ where `x` is the image data and `y` are the labels.

+ The labels have to be in a label mask instance segmentation format.

+ I.e. a tensor of the same spatial shape as `x`, with each object mask having its own ID.

+ Important: the ID 0 is reseved for background, and the IDs must be consecutive

+

+ Here, we use `torch_em.default_segmentation_loader` for creating a suitable data loader from

+ the example hela data. You can either adapt this for your own data (see comments below)

+ or write a suitable torch dataloader yourself.

+ """

+ assert split in ("train", "val")

+ os.makedirs(DATA_FOLDER, exist_ok=True)

+

+ # This will download the image and segmentation data for training.

+ image_dir = fetch_tracking_example_data(DATA_FOLDER)

+ segmentation_dir = fetch_tracking_segmentation_data(DATA_FOLDER)

+

+ # torch_em.default_segmentation_loader is a convenience function to build a torch dataloader

+ # from image data and labels for training segmentation models.

+ # It supports image data in various formats. Here, we load image data and labels from the two

+ # folders with tif images that were downloaded by the example data functionality, by specifying

+ # `raw_key` and `label_key` as `*.tif`. This means all images in the respective folders that end with

+ # .tif will be loadded.

+ # The function supports many other file formats. For example, if you have tif stacks with multiple slices

+ # instead of multiple tif images in a foldder, then you can pass raw_key=label_key=None.

+

+ # Load images from multiple files in folder via pattern (here: all tif files)

+ raw_key, label_key = "*.tif", "*.tif"

+ # Alternative: if you have tif stacks you can just set raw_key and label_key to None

+ # raw_key, label_key= None, None

+

+ # The 'roi' argument can be used to subselect parts of the data.

+ # Here, we use it to select the first 70 frames fro the test split and the other frames for the val split.

+ if split == "train":

+ roi = np.s_[:70, :, :]

+ else:

+ roi = np.s_[70:, :, :]

+

+ loader = torch_em.default_segmentation_loader(

+ raw_paths=image_dir, raw_key=raw_key,

+ label_paths=segmentation_dir, label_key=label_key,

+ patch_shape=patch_shape, batch_size=batch_size,

+ ndim=2, is_seg_dataset=True, rois=roi,

+ label_transform=torch_em.transform.label.connected_components,

+ )

+ return loader

+

+

+def run_training(checkpoint_name, model_type):

+ """Run the actual model training."""

+

+ # All hyperparameters for training.

+ batch_size = 1 # the training batch size

+ patch_shape = (1, 512, 512) # the size of patches for training

+ n_objects_per_batch = 25 # the number of objects per batch that will be sampled

+ device = torch.device("cuda") # the device/GPU used for training

+ n_iterations = 10000 # how long we train (in iterations)

+

+ # Get the dataloaders.

+ train_loader = get_dataloader("train", patch_shape, batch_size)

+ val_loader = get_dataloader("val", patch_shape, batch_size)

+

+ # Get the segment anything model, the optimizer and the LR scheduler

+ model = sam_training.get_trainable_sam_model(model_type=model_type, device=device)

+ optimizer = torch.optim.Adam(model.parameters(), lr=1e-5)

+ scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.9, patience=10, verbose=True)

+

+ # This class creates all the training data for a batch (inputs, prompts and labels).

+ convert_inputs = sam_training.ConvertToSamInputs()

+

+ # the trainer which performs training and validation (implemented using "torch_em")

+ trainer = sam_training.SamTrainer(

+ name=checkpoint_name,

+ train_loader=train_loader,

+ val_loader=val_loader,

+ model=model,

+ optimizer=optimizer,

+ # currently we compute loss batch-wise, else we pass channelwise True

+ loss=torch_em.loss.DiceLoss(channelwise=False),

+ metric=torch_em.loss.DiceLoss(),

+ device=device,

+ lr_scheduler=scheduler,

+ logger=sam_training.SamLogger,

+ log_image_interval=10,

+ mixed_precision=True,

+ convert_inputs=convert_inputs,

+ n_objects_per_batch=n_objects_per_batch,

+ n_sub_iteration=8,

+ compile_model=False

+ )

+ trainer.fit(n_iterations)

+

+

+def export_model(checkpoint_name, model_type):

+ """Export the trained model."""

+ # export the model after training so that it can be used by the rest of the micro_sam library

+ export_path = "./finetuned_hela_model.pth"

+ checkpoint_path = os.path.join("checkpoints", checkpoint_name, "best.pt")

+ export_custom_sam_model(

+ checkpoint_path=checkpoint_path,

+ model_type=model_type,

+ save_path=export_path,

+ )

+

+

+def main():

+ """Finetune a Segment Anything model.

+

+ This example uses image data and segmentations from the cell tracking challenge,

+ but can easily be adapted for other data (including data you have annoated with micro_sam beforehand).

+ """

+ # The model_type determines which base model is used to initialize the weights that are finetuned.

+ # We use vit_b here because it can be trained faster. Note that vit_h usually yields higher quality results.

+ model_type = "vit_b"

+

+ # The name of the checkpoint. The checkpoints will be stored in './checkpoints/'

+ checkpoint_name = "sam_hela"

+

+ run_training(checkpoint_name, model_type)

+ export_model(checkpoint_name, model_type)

+

+

+if __name__ == "__main__":

+ main()

diff --git a/examples/finetuning/use_finetuned_model.py b/examples/finetuning/use_finetuned_model.py

new file mode 100644

index 00000000..19600241

--- /dev/null

+++ b/examples/finetuning/use_finetuned_model.py

@@ -0,0 +1,33 @@

+import imageio.v3 as imageio

+

+import micro_sam.util as util

+from micro_sam.sam_annotator import annotator_2d

+

+

+def run_annotator_with_custom_model():

+ """Run the 2d anntator with a custom (finetuned) model.

+

+ Here, we use the model that is produced by `finetuned_hela.py` and apply it

+ for an image from the validation set.

+ """

+ # take the last frame, which is part of the val set, so the model was not directly trained on it

+ im = imageio.imread("./data/DIC-C2DH-HeLa.zip.unzip/DIC-C2DH-HeLa/01/t083.tif")

+

+ # set the checkpoint and the path for caching the embeddings

+ checkpoint = "./finetuned_hela_model.pth"

+ embedding_path = "./embeddings/embeddings-finetuned.zarr"

+

+ model_type = "vit_b" # We finetune a vit_b in the example script.

+ # Adapt this if you finetune a different model type, e.g. vit_h.

+

+ # Load the custom model.

+ predictor = util.get_sam_model(model_type=model_type, checkpoint_path=checkpoint)

+

+ # Run the 2d annotator with the custom model.

+ annotator_2d(

+ im, embedding_path=embedding_path, predictor=predictor, precompute_amg_state=True,

+ )

+

+

+if __name__ == "__main__":

+ run_annotator_with_custom_model()

diff --git a/examples/image_series_annotator.py b/examples/image_series_annotator.py

index 2a1b65e7..1632d793 100644

--- a/examples/image_series_annotator.py

+++ b/examples/image_series_annotator.py

@@ -16,7 +16,8 @@ def series_annotation(use_finetuned_model):

example_data = fetch_image_series_example_data("./data")

image_folder_annotator(

example_data, "./data/series-segmentation-result", embedding_path=embedding_path,

- pattern="*.tif", model_type=model_type

+ pattern="*.tif", model_type=model_type,

+ precompute_amg_state=True,

)

diff --git a/examples/use_as_library/instance_segmentation.py b/examples/use_as_library/instance_segmentation.py

index 89b95900..be9300d3 100644

--- a/examples/use_as_library/instance_segmentation.py

+++ b/examples/use_as_library/instance_segmentation.py

@@ -50,7 +50,8 @@ def cell_segmentation():

# Generate the instance segmentation. You can call this again for different values of 'pred_iou_thresh'

# without having to call initialize again.

- # NOTE: the main advantage of this method is that it's considerably faster than the original implementation.

+ # NOTE: the main advantage of this method is that it's faster than the original implementation,

+ # however the quality is not as high as the original instance segmentation quality yet.

instances_mws = amg_mws.generate(pred_iou_thresh=0.88)

instances_mws = instance_segmentation.mask_data_to_segmentation(

instances_mws, shape=image.shape, with_background=True

@@ -64,7 +65,7 @@ def cell_segmentation():

napari.run()

-def segmentation_with_tiling():

+def cell_segmentation_with_tiling():

"""Run the instance segmentation functionality from micro_sam for segmentation of

cells in a large image. You need to run examples/annotator_2d.py:wholeslide_annotator once before

running this script so that all required data is downloaded and pre-computed.

@@ -111,20 +112,21 @@ def segmentation_with_tiling():

# Generate the instance segmentation. You can call this again for different values of 'pred_iou_thresh'

# without having to call initialize again.

- # NOTE: the main advantage of this method is that it's considerably faster than the original implementation.

+ # NOTE: the main advantage of this method is that it's faster than the original implementation.

+ # however the quality is not as high as the original instance segmentation quality yet.

instances_mws = amg_mws.generate(pred_iou_thresh=0.88)

# Show the results.

v = napari.Viewer()

v.add_image(image)

- # v.add_labels(instances_amg)

+ v.add_labels(instances_amg)

v.add_labels(instances_mws)

napari.run()

def main():

cell_segmentation()

- # segmentation_with_tiling()

+ # cell_segmentation_with_tiling()

if __name__ == "__main__":

diff --git a/finetuning/.gitignore b/finetuning/.gitignore

new file mode 100644

index 00000000..60fd41c2

--- /dev/null

+++ b/finetuning/.gitignore

@@ -0,0 +1,4 @@

+checkpoints/

+logs/

+sam_embeddings/

+results/

diff --git a/finetuning/README.md b/finetuning/README.md

new file mode 100644

index 00000000..6164837d

--- /dev/null

+++ b/finetuning/README.md

@@ -0,0 +1,58 @@

+# Segment Anything Finetuning

+

+Code for finetuning segment anything data on microscopy data and evaluating the finetuned models.

+

+## Example: LiveCELL

+

+**Finetuning**

+

+Run the script `livecell_finetuning.py` for fine-tuning a model on LiveCELL.

+

+**Inference**

+

+The script `livecell_inference.py` can be used to run inference on the test set. It supports different arguments for inference with different configurations.

+For example run

+```

+python livecell_inference.py -c checkpoints/livecell_sam/best.pt -m vit_b -e experiment -i /scratch/projects/nim00007/data/LiveCELL --points --positive 1 --negative 0

+```

+for inference with 1 positive point prompt and no negative point prompt (the prompts are derived from ground-truth).

+

+The arguments `-c`, `-e` and `-i` specify where the checkpoint for the model is, where the predictions from the model and other experiment data will be saved, and where the input dataset (LiveCELL) is stored.

+

+To run the default set of experiments from our publication use the command

+```

+python livecell_inference.py -c checkpoints/livecell_sam/best.pt -m vit_b -e experiment -i /scratch/projects/nim00007/data/LiveCELL -d --prompt_folder /scratch/projects/nim00007/sam/experiments/prompts/livecell

+```

+

+Here `-d` automatically runs the evaluation for these settings:

+- `--points --positive 1 --negative 0` (using point prompts with a single positive point)

+- `--points --positive 2 --negative 4` (using point prompts with two positive points and four negative points)

+- `--points --positive 4 --negative 8` (using point prompts with four positive points and eight negative points)

+- `--box` (using box prompts)

+

+In addition `--prompt_folder` specifies a folder with precomputed prompts. Using pre-computed prompts significantly speeds up the experiments and enables running them in a reproducible manner. (Without it the prompts will be recalculated each time.)

+

+You can also evaluate the automatic instance segmentation functionality, by running

+```

+python livecell_inference.py -c checkpoints/livecell_sam/best.pt -m vit_b -e experiment -i /scratch/projects/nim00007/data/LiveCELL -a

+```

+

+This will first perform a grid-search for the best parameters on a subset of the validation set and then run inference on the test set. This can take up to a day.

+

+**Evaluation**

+

+The script `livecell_evaluation.py` can then be used to evaluate the results from the inference runs.

+E.g. run the script like below to evaluate the previous predictions.

+```

+python livecell_evaluation.py -i /scratch/projects/nim00007/data/LiveCELL -e experiment

+```

+This will create a folder `experiment/results` with csv tables with the results per cell type and averaged over all images.

+

+

+## Finetuning and evaluation code

+

+The subfolders contain the code for different finetuning and evaluation experiments for microscopy data:

+- `livecell`: TODO

+- `generalist`: TODO

+

+Note: we still need to clean up most of this code and will add it later.

diff --git a/finetuning/generalists/cellpose_baseline.py b/finetuning/generalists/cellpose_baseline.py

new file mode 100644

index 00000000..f1a294b0

--- /dev/null

+++ b/finetuning/generalists/cellpose_baseline.py

@@ -0,0 +1,124 @@

+import argparse

+import os

+from glob import glob

+from subprocess import run

+

+import imageio.v3 as imageio

+

+from tqdm import tqdm

+

+DATA_ROOT = "/scratch/projects/nim00007/sam/datasets"

+EXP_ROOT = "/scratch/projects/nim00007/sam/experiments/cellpose"

+

+DATASETS = (

+ "covid-if",

+ "deepbacs",

+ "hpa",

+ "livecell",

+ "lizard",

+ "mouse-embryo",

+ "plantseg-ovules",

+ "plantseg-root",

+ "tissuenet",

+)

+

+

+def load_cellpose_model():

+ from cellpose import models

+

+ device, gpu = models.assign_device(True, True)

+ model = models.Cellpose(gpu=gpu, model_type="cyto", device=device)

+ return model

+

+

+def run_cellpose_segmentation(datasets, job_id):

+ dataset = datasets[job_id]

+ experiment_folder = os.path.join(EXP_ROOT, dataset)

+

+ prediction_folder = os.path.join(experiment_folder, "predictions")

+ os.makedirs(prediction_folder, exist_ok=True)

+

+ image_paths = sorted(glob(os.path.join(DATA_ROOT, dataset, "test", "image*.tif")))

+ model = load_cellpose_model()

+

+ for path in tqdm(image_paths, desc=f"Segmenting {dataset} with cellpose"):

+ fname = os.path.basename(path)

+ out_path = os.path.join(prediction_folder, fname)

+ if os.path.exists(out_path):

+ continue

+ image = imageio.imread(path)

+ if image.ndim == 3:

+ assert image.shape[-1] == 3

+ image = image.mean(axis=-1)

+ assert image.ndim == 2

+ seg = model.eval(image, diameter=None, flow_threshold=None, channels=[0, 0])[0]

+ assert seg.shape == image.shape

+ imageio.imwrite(out_path, seg, compression=5)

+

+

+def submit_array_job(datasets):

+ n_datasets = len(datasets)

+ cmd = ["sbatch", "-a", f"0-{n_datasets-1}", "cellpose_baseline.sbatch"]

+ run(cmd)

+

+

+def evaluate_dataset(dataset):

+ from micro_sam.evaluation.evaluation import run_evaluation

+

+ gt_paths = sorted(glob(os.path.join(DATA_ROOT, dataset, "test", "label*.tif")))

+ experiment_folder = os.path.join(EXP_ROOT, dataset)

+ pred_paths = sorted(glob(os.path.join(experiment_folder, "predictions", "*.tif")))

+ assert len(gt_paths) == len(pred_paths), f"{len(gt_paths)}, {len(pred_paths)}"

+ result_path = os.path.join(experiment_folder, "cellpose.csv")

+ run_evaluation(gt_paths, pred_paths, result_path)

+

+

+def evaluate_segmentations(datasets):

+ for dataset in datasets:

+ # we skip livecell, which has already been processed by cellpose

+ if dataset == "livecell":

+ continue

+ evaluate_dataset(dataset)

+

+

+def check_results(datasets):

+ for ds in datasets:

+ # we skip livecell, which has already been processed by cellpose

+ if ds == "livecell":

+ continue

+ result_path = os.path.join(EXP_ROOT, ds, "cellpose.csv")

+ if not os.path.exists(result_path):

+ print("Cellpose results missing for", ds)

+ print("All checks passed")

+

+

+def main():

+ parser = argparse.ArgumentParser()

+ parser.add_argument("--segment", "-s", action="store_true")

+ parser.add_argument("--evaluate", "-e", action="store_true")

+ parser.add_argument("--check", "-c", action="store_true")

+ parser.add_argument("--datasets", nargs="+")

+ args = parser.parse_args()

+

+ job_id = os.environ.get("SLURM_ARRAY_TASK_ID", None)

+

+ if args.datasets is None:

+ datasets = DATASETS

+ else:

+ datasets = args.datasets

+ assert all(ds in DATASETS for ds in datasets)

+

+ if job_id is not None:

+ run_cellpose_segmentation(datasets, int(job_id))

+ elif args.segment:

+ submit_array_job(datasets)

+ elif args.evaluate:

+ evaluate_segmentations(datasets)

+ elif args.check:

+ check_results(datasets)

+ else:

+ raise ValueError("Doing nothing")

+

+

+if __name__ == "__main__":

+ main()

diff --git a/finetuning/generalists/cellpose_baseline.sbatch b/finetuning/generalists/cellpose_baseline.sbatch

new file mode 100755

index 00000000..c839abab

--- /dev/null

+++ b/finetuning/generalists/cellpose_baseline.sbatch

@@ -0,0 +1,10 @@

+#! /bin/bash

+#SBATCH -c 4

+#SBATCH --mem 48G

+#SBATCH -t 300

+#SBATCH -p grete:shared

+#SBATCH -G A100:1

+#SBATCH -A nim00007

+

+source activate cellpose

+python cellpose_baseline.py $@

diff --git a/finetuning/generalists/compile_results.py b/finetuning/generalists/compile_results.py

new file mode 100644

index 00000000..06165a75

--- /dev/null

+++ b/finetuning/generalists/compile_results.py

@@ -0,0 +1,104 @@

+import os

+from glob import glob

+

+import pandas as pd

+

+from evaluate_generalist import EXPERIMENT_ROOT

+from util import EM_DATASETS, LM_DATASETS

+

+

+def get_results(model, ds):

+ res_folder = os.path.join(EXPERIMENT_ROOT, model, ds, "results")

+ res_paths = sorted(glob(os.path.join(res_folder, "box", "*.csv"))) +\

+ sorted(glob(os.path.join(res_folder, "points", "*.csv")))

+

+ amg_res = os.path.join(res_folder, "amg.csv")

+ if os.path.exists(amg_res):

+ res_paths.append(amg_res)

+

+ results = []

+ for path in res_paths:

+ prompt_res = pd.read_csv(path)

+ prompt_name = os.path.splitext(os.path.relpath(path, res_folder))[0]

+ prompt_res.insert(0, "prompt", [prompt_name])

+ results.append(prompt_res)

+ results = pd.concat(results)

+ results.insert(0, "dataset", results.shape[0] * [ds])

+

+ return results

+

+

+def compile_results(models, datasets, out_path, load_results=False):

+ results = []

+

+ for model in models:

+ model_results = []

+

+ for ds in datasets:

+ ds_results = get_results(model, ds)

+ model_results.append(ds_results)

+

+ model_results = pd.concat(model_results)

+ model_results.insert(0, "model", [model] * model_results.shape[0])

+ results.append(model_results)

+

+ results = pd.concat(results)

+ if load_results:

+ assert os.path.exists(out_path)

+ all_results = pd.read_csv(out_path)

+ results = pd.concat([all_results, results])

+

+ results.to_csv(out_path, index=False)

+

+

+def compile_em():

+ compile_results(

+ ["vit_h", "vit_h_em", "vit_b", "vit_b_em"],

+ EM_DATASETS,

+ os.path.join(EXPERIMENT_ROOT, "evaluation-em.csv")

+ )

+

+

+def add_cellpose_results(datasets, out_path):

+ cp_root = "/scratch/projects/nim00007/sam/experiments/cellpose"

+

+ results = []

+ for dataset in datasets:

+ if dataset == "livecell":

+ continue

+ res_path = os.path.join(cp_root, dataset, "cellpose.csv")

+ ds_res = pd.read_csv(res_path)

+ ds_res.insert(0, "prompt", ["cellpose"] * ds_res.shape[0])

+ ds_res.insert(0, "dataset", [dataset] * ds_res.shape[0])

+ results.append(ds_res)

+

+ results = pd.concat(results)

+ results.insert(0, "model", ["cellpose"] * results.shape[0])

+

+ all_results = pd.read_csv(out_path)

+ results = pd.concat([all_results, results])

+ results.to_csv(out_path, index=False)

+

+

+def compile_lm():

+ res_path = os.path.join(EXPERIMENT_ROOT, "evaluation-lm.csv")

+ compile_results(

+ ["vit_h", "vit_h_lm", "vit_b", "vit_b_lm"], LM_DATASETS, res_path

+ )

+

+ # add the deepbacs and tissuenet specialist results

+ assert os.path.exists(res_path)

+ compile_results(["vit_h_tissuenet", "vit_b_tissuenet"], ["tissuenet"], res_path, True)

+ compile_results(["vit_h_deepbacs", "vit_b_deepbacs"], ["deepbacs"], res_path, True)

+

+ # add the cellpose results

+ add_cellpose_results(LM_DATASETS, res_path)

+

+

+def main():

+ # compile_em()

+ compile_lm()

+

+

+if __name__ == "__main__":

+ main()

diff --git a/finetuning/generalists/create_tissuenet_data.py b/finetuning/generalists/create_tissuenet_data.py

new file mode 100644

index 00000000..18114973

--- /dev/null

+++ b/finetuning/generalists/create_tissuenet_data.py

@@ -0,0 +1,63 @@

+

+import os

+from tqdm import tqdm

+import imageio.v2 as imageio

+import numpy as np

+

+from torch_em.data import MinInstanceSampler

+from torch_em.transform.label import label_consecutive

+from torch_em.data.datasets import get_tissuenet_loader

+from torch_em.transform.raw import standardize, normalize_percentile

+

+

+def rgb_to_gray_transform(raw):

+ raw = normalize_percentile(raw, axis=(1, 2))

+ raw = np.mean(raw, axis=0)

+ raw = standardize(raw)

+ return raw

+

+

+def get_tissuenet_loaders(input_path):

+ sampler = MinInstanceSampler()

+ label_transform = label_consecutive

+ raw_transform = rgb_to_gray_transform

+ val_loader = get_tissuenet_loader(path=input_path, split="val", raw_channel="rgb", label_channel="cell",

+ batch_size=1, patch_shape=(256, 256), num_workers=0,

+ sampler=sampler, label_transform=label_transform, raw_transform=raw_transform)

+ test_loader = get_tissuenet_loader(path=input_path, split="test", raw_channel="rgb", label_channel="cell",

+ batch_size=1, patch_shape=(256, 256), num_workers=0,

+ sampler=sampler, label_transform=label_transform, raw_transform=raw_transform)

+ return val_loader, test_loader

+

+

+def extract_images(loader, out_folder):

+ os.makedirs(out_folder, exist_ok=True)

+ for i, (x, y) in tqdm(enumerate(loader), total=len(loader)):

+ img_path = os.path.join(out_folder, "image_{:04d}.tif".format(i))

+ gt_path = os.path.join(out_folder, "label_{:04d}.tif".format(i))

+