The load balancer also allows instantiating multiple OTP servers on large deployments. (You can start, add, or remove more than one OTP server to the load balancer.)
## Load Balancer Properties
-(Applies to Data Tools versions prior to October 2019.)
+(Applies to TRANSIT-data-tools versions prior to October 2019.)
| Property | Description |
|----------|-------------|
diff --git a/docs/user/deploying-feeds.md b/docs/user/deploying-feeds.md
index 17cb2dba3..4b51976a5 100644
--- a/docs/user/deploying-feeds.md
+++ b/docs/user/deploying-feeds.md
@@ -4,7 +4,7 @@ Assumptions:
* [X] You have [loaded a GTFS feed into a project](./managing-projects-feeds.md).
* [X] You have a deployment server available [(example: AWS)](./add-deployment-server.md).
-* [X] [An osm-lib server has been set up](https://github.com/conveyal/osm-lib) for Data Tools to fetch Open Streets Map (OSM) data.
+* [X] [An osm-lib server has been set up](https://github.com/conveyal/osm-lib) for TRANSIT-data-tools to fetch OpenStreetMap (OSM) data.
## Executing a deployment
@@ -13,14 +13,14 @@ To deploy or update GTFS feeds to OTP:
1. Open a project.
2. Click on the `Deployments` tab.
3. (Optional) To create a new deployment, click `+ New Deployment`, enter a name, then press or click Enter.
-4. Click the name of the deployment to execute. A summary of feeds and existing deployments (if available) are shown for your review.
+4. Click on the name of the deployment to execute. A summary of feeds and existing deployments (if available) is shown for your review.
5. Remove the feeds you don't need from the deployment. For the remaining feeds, select the correct feed version.
6. In the `OTP Configuration` pane:
   * Select the latest OTP version (the first one in the list).
   * Check `Build graph only` to only generate and output a graph file on EC2 to the S3 server (no OTP server will be running after the graph is generated).
   * The R5 option is not used.
7. If you select `Custom` under `Build configuration` or `Router configuration`, enter the desired configuration settings.
-8. Click the `Deploy` dropdown at the top of the main pane, then pick the server on which to perform the deployment. Existing deployments on that server will be discarded.
+8. Click on the `Deploy` dropdown at the top of the main pane, then pick the server on which to perform the deployment. Existing deployments on that server will be discarded.
## Updating the Custom Places Index
@@ -32,7 +32,7 @@ The pane also has an option to upload Custom POI CSV files. These files contain
## Watching deployments take place
-After click Deploy, you can watch the deployment progress from the right-hand panel:
+After you click `Deploy`, you can watch the deployment progress from the right-hand panel:
1. The data bundle is uploaded to S3.
2. One EC2 server is commissioned.
diff --git a/docs/user/editor/calendars.md b/docs/user/editor/calendars.md
deleted file mode 100644
index cf9fcd401..000000000
--- a/docs/user/editor/calendars.md
+++ /dev/null
@@ -1,42 +0,0 @@
-# Calendars
-
-## Editing calendars
-
-To begin editing calendars, click the 📅 button on the lefthand navigation bar.
-
-
-
-Choose a calendar from the list to begin editing. To create a new calendar, click `+ New calendar`. **Note:** as with all newly created items (except patterns), the new calendar will not be saved until the save icon (💾) is clicked.
-
-## Calendar details
-
-- **Service ID** - unique ID for the calendar
-- **Description** - optional description for calendar (defaults to initial days of week specified)
-- **Days of service** - days of week on which the service operates
-- **Start/End dates** - the first and last day of that service assigned to the calendar should run
-
-## Editing schedule exceptions
-
-Schedule exceptions allow users to define days where special services should replace the regularly operating calendars. To create a schedule exception, click the `Exceptions` tab and then click `+ New exception` (replaces the "New calendar" button).
-
-
-
-## Exception details
-
-- **Name** - name of schedule exception
-- **Schedule to run** - the chosen schedule that should replace the regularly operating calendars (see below Exception types)
-- **Dates** - one or more dates to which the schedule exception applies
-
-## Exception types
-
-There are a number of built-in exception types (or available schedules to run) that allow for a high degree of flexibility when assigning special services.
-
-- **[Su, M, Tu, W, Th, F, Sa]** - replaces all service for the specified dates with the calendar(s) that operate on the chosen day of the week
-- **No service** - indicates that no service of any kind should operated on the specified dates
-- **Custom** - replace all service for the specified dates with trips operating on the one or more calendars specified with this option. E.g., run only `holiday` and `holiday-plus` calendar on Thanksgiving Day.
-- **Swap** - similar to the **Custom** option, however this option allows for removing one or more specific calendars for the specified dates and/or adding one or more specific calendars. This option is especially useful if only certain routes have altered service on specific dates. For example, a user could remove the `weekday-route-1` calendar and add the `special-route-1` calendar.
-
-## Editing schedules
-Click `Edit schedules` to begin creating or editing trips/frequencies for a trip pattern. You will be redirected to the Schedule Editor. For more information on creating schedules for a pattern, see [Trips](schedules).
-
-**Note**: At least one route, pattern and calendar must have been created to edit schedules.
diff --git a/docs/user/editor/fares.md b/docs/user/editor/fares.md
index 5ac92a206..89b195cea 100644
--- a/docs/user/editor/fares.md
+++ b/docs/user/editor/fares.md
@@ -1,33 +1,52 @@
# Fares
-## Editing fares
+## Fares Overview
-To begin editing fares, click the fare ticket button on the lefthand navigation bar.
+### Fare attributes
-
+Fare attributes describe the basic information about a fare, including the price, currency type, and transfer information. See the [GTFS specification fare attribute reference](https://gtfs.org/schedule/reference/#fare_attributestxt) for more information.
+### Fare rules
-Choose a fare from the list to begin editing. To create a new fare, click `+ New fare`. **Note:** as with all newly created items (except patterns), the new fare will not be saved until the save icon (💾) is clicked.
+Fare rules specify which fare applies to a rider's trip, based on the route taken and the fare zones the trip starts in, ends in, or passes through. In other words, they govern how fare attributes are applied. See the [GTFS specification fare rule reference](https://gtfs.org/schedule/reference/#fare_rulestxt) for more information.
-## Fare attributes
-Fare attributes describe the basic information about a fare. Full details on fare attributes can be found at the [GTFS specification reference](https://developers.google.com/transit/gtfs/reference/fare_attributes-file).
+## Editing/Creating Fares
-## Fare rules
+To begin editing fares, click the fare ticket button on the lefthand navigation bar (outlined in red).
-To edit fare rules, you must first create and save a fare with attributes. After choosing a fare, click the `Fare rules` tab and define one or more rules for this fare using the following types:
+Choose a fare from the list to begin editing. To create a new fare, click `+ New fare`, or, if this is the first fare being created for this feed, click `+ Create first fare` (highlighted in yellow).
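As a rough illustration of what fare attributes become in an exported feed, the sketch below builds one row of GTFS `fare_attributes.txt`. The column names and allowed codes (`payment_method` 0 or 1, `transfers` 0, 1, 2, or empty for unlimited) follow the GTFS specification; the helper names `fare_attributes_row` and `to_csv` and the sample values are hypothetical, and the Editor's own export may format values differently.

```python
import csv
import io

# Column order taken from the GTFS fare_attributes.txt reference.
FIELDS = ["fare_id", "price", "currency_type", "payment_method",
          "transfers", "agency_id", "transfer_duration"]


def fare_attributes_row(fare_id, price, currency_type, payment_method,
                        transfers=None, agency_id="", transfer_duration=None):
    """Build one fare_attributes.txt row (hypothetical helper for illustration)."""
    if payment_method not in (0, 1):  # 0 = paid on board, 1 = paid before boarding
        raise ValueError("payment_method must be 0 or 1")
    if transfers not in (None, 0, 1, 2):  # None (empty) = unlimited transfers
        raise ValueError("transfers must be 0, 1, 2, or empty")
    if len(currency_type) != 3:  # ISO 4217 code such as "USD" or "CAD"
        raise ValueError("currency_type must be a 3-letter ISO 4217 code")
    return {
        "fare_id": fare_id,
        # Two decimal places assumed; adjust for currencies such as JPY.
        "price": f"{price:.2f}",
        "currency_type": currency_type.upper(),
        "payment_method": str(payment_method),
        "transfers": "" if transfers is None else str(transfers),
        "agency_id": agency_id,
        "transfer_duration": "" if transfer_duration is None else str(transfer_duration),
    }


def to_csv(rows):
    """Serialize rows with a header line, as they would appear in fare_attributes.txt."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

For example, `fare_attributes_row("adult", 2.5, "usd", 0, transfers=1, transfer_duration=5400)` describes a fare paid on board that allows one transfer within 90 minutes.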
+
+
+### Edit fare attributes
+In the `Attributes` tab, enter the required and optional information about the fare, such as `fare_id`, `price`, and `currency_type`.
+
+**Note: Be sure to click the save button (💾) after making changes to fare attributes or fare rules. Clicking save after adding attributes will allow you to edit fare rules.**
+
+### Edit fare rules
+
+To define zone-based fare rules (**From/to zone** or **Contains zone**), you must first create fare zones, which are explained in the next section.
+
+To edit fare rules, you must first create and save a fare with attributes. After choosing a fare, click the `Rules` tab and define one or more rules for this fare using the following types:
1. **Route** - applies to any itinerary that includes the route
2. **From/to zone** - applies to any itinerary that travels from the origin zone to the destination zone
3. **Contains zone** - applies to any itinerary that passes through *each* `contains` zone
-**Note:** fare rules can be tricky, see the [GTFS specification reference](https://developers.google.com/transit/gtfs/reference/fare_rules-file) for more information on how fare rules apply.
-
-
+
-## Creating fare zones
+### Creating fare zones
-To create a fare zone for use in fare rules, you must first select a stop that you would like to include in the zone. Click in the `zone_id` dropdown and begin typing the new `zone_id`. Click `Create new zone: [zone_id]` and then save the stop. Repeat for as many zones as needed.
+To create a fare zone, first select a stop that you would like to include in the zone by clicking the location pin icon on the sidebar and selecting one of the stop names. Next, click in the `zone_id` dropdown and begin typing the new `zone_id`. Click `Create new zone: [zone_id]` and then save the stop. Repeat for as many zones as needed.
-
+
-Once created and assigned to one or more stop, fare zones can be used when defining fare rules for **From/to zone** or **Contains zone**.
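The three rule types are easier to reason about with a concrete model. The following is a simplified sketch, not the Editor's or any trip planner's actual matching logic: it treats each `fare_rules.txt` row as a set of conditions that must all hold (route, origin zone, destination zone), and for **Contains zone** rules it requires the trip to enter *every* `contains_id` zone listed for the fare. The `Itinerary` type, the function names, and the sample zones are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class FareRule:
    """One row of GTFS fare_rules.txt; empty columns are None."""
    fare_id: str
    route_id: Optional[str] = None
    origin_id: Optional[str] = None
    destination_id: Optional[str] = None
    contains_id: Optional[str] = None


@dataclass(frozen=True)
class Itinerary:
    """Hypothetical summary of a rider's trip, used only in this sketch."""
    route_ids: FrozenSet[str]
    origin_zone: str
    destination_zone: str
    zones_entered: FrozenSet[str]  # every zone the trip enters


def row_matches(rule: FareRule, itin: Itinerary) -> bool:
    """All non-empty route/origin/destination columns in a row must match."""
    if rule.route_id is not None and rule.route_id not in itin.route_ids:
        return False
    if rule.origin_id is not None and rule.origin_id != itin.origin_zone:
        return False
    if rule.destination_id is not None and rule.destination_id != itin.destination_zone:
        return False
    return True


def fare_applies(fare_id: str, rules: List[FareRule], itin: Itinerary) -> bool:
    """Simplified check of whether fare_id applies to an itinerary."""
    own = [r for r in rules if r.fare_id == fare_id]
    plain = [r for r in own if r.contains_id is None]
    contains = [r for r in own if r.contains_id is not None]
    # Route and from/to rules: one matching row is enough.
    if any(row_matches(r, itin) for r in plain):
        return True
    # Contains rules: the trip must enter *each* listed zone.
    if contains and all(row_matches(r, itin) for r in contains):
        return {r.contains_id for r in contains} <= itin.zones_entered
    return False
```

With contains rules for zones 5, 6, and 7 on one route, a trip entering all three zones matches, while a trip entering only zones 5 and 6 does not.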
+### Tutorial Video: Editing/Creating Fares
+
diff --git a/docs/user/editor/introduction.md b/docs/user/editor/getting-started.md
similarity index 94%
rename from docs/user/editor/introduction.md
rename to docs/user/editor/getting-started.md
index a15c8b3bf..0721878aa 100644
--- a/docs/user/editor/introduction.md
+++ b/docs/user/editor/getting-started.md
@@ -1,4 +1,4 @@
-# Introduction
+# Getting Started
## Getting started
@@ -22,6 +22,8 @@ When editing routes, stop, calendars, and any other entities in the Editor, you
The primary tables in GTFS (feed info, routes, stops, calendars, and fares) all correspond to items in the lefthand navigation bar, which allow you to create, edit, or delete records for each of these tables.
+
+
Some tables are nested underneath these primary tables. Here's how to find them and what they're called in the Editor:
- **[Routes](routes)**
@@ -36,7 +38,7 @@ Some tables are nested underneath these primary tables. Here's how to find them
In the bottom, righthand corner of the Editor, you'll find the quick access toolbar that has a few convenient features you might need while editing.
-
+
From left to right, these functions are:
diff --git a/docs/user/editor/patterns.md b/docs/user/editor/patterns.md
index e68b63d7b..401d595ca 100644
--- a/docs/user/editor/patterns.md
+++ b/docs/user/editor/patterns.md
@@ -1,33 +1,19 @@
# Trip Patterns
-## Editing trip patterns
+## Patterns
+A trip pattern is the ordered sequence of stops, along with the shape connecting them, that a group of trips on a route follows. Patterns can be thought of as templates for a route's trips: every trip assigned to a pattern serves the same stops in the same order, while the days on which those trips run are defined separately by calendars.
-To begin editing trip patterns (or patterns), first select or create a route. Click the `Trip patterns` tab.
+## Editing/Creating Trip Patterns
-
+To begin editing trip patterns, first click the `Routes` tab (outlined in red). Then, select or create a route, click the `Trip patterns` tab, and start editing any relevant fields, including:
-Choose a pattern from the list to begin editing. To create a new pattern, click `+ New pattern`.
-
-## Pattern toolbar
-
-### Zoom to pattern extents
-Clicking the 🔍 button (in the top toolbar) with a pattern selected adjusts the map view to show the entire pattern you are editing.
-
-### Duplicate pattern
-Creates a duplicate of the active pattern (duplicated pattern name becomes `[Pattern name] copy`) if you need to create a similar, but different trip pattern.
-
-### Reverse pattern
-To reverse the sequence of stops for a pattern, click the yellow opposing arrows button. **Note**: this is a destructive action and should usually only be used with Duplicate Pattern.
-
-### Delete pattern
-
-Deletes the active pattern. **Note**: deleted patterns cannot be recovered.
-
-## Pattern details
-
-- **Name** – the name of the trip pattern within the route, for example a service that runs the full length of the route or a service that only runs part of the length of the route.
+- **Name:** By default, the pattern is named with a generated description such as "27 stops from Willowridge Rd at Richgrove Dr to Kipling Station (13 trips)". You can change it to a more meaningful label if desired.
+- **Type:** Specifies whether the pattern uses timetables (individual entries for each trip made over the course of a day) or frequencies (headways that vary by time interval over the course of a day). For more information on the differences between timetables and frequencies, consult [Schedules](schedules.md).
+- **Direction:** Specifies whether the pattern is inbound or outbound. This corresponds to the `direction_id` field in GTFS. All trips associated with this pattern will be assigned the direction provided here.
+- **Editing schedules:**
+Click `Edit schedules` to begin creating or editing trips/frequencies for a trip pattern. You will be redirected to the Schedule Editor. For more information on creating schedules for a pattern, see [Trips](schedules).
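For patterns that use frequencies, each entry defines a headway over a time window. Following the GTFS `frequencies.txt` rule for exact schedules (`exact_times=1`), trips start at `start_time` and then every `headway_secs` for as long as the start time is before `end_time`. The sketch below expands one such entry into trip start times; the function names are hypothetical, and for `exact_times=0` GTFS consumers treat the headway as approximate rather than as exact departures.

```python
def parse_gtfs_time(value):
    """Convert GTFS "HH:MM:SS" (hours may exceed 24) to seconds after midnight."""
    h, m, s = (int(part) for part in value.split(":"))
    return h * 3600 + m * 60 + s


def format_gtfs_time(seconds):
    """Format seconds after midnight as HH:MM:SS without wrapping past 24 hours."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def expand_frequency(start_time, end_time, headway_secs):
    """List trip start times for one frequencies.txt entry with exact_times=1."""
    if headway_secs <= 0:
        raise ValueError("headway_secs must be positive")
    start, end = parse_gtfs_time(start_time), parse_gtfs_time(end_time)
    times = []
    t = start
    while t < end:  # a trip starting exactly at end_time is excluded
        times.append(format_gtfs_time(t))
        t += headway_secs
    return times
```

For example, a 10-minute headway from `06:00:00` to `06:30:00` yields trips at 06:00, 06:10, and 06:20.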
- **Use timetables/frequencies** - whether the pattern uses timetables, i.e., individual entries for each trip made over the course of a day, or frequencies, which define varying frequencies (or headways) according to time intervals over the course of a day.
- **Direction** - corresponds to the `direction_id` field in GTFS. All trips associated with this pattern will be assigned the direction provided here.
@@ -36,6 +22,24 @@ Click `Edit schedules` to begin creating or editing trips/frequencies for a trip
For more information on creating schedules for a pattern, [see the Trips section](schedules).
+To add a pattern, click the `+ New pattern` button (highlighted in yellow).
+
+
+
+### Pattern editing tools
+The pattern toolbar contains several buttons that help with the pattern editing process:
+

+

+- **Zoom to pattern extents:**

+Clicking the search (🔍) button (in the top toolbar) with a pattern selected adjusts the map view to show the entire pattern you are editing.

+- **Duplicate pattern:**

+Creates a copy of the active pattern, which is useful when you need a similar but different trip pattern. The copy is named `[Pattern name] copy`.

+- **Reverse pattern:**

+To reverse the sequence of stops for a pattern, click the button with opposing arrows. Note: this is a destructive action and should usually only be used after duplicating a pattern.

+- **Delete pattern:** Deletes the active pattern. Note: deleted patterns cannot be recovered.

+

+

## Stop sequence

### Adding stops

To begin constructing a pattern, click `+ Add stop`. You can then search for stops to add by name in the dropdown that appears or zoom into the map and add stops by clicking on them and clicking `+ Add stop`.

@@ -45,30 +49,25 @@ To begin constructing a pattern, click `+ Add stop`. You can then search for sto

As you add stops, the pattern shape will update according to the street network. Keep selecting and adding stops in the sequence the route passes through them until the route is complete.

### Inserting stops mid-sequence

-If you realize you have missed a stop from the sequence it can be added in after the fact. When adding via the dropdown, stops can only be added to the end of the sequence; however, if you're adding via the map, you can choose where exactly to insert the stop.

+First, click the `From Stops` button so that the pattern shape is regenerated from the stops as you edit them. If you missed a stop in the sequence, you can add it afterward. When adding via the dropdown, stops can only be added to the end of the sequence; however, if you're adding via the map, you can choose exactly where to insert the stop.

-

+

### Pattern stop toolbar

The pattern stop toolbar can be found by clicking the pattern stop in the map view.

-

-

-- **Save** - saves any edits to the travel and dwell times.

-- **Edit** - allows user to begin editing the stop's details (clicking the browser **Back** button will bring you back to editing the pattern). **Note**: be sure you have saved any pattern information before jumping to edit a stop.

-- **Remove** - removes the stop from the pattern.

-- **Add** - add the stop to the sequence at an additional location. This is useful for patterns that might visit the same stop more than once (e.g., loop routes).

+- **Save:** Saves any edits to the travel and dwell times.

+- **Edit:** Opens the stop's details for editing (clicking the browser **Back** button brings you back to editing the pattern). **Note**: be sure you have saved any pattern information before jumping to edit a stop.

+- **Remove:** Removes the stop from the pattern.

+- **Add:** Adds the stop to the sequence at an additional location. This is useful for patterns that visit the same stop more than once (e.g., loop routes).

### Changing the order of stops

-If a stop is in the wrong order, you can click and drag the stop from the list view into the correct sequence. The sequence displayed on the stop being dragged will only update after being dropped into place.

+First, click the `From Stops` button so that the pattern shape is regenerated from the stops as you edit them. If a stop is in the wrong order, drag and drop it from the list view into the correct position. The sequence number displayed on the dragged stop only updates after it is dropped into place.

-

-

-**Note**: if inserting stops mid-sequence or changing the order of stops, the pattern shape will not update automatically. You must edit the pattern manually or click the `Create` button to regenerate the pattern shape from the stops.

+

### Removing stops

-Stops can be removed from the pattern by clicking the red 🗑 button found in the expanded stop card or by clicking the pattern stop on the map view.

-

+Stops can be removed from the pattern by clicking the red trash (🗑) icon found in the expanded stop card or by clicking the pattern stop on the map view.

## Stop timings

### Pattern stop colors

@@ -86,26 +85,41 @@ Once you have adjusted the stop timings, another warning will appear prompting y

### Calculate timings

-The average speed for the route can be used to calculate all the time gaps between stops in one go. Once the stops have been added and the pattern alignment is drawn simply click **Calc. Times** and the time between stops will automatically fill in.

+The route's average speed can be used to calculate the travel times between all stops at once. The following parameters can be specified before calculating times:

- **Average speed** - average speed (in km/hr) to use when calculating timings

- **Dwell time** – allows you to assign the same dwell time to all stops within a trip


-### Manual timings

-Individual timings for each pattern stop can also be assigned either through expanded the stop card in the list of stops or via clicking the pattern stop on the map view.

+Once the stops have been added and the pattern alignment is drawn, click `Calc. times` to fill in the travel times between stops automatically.
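The arithmetic behind **Calc. times** can be sketched as follows, assuming each travel time is the length of the shape segment between consecutive stops divided by the average speed, and that the same dwell time is applied to every stop. This is an illustration only: `calc_times` is a hypothetical name, segment lengths must be supplied by the caller, and the Editor may measure distances or round results differently.

```python
def calc_times(segment_lengths_m, avg_speed_kmh, dwell_s):
    """Return (travel_time_s, dwell_time_s) for each stop on a pattern.

    segment_lengths_m[i] is the distance along the shape from stop i to
    stop i + 1, so a pattern with n stops has n - 1 segments.
    """
    if avg_speed_kmh <= 0:
        raise ValueError("average speed must be positive")
    speed_m_per_s = avg_speed_kmh * 1000 / 3600  # km/h -> m/s
    timings = [(0, dwell_s)]  # the first stop has no travel time
    for length in segment_lengths_m:
        timings.append((round(length / speed_m_per_s), dwell_s))
    return timings
```

For example, at an average speed of 18 km/h (5 m/s), a 500 m segment yields a travel time of 100 seconds.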

-- Travel time – the time it took to get from the previous station (should be 00:00 on the first stop of the sequence)

-- Dwell time – the time the vehicle rests at the stop

+Alternatively, click the `Normalize stop times` button above the list of stops to make all arrival and departure times on the pattern match the default travel time for each stop.

+### Manual timings

+Specific timings for each pattern stop can be set by either clicking on the stop on the map view or expanding the stop card in the list view. The two types of times that can be edited are:

+

+- **Travel time:** the time it takes to travel from the previous stop (should be 00:00 for the first stop in the sequence)

+- **Dwell time:** the time the vehicle waits at the stop
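To see how per-stop travel and dwell times combine into a trip's schedule, the sketch below accumulates them into arrival and departure times for a trip starting at a given time. The output is formatted like GTFS `stop_times.txt` values (`HH:MM:SS`, with hours allowed past 24). The function names are hypothetical, and the Editor's own computation may differ in detail.

```python
def format_gtfs_time(seconds):
    """Format seconds after midnight as HH:MM:SS (hours may exceed 24)."""
    h, rem = divmod(seconds, 3600)
    m, s = divmod(rem, 60)
    return f"{h:02d}:{m:02d}:{s:02d}"


def trip_stop_times(start_s, timings):
    """Turn (travel_s, dwell_s) pairs into (arrival, departure) strings.

    start_s is the trip's start time in seconds after midnight; the first
    pair's travel time is expected to be 0.
    """
    rows, clock = [], start_s
    for travel_s, dwell_s in timings:
        arrival = clock + travel_s
        departure = arrival + dwell_s
        rows.append((format_gtfs_time(arrival), format_gtfs_time(departure)))
        clock = departure  # the next leg starts when the vehicle departs
    return rows
```

Because each leg starts at the previous stop's departure, changing one stop's dwell time shifts every later arrival by the same amount.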

+

+### Tutorial Video: Editing/Creating Patterns

+The following video demonstrates, step by step, how to create patterns as outlined above.

+

+

+
-## Creating pattern alignments
-As mentioned above, pattern shapes will begin to draw automatically as stops are added in sequence. However, if you need to make adjustments to the auto-generated shape, clicking `Edit` will allow you to begin editing the shape segments between stops.
+## Editing/Creating Pattern Geometry
+As mentioned above, pattern shapes will begin to draw automatically as stops are added in sequence. However, if you need to make adjustments to the auto-generated shape, clicking `Edit pattern geometry` will allow you to begin editing the shape segments between stops.
### Basic editing
-Once editing a pattern shape, there are a few behaviors you should be aware of:
+While editing a pattern shape, there are a few behaviors you should be aware of:
- Dragging a handle (✕) will create a new alignment between stops or surrounding handles
- Clicking the pattern will add additional handles to the segment
@@ -113,23 +127,25 @@ Once editing a pattern shape, there are a few behaviors you should be aware of:
### Shape toolbar
#### Before editing
-- **Edit** - begin editing the pattern shape
-- **Delete** - delete the pattern shape
-- **Create** - creates alignment that follows streets from pattern stops
+- **Edit:** Begin editing the pattern shape
+- **Delete:** Delete the pattern shape
+- **Create:** Create an alignment that follows streets between pattern stops
#### While editing
-- **Save** - Save changes to pattern shape and leave editing mode.
-- **Undo** - Undo last change.
-- **Cancel** - Cancel edits and leave editing mode.
+- **Save:** Save changes to the pattern shape and leave editing mode
+- **Undo:** Undo the last change
+- **Cancel:** Cancel edits and leave editing mode
### Edit settings
- **Snap mode selector** - Use the dropdown selector to choose how pattern segments between handles and stops are aligned. The available snapping modes are:
-  - **Snap to streets** - snaps segments between handles and stops to streets. Can toggle the 'Avoid highways in routing' checkbox as needed.
-  - **Snap to rail** - snaps segments between handles and stops to rail.
-  - **None** - segments will form straight lines between handles and stops.
-- **Snap to stops** - keeps shape segments snapped to stops. If unchecked, stop handles will become draggable. In most cases, this setting should remain checked.
-- **Show stops** - toggles whether stops are visible because sometimes stop markers can get in the way when trying to draw shapes.
+  - **Snap to streets** - snaps segments between handles and stops to streets. You can toggle the 'Avoid highways in routing' checkbox as needed.
+  - **Snap to rail** - snaps segments between handles and stops to rail.
+  - **None** - segments will form straight lines between handles and stops.
+- **Hide stop handles:** Keeps shape segments snapped to stops. If unchecked, stop handles will become draggable. In most cases, this setting should remain checked.
+- **Hide inactive segments:** Toggles whether to show segments that are not currently being edited.
+- **Show stops:** Toggles whether stops are visible, since stop markers can sometimes get in the way when drawing shapes.
+- **Show tooltips:** Toggles whether to show tips when hovering over a segment, e.g. "Click to edit segment" or "Click to add control point".
@@ -137,12 +153,23 @@ Once editing a pattern shape, there are a few behaviors you should be aware of:
There are a few different editing modes that allow for the quick and easy creation of pattern shapes:
-- **Drag handles** (default) - drag handles to change the pattern segment between stops. This mode is also in effect during the advanced modes listed below.
-- **Add stop at click** - at each click on the map, a new stop is generated and the pattern is extended to this stop.
-- **Add stop at interval** - at each click on the map, stops are generated along the auto-generated pattern extended to the map click at the user-defined spacing interval from 100 to 2000 meters.
-- **Add stop at intersection** (experimental, not available in all regions) - at each click on the map, stops are generated along the auto-generated pattern extended to the map click according to the user-defined parameters:
-  - **Offset from intersection** - distance the stop should be placed from the intersection
-  - **Before/after** - whether stop should be placed before or after intersection
-  - **Every *n* intersections** - the number of intersections at which each new stop should be placed
+- **Drag handles** (default): Drag handles to change the pattern segment between stops. This mode is also in effect during the advanced modes listed below.
+- **Add stop at click:** At each click on the map, a new stop is generated and the pattern is extended to this stop.
+- **Add stop at interval:** At each click on the map, the auto-generated pattern is extended to the clicked location, and stops are generated along it at a user-defined spacing interval between 100 and 2000 meters.
+- **Add stop at intersection** (experimental, not available in all regions): At each click on the map, the auto-generated pattern is extended to the clicked location, and stops are generated along it according to the following user-defined parameters:
+  - **Offset from intersection:** Distance from the intersection at which the stop should be placed
+  - **Before/after:** Whether the stop should be placed before or after the intersection
+  - **Every *n* intersections:** The spacing, in intersections, between new stops
**Note**: the last three advanced editing modes should only be used when creating routes in new areas where stops don't already exist.
+
+### Tutorial Video: Editing Pattern Geometry
+The following video demonstrates the steps for editing pattern geometry, as outlined above.
+
diff --git a/docs/user/editor/routes.md b/docs/user/editor/routes.md
index 6e3e5589f..d187c4be2 100644
--- a/docs/user/editor/routes.md
+++ b/docs/user/editor/routes.md
@@ -1,36 +1,46 @@
 # Routes
+In GTFS, a route is a group of trips that follow one or more patterns and operate throughout a service day. It is identified by a unique `route_id` and contains information such as the route name, type, and operating agency. For more information, see the [GTFS specification route reference](https://gtfs.org/schedule/reference/#routestxt).
 
-## Editing routes
+## Editing/Creating Routes
 
-To begin editing routes, click the 🚍 button on the lefthand navigation bar.
+To begin editing routes, click the bus (🚍) button on the lefthand navigation bar.
 
-
+

-Choose a route from the list or search by route name in the dropdown. To create a new route, click `+ New route`. **Note:** as with all newly created items (except patterns), the new route will not be saved until the save icon (💾) is clicked.

+Choose a route from the list or search by route name in the dropdown. To create a new route, click `New route` or `+ Create first route` if this is the first route being created.

-## Zoom to route extents

+### Zoom to route extents

Clicking the 🔍 button (in the top toolbar) with a route selected adjusts the map view to show the entire route (i.e., all patterns) you are editing.

## Route details

-Some of the fields to be filled in are required before you can ‘Save and Continue’ and move to the next step, these are:

+The following fields are required before you can save the route:

-- **Short name** – name of the service/route, this may just be a number

-- **Long name** – the full name of the route, often including the origin and destination

-- **Route Type** – the type of vehicle/transport used on the route

-Other fields in this section are optional and do not have to be filled in, these are:

-- **Description** – a description of the route, do not simply repeat the information in ‘Long name’

-- **URL** – a link to a webpage with information on the specific route, such as the timetable

-- **Color** – if a route has a color (for use in trip planners etc) it can be assigned here

-- **Text color** – if a route has a text color (for use in trip planners etc) it can be assigned here

-- **Comments** – any additional information about the route can be placed here

+- **Status:** Takes the following values:

+  - **In-Progress:** The route has not been completely entered

+  - **Pending Approval:** All of the route's information has been entered, and it is awaiting sign-off from a senior team member

+  - **Approved:** All of the above stages have been completed

+- **Publicly Visible?** This must be set to "Yes" for the route to be included in a GTFS output.

+- **route_id:** An identifier for the route. A randomly generated ID is provided by default.

+- **route_short_name:** Short name of the route; this may just be a number

+- **route_long_name:** The full name of the route, often including the origin and destination

+- **route_type:** The type of vehicle/transport used on the route

-Once all the required fields, and any of the desired optional fields, are filled in click ‘Save and continue’.

+The following fields are optional:

+- **agency_id:** The agency identifier from the defined agencies. Generally this field is automatically populated.

+- **route_desc:** A description of the route; do not simply repeat the information in `route_long_name`

+- **route_sort_order:** Orders the routes for presentation to GTFS consumers. Routes with smaller route_sort_order values should be displayed first.

+- **route_url:** A link to a webpage with information on the specific route, such as the timetable

+- **route_color:** If a route has a color (e.g., for use in trip planners), it can be assigned here

+- **route_text_color:** If a route has a text color (e.g., for use in trip planners), it can be assigned here

+- **Is route wheelchair accessible?** Either "Yes", "No", or "No Information"

+- **Route branding URL:** A link to a webpage with route branding information

+- **Route branding asset:** A route image

-## Review

+Once all the required fields and any of the desired optional fields are filled in, click `Save`.

-This final page allows you to show if a route has been completely entered, and if it has whether it has been checked and approved for inclusion in the GTFS feed.

+**Note:** as with all newly created items (except patterns), the new route will not be saved until the save icon (💾) is clicked.
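As a rough illustration of the required and optional fields listed above, the following JavaScript sketch checks a route object before saving. It is hypothetical: `validateRoute` and the plain-object route shape are assumptions for this example, not the editor's code. The six-digit hex format for `route_color`/`route_text_color` and the non-negative integer `route_sort_order` come from the GTFS specification. Note that GTFS itself only requires one of `route_short_name` or `route_long_name`; this sketch follows the editor's stricter list above.

```javascript
// Hypothetical pre-save check for a route, mirroring the field list above.
// Field names follow GTFS routes.txt; validateRoute is not the editor's API.
const REQUIRED_ROUTE_FIELDS = ["route_id", "route_short_name", "route_long_name", "route_type"];
const HEX_COLOR = /^[0-9A-Fa-f]{6}$/; // GTFS colors: six hex digits, no leading "#"

function isBlank(value) {
  return value === undefined || value === null || String(value).trim() === "";
}

function validateRoute(route) {
  const errors = [];
  for (const field of REQUIRED_ROUTE_FIELDS) {
    if (isBlank(route[field])) errors.push(`${field} is required`);
  }
  for (const field of ["route_color", "route_text_color"]) {
    if (!isBlank(route[field]) && !HEX_COLOR.test(route[field])) {
      errors.push(`${field} must be six hex digits, e.g. FFFFFF`);
    }
  }
  const order = route.route_sort_order;
  if (!isBlank(order) && !(Number.isInteger(order) && order >= 0)) {
    errors.push("route_sort_order must be a non-negative integer");
  }
  return errors; // an empty array means the route passes these checks
}
```

A `route_type` of `0` (tram/light rail in GTFS) is a valid value, which is why the sketch checks for blank strings rather than relying on truthiness.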

### Status

@@ -54,3 +64,15 @@ Routes in the Pending Approval or In Progress phase will not be publicly visible

Once you've created and saved a route, you can then begin creating trip patterns for the route.

[Learn about editing trip patterns »](patterns)

+

+### Tutorial Video: Editing/Creating Routes

+This video provides a step-by-step demonstration of how to edit or create a route.

+

+

diff --git a/docs/user/editor/schedules.md b/docs/user/editor/schedules.md

index d46c7f8b4..d845ae9e2 100644

--- a/docs/user/editor/schedules.md

+++ b/docs/user/editor/schedules.md

@@ -1,4 +1,9 @@

-# Trips

+# Schedules/Calendars

+

+## Schedule and Calendar Overview

+The schedule editor allows for the creation of trips/frequencies for any combination of route, pattern, and/or calendar. To manage or edit schedules or exceptions, navigate to the `Calendar` tab located in the left-hand menu:

+

+

## Keyboard shortcuts

There are a number of keyboard shortcuts for jumping between and modifying trips. To view these, click the `?` in the top righthand corner of the timetable editor. You can also view these at any time while editing trips by typing `?`. The shortcuts are shown below:

@@ -8,7 +13,85 @@ There are a number of keyboard shortcuts for jumping between and modifying trips

## Selecting schedules

The schedule editor allows users to create trips/frequencies for any route, pattern, and calendar combination. The selectors at the top of the page allow users to navigate between calendars for a given pattern or switch between patterns for a route or even routes within the feed.

-Each selection has a set of statistics associated with it to give you a sense of how it fits in with the feed:

+### Calendars

+Transit calendars in GTFS are used to define the days of the week on which transit services are available. See the [GTFS specification calendar reference](https://gtfs.org/schedule/reference/#calendartxt) for more information.

+

+### Exceptions

+Exceptions are deviations from the regular transit service schedule, such as holidays, special events, cancellations and service disruptions. See the [GTFS specification calendar dates reference](https://gtfs.org/schedule/reference/#calendar_datestxt) for more information.

+

+### Schedules/Timetable-based routes

+Timetable-based routes follow a fixed schedule in which the start time, end time, and all the intermediate stops are pre-defined.

+

+### Frequency-based routes

+Unlike the fixed nature of timetable-based trips, frequency-based trips run at regular intervals, with a fixed amount of time between consecutive trips. Frequency-based service offers more flexibility and easier adjustment to changing demand. Visit the [GTFS specification frequency reference](https://gtfs.org/schedule/reference/#frequenciestxt) for more information.

+

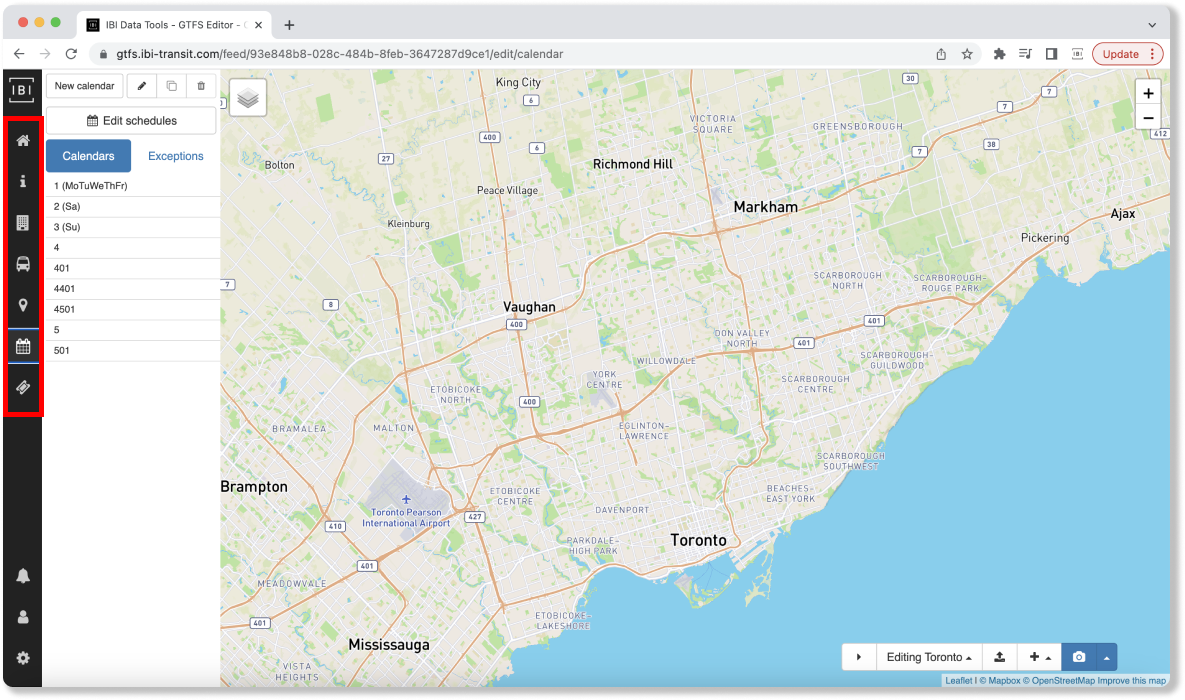

+## Editing/Creating Calendars

+To start editing a calendar, click `+ Create first calendar` if this is the first calendar being added, or click an existing calendar to add or edit its properties, which include:

+

+- **Service ID:** Unique ID for the calendar

+- **Description:** Optional description for calendar (defaults to initial days of week specified)

+- **Days of service:** Days of week on which the service operates

+- **Start/End dates:** The first and last days on which service assigned to the calendar should run

+

+**Note: Be sure to click the save button (💾) after making any changes to calendars.**
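The calendar properties above correspond to the GTFS `calendar.txt` fields. A minimal sketch, assuming a plain object with GTFS field names (`monday` through `sunday` as 0/1 flags, and `start_date`/`end_date` as inclusive `YYYYMMDD` strings), of how such a calendar determines whether service runs on a date. `calendarRunsOn` is a hypothetical helper, and schedule exceptions are ignored here.

```javascript
// Hypothetical helper: does a calendar.txt-style calendar run on a given date?
// Exceptions (calendar_dates.txt) are deliberately not considered here.
const DAY_FIELDS = ["sunday", "monday", "tuesday", "wednesday", "thursday", "friday", "saturday"];

function calendarRunsOn(calendar, yyyymmdd) {
  // YYYYMMDD strings of equal length compare correctly as plain strings.
  if (yyyymmdd < calendar.start_date || yyyymmdd > calendar.end_date) return false;
  const year = Number(yyyymmdd.slice(0, 4));
  const month = Number(yyyymmdd.slice(4, 6));
  const day = Number(yyyymmdd.slice(6, 8));
  // Build the date in UTC so the weekday does not depend on the local time zone.
  const weekday = new Date(Date.UTC(year, month - 1, day)).getUTCDay(); // 0 = Sunday
  return calendar[DAY_FIELDS[weekday]] === 1;
}

// Example: a weekday-only calendar for 2024.
const weekdayCalendar = {
  service_id: "weekday",
  monday: 1, tuesday: 1, wednesday: 1, thursday: 1, friday: 1, saturday: 0, sunday: 0,
  start_date: "20240101",
  end_date: "20241231",
};
```

With this calendar, Monday 1 January 2024 runs, Saturday 6 January 2024 does not, and any date in 2025 falls outside the start/end range.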

+

+### Tutorial Video: Creating/Editing Calendars

+

+

+
+
+## Editing/Creating Exceptions
+To start editing an exception, click an existing exception (if any) in the left pane. You can then edit its name, choose the exception type, select calendars to add, remove, or swap, and set the dates to which the exception applies. To create a new exception, click `New exception` at the top left of the pane (highlighted in yellow).
+
+

+

+You will be able to add or edit properties such as:

+

+- **Name:** Name of schedule exception

+- **Schedule to run:** The chosen schedule that should replace the regularly operating calendars (see Exception types below)

+- **Dates:** One or more dates to which the schedule exception applies

+

+### Exception types

+

+There are a number of built-in exception types (or available schedules to run) that allow for a high degree of flexibility when assigning special services.

+

+- **[Su, M, Tu, W, Th, F, Sa]** - replaces all service for the specified dates with the calendar(s) that operate on the chosen day of the week

+- **No service** - indicates that no service of any kind should operate on the specified dates

+- **Custom** - replaces all service for the specified dates with trips operating on the one or more calendars specified with this option. E.g., run only the `holiday` and `holiday-plus` calendars on Thanksgiving Day.

+- **Swap** - similar to the **Custom** option; however, this option allows for removing one or more specific calendars for the specified dates and/or adding one or more specific calendars. This option is especially useful if only certain routes have altered service on specific dates. For example, a user could remove the `weekday-route-1` calendar and add the `special-route-1` calendar.

+

+**Note: Be sure to click the save button (💾) after making any changes to exceptions.**
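To make the four exception types concrete, here is a hypothetical sketch of how each one could change the set of calendars (service IDs) running on an affected date. The `servicesForDate` function, the `type` values, and the field names are illustrative assumptions, not the editor's data model. In an exported GTFS feed, such changes are expressed through `calendar_dates.txt` rows, where `exception_type` 1 adds service and 2 removes it; how the editor generates those rows is not shown here.

```javascript
// Hypothetical model of the exception types described above.
// regularServiceIds: calendars that would normally run on the date.
// calendarsByWeekday: service IDs that run on each weekday, e.g. { SUNDAY: ["sunday"] }.
function servicesForDate(regularServiceIds, calendarsByWeekday, exception) {
  if (!exception) return new Set(regularServiceIds);
  switch (exception.type) {
    case "NO_SERVICE":
      return new Set(); // nothing runs
    case "CUSTOM":
      return new Set(exception.customServiceIds); // only the listed calendars run
    case "SWAP": {
      // Start from the regular service, then remove and add specific calendars.
      const services = new Set(regularServiceIds);
      for (const id of exception.removedServiceIds) services.delete(id);
      for (const id of exception.addedServiceIds) services.add(id);
      return services;
    }
    default:
      // Day-of-week types, e.g. "SUNDAY": run whatever normally runs on that day.
      return new Set(calendarsByWeekday[exception.type] ?? []);
  }
}
```

Using the Swap example from the list, removing `weekday-route-1` and adding `special-route-1` leaves every other regular calendar untouched, which is what distinguishes Swap from Custom.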

+### Tutorial Video: Creating/Editing Exceptions

+

+

+
+
+## Editing/Creating Timetables
+To begin editing a timetable, click the `Edit schedules` button in the top left corner of the screen (highlighted in yellow).
+
+(Alternatively, if you are in the `Routes` tab (see [Routes](/user/editor/routes/)), click an existing route or click the `New route` button --> click the `Trip patterns` tab --> select a pattern --> select `Use timetables` in the `Type:` dropdown --> click the `Edit schedules` button.)
+
+

+

+**Note**: At least one route, pattern and calendar must have been created to edit schedules.

+

+The selectors located at the top of the page allow users to navigate between calendars for a specific pattern, or to switch between patterns and routes within the feed. Select a combination of route, pattern, and calendar to generate the desired timetable.

+

+

+ +

+Each selection has a set of associated statistics, shown as a number in a grey or green box. Hovering over the box shows the following information:

- **Route**

- \# of trips for the entire route

@@ -18,11 +101,22 @@ Each selection has a set of statistics associated with it to give you a sense of

- \# of trips for selected pattern

- \# of trips in calendar for entire feed

-

+Once a route, pattern, and calendar are selected, a timetable with the following trip details will appear:

-## Schedule toolbar

+- **Block ID** - identifies the vehicle used for the trip

+- **Trip ID** - unique identifier for the trip

+- **Trip Headsign** - headsign for the trip

+- **Arrival/Departure Times** - arrival and departure times (departures shown in grey text) for each stop along the pattern

+

+

+

+To select trips to offset, duplicate or delete, click the row number on the lefthand side of the row. To toggle selection of all trips, click the box in the upper lefthand corner.

-

+

+

+After trips are selected, navigate to the schedule toolbar at the top right of the screen.

+

+## Schedule toolbar

- **Add trip** - add blank trip (first timepoint is `00:00:00`)

- **Duplicate trip(s)** - duplicate the last trip in the spreadsheet or whichever rows are selected

@@ -31,40 +125,48 @@ Each selection has a set of statistics associated with it to give you a sense of

- **Save** - save all changes

- **Offset trip(s)** - specify an offset (`HH:MM`) to offset the last trip in the spreadsheet or whichever rows are selected
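The `Offset trip(s)` action can be pictured as adding a fixed amount of time to every stop time in the selected trips. The sketch below is a hypothetical illustration (`offsetTrip` and the `{ arrivalTime, departureTime }` shape are assumptions, not the editor's code). It accepts an `HH:MM` offset with an optional leading minus sign and keeps GTFS-style times, which may exceed `24:00:00` for trips that run past midnight.

```javascript
// Hypothetical illustration of offsetting a trip's stop times by an HH:MM value.
function toSeconds(time) {
  const [h, m, s = 0] = time.split(":").map(Number); // "HH:MM" or "HH:MM:SS"
  return h * 3600 + m * 60 + s;
}

function fromSeconds(total) {
  const pad = (n) => String(n).padStart(2, "0");
  return `${pad(Math.floor(total / 3600))}:${pad(Math.floor((total % 3600) / 60))}:${pad(total % 60)}`;
}

function offsetTrip(stopTimes, offset) {
  const sign = offset.startsWith("-") ? -1 : 1;
  const shift = sign * toSeconds(offset.replace(/^[-+]/, ""));
  const shiftTime = (time) => {
    const shifted = toSeconds(time) + shift;
    if (shifted < 0) throw new RangeError(`offset moves ${time} before 00:00:00`);
    return fromSeconds(shifted); // hours may exceed 23, as GTFS allows
  };
  return stopTimes.map(({ arrivalTime, departureTime }) => ({
    arrivalTime: shiftTime(arrivalTime),
    departureTime: shiftTime(departureTime),
  }));
}
```

For example, offsetting a stop at `23:55:00` by `00:10` yields `24:05:00` rather than wrapping to `00:05:00`, so the trip stays on the same service day.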

-## Selecting trips

-To select trips to offset, duplicate or delete, click the row number on the lefthand side of the row. To toggle selection of all trips, click the box in the upper lefthand corner.

-

+

+

+**Note:** Times entered manually into the schedule editor are automatically converted to the standardized format `13:00:00`.

-## Recognized time entry formats

-When entering times manually into the schedule editor they will automatically be converted to a standardized format `13:00:00`. The following time formats are automatically recognized and converted:

+The following time formats are automatically recognized and converted:

- 24-hr

- `13:00:00`

- `13:00`

+ - `1300`

- 12-hr

- - `1:00p`

+ - `1pm`

- `1:00pm`

- `1:00 pm`

+ - `1:00:00pm`

- `1:00:00 pm`
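The recognized formats listed above can be normalized with a single regular expression. This is a hypothetical sketch (`normalizeTime` is not the editor's parser). It assumes that a 12-hour time carries an `am`/`pm` suffix, returns `null` for anything it does not recognize, and, like GTFS, allows 24-hour values of `24` or more for service after midnight.

```javascript
// Hypothetical normalizer for manually typed times -> "HH:MM:SS".
function normalizeTime(input) {
  // Hours, optional minutes (with or without a colon), optional seconds,
  // then an optional am/pm suffix with or without a space.
  const match = input
    .trim()
    .toLowerCase()
    .match(/^(\d{1,2})(?::?(\d{2}))?(?::(\d{2}))?\s*(am|pm)?$/);
  if (!match) return null; // unrecognized entry
  let hours = Number(match[1]);
  const minutes = Number(match[2] ?? 0);
  const seconds = Number(match[3] ?? 0);
  const meridiem = match[4];
  if (minutes > 59 || seconds > 59) return null;
  if (meridiem) {
    if (hours < 1 || hours > 12) return null;
    hours = (hours % 12) + (meridiem === "pm" ? 12 : 0); // 12am -> 0, 1pm -> 13
  }
  const pad = (n) => String(n).padStart(2, "0");
  return `${pad(hours)}:${pad(minutes)}:${pad(seconds)}`;
}
```

Every entry in the list above (`13:00:00`, `13:00`, `1300`, `1pm`, `1:00pm`, `1:00 pm`, `1:00:00pm`, `1:00:00 pm`) normalizes to `13:00:00` under this sketch.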

-## Editing timetables

-Trip details include:

+### Tutorial Video: Editing/Creating Timetables

+The following video demonstrates the creation and editing of timetables described above.

-- **Block ID** - identifies the vehicle used for the trip

-- **Trip ID** - unique identifier for the trip

-- **Trip Headsign** - headsign for the trip

-- **Arrival/Departure Times** - arrival and departure times (departures shown in grey text) for each stop along the pattern

+

-

+
+## Editing/Creating Frequencies
+To edit/create frequencies, navigate to the `Routes` tab (see [Routes](/user/editor/routes/)), click an existing route or click the `New route` button --> click the `Trip patterns` tab --> click a pattern --> select `Use frequencies` in the `Type:` dropdown --> click the `Edit schedules` button.
 
-## Editing frequencies
 Frequency details include:
 
 - **Block ID** - identifies the vehicle used for the trip
 - **Trip ID** - unique identifier for the trip
 - **Trip Headsign** - headsign for the trip
 - **Start/End Times** - define the beginning and end time for the interval over which the frequency operates
-- **Headway** - headway (in minutes) that the pattern runs during the time interval
+- **Headway** - headway (in seconds) at which the pattern runs during the time interval
+
+Editing frequencies follows the [same editing procedures](#tutorial-video-editingcreating-timetables) as editing timetables.
 
-
+
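Because the headway is entered in seconds, a 15-minute headway is `900`, not `15`. As a hypothetical sketch (the `expandFrequency` helper and its argument names are assumptions), the trip start times implied by one frequency entry can be listed as below. It follows the GTFS `frequencies.txt` rule for exactly scheduled trips (`exact_times=1`): a trip starts at `start_time + k * headway_secs` only while that time is before `end_time`.

```javascript
// Hypothetical helper: list the trip start times implied by one frequency entry.
function toSeconds(time) {
  const [h, m, s = 0] = time.split(":").map(Number);
  return h * 3600 + m * 60 + s;
}

function fromSeconds(total) {
  const pad = (n) => String(n).padStart(2, "0");
  return `${pad(Math.floor(total / 3600))}:${pad(Math.floor((total % 3600) / 60))}:${pad(total % 60)}`;
}

function expandFrequency({ startTime, endTime, headwaySecs }) {
  if (!Number.isInteger(headwaySecs) || headwaySecs <= 0) {
    throw new RangeError("headwaySecs must be a positive whole number of seconds");
  }
  const end = toSeconds(endTime);
  const starts = [];
  // A trip starts at startTime + k * headway while that time is before endTime.
  for (let t = toSeconds(startTime); t < end; t += headwaySecs) {
    starts.push(fromSeconds(t));
  }
  return starts;
}
```

An entry from `06:00:00` to `07:00:00` with a headway of `900` seconds produces four departures: `06:00:00`, `06:15:00`, `06:30:00`, and `06:45:00`.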

\ No newline at end of file

diff --git a/docs/user/editor/stops.md b/docs/user/editor/stops.md

index 054b9ee36..86134a0f6 100644

--- a/docs/user/editor/stops.md

+++ b/docs/user/editor/stops.md

@@ -2,37 +2,66 @@

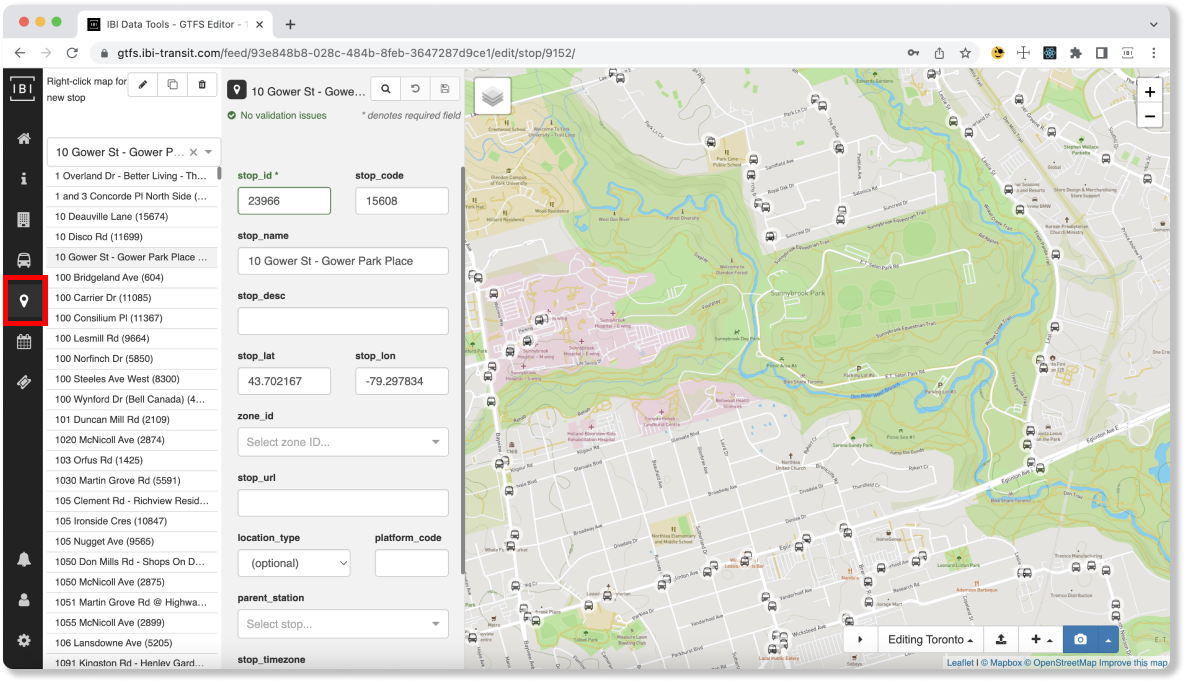

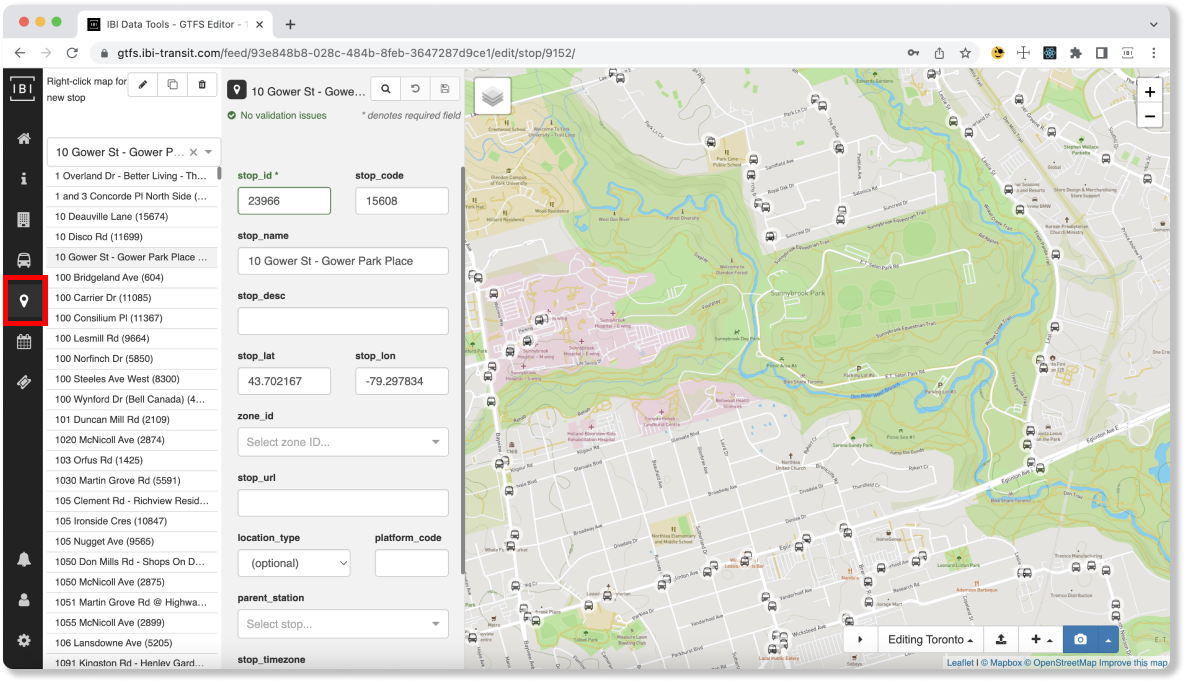

## Editing stops

-To begin editing stops, click the map marker icon button on the lefthand navigation bar.

+To begin editing stops, click the map marker icon button on the lefthand navigation bar (outlined in red).

-

+

-## Selecting a stop

+### Selecting a stop

Choose a stop from the list or search by stop name in the dropdown.

You can also **zoom into the map** while the stop list is visible and once you're close enough you'll begin to see stops displayed. Click one to begin editing its details.

-## Creating a stop: right-click on map

+### Creating a stop

-To create a new stop, **right-click on the map** in the location you would like to place the stop. **Note:** as with all newly created items (except patterns), the new stop will not be saved until the save icon (💾) is clicked.

+To create a new stop, **right-click on the map** in the location you would like to place the stop.

-## Moving a stop

+**Note:** as with all newly created items (except patterns), the new stop will not be saved until the save icon (💾) is clicked.

-To move a selected stop simply **click and drag the stop to the new location**. Or, if already you know the latitude and longitude coordinates, you can copy these into the text fields. After moving the stop, click save to keep the changes.

+### Editing a stop

+Once a stop is created or selected, the following parameters are required:

+- **Stop ID (`stop_id`):** Identifies a stop, station, or station entrance.

+- **Location (`stop_lat`/`stop_lon`):** The stop's latitude and longitude. **Note:** Stop locations should have an error of no more than four meters when compared to the actual stop position.
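To check an entered location against the four-meter guideline, the distance between two coordinates can be estimated with the haversine formula. This sketch is an illustration only: `haversineMeters` and `withinTolerance` are hypothetical helpers, and treating the Earth as a sphere with a mean radius of about 6,371 km is an approximation that is more than adequate at this scale.

```javascript
// Hypothetical helpers: great-circle distance between two lat/lon points, in meters.
const EARTH_RADIUS_M = 6371008.8; // mean Earth radius (spherical approximation)

function haversineMeters(lat1, lon1, lat2, lon2) {
  const toRad = (deg) => (deg * Math.PI) / 180;
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
}

// Is the entered stop position within `toleranceMeters` of the surveyed position?
function withinTolerance(entered, actual, toleranceMeters = 4) {
  return haversineMeters(entered.lat, entered.lon, actual.lat, actual.lon) <= toleranceMeters;
}
```

As a rule of thumb, 0.00001 degrees of latitude is roughly 1.1 meters, so a coordinate that differs in the fourth decimal place (about 11 meters) already exceeds the guideline.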

-

+### Moving a stop

-## View all stops for feed

+To move a selected stop, **click and drag the stop to the new location**. Or, if you already know the latitude and longitude coordinates, you can copy these into the text fields. After moving the stop, click save to keep the changes.

+

+### View All Stops for a Feed

To view all stops for a feed, hover over the map layers icon (in the top, lefthand corner of the map) and turn on the `Stop locations` layer. When you do, you'll see all of the stops (which appear as grey circles) for the feed even at wide zoom levels. This layer can be viewed whether or not the stop list is visible, so it can be helpful for users who would like to view stop locations alongside routes or trip patterns.

-

+

\ No newline at end of file

+Clicking on a stop shown in this layer selects it for editing, but be careful: it can be tricky to select the right stop from very far away!

+### Tutorial video: creating and editing stops

+The following video demonstrates, step by step, how to create and edit stops as described above. The video covers:

+- Adding stops

+- Editing stop positions

+- Editing stop details

+- Showing all stops on the map interface

+

+

+

OLD POSITION --X--> O

+ // O --------------------------> O

+ if (!movedFromEnd) {

+ // Delete the old "to" segment's control points and segments (there is no "to" segment when moving from the end)

+ // $FlowFixMe

+ const previousToSegments = previousToStopControlPoint.cpIndex - movedStopControlPoint.cpIndex; // Semi-colon for babel parsing.

+ [deletedControlPoints, deletedSegments] = updateControlPointsAndSegments(deletedControlPoints, deletedSegments, movedStopControlPoint.cpIndex, previousToSegments)

+ }

+

+ if (!movedFromStart) {

+ // Delete the old "from" segment's control points and segments (there is no "from" segment when moving from the start)

+ // $FlowFixMe

+ const previousFromSegments = movedStopControlPoint.cpIndex - previousFromStopControlPoint.cpIndex; // Semi-colon for babel parsing.

+ // $FlowFixMe

+ [deletedControlPoints, deletedSegments] = updateControlPointsAndSegments(deletedControlPoints, deletedSegments, previousFromStopControlPoint.cpIndex, previousFromSegments)

+ }

+

+ return [deletedControlPoints, deletedSegments]

+}
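The index arithmetic above can be isolated from the Flow-typed code. This sketch assumes that segment i joins control point i to control point i + 1. That is an assumption, because the diff does not show `updateControlPointsAndSegments`. Under it, a leg between control points a and b spans `b - a` segments starting at a. `segmentRangesToDelete` is a hypothetical helper that only computes the `(start, count)` pairs the diff passes along. It does not mutate anything.

```javascript
// Hypothetical helper: compute which segments to delete when a stop moves.
// Assumes segment i joins control point i to control point i + 1, so the leg
// between control points a and b (a < b) is `b - a` segments starting at a.
function segmentRangesToDelete ({fromCpIndex, movedCpIndex, toCpIndex, movedFromStart, movedFromEnd}) {
  const ranges = []
  if (!movedFromEnd) {
    // Old "to" leg: moved stop -> next stop (absent if the stop was last).
    ranges.push({start: movedCpIndex, count: toCpIndex - movedCpIndex})
  }
  if (!movedFromStart) {
    // Old "from" leg: previous stop -> moved stop (absent if the stop was first).
    ranges.push({start: fromCpIndex, count: movedCpIndex - fromCpIndex})
  }
  return ranges
}
```

For a stop at control point 5 with neighbors at 2 and 9, this yields four "to" segments starting at 5 and three "from" segments starting at 2, in the same order as the diff.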

+

+/**

+ * Method to remove a segment and insert a new one that points to the new stop that is being inserted.

+ * TODO: Refactor this method to make things cleaner.

+ */

+const removeNewSegments = (

+ deletedControlPoints: Array

{/* These are the available snapshots */}

- Available snapshots}>

-

+

+

+ {this.messages('availableSnapshots')}

+

{snapshots.length === 0

- ? No other snapshots

+ ? {this.messages('noOtherSnapshots')}

: snapshots.map(s => {

return (

{

-

-

+

+

@@ -121,11 +129,12 @@ export default class EditorFeedSourcePanel extends Component {

}

}

-type ItemProps = {

+export type ItemProps = {

createFeedVersionFromSnapshot: typeof versionActions.createFeedVersionFromSnapshot,

deleteSnapshot: typeof snapshotActions.deleteSnapshot,

disabled: boolean,

downloadSnapshot: typeof snapshotActions.downloadSnapshot,

+ exportPatternsModal: any,

feedSource: Feed,

modal: any,

restoreSnapshot: typeof snapshotActions.restoreSnapshot,

@@ -141,8 +150,7 @@ class SnapshotItem extends Component {

}

_onClickExport = () => {

- const {createFeedVersionFromSnapshot, feedSource, snapshot} = this.props

- createFeedVersionFromSnapshot(feedSource, snapshot.id)

+ this.props.exportPatternsModal.open({snapshot: this.props.snapshot})

}

_onDeleteSnapshot = () => {

@@ -155,7 +163,7 @@ class SnapshotItem extends Component {

}

_onRestoreSnapshot = () => {

- const {restoreSnapshot, feedSource, snapshot} = this.props

+ const {feedSource, restoreSnapshot, snapshot} = this.props

this.props.modal.open({

title: `${this.messages('restore')}`,

body: this.messages('confirmLoad'),

@@ -172,14 +180,14 @@ class SnapshotItem extends Component {

return (

{

disabled={createDisabled}

onClick={this._onClickNew}>

return (

@@ -226,7 +232,7 @@ export default class EntityList extends Component {

block

disabled={!hasRoutes}

onClick={this._onClickTimetableEditor}>

- {

@@ -168,7 +169,7 @@ export default class EntityListButtons extends Component {

: ,

+ validationState: string

+}

+

+type State = {

+ isDeleted: boolean,

+ isDuplicate: boolean

}

-export default class ExceptionDate extends Component {

- _onDateChange = (millis: number) => {

+export const inputStyleProps = {

+ padding: 0,

+ textAlign: 'center'

+}

+

+export default class ExceptionDate extends Component {

+ messages = getComponentMessages('ExceptionDate')

+

+ _addRange = () => {

const {activeComponent, activeEntity, index, updateActiveGtfsEntity} = this.props

const dates = [...activeEntity.dates]

- dates[index] = moment(+millis).format('YYYYMMDD')

+

+ const currentDate = dates[index]

+

+ // A default range of 1 day is created

+ dates.push(moment(currentDate).add(1, 'days').format('YYYYMMDD'))

+

+ sortDates(dates) // Sort before update for maintaining indices

+ updateActiveGtfsEntity({

+ component: activeComponent,

+ entity: activeEntity,

+ props: {dates}

+ })

+ }
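`_addRange` appends the day after the selected date using moment's `add(1, 'days')`. The same step can be sketched with the built-in `Date` in UTC, which avoids local-time and daylight-saving shifts. `parseYmd`, `formatYmd`, and `addNextDay` are hypothetical helpers, and the sketch assumes the `YYYYMMDD` format the component writes.

```javascript
// Parse a YYYYMMDD string into a Date at UTC midnight.
function parseYmd (ymd) {
  const y = Number(ymd.slice(0, 4))
  const m = Number(ymd.slice(4, 6))
  const d = Number(ymd.slice(6, 8))
  return new Date(Date.UTC(y, m - 1, d))
}

// Format a Date back to YYYYMMDD using its UTC fields.
function formatYmd (date) {
  const pad = (n) => String(n).padStart(2, '0')
  return `${date.getUTCFullYear()}${pad(date.getUTCMonth() + 1)}${pad(date.getUTCDate())}`
}

// Return a copy of `dates` with the day after dates[index] appended
// (the input array is left unchanged).
function addNextDay (dates, index) {
  const next = parseYmd(dates[index])
  next.setUTCDate(next.getUTCDate() + 1) // rolls over month and year boundaries
  return [...dates, formatYmd(next)]
}
```

Like `_addRange`, this appends without sorting, so a follow-up sort is still needed to keep indices stable.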

+

+ _onDateChange = (millis: number | string) => {

+ if (millis === 'Invalid date') return // Invalid date thrown from Datetimepicker is usually caused by typing

+

+ const {activeComponent, activeEntity, index, updateActiveGtfsEntity} = this.props

+ const dates = [...activeEntity.dates]

+ const newDate = moment(+millis).format('YYYYMMDD')

+

+ // Check if the date selected is already in an existing range.

+ // Invalid date results from typing in the Datetimepicker component (which is valid as long as it resolves to a date)

+ if (dates.some(date => date === newDate && date !== 'Invalid date')) {

+ dates.splice(index, 1)

+ toast.warn(

+ this.messages('dateRemoved'),

+ {

+ position: 'top-right',

+ autoClose: 4000,

+ hideProgressBar: false,

+ closeOnClick: true,

+ pauseOnHover: true,

+ draggable: true

+ }

+ )

+ } else dates[index] = newDate

+

+ sortDates(dates)

updateActiveGtfsEntity({

component: activeComponent,

entity: activeEntity,

@@ -43,39 +99,76 @@ export default class ExceptionDate extends Component {

}
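The duplicate check in `_onDateChange` can be sketched as a pure function. `applyDateChange` is hypothetical and omits the toast and the `'Invalid date'` guard. It sorts with plain string comparison instead of moment, which gives the same order for `YYYYMMDD` strings. As written in the diff, the `some` check also matches the entry being edited, so re-selecting an entry's current date removes it. The sketch keeps that behavior.

```javascript
// Hypothetical pure version of the duplicate check in _onDateChange.
// Returns a new sorted array; `dates` is not mutated.
function applyDateChange (dates, index, newDate) {
  const next = [...dates]
  if (next.some((date) => date === newDate)) {
    // The date already exists: drop the edited entry instead of duplicating it.
    next.splice(index, 1)
  } else {
    next[index] = newDate
  }
  // Zero-padded YYYYMMDD strings sort chronologically as plain strings.
  return next.sort()
}
```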

render () {

- const {date, index, isNotValid} = this.props

+ const {date, index, validationErrors, validationState} = this.props

const dateTimeProps = {

mode: 'date',

- dateTime: date ? +moment(date) : +moment(),

+ dateTime: date ? +moment(date) : undefined,

onChange: this._onDateChange,

- defaultText: undefined

+ defaultText: undefined,

+ size: 'sm'

}

+

if (!date) {

- dateTimeProps.defaultText = 'Please select a date'

+ dateTimeProps.defaultText = this.messages('selectDate')

}

return (

- ,

+ validationState: string

+}

+

+type State = {

+ forceErrorState: boolean

+}

+

+export const sortDates = (dates: Array): void => { dates.sort((a, b) => moment(a).diff(moment(b))) }
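`sortDates` orders entries by parsing each one with moment. Because the stored strings are zero-padded `YYYYMMDD`, plain string comparison gives the same chronological order without parsing. The following is a minimal sketch. `sortYmdDates` is a hypothetical name, and the sketch assumes every entry is such a string. The numeric placeholders that are filtered out elsewhere in this diff would need to be excluded first.

```javascript
// Hypothetical moment-free variant of sortDates: sorts YYYYMMDD strings
// in place and returns nothing, matching sortDates' `void` signature.
const sortYmdDates = (dates) => {
  dates.sort((a, b) => (a < b ? -1 : a > b ? 1 : 0))
}
```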

+

+export default class ExceptionDateRange extends Component {

+ messages = getComponentMessages('ExceptionDateRange')

+

+ state = {

+ forceErrorState: false

+ }

+

+ // This is kept as an arrow function in order to access the component's `this`

+ _onRangeUpdate = (millis: number | string, isStartDate: boolean) => {

+ if (millis === 'Invalid date') return // Invalid date thrown from Datetimepicker is usually caused by typing

+ const {activeComponent, activeEntity, range, updateActiveGtfsEntity} = this.props

+ const {endDate, startDate} = range

+

+ const newRangeBoundary = moment(+millis)

+ const startMoment = moment(startDate)

+ const endMoment = moment(endDate)

+

+ // This prevents the user from inputting invalid data. Validation is not required since this behaviour

+ // is fully prevented (see also use of minDate and maxDate in DateTimePickers)

+ if (

+ (isStartDate && newRangeBoundary.diff(endMoment) > 0) ||

+ (!isStartDate && newRangeBoundary.diff(startMoment) < 0)

+ ) {

+ // The user has entered a date that would invert the range, so flag the entry as incorrect.

+ // All we really need to do is set the validation state to be an error.

+ this.setState({forceErrorState: true})

+ return

+ }

+ // If we've made it here, our date is valid and so we should not override validation

+ // TODO: add rest of method to callback in case state does not update?

+ this.setState({forceErrorState: false}, () => {

+ // Filter out new dates (0s) and add them back later so they are preserved in the final array

+ const newDates = activeEntity.dates.filter(date => typeof date === 'number')

+ let dates = activeEntity.dates.filter(date => typeof date === 'string')

+

+ // Check whether the updated bounds are larger than before, then add or remove dates accordingly.

+ // $FlowFixMe: Flow doesn't recognise array filter above.

+ dates = updateDates(isStartDate, newRangeBoundary, dates, startMoment, endMoment)

+

+ // We need to sort the dates before update so that indices are maintained for validation.

+ sortDates(dates)

+

+ updateActiveGtfsEntity({

+ component: activeComponent,

+ entity: activeEntity,

+ props: {dates: [...dates, ...newDates]}

+ })

+ })

+ }
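`_onRangeUpdate` rejects a boundary that would invert the range, then delegates to `updateDates`, whose body is not part of this diff. The following is a hypothetical stand-in for that flow. It returns `null` where the component sets `forceErrorState`. Otherwise, it replaces the old range's days with the days of the resized range. It assumes dates are `YYYYMMDD` strings and is not the real `updateDates`.

```javascript
// YYYYMMDD string <-> UTC Date helpers (hypothetical).
const toDate = (ymd) => new Date(Date.UTC(+ymd.slice(0, 4), +ymd.slice(4, 6) - 1, +ymd.slice(6, 8)))
const toYmd = (date) => date.toISOString().slice(0, 10).replace(/-/g, '')

// Every day from `start` to `end`, inclusive.
function enumerateDays (start, end) {
  const days = []
  const last = toDate(end)
  for (let d = toDate(start); d <= last; d.setUTCDate(d.getUTCDate() + 1)) {
    days.push(toYmd(d))
  }
  return days
}

// Hypothetical stand-in for the guard plus updateDates: move one boundary of
// `range`, returning null if the range would be inverted, else the updated,
// de-duplicated, sorted list of dates.
function resizeRange (dates, range, newBoundary, isStartDate) {
  const start = isStartDate ? newBoundary : range.startDate
  const end = isStartDate ? range.endDate : newBoundary
  if (start > end) return null // same check as the diff: start after end is rejected
  // Remove the old range's days, then add the resized range's days.
  const oldDays = new Set(enumerateDays(range.startDate, range.endDate))
  const kept = dates.filter((date) => !oldDays.has(date))
  return [...new Set([...kept, ...enumerateDays(start, end)])].sort()
}
```

Equal start and end dates are allowed, matching the diff's strict `> 0` / `< 0` comparisons.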

+

+ _onSelect = (key: string) => {

+ const {activeComponent, activeEntity, range, updateActiveGtfsEntity} = this.props

+ let dates = [...activeEntity.dates]

+

+ const newDates = activeEntity.dates.filter(date => typeof date === 'number')

+ // $FlowFixMe Flow does not recognize filtering on typeof

+ dates = (dates.filter(date => typeof date === 'string'): Array)

+

+ const startMoment = moment(range.startDate)

+ const endMoment = moment(range.endDate)

+ const deleteStart = key === 'range' ? startMoment : startMoment.add(1, 'days') // Preserve the first day if deleting only the end date

+

+ dates = modifyRangeOfDates(dates, endMoment, deleteStart, true)

+

+ // We need to sort the dates before update so that indices are maintained for validation.

+ sortDates(dates)

+

+ updateActiveGtfsEntity({

+ component: activeComponent,

+ entity: activeEntity,

+ props: {dates: [...dates, ...newDates]}

+ })

+ }
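`_onSelect` sets aside numeric placeholder entries, deletes days from the range, and then re-appends the placeholders. The following is a hypothetical sketch of that flow, assuming `YYYYMMDD` strings. The diff only shows the `'range'` key. Following the inline comment on `deleteStart`, any other key is treated here as "keep the first day, delete the rest". `modifyRangeOfDates` itself is not shown, so this is not its implementation.

```javascript
// Day after a YYYYMMDD string; Date.UTC normalizes an out-of-range day
// into the following month.
const nextDay = (ymd) =>
  new Date(Date.UTC(+ymd.slice(0, 4), +ymd.slice(4, 6) - 1, +ymd.slice(6, 8) + 1))
    .toISOString().slice(0, 10).replace(/-/g, '')

// Hypothetical sketch of _onSelect's delete options. With key 'range', every
// day from startDate to endDate is removed; with any other key, startDate is
// kept and only the later days are removed. Numeric placeholders for new,
// unsaved dates are set aside and re-appended, as the diff does with newDates.
function deleteFromRange (dates, range, key) {
  const newDates = dates.filter((date) => typeof date === 'number')
  const firstDeleted = key === 'range' ? range.startDate : nextDay(range.startDate)
  const kept = dates.filter((date) =>
    typeof date === 'string' && (date < firstDeleted || date > range.endDate))
  kept.sort()
  return [...kept, ...newDates]
}
```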

+

+ render () {

+ const {index, range, validationErrors, validationState} = this.props

+ const endMoment = range.endDate ? moment(range.endDate) : moment(range.startDate).add(1, 'days')

+ const startMoment = range.startDate ? moment(range.startDate) : undefined

+

+ const dateTimeProps = {

+ defaultText: undefined,

+ mode: 'date',

+ size: 'sm',

+ style: {gridColumn: 'span 5'}