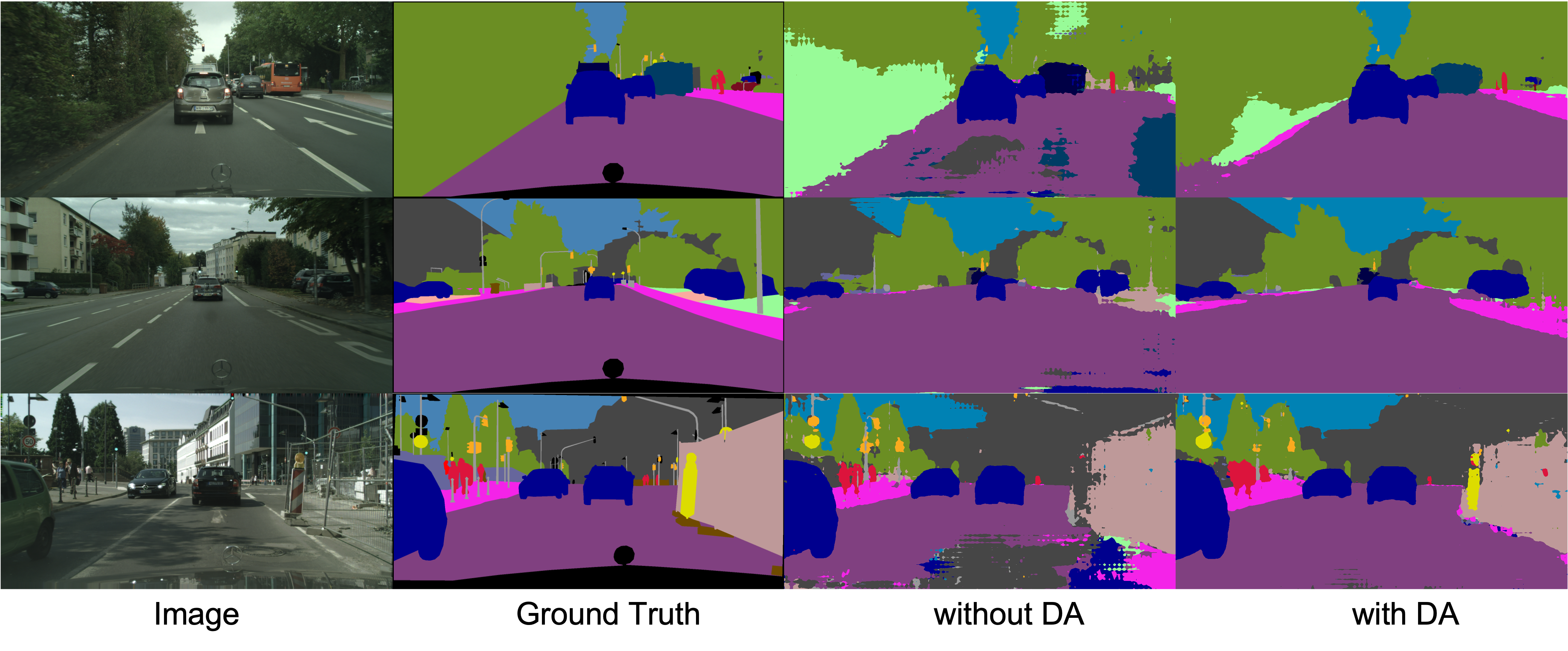

Domain adaptation is the ability to apply an algorithm trained on one or more "source domains" to a different (but related) "target domain". Domain adaptation algorithms can alleviate the performance drop caused by this domain shift. In unsupervised domain adaptation (UDA), no manually labeled target-domain images are used. The picture above shows the result of applying our UDA algorithm to semantic segmentation. Comparing the "without DA" and "with DA" segmentation results shows a remarkable performance gain.

In this project, we reproduce PixMatch [Paper|Code] with PaddlePaddle and reach mIoU = 47.8% on the Cityscapes dataset when adapting from GTA5.
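PixMatch trains for consistency on the target domain: the model's prediction on a clean target image serves as a pseudo-label for its prediction on a perturbed copy of the same image. The pure-Python sketch below computes such a loss for a few pixels, each given as a vector of class probabilities. It is not the code used in this project; the helper `pixelwise_consistency_loss`, the use of hard argmax pseudo-labels, and the optional confidence `threshold` are our assumptions. In a real training loop both inputs would be tensors, with gradients stopped on the clean view.

```python
import math
from typing import Sequence


def pixelwise_consistency_loss(
    clean_probs: Sequence[Sequence[float]],
    aug_probs: Sequence[Sequence[float]],
    threshold: float = 0.0,
) -> float:
    """Mean cross-entropy of augmented-view predictions against hard
    pseudo-labels from the clean view (hypothetical helper).

    Each element of `clean_probs` / `aug_probs` is one pixel's class
    probability vector. Pixels whose clean-view confidence is below
    `threshold` (an assumed knob, disabled by default) are skipped.
    """
    total, count = 0.0, 0
    for p_clean, p_aug in zip(clean_probs, aug_probs):
        # Hard pseudo-label: the most likely class in the clean view.
        label = max(range(len(p_clean)), key=lambda c: p_clean[c])
        if p_clean[label] < threshold:
            continue
        # Cross-entropy of the augmented view w.r.t. the pseudo-label,
        # clamped to avoid log(0).
        total += -math.log(max(p_aug[label], 1e-12))
        count += 1
    return total / count if count else 0.0


clean = [[0.9, 0.1], [0.6, 0.4]]  # clean target view, two pixels
aug = [[0.8, 0.2], [0.5, 0.5]]    # the same pixels after augmentation
print(pixelwise_consistency_loss(clean, aug))
print(pixelwise_consistency_loss(clean, aug, threshold=0.7))
```

With the default threshold both pixels contribute. Raising it to 0.7 drops the second pixel, whose clean-view confidence is only 0.6.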

On top of that, we also tried several modifications:

- Adding an edge-constraint branch to improve edge accuracy (negative result so far; needs further tuning)
- Using edges as prior information to refine the segmentation result (negative result so far; needs further tuning)
- Aligning the feature structure across domains (positive result, reaching mIoU = 48.0%)
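The README does not describe how the edge targets for the edge branch or the edge prior were built. One common choice, assumed here purely for illustration, marks a pixel as an edge when any of its 4-neighbours has a different class in the ground-truth label map, and ignores the `255` void label that GTA5 and Cityscapes train-ID maps conventionally use. The helper `label_to_edges` is hypothetical.

```python
from typing import List


def label_to_edges(label: List[List[int]], ignore_index: int = 255) -> List[List[int]]:
    """Binary edge map: 1 where a pixel has a 4-neighbour of another class.

    Hypothetical helper; void pixels (ignore_index) are never edges and
    never make their neighbours edges.
    """
    if not label:
        return []
    h, w = len(label), len(label[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            c = label[y][x]
            if c == ignore_index:
                continue
            for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w:
                    n = label[ny][nx]
                    if n != ignore_index and n != c:
                        edges[y][x] = 1
                        break
    return edges


label = [
    [0, 0, 1],
    [0, 0, 1],
    [2, 2, 2],
]
for row in label_to_edges(label):
    print(row)
```

A 4-neighbourhood gives class boundaries two pixels thick (one on each side); thinner or thicker targets are equally plausible design choices.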

| Model | Backbone | Resolution | Training Iters | mIoU | Links |
|---|---|---|---|---|---|
| PixMatch | resnet101 | 1280x640 | 60000 | 47.8% | model / log / vdl |
| Pixmatch-featpullin | resnet101 | 1280x640 | 100000 | 48.0% | model / log / vdl |
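The mIoU column is the mean, over classes, of the intersection-over-union between predicted and ground-truth masks. As a rough sketch of the metric (not PaddleSeg's own implementation), the function below accumulates a confusion matrix over flattened label maps and skips pixels labeled with an assumed ignore index of 255. It averages IoU only over classes that appear in the prediction or the ground truth; implementations differ on how they treat classes that appear in neither.

```python
from typing import List, Sequence


def mean_iou(
    pred: Sequence[int],
    label: Sequence[int],
    num_classes: int,
    ignore_index: int = 255,
) -> float:
    """mIoU over flattened prediction and label maps (illustrative sketch)."""
    # confusion[t][p] counts pixels with ground truth t predicted as p.
    confusion: List[List[int]] = [[0] * num_classes for _ in range(num_classes)]
    for p, t in zip(pred, label):
        if t == ignore_index:
            continue  # void pixels are not scored
        confusion[t][p] += 1

    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp  # pixels of class c that were missed
        fp = sum(confusion[r][c] for r in range(num_classes)) - tp  # wrongly predicted as c
        union = tp + fp + fn
        if union > 0:  # skip classes absent from both maps
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


print(mean_iou([0, 0, 1, 1], [0, 1, 1, 255], num_classes=2))  # 0.5
```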

If you would like to try out our project, follow the steps below.

- Clone the repository and install the dependencies:

```shell
git clone https://github.com/PaddlePaddle/PaddleSeg.git
cd PaddleSeg/contrib/DomainAdaptation/
pip install -r requirements.txt
python -m pip install paddlepaddle-gpu==2.2.0 -i https://mirror.baidu.com/pypi/simple
```

- Download the GTA5 dataset, the Cityscapes dataset, and the corresponding data lists.

- Organize the datasets and data lists as follows:

```
data
├── cityscapes
│   ├── gtFine
│   │   ├── train
│   │   │   ├── aachen
│   │   │   └── ...
│   │   └── val
│   └── leftImg8bit
│       ├── train
│       └── val
├── GTA5
│   ├── images
│   ├── labels
│   └── list
├── city_list
└── gta5_list
```
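Before training, it can help to confirm that the folders are where the data loaders expect them. The script below is a hypothetical helper (not part of the repository) that checks the directory tree above relative to a data root, assumed here to be `data/` in the working directory.

```python
from pathlib import Path
from typing import List

# Paths from the directory tree above, relative to the data root.
EXPECTED_DIRS = [
    "cityscapes/gtFine/train",
    "cityscapes/gtFine/val",
    "cityscapes/leftImg8bit/train",
    "cityscapes/leftImg8bit/val",
    "GTA5/images",
    "GTA5/labels",
    "GTA5/list",
]
# The tree does not say whether these are files or folders,
# so only their existence is checked.
EXPECTED_ENTRIES = ["city_list", "gta5_list"]


def missing_paths(root: str = "data") -> List[str]:
    """Return the expected paths that are absent under `root`."""
    base = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    missing += [e for e in EXPECTED_ENTRIES if not (base / e).exists()]
    return missing


if __name__ == "__main__":
    for path in missing_paths():
        print("missing:", path)
```

An empty output means every path in the tree was found; it does not check the files inside each folder.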

- Download the model pretrained on GTA5 and save it to `models/`.

- Train on one GPU:
    - To reproduce PixMatch, run `sh run-DA_src.sh`.
    - To run the other experiments, change the config file used in the training script.

- Validate on one GPU:
    - Download the model trained on Cityscapes and save it to `models/model.pdparams`.
    - Run the validation command below. Because we forgot to save the EMA model, this checkpoint reaches 46% mIoU rather than the 47.8% in the table:

```shell
python3 -m paddle.distributed.launch val.py --config configs/deeplabv2/deeplabv2_resnet101_os8_gta5cityscapes_1280x640_160k_newds_gta5src.yml --model_path models/model.pdparams --num_workers 4
```
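The note about the missing EMA model refers to an exponential moving average of the network weights, a smoothed copy that is commonly evaluated in place of the raw weights. A minimal sketch of the update rule follows, assuming plain float weights keyed by name; the helper `ema_update` is hypothetical, and the README does not state the decay value used (0.999 below is only a placeholder default).

```python
from typing import Dict


def ema_update(
    ema: Dict[str, float], params: Dict[str, float], decay: float = 0.999
) -> Dict[str, float]:
    """Return updated EMA weights: ema <- decay * ema + (1 - decay) * params.

    Hypothetical helper; a real model would apply the same rule to every
    tensor in its state dict after each optimizer step.
    """
    return {name: decay * ema[name] + (1.0 - decay) * params[name] for name in ema}


ema = {"w": 0.0}
for _ in range(2):
    ema = ema_update(ema, {"w": 1.0}, decay=0.5)
print(ema)  # {'w': 0.75}
```

Because the EMA weights lag behind and smooth the raw weights, the two checkpoints can score differently, which fits the gap described above.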
