diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/DataX-write.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/DataX-write.md

deleted file mode 100644

index e1369c3c6..000000000

--- a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/DataX-write.md

+++ /dev/null

@@ -1,212 +0,0 @@

-# Writing Data to MatrixOne Using DataX

-

-## Overview

-

-This article explains using the DataX tool to write data to offline MatrixOne databases.

-

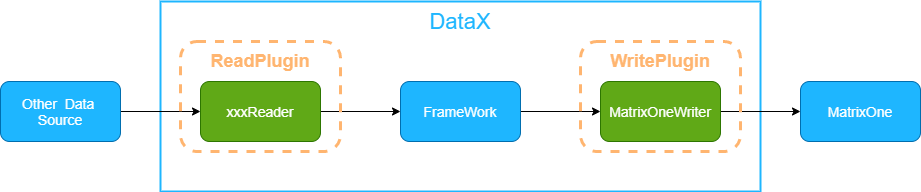

-DataX is an open-source heterogeneous data source offline synchronization tool developed by Alibaba. It provides stable and efficient data synchronization functions to achieve efficient data synchronization between various heterogeneous data sources.

-

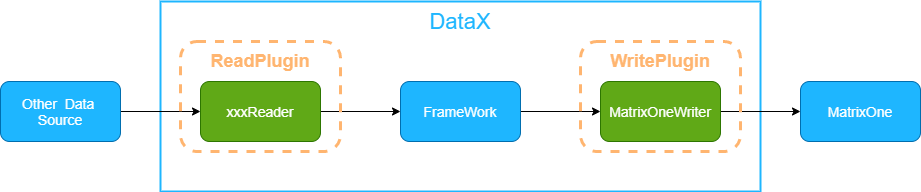

-DataX divides the synchronization of different data sources into two main components: **Reader (read data source)** and **Writer (write to the target data source)**. The DataX framework theoretically supports data synchronization work for any data source type.

-

-MatrixOne is highly compatible with MySQL 8.0. However, since the MySQL Writer plugin with DataX is adapted to the MySQL 5.1 JDBC driver, the community has separately modified the MatrixOneWriter plugin based on the MySQL 8.0 driver to improve compatibility. The MatrixOneWriter plugin implements the functionality of writing data to the target table in the MatrixOne database. In the underlying implementation, MatrixOneWriter connects to the remote MatrixOne database via JDBC and executes the corresponding `insert into ...` SQL statements to write data to MatrixOne. It also supports batch commits for performance optimization.

-

-MatrixOneWriter uses DataX to retrieve generated protocol data from the Reader and generates the corresponding `insert into ...` statements based on your configured `writeMode`. In the event of primary key or uniqueness index conflicts, conflicting rows are excluded, and writing continues. For performance optimization, we use the `PreparedStatement + Batch` method and set the `rewriteBatchedStatements=true` option to buffer data to the thread context buffer. The write request is triggered only when the data volume in the buffer reaches the specified threshold.

-

-

-

-!!! note

- To execute the entire task, you must have permission to execute `insert into ...`. Whether other permissions are required depends on the `preSql` and `postSql` in your task configuration.

-

-MatrixOneWriter mainly aims at ETL development engineers who use MatrixOneWriter to import data from data warehouses into MatrixOne. At the same time, MatrixOneWriter can also serve as a data migration tool for users such as DBAs.

-

-## Before you start

-

-Before using DataX to write data to MatrixOne, you need to complete the installation of the following software:

-

-- Install [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html).

-- Install [Python 3.8 (or newer)](https://www.python.org/downloads/).

-- Download the [DataX](https://datax-opensource.oss-cn-hangzhou.aliyuncs.com/202210/datax.tar.gz) installation package and unzip it.

-- Download [matrixonewriter.zip](https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/Computing-Engine/datax-write/matrixonewriter.zip) and unzip it to the `plugin/writer/` directory in the root directory of your DataX project.

-- Install the [MySQL Client](https://dev.mysql.com/downloads/mysql).

-- [Install and start MatrixOne](../../../Get-Started/install-standalone-matrixone.md).

-

-## Steps

-

-### Create a MatrixOne Table

-

-Connect to MatrixOne using the MySQL Client and create a test table in MatrixOne:

-

-```sql

-CREATE DATABASE mo_demo;

-USE mo_demo;

-CREATE TABLE m_user(

- M_ID INT NOT NULL,

- M_NAME CHAR(25) NOT NULL

-);

-```

-

-### Configure the Data Source

-

-In this example, we use data generated **in memory** as the data source:

-

-```json

-"reader": {

- "name": "streamreader",

- "parameter": {

- "column" : [ # You can write multiple columns

- {

- "value": 20210106, # Represents the value of this column

- "type": "long" # Represents the type of this column

- },

- {

- "value": "matrixone",

- "type": "string"

- }

- ],

- "sliceRecordCount": 1000 # Indicates how many times to print

- }

-}

-```

-

-### Write the Job Configuration File

-

-Use the following command to view the configuration template:

-

-```shell

-python datax.py -r {YOUR_READER} -w matrixonewriter

-```

-

-Write the job configuration file `stream2matrixone.json`:

-

-```json

-{

- "job": {

- "setting": {

- "speed": {

- "channel": 1

- }

- },

- "content": [

- {

- "reader": {

- "name": "streamreader",

- "parameter": {

- "column" : [

- {

- "value": 20210106,

- "type": "long"

- },

- {

- "value": "matrixone",

- "type": "string"

- }

- ],

- "sliceRecordCount": 1000

- }

- },

- "writer": {

- "name": "matrixonewriter",

- "parameter": {

- "writeMode": "insert",

- "username": "root",

- "password": "111",

- "column": [

- "M_ID",

- "M_NAME"

- ],

- "preSql": [

- "delete from m_user"

- ],

- "connection": [

- {

- "jdbcUrl": "jdbc:mysql://127.0.0.1:6001/mo_demo",

- "table": [

- "m_user"

- ]

- }

- ]

- }

- }

- }

- ]

- }

-}

-```

-

-### Start DataX

-

-Execute the following command to start DataX:

-

-```shell

-$ cd {YOUR_DATAX_DIR_BIN}

-$ python datax.py stream2matrixone.json

-```

-

-### View the Results

-

-Connect to MatrixOne using the MySQL Client and use `select` to query the inserted results. The 1000 records in memory have been successfully written to MatrixOne.

-

-```sql

-mysql> select * from m_user limit 5;

-+----------+-----------+

-| m_id | m_name |

-+----------+-----------+

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-| 20210106 | matrixone |

-+----------+-----------+

-5 rows in set (0.01 sec)

-

-mysql> select count(*) from m_user limit 5;

-+----------+

-| count(*) |

-+----------+

-| 1000 |

-+----------+

-1 row in set (0.00 sec)

-```

-

-## Parameter Descriptions

-

-Here are some commonly used parameters for MatrixOneWriter:

-

-| Parameter Name | Parameter Description | Mandatory | Default Value |

-| --- | --- | --- | --- |

-| **jdbcUrl** | JDBC connection information for the target database. DataX will append some attributes to the provided `jdbcUrl` during runtime, such as `yearIsDateType=false&zeroDateTimeBehavior=CONVERT_TO_NULL&rewriteBatchedStatements=true&tinyInt1isBit=false&serverTimezone=Asia/Shanghai`. | Yes | None |

-| **username** | Username for the target database. | Yes | None |

-| **password** | Password for the target database. | Yes | None |

-| **table** | Name of the target table. Supports writing to one or more tables. If configuring multiple tables, make sure their structures are consistent. | Yes | None |

-| **column** | Fields in the target table that must be written with data, separated by commas. For example: `"column": ["id","name","age"]`. To write all columns, you can use `*`, for example: `"column": ["*"]`. | Yes | None |

-| **preSql** | Standard SQL statements to be executed before writing data to the target table. | No | None |

-| **postSql** | Standard SQL statements to be executed after writing data to the target table. | No | None |

-| **writeMode** | Controls the SQL statements used when writing data to the target table. You can choose `insert` or `update`. | `insert` or `update` | `insert` |

-| **batchSize** | Size of records for batch submission. This can significantly reduce network interactions between DataX and MatrixOne, improving overall throughput. However, setting it too large may cause DataX to run out of memory. | No | 1024 |

-

-## Type Conversion

-

-MatrixOneWriter supports most MatrixOne data types, but a few types still need to be supported, so you need to pay special attention to your data types.

-

-Here is a list of type conversions that MatrixOneWriter performs for MatrixOne data types:

-

-| DataX Internal Type | MatrixOne Data Type |

-| ------------------- | ------------------- |

-| Long | int, tinyint, smallint, bigint |

-| Double | float, double, decimal |

-| String | varchar, char, text |

-| Date | date, datetime, timestamp, time |

-| Boolean | bool |

-| Bytes | blob |

-

-## Additional References

-

-- MatrixOne is compatible with the MySQL protocol. MatrixOneWriter is a modified version of the MySQL Writer with adjustments for JDBC driver versions. You can still use the MySQL Writer to write to MatrixOne.

-

-- To add the MatrixOne Writer in DataX, you need to download [matrixonewriter.zip](https://community-shared-data-1308875761.cos.ap-beijing.myqcloud.com/artwork/docs/develop/Computing-Engine/datax-write/matrixonewriter.zip) and unzip it into the `plugin/writer/` directory in the root directory of your DataX project.

-

-## Ask and Questions

-

-**Q: During runtime, I encountered the error "Configuration information error, the configuration file you provided /{YOUR_MATRIXONE_WRITER_PATH}/plugin.json does not exist." What should I do?**

-

-A: DataX attempts to find the plugin.json file by searching for similar folders when it starts. If the matrixonewriter.zip file also exists in the same directory, DataX will try to find it in `.../datax/plugin/writer/matrixonewriter.zip/plugin.json`. In the MacOS environment, DataX will also attempt to see it in `.../datax/plugin/writer/.DS_Store/plugin.json`. In this case, you need to delete these extra files or folders.

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink.md

deleted file mode 100644

index 77b791158..000000000

--- a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink.md

+++ /dev/null

@@ -1,806 +0,0 @@

-# Writing Real-Time Data to MatrixOne Using Flink

-

-## Overview

-

-Apache Flink is a powerful framework and distributed processing engine focusing on stateful computation. It is suitable for processing both unbounded and bounded data streams efficiently. Flink can operate efficiently in various common cluster environments and performs calculations at memory speed. It supports processing data of any scale.

-

-### Scenarios

-

-* Event-Driven Applications

-

- Event-driven applications typically have states and extract data from one or more event streams. They trigger computations, update states, or perform other external actions based on incoming events. Typical event-driven applications include anti-fraud systems, anomaly detection, rule-based alert systems, and business process monitoring.

-

-* Data Analytics Applications

-

- The primary goal of data analytics tasks is to extract valuable information and metrics from raw data. Flink supports streaming and batch analytics applications, making it suitable for various scenarios such as telecom network quality monitoring, product updates, and experiment evaluation analysis in mobile applications, real-time ad-hoc analysis in the consumer technology space, and large-scale graph analysis.

-

-* Data Pipeline Applications

-

- Extract, transform, load (ETL) is a standard method for transferring data between different storage systems. Data pipelines and ETL jobs are similar in that they can perform data transformation and enrichment and move data from one storage system to another. The difference is that data pipelines run in a continuous streaming mode rather than being triggered periodically. Typical data pipeline applications include real-time query index building in e-commerce and continuous ETL.

-

-This document will introduce two examples. One involves using the Flink computing engine to write real-time data to MatrixOne, and the other uses the Flink computing engine to write streaming data to the MatrixOne database.

-

-## Before you start

-

-### Hardware Environment

-

-The hardware requirements for this practice are as follows:

-

-| Server Name | Server IP | Installed Software | Operating System |

-| node1 | 192.168.146.10 | MatrixOne | Debian11.1 x86 |

-| node2 | 192.168.146.12 | kafka | Centos7.9 |

-| node3 | 192.168.146.11 | IDEA,MYSQL | win10 |

-

-### Software Environment

-

-This practice requires the installation and deployment of the following software environments:

-

-- Install and start MatrixOne by following the steps in [Install standalone MatrixOne](../../../Get-Started/install-standalone-matrixone.md).

-- Download and install [IntelliJ IDEA version 2022.2.1 or higher](https://www.jetbrains.com/idea/download/).

-- Download and install [Kafka 2.13 - 3.5.0](https://archive.apache.org/dist/kafka/3.5.0/kafka_2.13-3.5.0.tgz).

-- Download and install [Flink 1.17.0](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz).

-- Download and install the [MySQL Client 8.0.33](https://downloads.mysql.com/archives/get/p/23/file/mysql-server_8.0.33-1ubuntu23.04_amd64.deb-bundle.tar).

-

-## Example 1: Migrating Data from MySQL to MatrixOne

-

-### Step 1: Initialize the Project

-

-1. Start IDEA, click **File > New > Project**, select **Spring Initializer**, and fill in the following configuration parameters:

-

- - **Name**:matrixone-flink-demo

- - **Location**:~\Desktop

- - **Language**:Java

- - **Type**:Maven

- - **Group**:com.example

- - **Artifact**:matrixone-flink-demo

- - **Package name**:com.matrixone.flink.demo

- - **JDK** 1.8

-

-

-

-2. Add project dependencies and edit the content of `pom.xml` in the project root directory as follows:

-

-```xml

-

-

- 4.0.0

-

- com.matrixone.flink

- matrixone-flink-demo

- 1.0-SNAPSHOT

-

-

- 2.12

- 1.8

- 1.17.0

- compile

-

-

-

-

-

-

- org.apache.flink

- flink-connector-hive_2.12

- ${flink.version}

-

-

-

- org.apache.flink

- flink-java

- ${flink.version}

-

-

-

- org.apache.flink

- flink-streaming-java

- ${flink.version}

-

-

-

- org.apache.flink

- flink-clients

- ${flink.version}

-

-

-

- org.apache.flink

- flink-table-api-java-bridge

- ${flink.version}

-

-

-

- org.apache.flink

- flink-table-planner_2.12

- ${flink.version}

-

-

-

-

- org.apache.flink

- flink-connector-jdbc

- 1.15.4

-

-

- mysql

- mysql-connector-java

- 8.0.33

-

-

-

-

- org.apache.kafka

- kafka_2.13

- 3.5.0

-

-

- org.apache.flink

- flink-connector-kafka

- 3.0.0-1.17

-

-

-

-

- com.alibaba.fastjson2

- fastjson2

- 2.0.34

-

-

-

-

-

-

-

-

-

-

- org.apache.maven.plugins

- maven-compiler-plugin

- 3.8.0

-

- ${java.version}

- ${java.version}

- UTF-8

-

-

-

- maven-assembly-plugin

- 2.6

-

-

- jar-with-dependencies

-

-

-

-

- make-assembly

- package

-

- single

-

-

-

-

-

-

-

-

-

-```

-

-### Step 2: Read MatrixOne Data

-

-After connecting to MatrixOne using the MySQL client, create the necessary database and data tables for the demonstration.

-

-1. Create a database, tables and import data in MatrixOne:

-

- ```SQL

- CREATE DATABASE test;

- USE test;

- CREATE TABLE `person` (`id` INT DEFAULT NULL, `name` VARCHAR(255) DEFAULT NULL, `birthday` DATE DEFAULT NULL);

- INSERT INTO test.person (id, name, birthday) VALUES(1, 'zhangsan', '2023-07-09'),(2, 'lisi', '2023-07-08'),(3, 'wangwu', '2023-07-12');

- ```

-

-2. In IDEA, create the `MoRead.java` class to read MatrixOne data using Flink:

-

- ```java

- package com.matrixone.flink.demo;

-

- import org.apache.flink.api.common.functions.MapFunction;

- import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

- import org.apache.flink.api.java.ExecutionEnvironment;

- import org.apache.flink.api.java.operators.DataSource;

- import org.apache.flink.api.java.operators.MapOperator;

- import org.apache.flink.api.java.typeutils.RowTypeInfo;

- import org.apache.flink.connector.jdbc.JdbcInputFormat;

- import org.apache.flink.types.Row;

-

- import java.text.SimpleDateFormat;

-

- /**

- * @author MatrixOne

- * @description

- */

- public class MoRead {

-

- private static String srcHost = "192.168.146.10";

- private static Integer srcPort = 6001;

- private static String srcUserName = "root";

- private static String srcPassword = "111";

- private static String srcDataBase = "test";

-

- public static void main(String[] args) throws Exception {

-

- ExecutionEnvironment environment = ExecutionEnvironment.getExecutionEnvironment();

- // Set parallelism

- environment.setParallelism(1);

- SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

-

- // Set query field type

- RowTypeInfo rowTypeInfo = new RowTypeInfo(

- new BasicTypeInfo[]{

- BasicTypeInfo.INT_TYPE_INFO,

- BasicTypeInfo.STRING_TYPE_INFO,

- BasicTypeInfo.DATE_TYPE_INFO

- },

- new String[]{

- "id",

- "name",

- "birthday"

- }

- );

-

- DataSource dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

- .setDrivername("com.mysql.cj.jdbc.Driver")

- .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

- .setUsername(srcUserName)

- .setPassword(srcPassword)

- .setQuery("select * from person")

- .setRowTypeInfo(rowTypeInfo)

- .finish());

-

- // Convert Wed Jul 12 00:00:00 CST 2023 date format to 2023-07-12

- MapOperator mapOperator = dataSource.map((MapFunction) row -> {

- row.setField("birthday", sdf.format(row.getField("birthday")));

- return row;

- });

-

- mapOperator.print();

- }

- }

- ```

-

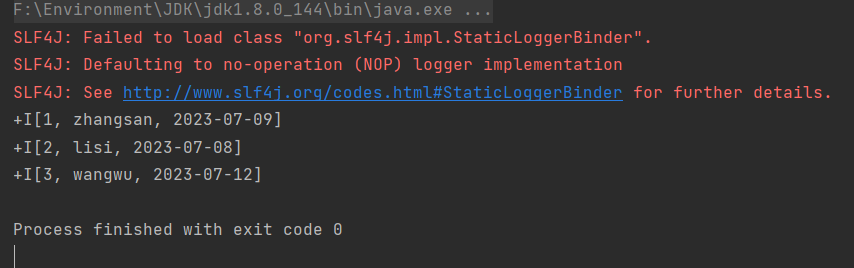

-3. Run `MoRead.Main()` in IDEA, the result is as below:

-

-

-

-### Step 3: Write MySQL Data to MatrixOne

-

-Now, you can begin migrating MySQL data to MatrixOne using Flink.

-

-1. Prepare MySQL data: On node3, use the MySQL client to connect to the local MySQL instance. Create the necessary database, tables, and insert data:

-

- ```sql

- mysql -h127.0.0.1 -P3306 -uroot -proot

- mysql> CREATE DATABASE motest;

- mysql> USE motest;

- mysql> CREATE TABLE `person` (`id` int DEFAULT NULL, `name` varchar(255) DEFAULT NULL, `birthday` date DEFAULT NULL);

- mysql> INSERT INTO motest.person (id, name, birthday) VALUES(2, 'lisi', '2023-07-09'),(3, 'wangwu', '2023-07-13'),(4, 'zhaoliu', '2023-08-08');

- ```

-

-2. Clear MatrixOne table data:

-

- On node3, use the MySQL client to connect to the local MatrixOne instance. Since this example continues to use the `test` database from the previous MatrixOne data reading example, you need to clear the data from the `person` table first.

-

- ```sql

- -- On node3, use the MySQL client to connect to the local MatrixOne

- mysql -h192.168.146.10 -P6001 -uroot -p111

- mysql> TRUNCATE TABLE test.person;

- ```

-

-3. Write code in IDEA:

-

- Create the `Person.java` and `Mysql2Mo.java` classes to use Flink to read MySQL data. Refer to the following example for the `Mysql2Mo.java` class code:

-

-```java

-package com.matrixone.flink.demo.entity;

-

-

-import java.util.Date;

-

-public class Person {

-

- private int id;

- private String name;

- private Date birthday;

-

- public int getId() {

- return id;

- }

-

- public void setId(int id) {

- this.id = id;

- }

-

- public String getName() {

- return name;

- }

-

- public void setName(String name) {

- this.name = name;

- }

-

- public Date getBirthday() {

- return birthday;

- }

-

- public void setBirthday(Date birthday) {

- this.birthday = birthday;

- }

-}

-```

-

-```java

-package com.matrixone.flink.demo;

-

-import com.matrixone.flink.demo.entity.Person;

-import org.apache.flink.api.common.functions.MapFunction;

-import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

-import org.apache.flink.api.java.typeutils.RowTypeInfo;

-import org.apache.flink.connector.jdbc.*;

-import org.apache.flink.streaming.api.datastream.DataStreamSink;

-import org.apache.flink.streaming.api.datastream.DataStreamSource;

-import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

-import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

-import org.apache.flink.types.Row;

-

-import java.sql.Date;

-

-/**

- * @author MatrixOne

- * @description

- */

-public class Mysql2Mo {

-

- private static String srcHost = "127.0.0.1";

- private static Integer srcPort = 3306;

- private static String srcUserName = "root";

- private static String srcPassword = "root";

- private static String srcDataBase = "motest";

-

- private static String destHost = "192.168.146.10";

- private static Integer destPort = 6001;

- private static String destUserName = "root";

- private static String destPassword = "111";

- private static String destDataBase = "test";

- private static String destTable = "person";

-

-

- public static void main(String[] args) throws Exception {

-

- StreamExecutionEnvironment environment = StreamExecutionEnvironment.getExecutionEnvironment();

- //Set parallelism

- environment.setParallelism(1);

- //Set query field type

- RowTypeInfo rowTypeInfo = new RowTypeInfo(

- new BasicTypeInfo[]{

- BasicTypeInfo.INT_TYPE_INFO,

- BasicTypeInfo.STRING_TYPE_INFO,

- BasicTypeInfo.DATE_TYPE_INFO

- },

- new String[]{

- "id",

- "name",

- "birthday"

- }

- );

-

- // add srouce

- DataStreamSource dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

- .setDrivername("com.mysql.cj.jdbc.Driver")

- .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

- .setUsername(srcUserName)

- .setPassword(srcPassword)

- .setQuery("select * from person")

- .setRowTypeInfo(rowTypeInfo)

- .finish());

-

- //run ETL

- SingleOutputStreamOperator mapOperator = dataSource.map((MapFunction) row -> {

- Person person = new Person();

- person.setId((Integer) row.getField("id"));

- person.setName((String) row.getField("name"));

- person.setBirthday((java.util.Date)row.getField("birthday"));

- return person;

- });

-

- //set matrixone sink information

- mapOperator.addSink(

- JdbcSink.sink(

- "insert into " + destTable + " values(?,?,?)",

- (ps, t) -> {

- ps.setInt(1, t.getId());

- ps.setString(2, t.getName());

- ps.setDate(3, new Date(t.getBirthday().getTime()));

- },

- new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()

- .withDriverName("com.mysql.cj.jdbc.Driver")

- .withUrl("jdbc:mysql://" + destHost + ":" + destPort + "/" + destDataBase)

- .withUsername(destUserName)

- .withPassword(destPassword)

- .build()

- )

- );

-

- environment.execute();

- }

-

-}

-```

-

-### Step 4: View the Execution Results

-

-Execute the following SQL in MatrixOne to view the execution results:

-

-```sql

-mysql> select * from test.person;

-+------+---------+------------+

-| id | name | birthday |

-+------+---------+------------+

-| 2 | lisi | 2023-07-09 |

-| 3 | wangwu | 2023-07-13 |

-| 4 | zhaoliu | 2023-08-08 |

-+------+---------+------------+

-3 rows in set (0.01 sec)

-```

-

-## Example 2: Importing Kafka data to MatrixOne

-

-### Step 1: Start the Kafka Service

-

-Kafka cluster coordination and metadata management can be achieved using KRaft or ZooKeeper. Here, we will use Kafka version 3.5.0, eliminating the need for a standalone ZooKeeper software and utilizing Kafka's built-in **KRaft** for metadata management. Follow the steps below to configure the settings. The configuration file can be found in the Kafka software's root directory under `config/kraft/server.properties`.

-

-The configuration file is as follows:

-

-```properties

-# Licensed to the Apache Software Foundation (ASF) under one or more

-# contributor license agreements. See the NOTICE file distributed with

-# this work for additional information regarding copyright ownership.

-# The ASF licenses this file to You under the Apache License, Version 2.0

-# (the "License"); you may not use this file except in compliance with

-# the License. You may obtain a copy of the License at

-#

-# http://www.apache.org/licenses/LICENSE-2.0

-#

-# Unless required by applicable law or agreed to in writing, software

-# distributed under the License is distributed on an "AS IS" BASIS,

-# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-# See the License for the specific language governing permissions and

-# limitations under the License.

-

-#

-# This configuration file is intended for use in KRaft mode, where

-# Apache ZooKeeper is not present. See config/kraft/README.md for details.

-#

-

-############################# Server Basics #############################

-

-# The role of this server. Setting this puts us in KRaft mode

-process.roles=broker,controller

-

-# The node id associated with this instance's roles

-node.id=1

-

-# The connect string for the controller quorum

-controller.quorum.voters=1@192.168.146.12:9093

-

-############################# Socket Server Settings #############################

-

-# The address the socket server listens on.

-# Combined nodes (i.e. those with `process.roles=broker,controller`) must list the controller listener here at a minimum.

-# If the broker listener is not defined, the default listener will use a host name that is equal to the value of java.net.InetAddress.getCanonicalHostName(),

-# with PLAINTEXT listener name, and port 9092.

-# FORMAT:

-# listeners = listener_name://host_name:port

-# EXAMPLE:

-# listeners = PLAINTEXT://your.host.name:9092

-#listeners=PLAINTEXT://:9092,CONTROLLER://:9093

-listeners=PLAINTEXT://192.168.146.12:9092,CONTROLLER://192.168.146.12:9093

-

-# Name of listener used for communication between brokers.

-inter.broker.listener.name=PLAINTEXT

-

-# Listener name, hostname and port the broker will advertise to clients.

-# If not set, it uses the value for "listeners".

-#advertised.listeners=PLAINTEXT://localhost:9092

-

-# A comma-separated list of the names of the listeners used by the controller.

-# If no explicit mapping set in `listener.security.protocol.map`, default will be using PLAINTEXT protocol

-# This is required if running in KRaft mode.

-controller.listener.names=CONTROLLER

-

-# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

-listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

-

-# The number of threads that the server uses for receiving requests from the network and sending responses to the network

-num.network.threads=3

-

-# The number of threads that the server uses for processing requests, which may include disk I/O

-num.io.threads=8

-

-# The send buffer (SO_SNDBUF) used by the socket server

-socket.send.buffer.bytes=102400

-

-# The receive buffer (SO_RCVBUF) used by the socket server

-socket.receive.buffer.bytes=102400

-

-# The maximum size of a request that the socket server will accept (protection against OOM)

-socket.request.max.bytes=104857600

-

-

-############################# Log Basics #############################

-

-# A comma separated list of directories under which to store log files

-log.dirs=/home/software/kafka_2.13-3.5.0/kraft-combined-logs

-

-# The default number of log partitions per topic. More partitions allow greater

-# parallelism for consumption, but this will also result in more files across

-# the brokers.

-num.partitions=1

-

-# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

-# This value is recommended to be increased for installations with data dirs located in RAID array.

-num.recovery.threads.per.data.dir=1

-

-############################# Internal Topic Settings #############################

-# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

-# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.

-offsets.topic.replication.factor=1

-transaction.state.log.replication.factor=1

-transaction.state.log.min.isr=1

-

-############################# Log Flush Policy #############################

-

-# Messages are immediately written to the filesystem but by default we only fsync() to sync

-# the OS cache lazily. The following configurations control the flush of data to disk.

-# There are a few important trade-offs here:

-# 1. Durability: Unflushed data may be lost if you are not using replication.

-# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

-# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

-# The settings below allow one to configure the flush policy to flush data after a period of time or

-# every N messages (or both). This can be done globally and overridden on a per-topic basis.

-

-# The number of messages to accept before forcing a flush of data to disk

-#log.flush.interval.messages=10000

-

-# The maximum amount of time a message can sit in a log before we force a flush

-#log.flush.interval.ms=1000

-

-############################# Log Retention Policy #############################

-

-# The following configurations control the disposal of log segments. The policy can

-# be set to delete segments after a period of time, or after a given size has accumulated.

-# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

-# from the end of the log.

-

-# The minimum age of a log file to be eligible for deletion due to age

-log.retention.hours=72

-

-# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

-# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

-#log.retention.bytes=1073741824

-

-# The maximum size of a log segment file. When this size is reached a new log segment will be created.

-log.segment.bytes=1073741824

-

-# The interval at which log segments are checked to see if they can be deleted according

-# to the retention policies

-log.retention.check.interval.ms=300000

-```

-

-After the file configuration is completed, execute the following command to start the Kafka service:

-

-```shell

-#Generate cluster ID

-$ KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"

-#Set log directory format

-$ bin/kafka-storage.sh format -t $KAFKA_CLUSTER_ID -c config/kraft/server.properties

-#Start Kafka service

-$ bin/kafka-server-start.sh config/kraft/server.properties

-```

-

-### Step 2: Create a Kafka Topic

-

-To enable Flink to read data from and write data to MatrixOne, we first need to create a Kafka topic named "matrixone." In the command below, use the `--bootstrap-server` parameter to specify the Kafka service's listening address as `192.168.146.12:9092`:

-

-```shell

-$ bin/kafka-topics.sh --create --topic matrixone --bootstrap-server 192.168.146.12:9092

-```

-

-### Step 3: Read MatrixOne Data

-

-After connecting to the MatrixOne database, perform the following steps to create the necessary database and tables:

-

-1. Create a database, and tables and import data in MatrixOne:

-

- ```sql

- CREATE TABLE `users` (

- `id` INT DEFAULT NULL,

- `name` VARCHAR(255) DEFAULT NULL,

- `age` INT DEFAULT NULL

- )

- ```

-

-2. Write code in the IDEA integrated development environment:

-

- In IDEA, create two classes: `User.java` and `Kafka2Mo.java`. These classes read from Kafka and write data to the MatrixOne database using Flink.

-

-```java

-package com.matrixone.flink.demo.entity;

-

-public class User {

-

- private int id;

- private String name;

- private int age;

-

- public int getId() {

- return id;

- }

-

- public void setId(int id) {

- this.id = id;

- }

-

- public String getName() {

- return name;

- }

-

- public void setName(String name) {

- this.name = name;

- }

-

- public int getAge() {

- return age;

- }

-

- public void setAge(int age) {

- this.age = age;

- }

-}

-```

-

-```java

-package com.matrixone.flink.demo;

-

-import com.alibaba.fastjson2.JSON;

-import com.matrixone.flink.demo.entity.User;

-import org.apache.flink.api.common.eventtime.WatermarkStrategy;

-import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

-import org.apache.flink.connector.jdbc.JdbcExecutionOptions;

-import org.apache.flink.connector.jdbc.JdbcSink;

-import org.apache.flink.connector.jdbc.JdbcStatementBuilder;

-import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

-import org.apache.flink.connector.kafka.source.KafkaSource;

-import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer;

-import org.apache.flink.streaming.api.datastream.DataStreamSource;

-import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

-import org.apache.kafka.clients.consumer.OffsetResetStrategy;

-

-import java.nio.charset.StandardCharsets;

-

-/**

- * @author MatrixOne

- * @desc

- */

-public class Kafka2Mo {

-

- private static String srcServer = "192.168.146.12:9092";

- private static String srcTopic = "matrixone";

- private static String consumerGroup = "matrixone_group";

-

- private static String destHost = "192.168.146.10";

- private static Integer destPort = 6001;

- private static String destUserName = "root";

- private static String destPassword = "111";

- private static String destDataBase = "test";

- private static String destTable = "person";

-

- public static void main(String[] args) throws Exception {

-

- //Initialize environment

- StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

- //Set parallelism

- env.setParallelism(1);

-

- //Set kafka source information

- KafkaSource source = KafkaSource.builder()

- //Kafka service

- .setBootstrapServers(srcServer)

- //Message topic

- .setTopics(srcTopic)

- //Consumer group

- .setGroupId(consumerGroup)

- //Offset When no offset is submitted, consumption starts from the beginning.

- .setStartingOffsets(OffsetsInitializer.committedOffsets(OffsetResetStrategy.LATEST))

- //Customized parsing message content

- .setValueOnlyDeserializer(new AbstractDeserializationSchema() {

- @Override

- public User deserialize(byte[] message) {

- return JSON.parseObject(new String(message, StandardCharsets.UTF_8), User.class);

- }

- })

- .build();

- DataStreamSource kafkaSource = env.fromSource(source, WatermarkStrategy.noWatermarks(), "kafka_maxtixone");

- //kafkaSource.print();

-

- //set matrixone sink information

- kafkaSource.addSink(JdbcSink.sink(

- "insert into users (id,name,age) values(?,?,?)",

- (JdbcStatementBuilder) (preparedStatement, user) -> {

- preparedStatement.setInt(1, user.getId());

- preparedStatement.setString(2, user.getName());

- preparedStatement.setInt(3, user.getAge());

- },

- JdbcExecutionOptions.builder()

- //default value is 5000

- .withBatchSize(1000)

- //default value is 0

- .withBatchIntervalMs(200)

- //Maximum attempts

- .withMaxRetries(5)

- .build(),

- JdbcConnectorOptions.builder()

- .setDBUrl("jdbc:mysql://"+destHost+":"+destPort+"/"+destDataBase)

- .setUsername(destUserName)

- .setPassword(destPassword)

- .setDriverName("com.mysql.cj.jdbc.Driver")

- .setTableName(destTable)

- .build()

- ));

- env.execute();

- }

-}

-```

-

-After writing the code, you can run the Flink task by selecting the `Kafka2Mo.java` file in IDEA and executing `Kafka2Mo.Main()`.

-

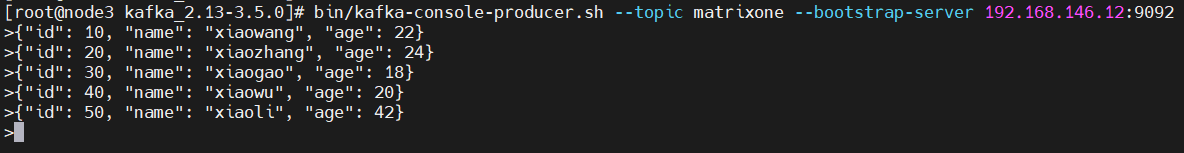

-### Step 4: Generate data

-

-You can add data to Kafka's "matrixone" topic using the command-line producer tools provided by Kafka. In the following command, use the `--topic` parameter to specify the topic to add to and the `--bootstrap-server` parameter to determine the listening address of the Kafka service.

-

-```shell

-bin/kafka-console-producer.sh --topic matrixone --bootstrap-server 192.168.146.12:9092

-```

-

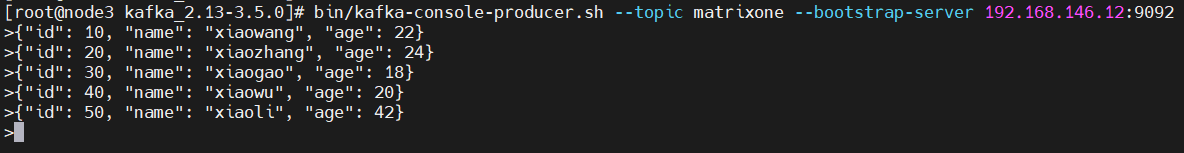

-After executing the above command, you will wait for the message content to be entered on the console. Enter the message values (values) directly, with each line representing one message (separated by newline characters), as follows:

-

-```shell

-{"id": 10, "name": "xiaowang", "age": 22}

-{"id": 20, "name": "xiaozhang", "age": 24}

-{"id": 30, "name": "xiaogao", "age": 18}

-{"id": 40, "name": "xiaowu", "age": 20}

-{"id": 50, "name": "xiaoli", "age": 42}

-```

-

-

-

-### Step 5: View execution results

-

-Execute the following SQL query results in MatrixOne:

-

-```sql

-mysql> select * from test.users;

-+------+-----------+------+

-| id | name | age |

-+------+-----------+------+

-| 10 | xiaowang | 22 |

-| 20 | xiaozhang | 24 |

-| 30 | xiaogao | 18 |

-| 40 | xiaowu | 20 |

-| 50 | xiaoli | 42 |

-+------+-----------+------+

-5 rows in set (0.01 sec)

-```

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-kafka-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-kafka-matrixone.md

new file mode 100644

index 000000000..f5618e9be

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-kafka-matrixone.md

@@ -0,0 +1,359 @@

+# Write Kafka data to MatrixOne using Flink

+

+This chapter describes how to write Kafka data to MatrixOne using Flink.

+

+## Pre-preparation

+

+This practice requires the installation and deployment of the following software environments:

+

+- Complete [standalone MatrixOne deployment](https://docs.matrixorigin.cn/1.2.2/MatrixOne/Get-Started/install-standalone-matrixone/).

+- Download and install [lntelliJ IDEA (2022.2.1 or later version)](https://www.jetbrains.com/idea/download/).

+- Select the [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) version to download and install depending on your system environment.

+- Download and install [Kafka](https://archive.apache.org/dist/kafka/3.5.0/kafka_2.13-3.5.0.tgz).

+- Download and install [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz) with a minimum supported version of 1.11.

+- Download and install the [MySQL Client](https://dev.mysql.com/downloads/mysql).

+

+## Operational steps

+

+### Step one: Start the Kafka service

+

+Kafka cluster coordination and metadata management can be achieved through KRaft or ZooKeeper. Here, instead of relying on standalone ZooKeeper software, we'll use Kafka's own **KRaft** for metadata management. Follow these steps to configure the configuration file, which is located in `config/kraft/server.properties` in the root of the Kafka software.

+

+The configuration file reads as follows:

+

+```properties

+# Licensed to the Apache Software Foundation (ASF) under one or more

+# contributor license agreements. See the NOTICE file distributed with

+# this work for additional information regarding copyright ownership.

+# The ASF licenses this file to You under the Apache License, Version 2.0

+# (the "License"); you may not use this file except in compliance with

+# the License. You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+

+#

+# This configuration file is intended for use in KRaft mode, where

+# Apache ZooKeeper is not present. See config/kraft/README.md for details.

+#

+

+############################# Server Basics #############################

+

+# The role of this server. Setting this puts us in KRaft mode

+process.roles=broker,controller

+

+# The node id associated with this instance's roles

+node.id=1

+

+# The connect string for the controller quorum

+controller.quorum.voters=1@xx.xx.xx.xx:9093

+

+############################# Socket Server Settings #############################

+

+# The address the socket server listens on.

+# Combined nodes (i.e. those with `process.roles=broker,controller`) must list the controller listener here at a minimum.

+# If the broker listener is not defined, the default listener will use a host name that is equal to the value of java.net.InetAddress.getCanonicalHostName(),

+# with PLAINTEXT listener name, and port 9092.

+# FORMAT:

+# listeners = listener_name://host_name:port

+# EXAMPLE:

+# listeners = PLAINTEXT://your.host.name:9092

+#listeners=PLAINTEXT://:9092,CONTROLLER://:9093 listeners=PLAINTEXT://xx.xx.xx.xx:9092,CONTROLLER://xx.xx.xx.xx:9093

+

+# Name of listener used for communication between brokers.

+inter.broker.listener.name=PLAINTEXT

+

+# Listener name, hostname and port the broker will advertise to clients.

+# If not set, it uses the value for "listeners".

+#advertised.listeners=PLAINTEXT://localhost:9092

+

+# A comma-separated list of the names of the listeners used by the controller.

+# If no explicit mapping set in `listener.security.protocol.map`, default will be using PLAINTEXT protocol

+# This is required if running in KRaft mode.

+controller.listener.names=CONTROLLER

+

+# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

+listener.security.protocol.map=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

+

+# The number of threads that the server uses for receiving requests from the network and sending responses to the network

+num.network.threads=3

+

+# The number of threads that the server uses for processing requests, which may include disk I/O

+num.io.threads=8

+

+# The send buffer (SO_SNDBUF) used by the socket server

+socket.send.buffer.bytes=102400

+

+# The receive buffer (SO_RCVBUF) used by the socket server

+socket.receive.buffer.bytes=102400

+

+# The maximum size of a request that the socket server will accept (protection against OOM)

+socket.request.max.bytes=104857600

+

+

+############################# Log Basics #############################

+

+# A comma separated list of directories under which to store log files

+log.dirs=/home/software/kafka_2.13-3.5.0/kraft-combined-logs

+

+# The default number of log partitions per topic. More partitions allow greater

+# parallelism for consumption, but this will also result in more files across

+# the brokers.

+num.partitions=1

+

+# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

+# This value is recommended to be increased for installations with data dirs located in RAID array.

+num.recovery.threads.per.data.dir=1

+

+############################# Internal Topic Settings #############################

+# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"

+# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.

+offsets.topic.replication.factor=1 transaction.state.log.replication.factor=1 transaction.state.log.min.isr=1

+

+############################# Log Flush Policy #############################

+

+# Messages are immediately written to the filesystem but by default we only fsync() to sync

+# the OS cache lazily. The following configurations control the flush of data to disk.

+# There are a few important trade-offs here:

+# 1. Durability: Unflushed data may be lost if you are not using replication.

+# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

+# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.

+# The settings below allow one to configure the flush policy to flush data after a period of time or

+# every N messages (or both). This can be done globally and overridden on a per-topic basis.

+

+# The number of messages to accept before forcing a flush of data to disk

+#log.flush.interval.messages=10000

+

+# The maximum amount of time a message can sit in a log before we force a flush

+#log.flush.interval.ms=1000

+

+############################# Log Retention Policy #############################

+

+# The following configurations control the disposal of log segments. The policy can

+# be set to delete segments after a period of time, or after a given size has accumulated.

+# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

+# from the end of the log.

+

+# The minimum age of a log file to be eligible for deletion due to age

+log.retention.hours=72

+

+# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

+# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

+#log.retention.bytes=1073741824

+

+# The maximum size of a log segment file. When this size is reached a new log segment will be created.

+log.segment.bytes=1073741824

+

+# The interval at which log segments are checked to see if they can be deleted according

+# to the retention policies

+log.retention.check.interval.ms=300000

+```

+

+When the file configuration is complete, start the Kafka service by executing the following command:

+

+```shell

+#Generate cluster ID

+$ KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)" #Set log directory format

+$ bin/kafka-storage.sh format -t $KAFKA_CLUSTER_ID -c config/kraft/server.properties #Start Kafka service

+$ bin/kafka-server-start.sh config/kraft/server.properties

+```

+

+### Step two: Create a Kafka theme

+

+In order for Flink to read data from and write to MatrixOne, we need to first create a Kafka theme called "matrixone." Specify the listening address of the Kafka service as `xx.xx.xx.xx:9092` using the `--bootstrap-server` parameter in the following command:

+

+```shell

+$ bin/kafka-topics.sh --create --topic matrixone --bootstrap-server xx.xx.xx.xx:9092

+```

+

+### Step Three: Read MatrixOne Data

+

+After connecting to the MatrixOne database, you need to do the following to create the required databases and data tables:

+

+1. Create databases and data tables in MatrixOne and import data:

+

+ ```sql

+ CREATE TABLE `users` (

+ `id` INT DEFAULT NULL,

+ `name` VARCHAR(255) DEFAULT NULL,

+ `age` INT DEFAULT NULL

+ )

+ ```

+

+2. Write code in the IDEA integrated development environment:

+

+ In IDEA, create two classes: `User.java` and `Kafka2Mo.java`. These classes are used to read data from Kafka using Flink and write the data to the MatrixOne database.

+

+```java

+

+package com.matrixone.flink.demo.entity;

+

+public class User {

+

+ private int id;

+ private String name;

+ private int age;

+

+ public int getId() {

+ return id;

+ }

+

+ public void setId(int id) {

+ this.id = id;

+ }

+

+ public String getName() {

+ return name;

+ }

+

+ public void setName(String name) {

+ this.name = name;

+ }

+

+ public int getAge() {

+ return age;

+ }

+

+ public void setAge(int age) {

+ this.age = age;

+ }

+}

+```

+

+```java

+package com.matrixone.flink.demo;

+

+import com.alibaba.fastjson2.JSON;

+import com.matrixone.flink.demo.entity.User;

+import org.apache.flink.api.common.eventtime.WatermarkStrategy;

+import org.apache.flink.api.common.serialization.AbstractDeserializationSchema;

+import org.apache.flink.connector.jdbc.JdbcExecutionOptions;

+import org.apache.flink.connector.jdbc.JdbcSink;

+import org.apache.flink.connector.jdbc.JdbcStatementBuilder;

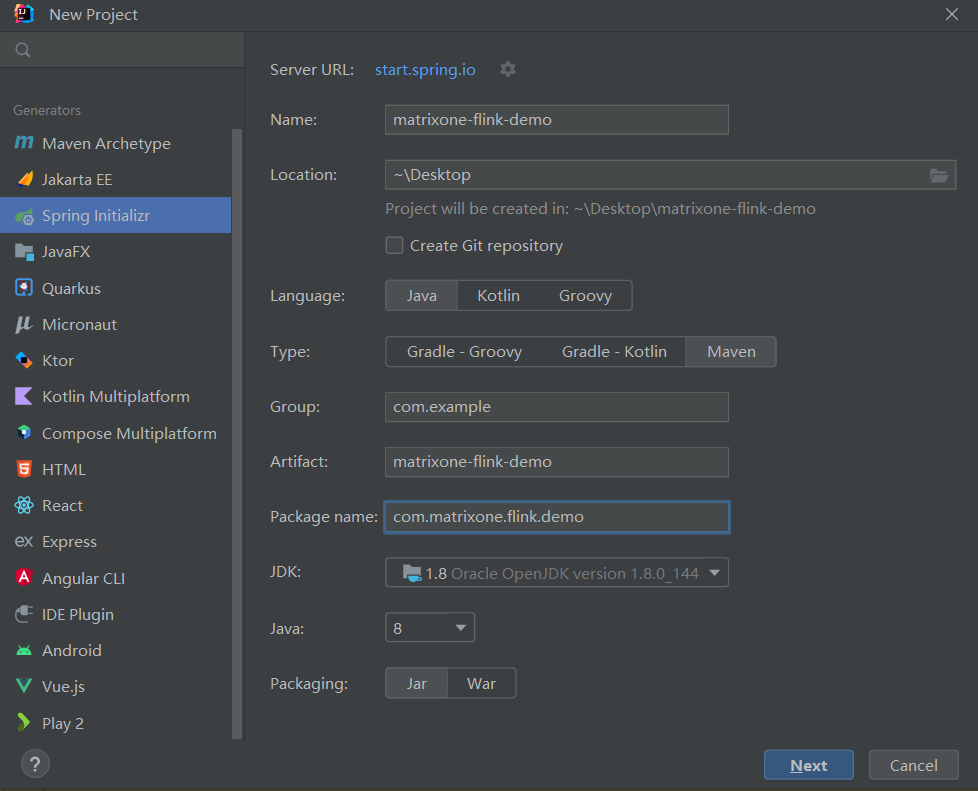

+import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

+import org.apache.flink.connector.kafka.source.KafkaSource;

+import org.apache.flink.connector.kafka.source.enumerator.initializer.OffsetsInitializer;

+import org.apache.flink.streaming.api.datastream.DataStreamSource;

+import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

+import org.apache.kafka.clients.consumer.OffsetResetStrategy;

+

+import java.nio.charset.StandardCharsets;

+

+/**

+ * @author MatrixOne

+ * @desc

+ */

+public class Kafka2Mo {

+

+ private static String srcServer = "xx.xx.xx.xx:9092";

+ private static String srcTopic = "matrixone";

+ private static String consumerGroup = "matrixone_group";

+

+ private static String destHost = "xx.xx.xx.xx";

+ private static Integer destPort = 6001;

+ private static String destUserName = "root";

+ private static String destPassword = "111";

+ private static String destDataBase = "test";

+ private static String destTable = "person";

+

+ public static void main(String[] args) throws Exception {

+

+ // Initialize the environment

+ StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

+ // Set parallelism

+ env.setParallelism(1);

+

+ // Set kafka source information

+ KafkaSource source = KafkaSource.builder()

+ //Kafka service

+ .setBootstrapServers(srcServer)

+ // message subject

+ .setTopics(srcTopic)

+ // consumption group

+ .setGroupId(consumerGroup)

+ // offset Consume from the beginning when no offset is submitted

+ .setStartingOffsets(OffsetsInitializer.committedOffsets(OffsetResetStrategy.LATEST))

+ // custom parse message content

+ .setValueOnlyDeserializer(new AbstractDeserializationSchema() {

+ @Override

+ public User deserialize(byte[] message) {

+ return JSON.parseObject(new String(message, StandardCharsets.UTF_8), User.class);

+ }

+ })

+ .build();

+ DataStreamSource kafkaSource = env.fromSource(source, WatermarkStrategy.noWatermarks(), "kafka_maxtixone");

+ //kafkaSource.print();

+

+ // Set matrixone sink information

+ kafkaSource.addSink(JdbcSink.sink()

+ "insert into users (id,name,age) values(?,?,?)",

+ (JdbcStatementBuilder) (preparedStatement, user) -> {

+ preparedStatement.setInt(1, user.getId());

+ preparedStatement.setString(2, user.getName());

+ preparedStatement.setInt(3, user.getAge());

+ },

+ JdbcExecutionOptions.builder()

+ //Default value 5000

+ .withBatchSize(1000)

+ //Default value is 0

+ .withBatchIntervalMs(200)

+ // Maximum number of attempts

+ .withMaxRetries(5)

+ .build(),

+ JdbcConnectorOptions.builder()

+ .setDBUrl("jdbc:mysql://"+destHost+":"+destPort+"/"+destDataBase)

+ .setUsername(destUserName)

+ .setPassword(destPassword)

+ .setDriverName("com.mysql.cj.jdbc.Driver")

+ .setTableName(destTable)

+ .build()

+ ));

+ env.execute();

+ }

+}

+```

+

+Once the code is written, you can run the Flink task, which is to select the `Kafka2Mo.java` file in IDEA and execute `Kafka2Mo.Main()`.

+

+### Step Four: Generating Data

+

+Using the command-line producer tools provided by Kafka, you can add data to Kafka's "matrixone" theme. In the following command, use the `--topic` parameter to specify the topic to add to, and the `--bootstrap-server` parameter to specify the listening address of the Kafka service.

+

+```shell

+bin/kafka-console-producer.sh --topic matrixone --bootstrap-server xx.xx.xx.xx:9092

+```

+

+After executing the above command, you will wait on the console to enter the message content. Simply enter the message value (value) directly, one message per line (separated by a newline character), as follows:

+

+```shell

+{"id": 10, "name": "xiaowang", "age": 22}

+{"id": 20, "name": "xiaozhang", "age": 24}

+{"id": 30, "name": "xiaogao", "age": 18}

+{"id": 40, "name": "xiaowu", "age": 20}

+{"id": 50, "name": "xiaoli", "age": 42}

+```

+

+

+

+### Step Five: View Implementation Results

+

+Execute the following SQL query results in MatrixOne:

+

+```sql

+mysql> select * from test.users;

++------+-----------+------+

+| id | name | age |

++------+-----------+------+

+| 10 | xiaowang | 22 |

+| 20 | xiaozhang | 24 |

+| 30 | xiaogao | 18 |

+| 40 | xiaowu | 20 |

+| 50 | xiaoli | 42 |

++------+-----------+------+

+5 rows in set (0.01 sec)

+```

\ No newline at end of file

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mongo-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mongo-matrixone.md

new file mode 100644

index 000000000..27d9b16c6

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mongo-matrixone.md

@@ -0,0 +1,155 @@

+# Write MongoDB data to MatrixOne using Flink

+

+This chapter describes how to write MongoDB data to MatrixOne using Flink.

+

+## Pre-preparation

+

+This practice requires the installation and deployment of the following software environments:

+

+- Complete [standalone MatrixOne deployment](https://docs.matrixorigin.cn/1.2.2/MatrixOne/Get-Started/install-standalone-matrixone/).

+- Download and install [lntelliJ IDEA (2022.2.1 or later version)](https://www.jetbrains.com/idea/download/).

+- Select the [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) version to download and install depending on your system environment.

+- Download and install [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz) with a minimum supported version of 1.11.

+- Download and install [MongoDB](https://www.mongodb.com/).

+- Download and install [MySQL](https://downloads.mysql.com/archives/get/p/23/file/mysql-server_8.0.33-1ubuntu23.04_amd64.deb-bundle.tar), the recommended version is 8.0.33.

+

+## Operational steps

+

+### Turn on Mongodb replica set mode

+

+Shutdown command:

+

+```bash

+mongod -f /opt/software/mongodb/conf/config.conf --shutdown

+```

+

+Add the following parameters to /opt/software/mongodb/conf/config.conf

+

+```shell

+replication:

+replSetName: rs0 #replication set name

+```

+

+Restart mangod

+

+```bash

+mongod -f /opt/software/mongodb/conf/config.conf

+```

+

+Then go into mongo and execute `rs.initiate()` then `rs.status()`

+

+```shell

+> rs.initiate()

+{

+"info2" : "no configuration specified. Using a default configuration for the set",

+"me" : "xx.xx.xx.xx:27017",

+"ok" : 1

+}

+rs0:SECONDARY> rs.status()

+```

+

+See the following information indicating that the replication set started successfully

+

+```bash

+"members" : [

+{

+"_id" : 0,

+"name" : "xx.xx.xx.xx:27017",

+"health" : 1,

+"state" : 1,

+"stateStr" : "PRIMARY",

+"uptime" : 77,

+"optime" : {

+"ts" : Timestamp(1665998544, 1),

+"t" : NumberLong(1)

+},

+"optimeDate" : ISODate("2022-10-17T09:22:24Z"),

+"syncingTo" : "",

+"syncSourceHost" : "",

+"syncSourceId" : -1,

+"infoMessage" : "could not find member to sync from",

+"electionTime" : Timestamp(1665998504, 2),

+"electionDate" : ISODate("2022-10-17T09:21:44Z"),

+"configVersion" : 1,

+"self" : true,

+"lastHeartbeatMessage" : ""

+}

+],

+"ok" : 1,

+

+rs0:PRIMARY> show dbs

+admin 0.000GB

+config 0.000GB

+local 0.000GB

+test 0.000GB

+```

+

+### Create source table (mongodb) in flinkcdc sql interface

+

+Execute in the lib directory in the flink directory and download the cdcjar package for mongodb

+

+```bash

+wget

+```

+

+Build a mapping table for the data source mongodb, the column names must also be identical

+

+```sql

+CREATE TABLE products (

+ _id STRING,#There must be this column, and it must also be the primary key, because mongodb automatically generates an id for each row of data

+ `name` STRING,

+ age INT,

+ PRIMARY KEY(_id) NOT ENFORCED

+) WITH (

+ 'connector' = 'mongodb-cdc',

+ 'hosts' = 'xx.xx.xx.xx:27017',

+ 'username' = 'root',

+ 'password' = '',

+ 'database' = 'test',

+ 'collection' = 'test'

+);

+```

+

+Once established you can execute `select * from` products; check if the connection is successful

+

+### Create sink table in flinkcdc sql interface (MatrixOne)

+

+Create a mapping table for matrixone with the same structure and no columns with ids

+

+```sql

+CREATE TABLE cdc_matrixone (

+ `name` STRING,

+ age INT,

+ PRIMARY KEY (`name`) NOT ENFORCED

+)WITH (

+'connector' = 'jdbc',

+'url' = 'jdbc:mysql://xx.xx.xx.xx:6001/test',

+'driver' = 'com.mysql.cj.jdbc.Driver',

+'username' = 'root',

+'password' = '111',

+'table-name' = 'mongodbtest'

+);

+```

+

+### Turn on the cdc synchronization task

+

+Once the sync task is turned on here, mongodb additions and deletions can be synchronized

+

+```sql

+INSERT INTO cdc_matrixone SELECT `name`,age FROM products;

+

+#insert

+rs0:PRIMARY> db.test.insert({"name" : "ddd", "age" : 90})

+WriteResult({ "nInserted" : 1 })

+rs0:PRIMARY> db.test.find()

+{ "_id" : ObjectId("6347e3c6229d6017c82bf03d"), "name" : "aaa", "age" : 20 }

+{ "_id" : ObjectId("6347e64a229d6017c82bf03e"), "name" : "bbb", "age" : 18 }

+{ "_id" : ObjectId("6347e652229d6017c82bf03f"), "name" : "ccc", "age" : 28 }

+{ "_id" : ObjectId("634d248f10e21b45c73b1a36"), "name" : "ddd", "age" : 90 }

+#update

+rs0:PRIMARY> db.test.update({'name':'ddd'},{$set:{'age':'99'}})

+WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

+#delete

+rs0:PRIMARY> db.test.remove({'name':'ddd'})

+WriteResult({ "nRemoved" : 1 })

+```

\ No newline at end of file

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mysql-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mysql-matrixone.md

new file mode 100644

index 000000000..be51aab3b

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-mysql-matrixone.md

@@ -0,0 +1,431 @@

+# Writing MySQL data to MatrixOne using Flink

+

+This chapter describes how to write MySQL data to MatrixOne using Flink.

+

+## Pre-preparation

+

+This practice requires the installation and deployment of the following software environments:

+

+- Complete [standalone MatrixOne deployment](https://docs.matrixorigin.cn/1.2.2/MatrixOne/Get-Started/install-standalone-matrixone/).

+- Download and install [lntelliJ IDEA (2022.2.1 or later version)](https://www.jetbrains.com/idea/download/).

+- Select the [JDK 8+ version](https://www.oracle.com/sg/java/technologies/javase/javase8-archive-downloads.html) version to download and install depending on your system environment.

+- Download and install [Flink](https://archive.apache.org/dist/flink/flink-1.17.0/flink-1.17.0-bin-scala_2.12.tgz) with a minimum supported version of 1.11.

+- Download and install [MySQL](https://downloads.mysql.com/archives/get/p/23/file/mysql-server_8.0.33-1ubuntu23.04_amd64.deb-bundle.tar), the recommended version is 8.0.33.

+

+## Operational steps

+

+### Step one: Initialize the project

+

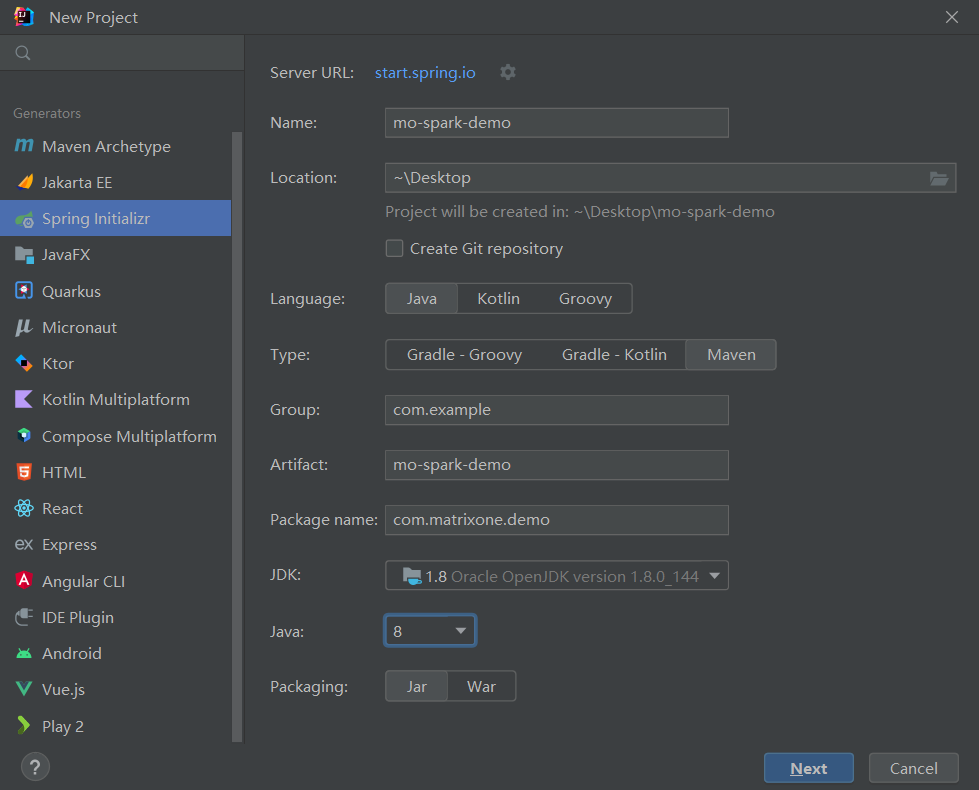

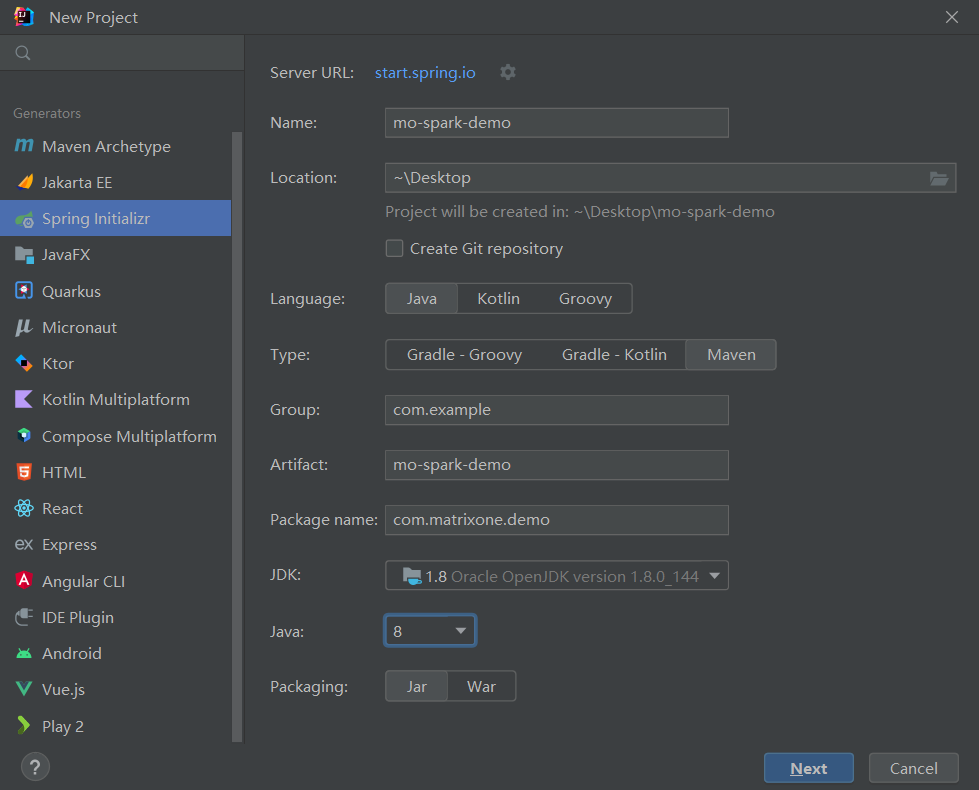

+1. Open IDEA, click **File > New > Project**, select **Spring Initializer**, and fill in the following configuration parameters:

+

+ - **Name**:matrixone-flink-demo

+ - **Location**:~\Desktop

+ - **Language**:Java

+ - **Type**:Maven

+ - **Group**:com.example

+ - **Artifact**:matrixone-flink-demo

+ - **Package name**:com.matrixone.flink.demo

+ - **JDK** 1.8

+

+ An example configuration is shown in the following figure:

+

+

+

+

+

+2. Add project dependencies, edit the `pom.xml` file in the root of your project, and add the following to the file:

+

+```xml

+

+

+ 4.0.0

+

+ com.matrixone.flink

+ matrixone-flink-demo

+ 1.0-SNAPSHOT

+

+

+ 2.12

+ 1.8

+ 1.17.0

+ compile

+

+

+

+

+

+

+ org.apache.flink

+ flink-connector-hive_2.12

+ ${flink.version}

+

+

+

+ org.apache.flink

+ flink-java

+ ${flink.version}

+

+

+

+ org.apache.flink

+ flink-streaming-java

+ ${flink.version}

+

+

+

+ org.apache.flink

+ flink-clients

+ ${flink.version}

+

+

+

+ org.apache.flink

+ flink-table-api-java-bridge

+ ${flink.version}

+

+

+

+ org.apache.flink

+ flink-table-planner_2.12

+ ${flink.version}

+

+

+

+

+ org.apache.flink

+ flink-connector-jdbc

+ 1.15.4

+

+

+ mysql

+ mysql-connector-java

+ 8.0.33

+

+

+

+

+ org.apache.kafka

+ kafka_2.13

+ 3.5.0

+

+

+ org.apache.flink

+ flink-connector-kafka

+ 3.0.0-1.17

+

+

+

+

+ com.alibaba.fastjson2

+ fastjson2

+ 2.0.34

+

+

+

+

+

+

+

+

+

+

+ org.apache.maven.plugins

+ maven-compiler-plugin

+ 3.8.0

+

+ ${java.version}

+ ${java.version}

+ UTF-8

+

+

+

+ maven-assembly-plugin

+ 2.6

+

+

+ jar-with-dependencies

+

+

+

+

+ make-assembly

+ package

+

+ single

+

+

+

+

+

+

+

+

+

+```

+

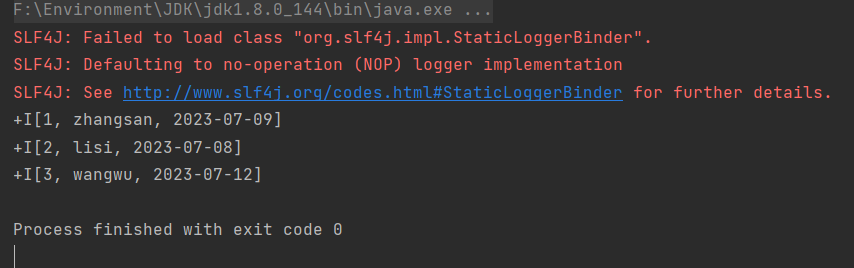

+### Step Two: Read MatrixOne Data

+

+After connecting to MatrixOne using a MySQL client, create the database you need for the demo, as well as the data tables.

+

+1. Create databases, data tables, and import data in MatrixOne:

+

+ ```SQL

+ CREATE DATABASE test;

+ USE test;

+ CREATE TABLE `person` (`id` INT DEFAULT NULL, `name` VARCHAR(255) DEFAULT NULL, `birthday` DATE DEFAULT NULL);

+ INSERT INTO test.person (id, name, birthday) VALUES(1, 'zhangsan', '2023-07-09'),(2, 'lisi', '2023-07-08'),(3, 'wangwu', '2023-07-12');

+ ```

+

+2. Create a `MoRead.java` class in IDEA to read MatrixOne data using Flink:

+

+ ```java

+ package com.matrixone.flink.demo;

+

+ import org.apache.flink.api.common.functions.MapFunction;

+ import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

+ import org.apache.flink.api.java.ExecutionEnvironment;

+ import org.apache.flink.api.java.operators.DataSource;

+ import org.apache.flink.api.java.operators.MapOperator;

+ import org.apache.flink.api.java.typeutils.RowTypeInfo;

+ import org.apache.flink.connector.jdbc.JdbcInputFormat;

+ import org.apache.flink.types.Row;

+

+ import java.text.SimpleDateFormat;

+

+ /**

+ * @author MatrixOne

+ * @description

+ */

+ public class MoRead {

+ private static String srcHost = "xx.xx.xx.xx";

+ private static Integer srcPort = 6001;

+ private static String srcUserName = "root";

+ private static String srcPassword = "111";

+ private static String srcDataBase = "test";

+

+ public static void main(String[] args) throws Exception {

+

+ ExecutionEnvironment environment = ExecutionEnvironment.getExecutionEnvironment();

+ // Set parallelism

+ environment.setParallelism(1);

+ SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd");

+

+ // Set the field type of the query

+ RowTypeInfo rowTypeInfo = new RowTypeInfo(

+ new BasicTypeInfo[]{

+ BasicTypeInfo.INT_TYPE_INFO,

+ BasicTypeInfo.STRING_TYPE_INFO,

+ BasicTypeInfo.DATE_TYPE_INFO

+ },

+ new String[]{

+ "id",

+ "name",

+ "birthday"

+ }

+ );

+

+ DataSource dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

+ .setDrivername("com.mysql.cj.jdbc.Driver")

+ .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

+ .setUsername(srcUserName)

+ .setPassword(srcPassword)

+ .setQuery("select * from person")

+ .setRowTypeInfo(rowTypeInfo)

+ .finish());

+

+ // Convert Wed Jul 12 00:00:00 CST 2023 date format to 2023-07-12

+ MapOperator mapOperator = dataSource.map((MapFunction) row -> {

+ row.setField("birthday", sdf.format(row.getField("birthday")));

+ return row;

+ });

+

+ mapOperator.print();

+ }

+ }

+ ```

+

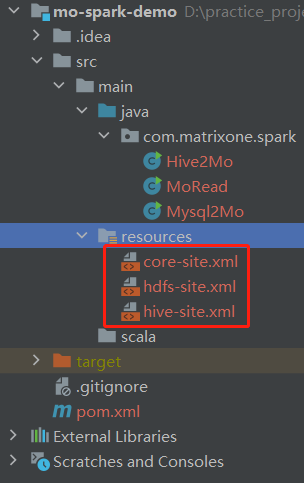

+3. Run `MoRead.Main()` in IDEA with the following result:

+

+

+

+### Step Three: Write MySQL Data to MatrixOne

+

+You can now start migrating MySQL data to MatrixOne using Flink.

+

+1. Prepare MySQL data: On node3, connect to your local Mysql using the Mysql client, create the required database, data table, and insert the data:

+

+ ```sql

+ mysql -h127.0.0.1 -P3306 -uroot -proot

+ mysql> CREATE DATABASE motest;

+ mysql> USE motest;

+ mysql> CREATE TABLE `person` (`id` int DEFAULT NULL, `name` varchar(255) DEFAULT NULL, `birthday` date DEFAULT NULL);

+ mysql> INSERT INTO motest.person (id, name, birthday) VALUES(2, 'lisi', '2023-07-09'),(3, 'wangwu', '2023-07-13'),(4, 'zhaoliu', '2023-08-08');

+ ```

+

+2. Empty MatrixOne table data:

+

+ On node3, connect node1's MatrixOne using a MySQL client. Since this example continues to use the `test` database from the example that read the MatrixOne data earlier, we need to first empty the data from the `person` table.

+

+ ```sql

+ -- on node3, connect node1's MatrixOne

+ mysql -hxx.xx.xx.xx -P6001 -uroot -p111

+ mysql> TRUNCATE TABLE test.person using the Mysql client;

+ ```

+

+3. Write code in IDEA:

+

+ Create `Person.java` and `Mysql2Mo.java` classes, use Flink to read MySQL data, perform simple ETL operations (convert Row to Person object), and finally write the data to MatrixOne.

+

+```java

+package com.matrixone.flink.demo.entity;

+

+

+import java.util.Date;

+

+public class Person {

+

+ private int id;

+ private String name;

+ private Date birthday;

+

+ public int getId() {

+ return id;

+ }

+

+ public void setId(int id) {

+ this.id = id;

+ }

+

+ public String getName() {

+ return name;

+ }

+

+ public void setName(String name) {

+ this.name = name;

+ }

+

+ public Date getBirthday() {

+ return birthday;

+ }

+

+ public void setBirthday(Date birthday) {

+ this.birthday = birthday;

+ }

+}

+```

+

+```java

+package com.matrixone.flink.demo;

+

+import com.matrixone.flink.demo.entity.Person;

+import org.apache.flink.api.common.functions.MapFunction;

+import org.apache.flink.api.common.typeinfo.BasicTypeInfo;

+import org.apache.flink.api.java.typeutils.RowTypeInfo;

+import org.apache.flink.connector.jdbc.*;

+import org.apache.flink.streaming.api.datastream.DataStreamSink;

+import org.apache.flink.streaming.api.datastream.DataStreamSource;

+import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

+import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

+import org.apache.flink.types.Row;

+

+import java.sql.Date;

+

+/**

+ * @author MatrixOne

+ * @description

+ */

+public class Mysql2Mo {

+

+ private static String srcHost = "127.0.0.1";

+ private static Integer srcPort = 3306;

+ private static String srcUserName = "root";

+ private static String srcPassword = "root";

+ private static String srcDataBase = "motest";

+

+ private static String destHost = "xx.xx.xx.xx";

+ private static Integer destPort = 6001;

+ private static String destUserName = "root";

+ private static String destPassword = "111";

+ private static String destDataBase = "test";

+ private static String destTable = "person";

+

+

+ public static void main(String[] args) throws Exception {

+

+ StreamExecutionEnvironment environment = StreamExecutionEnvironment.getExecutionEnvironment();

+ // Set parallelism

+ environment.setParallelism(1);

+ // Set the field type of the query

+ RowTypeInfo rowTypeInfo = new RowTypeInfo(

+ new BasicTypeInfo[]{

+ BasicTypeInfo.INT_TYPE_INFO,

+ BasicTypeInfo.STRING_TYPE_INFO,

+ BasicTypeInfo.DATE_TYPE_INFO

+ },

+ new String[]{

+ "id",

+ "name",

+ "birthday"

+ }

+ );

+

+ // Add srouce

+ DataStreamSource dataSource = environment.createInput(JdbcInputFormat.buildJdbcInputFormat()

+ .setDrivername("com.mysql.cj.jdbc.Driver")

+ .setDBUrl("jdbc:mysql://" + srcHost + ":" + srcPort + "/" + srcDataBase)

+ .setUsername(srcUserName)

+ .setPassword(srcPassword)

+ .setQuery("select * from person")

+ .setRowTypeInfo(rowTypeInfo)

+ .finish());

+

+ // Conduct ETL

+ SingleOutputStreamOperator mapOperator = dataSource.map((MapFunction) row -> {

+ Person person = new Person();

+ person.setId((Integer) row.getField("id"));

+ person.setName((String) row.getField("name"));

+ person.setBirthday((java.util.Date)row.getField("birthday"));

+ return person;

+ });

+

+ // Set matrixone sink information

+ mapOperator.addSink(

+ JdbcSink.sink(

+ "insert into " + destTable + " values(?,?,?)",

+ (ps, t) -> {

+ ps.setInt(1, t.getId());

+ ps.setString(2, t.getName());

+ ps.setDate(3, new Date(t.getBirthday().getTime()));

+ },

+ new JdbcConnectionOptions.JdbcConnectionOptionsBuilder()

+ .withDriverName("com.mysql.cj.jdbc.Driver")

+ .withUrl("jdbc:mysql://" + destHost + ":" + destPort + "/" + destDataBase)

+ .withUsername(destUserName)

+ .withPassword(destPassword)

+ .build()

+ )

+ );

+

+ environment.execute();

+ }

+

+}

+```

+

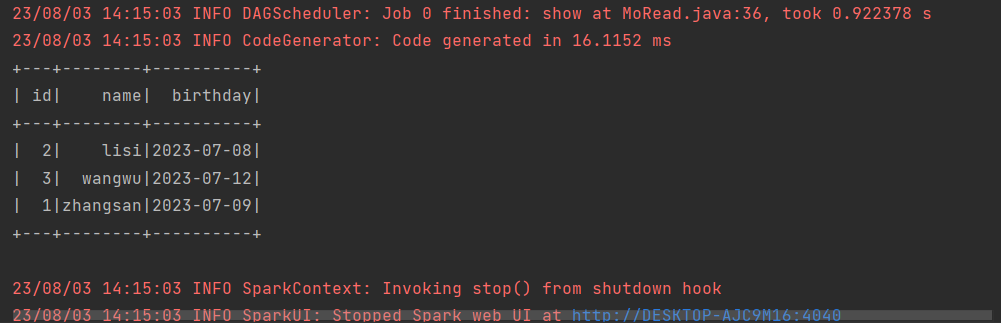

+### Step Four: View Implementation Results

+

+Execute the following SQL query results in MatrixOne:

+

+```sql

+mysql> select * from test.person;

++------+---------+------------+

+| id | name | birthday |

++------+---------+------------+

+| 2 | lisi | 2023-07-09 |

+| 3 | wangwu | 2023-07-13 |

+| 4 | zhaoliu | 2023-08-08 |

++------+---------+------------+

+3 rows in set (0.01 sec)

+```

diff --git a/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-oracle-matrixone.md b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-oracle-matrixone.md

new file mode 100644

index 000000000..fe5db3981

--- /dev/null

+++ b/docs/MatrixOne/Develop/Ecological-Tools/Computing-Engine/Flink/flink-oracle-matrixone.md

@@ -0,0 +1,142 @@

+# Write Oracle data to MatrixOne using Flink

+