-

Notifications

You must be signed in to change notification settings - Fork 21

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

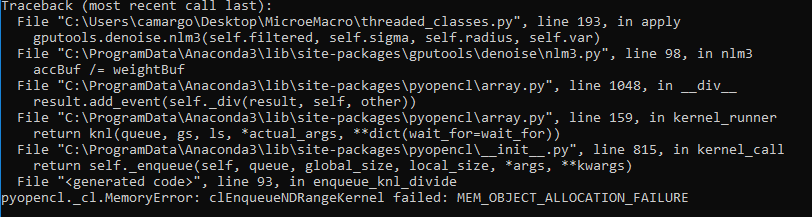

pyopencl._cl.MemoryError: clEnqueueNDRangeKernel failed: MEM_OBJECT_ALLOCATION_FAILURE #14

Comments

|

Hi, Thanks for the feedback!

I don't fully understand that. You mean the GPU memory is still allocated? Sometimes, a memory allocation error such as this indeed leads to strange behaviour afterwards - how large was the image and what does |

|

Hello! Thanks for listening!

Exactly, the memory is still allocated and the video card continues to process (the Windows Task Manager shows that the images are still being computed even after the memory error).

I am using an AMD RadeonT R750 4GB, and I am trying to process TIF images of 2000x2000 pixels (the error also happens with RAW files). In the attached images you can see that the algorithm breaks but continues to process the images. The prompt only becomes available after processing ends (this test result was obtained by processing 156 slices of a RAW file, 980x1008 pixels). I know the problem is memory allocation: it happens specifically at line 97 of the nlm3.py file, where the accBuf /= weightBuf division happens. The strange thing is that the memory is not deallocated and the processing does not stop. |

|

That's weird, given that |

In fact, on the machine I am using there is extra memory allocation, there is a peak of video memory consumption shortly after the loops of lines 67-69 of the nlm3 function, which is exactly the

Yes. For now I'm working around the problem by dividing the set of images into subsets. I compute each subset and store the result in another array. Thank you again for your time! By the way, good work in developing this project. |
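For readers who want to try the same workaround, here is a minimal sketch of processing a stack in subsets. The helper names `chunk_bounds` and `process_in_chunks` are made up for illustration, and `process` is a hypothetical stand-in for whatever GPU filter call is used; none of this is part of gputools.

```python
def chunk_bounds(n_slices, chunk_size):
    """Yield (start, stop) index pairs that together cover range(n_slices)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for start in range(0, n_slices, chunk_size):
        yield start, min(start + chunk_size, n_slices)


def process_in_chunks(stack, chunk_size, process):
    """Apply `process` to consecutive sub-stacks and collect the results.

    `stack` is any sliceable sequence of slices; `process` is a
    hypothetical placeholder for the GPU filter call.
    """
    result = []
    for start, stop in chunk_bounds(len(stack), chunk_size):
        # Only one sub-stack is handed to the filter at a time.
        result.extend(process(stack[start:stop]))
    return result


# Example: 156 slices (the count mentioned in this thread) and a dummy filter.
slices = list(range(156))
out = process_in_chunks(slices, 50, lambda sub: [s * 2 for s in sub])
bounds = list(chunk_bounds(156, 50))
```

Note that a 3D filter looks at neighbouring slices, so results near the chunk boundaries may differ from a single full-stack run unless the chunks overlap.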

|

So if you run something like this, do you see peak memory usage greater than 2.1 GB (it does not exceed that on my machine)? And thanks for the feedback! :) |

|

So if you change the shape to |

|

same if you exchange elementwise divide by an elementwise multiply? |

|

With |

|

What happens if you run the following code in the same way? Does it still crash? |

|

It exhibits the same behavior, running smoothly when |

|

Interesting. So it seems that pyopencl's "/=" operator is not in-place, whereas the "*=" operator is (i.e. it does not allocate additional memory). I would guess, that

So you could use the in-place divide kernel from above as a workaround.

That should rid you of the original allocation error. Why the kernel keeps on running, however, I still have no idea ;) |
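One way such a difference between "/=" and "*=" can arise in Python in general: if a class defines `__imul__` but no `__itruediv__`, then `x /= y` silently falls back to `__truediv__` and binds `x` to a newly created object. The toy class `Buf` below is purely illustrative and is not pyopencl's implementation; whether this is what actually happens inside pyopencl is an assumption.

```python
class Buf:
    """Toy stand-in for a device array; NOT pyopencl's implementation."""

    def __init__(self, data):
        self.data = list(data)

    def __imul__(self, other):
        # In-place: reuse the existing storage and return self.
        for i, v in enumerate(other.data):
            self.data[i] *= v
        return self

    def __truediv__(self, other):
        # Out-of-place: builds a brand-new object (a second allocation).
        return Buf(a / b for a, b in zip(self.data, other.data))

    # No __itruediv__ is defined, so `x /= y` falls back to __truediv__.


acc = Buf([2.0, 4.0])
weight = Buf([2.0, 2.0])

before = acc
acc *= weight
mul_in_place = acc is before   # True: the same object was updated

before = acc
acc /= weight
div_in_place = acc is before   # False: a new object was created
```

With real device buffers, that extra object would mean a temporary allocation of the same size as the original array, which is consistent with the memory peak described in this thread.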

|

The proposed modifications really worked! I no longer receive the memory error, so the program is now limited only by RAM. Many thanks for the support @maweigert !

In fact, it is very strange behavior. |

|

I am processing an array of shape (1024, 1024, 512) with data type float32. |
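As a rough check of what that array size means for GPU memory, the footprint can be worked out by hand. The sketch below assumes a single buffer of this shape plus, in the out-of-place case, one temporary of the same size; how many buffers nlm3 actually keeps resident is not counted here.

```python
shape = (1024, 1024, 512)
bytes_per_float32 = 4

n_elements = 1
for dim in shape:
    n_elements *= dim

buffer_bytes = n_elements * bytes_per_float32   # one float32 buffer
buffer_gb = buffer_bytes / 1e9                  # decimal gigabytes
buffer_gib = buffer_bytes / 2**30               # binary gibibytes

# Assumption: an out-of-place division needs one temporary of the same size.
peak_in_place_gib = buffer_gib
peak_out_of_place_gib = 2 * buffer_gib
```

One buffer comes to 2,147,483,648 bytes, i.e. about 2.1 GB, which appears to be where the 2.1 GB figure in this thread comes from. Under the stated assumption, an out-of-place division would roughly double that, which a 4 GB card cannot comfortably hold.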

Hi,

I am using the gputools library in my project and I have a problem. When I try to process images with the nlm3 filter and the GPU does not have enough memory for the number of images, I get the error shown in the attached image. However, the GPU continues to process the images. Is there any solution to this problem (such as killing the process)?

Thanks!
