Investigating SemaphoreSlim on net6.0+ #64

Comments

Slimming down the above table to compare what we are asking here:

NickCraver added a commit that referenced this issue on Jan 15, 2022

Done for #64, runs can now be:

```ps
.\Benchmarks.cmd --filter *Lock*
```

NickCraver added a commit to StackExchange/StackExchange.Redis that referenced this issue on Jan 15, 2022

Since `SemaphoreSlim` has been fixed on all the platforms we're building for these days, this tests moving back to it. Still getting some test run comparison data, but all synthetic benchmarks are looking good. See mgravell/Pipelines.Sockets.Unofficial#64 for details.

NickCraver added a commit to StackExchange/StackExchange.Redis that referenced this issue on Jan 19, 2022

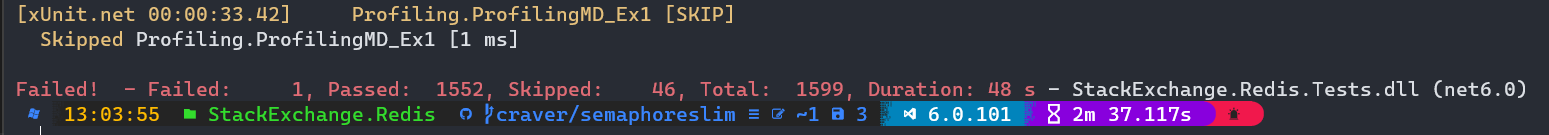

Since `SemaphoreSlim` has been fixed on all the platforms we're building for these days, this tests moving back to it. Still getting some test run comparison data, but all synthetic benchmarks are looking good. See mgravell/Pipelines.Sockets.Unofficial#64 for details.

Here are the main contention test metrics from those benchmarks:

| Method                        | Runtime    |         Mean |      Error |     StdDev | Allocated |
|------------------------------ |----------- |-------------:|-----------:|-----------:|----------:|
| SemaphoreSlim_Sync            | .NET 6.0   |    18.246 ns |  0.2540 ns |  0.2375 ns |         - |
| MutexSlim_Sync                | .NET 6.0   |    22.292 ns |  0.1948 ns |  0.1627 ns |         - |
| SemaphoreSlim_Sync            | .NET 4.7.2 |    65.291 ns |  0.5218 ns |  0.4357 ns |         - |
| MutexSlim_Sync                | .NET 4.7.2 |    43.145 ns |  0.3944 ns |  0.3689 ns |         - |
|                               |            |              |            |            |           |
| SemaphoreSlim_Async           | .NET 6.0   |    20.920 ns |  0.2461 ns |  0.2302 ns |         - |
| MutexSlim_Async               | .NET 6.0   |    42.810 ns |  0.4583 ns |  0.4287 ns |         - |
| SemaphoreSlim_Async           | .NET 4.7.2 |    57.513 ns |  0.5600 ns |  0.5238 ns |         - |
| MutexSlim_Async               | .NET 4.7.2 |    76.444 ns |  0.3811 ns |  0.3379 ns |         - |
|                               |            |              |            |            |           |
| SemaphoreSlim_Async_HotPath   | .NET 6.0   |    15.182 ns |  0.1708 ns |  0.1598 ns |         - |
| MutexSlim_Async_HotPath       | .NET 6.0   |    29.913 ns |  0.5884 ns |  0.6776 ns |         - |
| SemaphoreSlim_Async_HotPath   | .NET 4.7.2 |    50.912 ns |  0.8782 ns |  0.8215 ns |         - |
| MutexSlim_Async_HotPath       | .NET 4.7.2 |    55.409 ns |  0.7513 ns |  0.6660 ns |         - |
|                               |            |              |            |            |           |
| SemaphoreSlim_ConcurrentAsync | .NET 6.0   | 2,084.316 ns |  4.5909 ns |  4.0698 ns |     547 B |
| MutexSlim_ConcurrentAsync     | .NET 6.0   | 2,120.714 ns | 28.5866 ns | 26.7399 ns |     125 B |
| SemaphoreSlim_ConcurrentAsync | .NET 4.7.2 | 3,812.444 ns | 42.4014 ns | 37.5877 ns |   1,449 B |
| MutexSlim_ConcurrentAsync     | .NET 4.7.2 | 2,883.994 ns | 46.5535 ns | 41.2685 ns |     284 B |

We don't have high contention tests, but sanity checking against our test suite (where we don't expect this to matter much):

Main branch:

PR branch:

We could scope this back to .NET 6.0+ only, but the code gets a lot more `#ifdef`-heavy and complicated (because `LockToken`s aren't a thing with `SemaphoreSlim`; it's just a bool "did I get it?"). Thoughts?

mgravell pushed a commit that referenced this issue on Mar 3, 2023

Done for #64, runs can now be:

```ps
.\Benchmarks.cmd --filter *Lock*
```

Issue for tracking this discussion (so I can also put benchmarks somewhere).

Getting benchmarks running again for a discussion around going back to `SemaphoreSlim` now that the async issue has been fixed. The current benchmark run shows positive results there:

cc @mgravell ^ I can take a poke at what a swap looks like this week; I just wanted to post the numbers up!
