This project is an aid to the blind. Till date there has been no technological advancement in the way the blind navigate. So I have used deep learning particularly convolutional neural networks so that they can navigate through the streets. Watch the video

My project is an implementation of CNN's, and we all know that they require a large amount of training data. So the first obstruction in my way was a correclty labeled dataset of images. So I went around my college and recorded a lot of videos(of all types of roads and also offroads).Then I wrote a basic python script to save images from the video(I saved 1 image out of every 5 frames, because the consecutive frame are almost identical). I collected almost 10000 such images almost 3300 for each class(i.e. left right and center).

Left image:

Right Image:

Center Image:

I made a collection of CNN architectures and trained the model. Then I evaluated the performance of all the models and chose the one with the best accuracy. I got a training accuracy of about 97%. I got roughly same accuracy for all the trained model but I realized that the model in which implemented regularization performed better on the test set

The next problem was how can I tell the blind people in which direction to move .

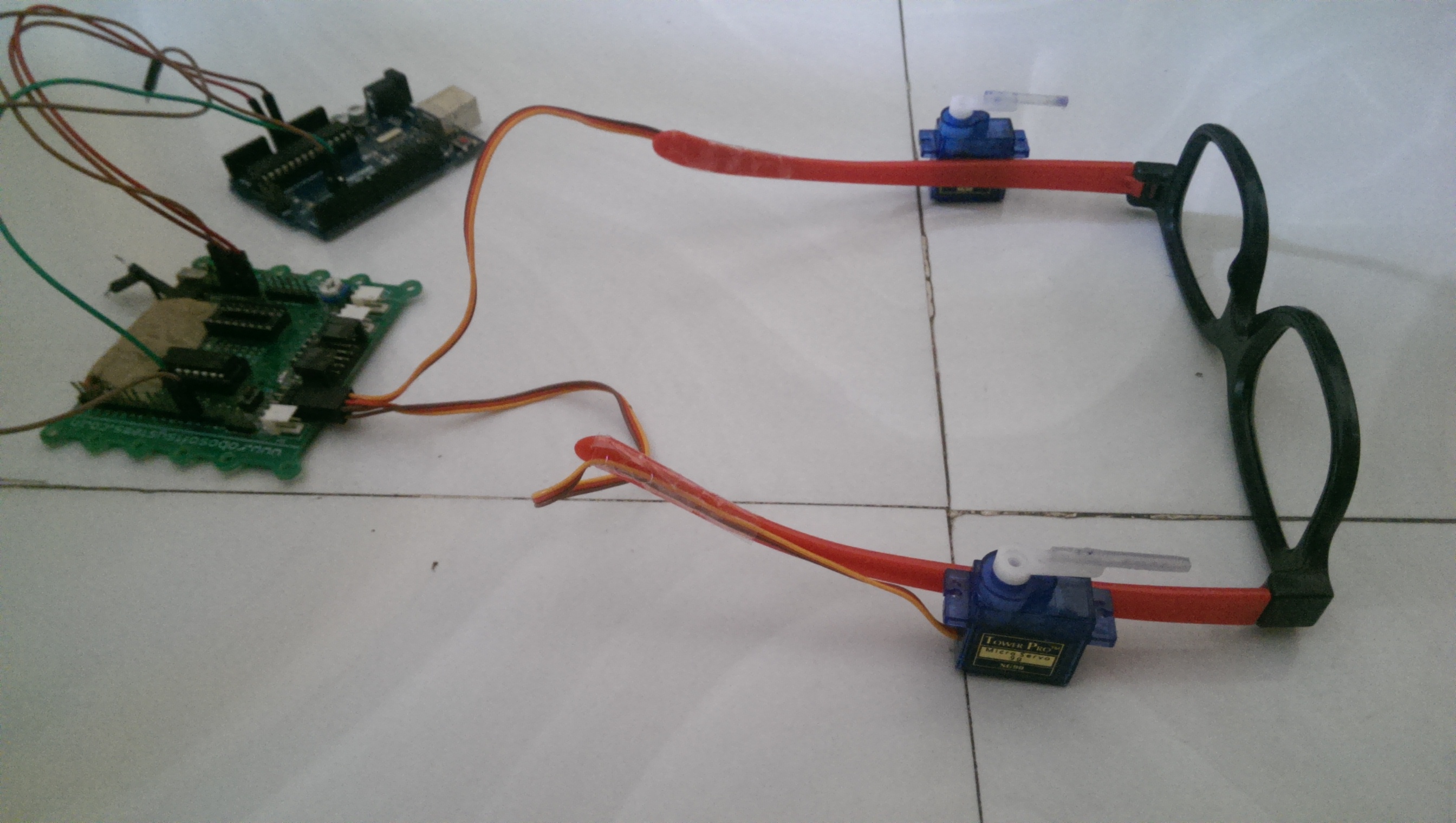

So I connected my python program to an Arduino. I connected the servo motors to arduino and fixed the servo motors to the sides of an spectacle. Using Serial communication I can tell the arduino which servo motor to move which would then press to one side of the blind person's head and would indicate him in which direction to move.

This is how the modified spectacles looked like

This is done using opencv. I load in a pretrained stop sign haar cascade and then identify the location of the stop sign so that the device can steer the blind person.

This is achieved using Dlibs face detector.(trying to implement face recognition)

- Python 3.x

- Tensorflow 1.5

- Keras

- OpenCV 3.4(for loading,resizing images)

- h5py(for saving trained model)

- pyttsx3

- A good grasp over convolutional neural networks. For online resources refer to standford cs231n, deeplearning.ai on coursera or cs231n by standford university

- A good CPU (preferably with a GPU).

- Time

- datetime

- Patience.... A lot of it.

- Start your terminal of cmd depending on your os.

- If you have a NVidia GPU then make sure you have the prerequisites for Tensorflow GPU installation (Refer to official site). Then use this commmand

pip install -r requirements_gpu.txt

- In case you do not have a GPU then use this command

pip install -r requirements_cpu.txt

To train your own classifier you need to gather data for all three type(.i.e images from left side of road, right side, and center region of the road) and then run model_trainer.py(you need to change the directories of images in model_trainer.py first).I used around 10000 images.

It took me about 30 minutes to train each network.

- intel core i3

- 4gb ram

- nvidia 930m graphics card

1)Upload the arduino file on the arduino

2)Change the com number and camera number in the blind runner.py

3)run blind_runner.py

4)Enjoy

Tell me if you liked it by giving a star. Also check out my other repositories, I always make cool stuff. I even have a youtube channel "reactor science" where I post all my work.