Hi all, I am trying to train CosyPose on my own dataset.

After playing with the TLESS dataset, I tried my own data (one object):

1. Create synthetic data with `start_dataset_recording.py`.
2. Train the detector on this synthetic dataset.
3. Train the coarse and refiner pose models on this synthetic dataset.
4. Run inference with a webcam (RealSense D435).
5. Be happy that the pose estimation looks good for a first try. (The RealSense was pointed at my computer screen, which showed the trained object in an open 3D viewer.)

As my next step, I tried to render better data with BlenderProc. I adapted the `bop_object_physics_positioning` example to render the data.

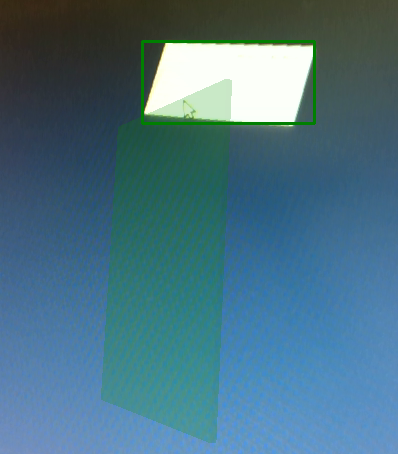

As before, I trained the detector and the coarse and refiner pose models. But now the estimated pose is wrong. I don't think it is just imprecise; I think it is wrong, because when I visualize the 6D pose estimate, one corner of the object is always at the center of the detector's bounding box. Here is an example image:

What do you think? Is it just a very imprecise estimate, or is something actually wrong? Perhaps I made an error when rendering the data? Perhaps something is wrong with my model, or am I doing something wrong with the camera parameters? Does anyone have an idea?
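To rule out the camera parameters, one check is to project the model vertices with the ground-truth pose and intrinsics stored in the rendered data and compare the resulting 2D box with the annotated box. This sketch assumes BOP-style conventions, which BlenderProc's BOP output should follow as far as I know: `x_cam = R @ x_model + t` (`cam_R_m2c`, `cam_t_m2c` in `scene_gt.json`), `t` in the same units as the model (millimetres for BOP), and `K` in pixels (`cam_K` in `scene_camera.json`). The function names are hypothetical helpers, not part of CosyPose or BlenderProc.

```python
def transform(R, t, p):
    """Map a model-space point into camera space: R @ p + t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def projected_bbox(vertices, R, t, K):
    """Return (x1, y1, x2, y2) enclosing all vertices projected with pose (R, t) and intrinsics K."""
    us, vs = [], []
    for p in vertices:
        X, Y, Z = transform(R, t, p)
        if Z <= 0:
            raise ValueError("vertex behind the camera: check pose units/conventions")
        us.append(K[0][0] * X / Z + K[0][2])
        vs.append(K[1][1] * Y / Z + K[1][2])
    return min(us), min(vs), max(us), max(vs)


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0
```

A low IoU against the annotated box (BOP stores `bbox_obj` as `[x, y, w, h]` in `scene_gt_info.json`, so convert it to corners first) would point at inconsistent intrinsics, pose conventions, or units rather than at the networks.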

Currently I am rendering new data with one model (27) of the TLESS dataset, and I will then try to train on this data.

--> Done. I used the `*.ply` and `*.obj` (URDF) files of object 27 from the TLESS dataset and did everything else myself: rendering the data, training the detector, and training the coarse/refiner pose models. After that, pose estimation worked (a bit imprecise, but OK).

So my issue must be somewhere in my 3D model files.
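To compare your mesh with the TLESS object 27 mesh that worked, one option is to compute for both the same per-model statistics that BOP stores in `models_info.json` (`min_x`/`size_x` per axis and `diameter`). This is a quick-check sketch: it only reads the `v` lines of an ASCII OBJ file and computes the diameter by brute force, so it is not meant for large meshes. The function names are hypothetical.

```python
import itertools
import math


def read_obj_vertices(text):
    """Collect the x, y, z coordinates of every `v` line in ASCII OBJ text."""
    verts = []
    for line in text.splitlines():
        parts = line.split()
        if parts and parts[0] == "v":  # skips vn/vt/f lines
            verts.append([float(c) for c in parts[1:4]])
    return verts


def model_info(verts):
    """BOP-style min/size per axis plus diameter (largest vertex-to-vertex distance)."""
    info = {}
    for axis, name in enumerate("xyz"):
        vals = [v[axis] for v in verts]
        info[f"min_{name}"] = min(vals)
        info[f"size_{name}"] = max(vals) - min(vals)
    # Brute-force O(n^2) diameter: fine for a sanity check, slow for big meshes
    info["diameter"] = max(
        (math.dist(p, q) for p, q in itertools.combinations(verts, 2)), default=0.0
    )
    return info
```

For a model centered like TLESS, each `min_*` should be close to `-size_* / 2`; `min_x`, `min_y`, and `min_z` all near 0 mean the origin sits at a corner of the bounding box. Sizes that differ from the TLESS model by a factor of about 1000 would point at a unit mismatch, since BOP models are in millimetres.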

The last difference I see is that my 3D model has only positive coordinates (x/y/z), while the TLESS model is centered around (0, 0, 0), so it also has negative values... That can't be the problem, can it?
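Whether it matters depends on how the pipeline uses the model origin. Based on my (unverified) understanding of CosyPose, the coarse stage starts from an initial pose whose translation places the model origin on the camera ray through the center of the detected bounding box, at a fixed depth, and the networks then predict corrections from there. With the TLESS meshes the origin is roughly the object center; with a mesh whose coordinates are all positive, the origin is at a corner, which would be consistent with one corner landing at the center of the box. The helpers `init_translation_from_box` and `project` below are hypothetical, not CosyPose functions; the sketch is only the pinhole back-projection, assuming a 3x3 intrinsics matrix `K` in pixels.

```python
def init_translation_from_box(box, K, z=1.0):
    """Back-project the center of a 2D box (x1, y1, x2, y2) to a 3D point at depth z.

    Hypothetical helper: K is a 3x3 pinhole intrinsics matrix as nested lists.
    """
    x1, y1, x2, y2 = box
    u, v = (x1 + x2) / 2.0, (y1 + y2) / 2.0
    fx, fy, cx, cy = K[0][0], K[1][1], K[0][2], K[1][2]
    # Invert u = fx * X / Z + cx and v = fy * Y / Z + cy for the chosen depth Z = z
    return [(u - cx) * z / fx, (v - cy) * z / fy, z]


def project(point, K):
    """Project a 3D point in camera coordinates to pixel coordinates."""
    X, Y, Z = point
    return K[0][0] * X / Z + K[0][2], K[1][1] * Y / Z + K[1][2]


if __name__ == "__main__":
    K = [[600.0, 0.0, 320.0], [0.0, 600.0, 240.0], [0.0, 0.0, 1.0]]
    t0 = init_translation_from_box((300, 200, 400, 300), K, z=1.0)
    # Whatever point of the mesh is the origin projects to the box center
    print(project(t0, K))
```

If the initialization works this way, a mesh whose origin is near its geometric center starts the coarse and refiner stages much closer to the true pose than a mesh whose origin is at a corner.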

@hannes56a, I am not sure, man. I'd look into it, but I no longer have access to my codebase. What is the size of the training set? Do you have enough variety in it? Did you check the evaluation metrics with `paper_training_logs.ipynb`?

Thank you for your answer. I do not think it is an issue with training or the size of the training set, because I tried exactly the same setup (same training set size, training parameters, etc.) with another 3D object model, and with that model it works...

Hello, could you tell me how to train the 2D detector? Thanks!

My English is bad, sorry.

Please help!

Here are the files I used for rendering the data and for training:

urdfs-versuch03.zip

models.zip

@azad96 Perhaps you have an idea?
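If the meshes in `models.zip` turn out to have their origin at a corner (only positive coordinates, as described above), one cheap experiment is to move the origin to the center of the bounding box, like the TLESS models, and regenerate the data. Every ground-truth pose refers to the old origin: with `x_old = x_new + c`, a model-to-camera pose `(R, t)` becomes `(R, t + R @ c)`, so either re-render everything with the recentered mesh or shift the existing translations. This sketch handles ASCII OBJ only; the `.ply` file (and any mesh referenced by the URDF) would need the same shift. The function names are hypothetical.

```python
def recenter_obj(text):
    """Shift OBJ vertices so their bounding-box center is at the origin.

    Returns (new_text, center). Only `v` lines change; normals, texture
    coordinates and faces are unaffected by a pure translation.
    """
    lines = text.splitlines()
    coords = [[float(c) for c in ln.split()[1:4]] for ln in lines if ln.split()[:1] == ["v"]]
    if not coords:
        raise ValueError("no vertices found")
    center = [(min(p[i] for p in coords) + max(p[i] for p in coords)) / 2.0 for i in range(3)]
    out = []
    for ln in lines:
        parts = ln.split()
        if parts[:1] == ["v"]:
            shifted = [float(c) - m for c, m in zip(parts[1:4], center)]
            # Keep any extra per-vertex values (e.g. vertex colors) after x y z
            out.append(" ".join(["v", *(f"{s:.6f}" for s in shifted), *parts[4:]]))
        else:
            out.append(ln)
    return "\n".join(out) + "\n", center


def shift_translation(R, t, center):
    """Update a model-to-camera translation after recentering: t_new = t + R @ center."""
    return [t[i] + sum(R[i][j] * center[j] for j in range(3)) for i in range(3)]
```

After recentering, the same mesh should be used consistently for rendering, the URDF, and the models that CosyPose loads; mixing old and new origins would reintroduce the offset.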
