Note 1: The following repository grew out of Romain Brette's critical thought experiment on the free energy principle.

Note 2: This GitHub repository is accompanied by the following blog post.

|

|---|

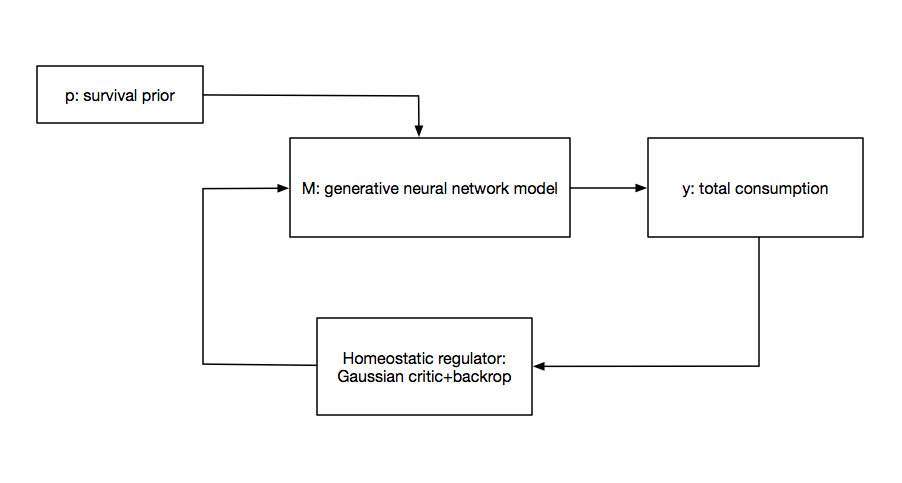

| diagram of the system |

To simulate Romain's problem, I made the following assumptions:

- We have an organism that has to eat k times on average over the preceding 24 hours and can eat at most once per hour.

- The homeostatic conditions of our organism are given by a Gaussian distribution centered at k with unit variance, a Gaussian food critic if you will. This specifies that our organism shouldn't eat much less than k times a day, nor much more than k times a day. This may help explain why living organisms tend to have masses that are normally distributed during adulthood.

- A food policy consists of a 24-dimensional vector whose values range from 0.0 to 1.0, and we want to minimise the negative log probability (i.e. the surprisal) that the total consumption is drawn from the Gaussian food critic.

- Food policies are the output of a generative neural network (set up using TensorFlow) whose input is either one or zero, indicating a survival prior, with one indicating a preference for survival.

- Gradient descent via backpropagation, in this case with the Adagrad optimiser, functions as a homeostatic regulator: it updates the network weights along the gradient of the negative log-probability loss (i.e. surprisal), so that surprisal decreases.
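
The assumptions above can be sketched in a few lines of plain Python. This is a simplified illustration, not the notebook's TensorFlow code: the generative network is replaced by 24 free logits passed through a sigmoid, and the Adagrad update is written out by hand. The names `surprisal` and `train_policy`, as well as the step count and learning rate, are hypothetical choices made for this sketch.

```python
import math

K = 3.0      # target number of meals per day (k in the text)
HOURS = 24   # one policy entry per hour


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def surprisal(total, k=K):
    """Negative log density of `total` under a unit-variance Gaussian centred at k."""
    return 0.5 * (total - k) ** 2 + 0.5 * math.log(2.0 * math.pi)


def train_policy(steps=2000, lr=0.5, eps=1e-8):
    """Minimise the surprisal of the total consumption with a hand-written Adagrad.

    Assumption: the policy is parameterised directly by 24 logits rather than
    by a neural network, which keeps the sketch short.
    """
    logits = [0.0] * HOURS   # sigmoid(0) = 0.5, so the initial total is 12
    accum = [0.0] * HOURS    # Adagrad running sum of squared gradients
    for _ in range(steps):
        policy = [sigmoid(z) for z in logits]
        total = sum(policy)
        for i, p in enumerate(policy):
            # d surprisal / d logit_i = (total - k) * p * (1 - p)
            grad = (total - K) * p * (1.0 - p)
            accum[i] += grad * grad
            logits[i] -= lr * grad / (math.sqrt(accum[i]) + eps)
    return [sigmoid(z) for z in logits]


policy = train_policy()
print("total consumption:", round(sum(policy), 3))
print("surprisal:", round(surprisal(sum(policy)), 3))
```

Under these assumptions a policy that never eats has a higher surprisal than one whose total is k, because the food critic penalises totals far below k just as it penalises totals far above it.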

Assuming k=3, I ran a simulation in the following notebook and found that the discovered food policy differs significantly from Romain's expectation that the agent would choose not to look for food in order to minimise surprisal. In fact, our simple agent manages to get three meals per day on average, so it survives.
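
One way to check a claim like "three meals per day on average" is to sample days from a policy and count meals. The sketch below assumes that each policy entry is the independent probability of eating in that hour, which is an interpretation rather than something the notebook is known to do. `simulate_meals` and `uniform_policy` are hypothetical names used only for illustration.

```python
import random


def simulate_meals(policy, days=10_000, seed=0):
    """Average number of meals per simulated day.

    Assumption: hour i yields a meal with probability policy[i],
    independently of every other hour.
    """
    rng = random.Random(seed)
    meals = 0
    for _ in range(days):
        meals += sum(1 for p in policy if rng.random() < p)
    return meals / days


# Hypothetical policy that spreads an expected 3 meals evenly over 24 hours.
uniform_policy = [3.0 / 24] * 24
print("average meals per day:", simulate_meals(uniform_policy))
```

A policy that never looks for food, such as `[0.0] * 24`, yields zero meals under the same check, which is the behaviour the experiment argues against.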

- Until recently, the Free Energy Principle has been a constant target of mockery from neuroscientists who misunderstood it, so I hope that by growing a collection of free-energy-motivated reinforcement learning examples on GitHub we may finally have a constructive discussion between scientists.

- The details of the first experiment are contained in the following notebook.

- The free-energy principle: a rough guide to the brain? (K. Friston. 2009.)

- The Markov blankets of life: autonomy, active inference and the free energy principle (M. Kirchhoff, T. Parr, E. Palacios, K. Friston and J. Kiverstein. 2018.)

- Free-Energy Minimization and the Dark-Room Problem (K. Friston, C. Thornton and A. Clark. 2012.)

- What is computational neuroscience? (XXIX) The free energy principle (R. Brette. 2018.)