Lokesh Veeramacheneni1, Moritz Wolter1, Hilde Kuehne2, and Juergen Gall1,3

Keywords: CaRA, Canonical Polyadic Decomposition, CPD, Tensor methods, ViT, LoRA

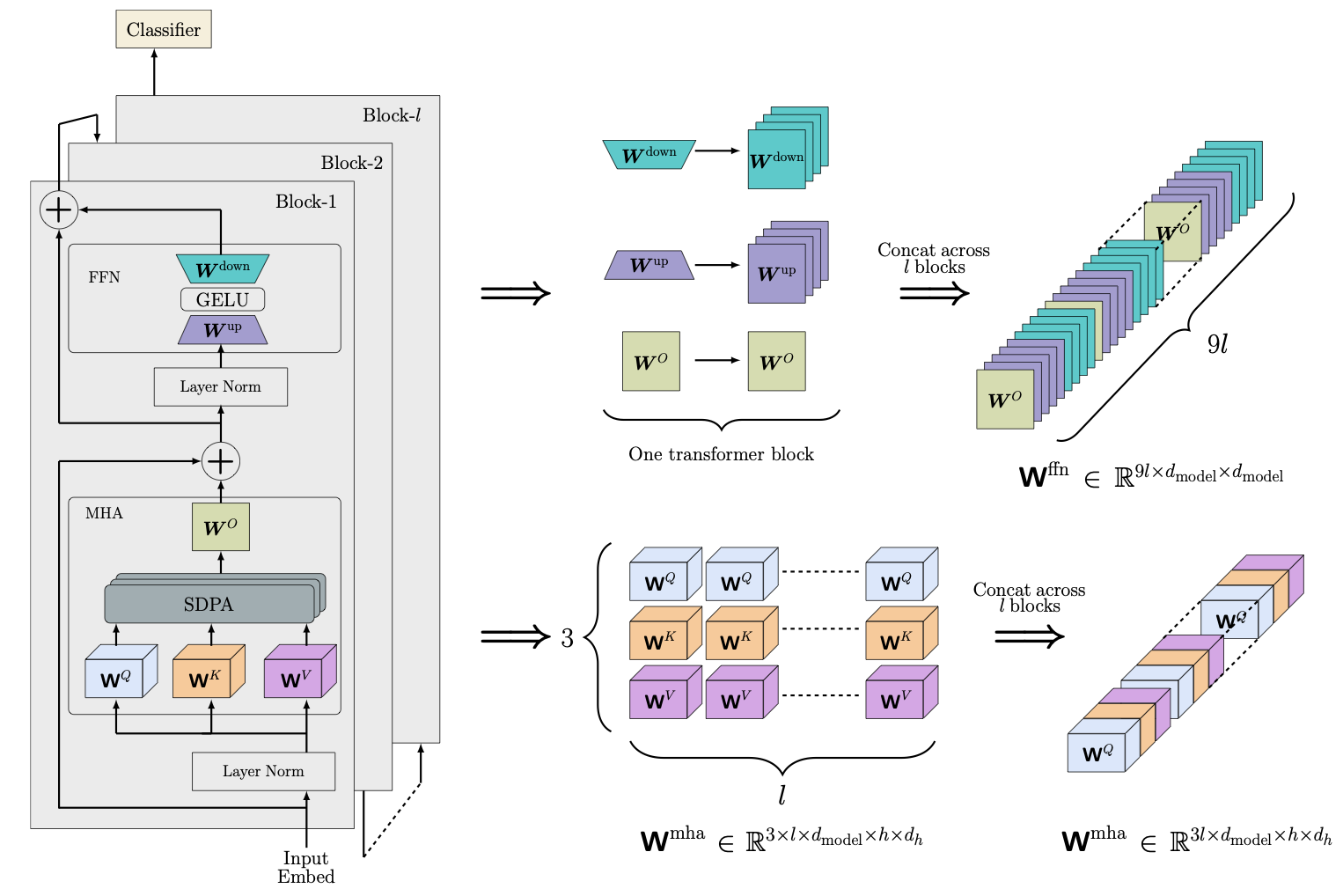

Abstract: Modern methods for fine-tuning a Vision Transformer (ViT) like Low-Rank Adaptation (LoRA) and its variants demonstrate impressive performance. However, these methods ignore the high-dimensional nature of Multi-Head Attention (MHA) weight tensors. To address this limitation, we propose Canonical Rank Adaptation (CaRA). CaRA leverages tensor mathematics, first by tensorising the transformer into two different tensors; one for projection layers in MHA and the other for feed-forward layers. Second, the tensorised formulation is fine-tuned using the low-rank adaptation in Canonical-Polyadic Decomposition (CPD) form. Employing CaRA efficiently minimizes the number of trainable parameters. Experimentally, CaRA outperforms existing Parameter-Efficient Fine-Tuning (PEFT) methods in visual classification benchmarks such as Visual Task Adaptation Benchmark (VTAB)-1k and Fine-Grained Visual Categorization (FGVC).

Use UV to install the requirements

For CPU based pytorch

uv sync --extra cpuFor CUDA based pytorch

uv sync --extra cu118In the case of VTAB-1k benchmark, refer to the dataset download instructions from NOAH. We download the datasets for FGVC benchmark from their respective sources.

Note: Create a data folder in the root and place the datasets inside this folder.

Please refer to the download links provided in the paper.

For fine-tuning ViT use the following command.

export PYTHONPATH=.

python image_classification/vit_cp.py --dataset=<choice_of_dataset> --dim=<rank>We provide the link for fine-tuned models for each dataset in VTAB-1k benchmark here. To reproduce results from the paper, download the model and execute the following command

export PYTHONPATH=.

python image_classification/vit_cp.py --dataset=<choice_of_dataset> --dim=<rank> --evaluate=<path_to_model>The code is built on the implementation of FacT. Thanks to Zahra Ganji for reimplementing VeRA baseline.

If you use this work, please cite using following bibtex entry

@inproceedings{

veeramacheneni2025canonical,

title={Canonical Rank Adaptation: An Efficient Fine-Tuning Strategy for Vision Transformers},

author={Lokesh Veeramacheneni and Moritz Wolter and Hilde Kuehne and Juergen Gall},

booktitle={Forty-second International Conference on Machine Learning},

year={2025}}