Showing 43 changed files with 822 additions and 46 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,57 @@ | ||

| name: 'onnx docker' | ||

|

|

||

| on: | ||

| workflow_dispatch: | ||

| push: | ||

| branches: ['master'] | ||

| paths: | ||

| - 'onnx/Dockerfile' | ||

| - 'onnx/export/**' | ||

| - 'onnx/misc/**' | ||

| - 'onnx/version.txt' | ||

| - '.github/workflows/onnx.yml' | ||

| pull_request: | ||

| branches: ['master'] | ||

| paths: | ||

| - 'onnx/Dockerfile' | ||

| - 'onnx/export/**' | ||

| - 'onnx/misc/**' | ||

| - 'onnx/version.txt' | ||

| - '.github/workflows/onnx.yml' | ||

|

|

||

| jobs: | ||

| docker: | ||

| runs-on: ubuntu-latest | ||

| steps: | ||

| - | ||

| name: Checkout | ||

| uses: actions/checkout@v3 | ||

| - | ||

| name: Set Env | ||

| run: | | ||

| VERSION=$(cat onnx/version.txt) | ||

| echo "VERSION=$VERSION" >> $GITHUB_ENV | ||

| - | ||

| name: Set up QEMU | ||

| uses: docker/setup-qemu-action@v2 | ||

| - | ||

| name: Set up Docker Buildx | ||

| uses: docker/setup-buildx-action@v2 | ||

| - | ||

| name: Login to Docker Hub | ||

| uses: docker/login-action@v2 | ||

| with: | ||

| username: ${{ secrets.DOCKER_USERNAME }} | ||

| password: ${{ secrets.DOCKER_PASSWORD }} | ||

| - | ||

| name: Build and push | ||

| uses: docker/build-push-action@v4 | ||

| with: | ||

| context: ./onnx/ | ||

| platforms: linux/amd64,linux/arm64 | ||

| push: true | ||

| tags: ${{secrets.DOCKER_ORG}}/altclip-onnx:latest, ${{secrets.DOCKER_ORG}}/altclip-onnx:${{env.VERSION}} | ||

| cache-from: type=gha | ||

| cache-to: type=gha,mode=max | ||

| build-args: | | ||

| GIT=https://github.com/${{github.repository}}.git |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

|

|

@@ -17,19 +17,19 @@ | |

|

|

||

|

|

||

| 1. **Download models quickly via API** | ||

|

|

||

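| FlagAI provides an API that lets you quickly download models, apply these pre-trained models to a given (Chinese or English) text, and fine-tune them on widely used datasets collected from the [SuperGLUE](https://super.gluebenchmark.com/) and [CLUE](https://github.com/CLUEbenchmark/CLUE) benchmarks. | ||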

|

|

||

| FlagAI now supports more than 30 mainstream models, including the multimodal model [**AltCLIP**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltCLIP), the text-to-image model [**AltDiffusion**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltDiffusion) [](https://huggingface.co/spaces/BAAI/bilingual_stable_diffusion), **[WuDao GLM](/doc_zh/GLM.md)** with up to 10 billion parameters, [**EVA-CLIP**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/EVA_CLIP), **[Galactica](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/galactica)**, **OPT**, **BERT**, **RoBERTa**, **GPT2**, **T5**, **ALM**, **Huggingface Transformers**, and more. | ||

|

|

||

| 2. **Parallel training with just ten lines of code** | ||

|

|

||

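| FlagAI is backed by the four most popular data/model parallel libraries ([PyTorch](https://pytorch.org/)/[Deepspeed](https://www.deepspeed.ai/)/[Megatron-LM](https://github.com/NVIDIA/Megatron-LM)/[BMTrain](https://github.com/OpenBMB/BMTrain)), which are seamlessly integrated with one another. You can parallelize your training/testing process in fewer than ten lines of code. | ||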

|

|

||

| 3. **Prompt-learning toolkit included** | ||

|

|

||

| FlagAI provides a [prompt-learning](https://github.com/FlagAI-Open/FlagAI/blob/master/docs/TUTORIAL_7_PROMPT_LEARNING.md) toolkit for few-shot learning tasks. | ||

|

|

||

| 4. **Particularly strong on Chinese tasks** | ||

|

|

||

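| The models FlagAI currently supports can be applied to tasks such as text classification, information extraction, question answering, summarization, and text generation, and are particularly strong on Chinese tasks. | ||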

|

|

@@ -51,40 +51,40 @@ | |

|

|

||

| ### Models | ||

|

|

||

| | Model | Task | Train | Finetune | Inference | Example | | ||

| | Model | Task | Train | Finetune | Inference | Example | | ||

| | :---------------- | :------- | :-- |:-- | :-- | :--------------------------------------------- | | ||

| | ALM | Arabic text generation | ✅ | ❌ | ✅ | [README.md](/examples/ALM/README.md) | | ||

| | AltCLIP | Text-image matching | ✅ | ✅ | ✅ | [README.md](/examples/AltCLIP/README.md) | | ||

| | AltCLIP-m18 | Text-image matching | ✅ | ✅ | ✅ | [README.md](examples/AltCLIP-m18/README.md) | | ||

| | ALM | Arabic text generation | ✅ | ❌ | ✅ | [README.md](/examples/ALM/README.md) | | ||

| | AltCLIP | Text-image matching | ✅ | ✅ | ✅ | [README.md](/examples/AltCLIP/README.md) | | ||

| | AltCLIP-m18 | Text-image matching | ✅ | ✅ | ✅ | [README.md](examples/AltCLIP-m18/README.md) | | ||

| | AltDiffusion | Text-to-image generation | ❌ | ❌ | ✅ | [README.md](/examples/AltDiffusion/README.md) | | ||

| | AltDiffusion-m18 | Text-to-image generation, supports 18 languages | ❌ | ❌ | ✅ | [README.md](/examples/AltDiffusion-m18/README.md) | | ||

| | BERT-title-generation-english | English title generation | ✅ | ❌ | ✅ | [README.md](/examples/bert_title_generation_english/README.md) | | ||

| | CLIP | Image-text matching | ✅ | ❌ | ✅ | - | | ||

| | CPM3-finetune | Text continuation | ❌ | ✅ | ❌ | - | | ||

| | CPM3-generate | Text continuation | ❌ | ❌ | ✅ | - | | ||

| | CLIP | Image-text matching | ✅ | ❌ | ✅ | - | | ||

| | CPM3-finetune | Text continuation | ❌ | ✅ | ❌ | - | | ||

| | CPM3-generate | Text continuation | ❌ | ❌ | ✅ | - | | ||

| | CPM3_pretrain | Text continuation | ✅ | ❌ | ❌ | - | | ||

| | CPM_1 | Text continuation | ❌ | ❌ | ✅ | [README.md](/examples/cpm_1/README.md) | | ||

| | EVA-CLIP | Image-text matching | ✅ | ✅ | ✅ | [README.md](/examples/EVA_CLIP/README.md) | | ||

| | Galactica | Text continuation | ❌ | ❌ | ✅ | - | | ||

| | Galactica | Text continuation | ❌ | ❌ | ✅ | - | | ||

| | GLM-large-ch-blank-filling | Cloze (blank-filling) question answering | ❌ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_11_GLM_BLANK_FILLING_QA.md) | | ||

| | GLM-large-ch-poetry-generation | Poetry generation | ✅ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_13_GLM_EXAMPLE_PEOTRY_GENERATION.md) | | ||

| | GLM-large-ch-title-generation | Title generation | ✅ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_12_GLM_EXAMPLE_TITLE_GENERATION.md) | | ||

| | GLM-pretrain | Pre-training | ✅ | ❌ | ❌ | - | | ||

| | GLM-seq2seq | Generation tasks | ✅ | ❌ | ✅ | - | | ||

| | GLM-superglue | Discriminative tasks | ✅ | ❌ | ❌ | - | | ||

| | GLM-pretrain | Pre-training | ✅ | ❌ | ❌ | - | | ||

| | GLM-seq2seq | Generation tasks | ✅ | ❌ | ✅ | - | | ||

| | GLM-superglue | Discriminative tasks | ✅ | ❌ | ❌ | - | | ||

| | GPT-2-text-writting | Text continuation | ❌ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_18_GPT2_WRITING.md) | | ||

| | GPT2-text-writting | Text continuation | ❌ | ❌ | ✅ | - | | ||

| | GPT2-title-generation | Title generation | ❌ | ❌ | ✅ | - | | ||

| | OPT | Text continuation | ❌ | ❌ | ✅ | [README.md](/examples/opt/README.md) | | ||

| | GPT2-text-writting | Text continuation | ❌ | ❌ | ✅ | - | | ||

| | GPT2-title-generation | Title generation | ❌ | ❌ | ✅ | - | | ||

| | OPT | Text continuation | ❌ | ❌ | ✅ | [README.md](/examples/opt/README.md) | | ||

| | RoBERTa-base-ch-ner | Named entity recognition | ✅ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_17_BERT_EXAMPLE_NER.md) | | ||

| | RoBERTa-base-ch-semantic-matching | Semantic similarity matching | ✅ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_16_BERT_EXAMPLE_SEMANTIC_MATCHING.md) | | ||

| | RoBERTa-base-ch-title-generation | Title generation | ✅ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_15_BERT_EXAMPLE_TITLE_GENERATION.md) | | ||

| | RoBERTa-faq | Question answering | ❌ | ❌ | ✅ | [README.md](/examples/roberta_faq/README.md) | | ||

| | Swinv1 | Image classification | ✅ | ❌ | ✅ | - | | ||

| | Swinv2 | Image classification | ✅ | ❌ | ✅ | - | | ||

| | RoBERTa-faq | Question answering | ❌ | ❌ | ✅ | [README.md](/examples/roberta_faq/README.md) | | ||

| | Swinv1 | Image classification | ✅ | ❌ | ✅ | - | | ||

| | Swinv2 | Image classification | ✅ | ❌ | ✅ | - | | ||

| | T5-huggingface-11b | Training | ✅ | ❌ | ❌ | [TUTORIAL](/doc_zh/TUTORIAL_14_HUGGINGFACE_T5.md) | | ||

| | T5-title-generation | Title generation | ❌ | ❌ | ✅ | [TUTORIAL](/doc_zh/TUTORIAL_19_T5_EXAMPLE_TITLE_GENERATION.md) | | ||

| | T5-flagai-11b | Pre-training | ✅ | ❌ | ❌ | - | | ||

| | T5-flagai-11b | Pre-training | ✅ | ❌ | ❌ | - | | ||

| | ViT-cifar100 | Pre-training | ✅ | ❌ | ❌ | - | | ||

|

|

||

|

|

||

|

|

@@ -144,7 +144,7 @@ ds_report # check the status of deepspeed | |

| ``` | ||

| git clone https://github.com/OpenBMB/BMTrain | ||

| cd BMTrain | ||

| python setup.py install | ||

| python setup.py install | ||

| ``` | ||

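| - [Optional] To build the image, refer to the [Dockerfile](https://github.com/FlagAI-Open/FlagAI/blob/master/Dockerfile) | ||

| - [Tip] In a single-node docker environment, running multi-GPU data parallelism requires setting up the host. For example, the docker node [email protected], on port 7110. | ||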

|

|

@@ -167,7 +167,7 @@ Host 127.0.0.1 | |

| from flagai.auto_model.auto_loader import AutoLoader | ||

| auto_loader = AutoLoader( | ||

| task_name="title-generation", | ||

| model_name="RoBERTa-base-ch" | ||

| model_name="RoBERTa-base-ch" | ||

| ) | ||

| model = auto_loader.get_model() | ||

| tokenizer = auto_loader.get_tokenizer() | ||

|

|

@@ -261,20 +261,23 @@ for text_pair in test_data: | |

|

|

||

| ``` | ||

|

|

||

| ### Model deployment | ||

|

|

||

| * For AltCLIP deployment, see [ONNX model export for AltCLIP](./onnx/README.md) | ||

|

|

||

| ## News | ||

| - [31 Mar 2023] Released v1.6.3, adding the AltCLIP-m18 model [#303](https://github.com/FlagAI-Open/FlagAI/pull/303) and the AltDiffusion-m18 model [#302](https://github.com/FlagAI-Open/FlagAI/pull/302); | ||

| - [17 Mar 2023] Released v1.6.2, which supports a new optimizer [#266](https://github.com/FlagAI-Open/FlagAI/pull/266) and adds the English GPT model GPT2-base-en; | ||

| - [31 Mar 2023] Released v1.6.3, adding the AltCLIP-m18 model [#303](https://github.com/FlagAI-Open/FlagAI/pull/303) and the AltDiffusion-m18 model [#302](https://github.com/FlagAI-Open/FlagAI/pull/302); | ||

| - [17 Mar 2023] Released v1.6.2, which supports a new optimizer [#266](https://github.com/FlagAI-Open/FlagAI/pull/266) and adds the English GPT model GPT2-base-en; | ||

| - [2 Mar 2023] Released v1.6.1, adding the Galactica model [#234](https://github.com/FlagAI-Open/FlagAI/pull/234), BMInf, a low-resource toolkit for large-model inference [#238](https://github.com/FlagAI-Open/FlagAI/pull/238), and a P-tuning example [#227](https://github.com/FlagAI-Open/FlagAI/pull/227) | ||

| - [12 Jan 2023] Released v1.6.0, adding support for the parallel training library [**BMTrain**](https://github.com/OpenBMB/BMTrain) and integrating [**Flash Attention**](https://github.com/HazyResearch/flash-attention) into the Bert and Vit models to speed up end-to-end training; for examples see [FlashAttentionBERT](https://github.com/FlagAI-Open/FlagAI/blob/master/examples/bert_title_generation_english/train_flash_atten.py) and [FlashAttentionViT](https://github.com/FlagAI-Open/FlagAI/blob/master/examples/vit_cifar100/train_single_gpu_flash_atten.py). Also added the contrastive-search-based text generation method [**SimCTG**](https://github.com/yxuansu/SimCTG) and DreamBooth personalized fine-tuning based on AltDiffusion; for an example see [AltDiffusionNaruto](https://github.com/FlagAI-Open/FlagAI/blob/master/examples/AltDiffusion/dreambooth.py). | ||

| - [12 Jan 2023] Released v1.6.0, adding support for the parallel training library [**BMTrain**](https://github.com/OpenBMB/BMTrain) and integrating [**Flash Attention**](https://github.com/HazyResearch/flash-attention) into the Bert and Vit models to speed up end-to-end training; for examples see [FlashAttentionBERT](https://github.com/FlagAI-Open/FlagAI/blob/master/examples/bert_title_generation_english/train_flash_atten.py) and [FlashAttentionViT](https://github.com/FlagAI-Open/FlagAI/blob/master/examples/vit_cifar100/train_single_gpu_flash_atten.py). Also added the contrastive-search-based text generation method [**SimCTG**](https://github.com/yxuansu/SimCTG) and DreamBooth personalized fine-tuning based on AltDiffusion; for an example see [AltDiffusionNaruto](https://github.com/FlagAI-Open/FlagAI/blob/master/examples/AltDiffusion/dreambooth.py). | ||

| - [28 Nov 2022] Released v1.5.0, supporting the 1.1B-parameter [**EVA-CLIP**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/EVA_CLIP) and [ALM: an Arabic large language model based on GLM]; for an example see [**ALM**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/ALM) | ||

| - [10 Nov 2022] Released v1.4.0, supporting [AltCLIP: Altering the Language Encoder in CLIP for Extended Language Capabilities](https://arxiv.org/abs/2211.06679v1); for examples see [**AltCLIP**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltCLIP) and [**AltDiffusion**](https://github.com/FlagAI-Open/FlagAI/tree/master/examples/AltDiffusion) | ||

| - [29 Aug 2022] Released v1.3.0, adding the CLIP module and a redesigned tokenizer API: [#81](https://github.com/FlagAI-Open/FlagAI/pull/81) | ||

| - [21 Jul 2022] Released v1.2.0, supporting the ViT family of models: [#71](https://github.com/FlagAI-Open/FlagAI/pull/71) | ||

| - [29 Jun 2022] Released v1.1.0, supporting loading, fine-tuning, and inference for OPT [#63](https://github.com/FlagAI-Open/FlagAI/pull/63) | ||

| - [17 May 2022] Made our first contribution [#1](https://github.com/FlagAI-Open/FlagAI/pull/1) | ||

|

|

||

| ## License | ||

| ## License | ||

|

|

||

|

|

||

| Most of the FlagAI project is licensed under the [Apache 2.0 license](LICENSE), but note that some parts of the code are under other licenses: | ||

|

|

@@ -299,4 +302,4 @@ FlagAI飞智大部分项目基于 [Apache 2.0 license](LICENSE),但是请注 | |

|

|

||

| ] | ||

|

|

||

| </div> | ||

| </div> | ||


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,8 @@ | ||

| export PYTHONPATH=`pwd`:$PYTHONPATH | ||

|

|

||

| if [ -f ".env" ]; then | ||

| set -o allexport | ||

| source .env | ||

| set +o allexport | ||

| fi | ||

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,9 @@ | ||

| .env | ||

| .DS_Store | ||

| __pycache__/ | ||

|

|

||

| /onnx/* | ||

| !onnx/.keep | ||

|

|

||

| dist/ | ||

| model/ |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1 @@ | ||

| python 3.10.11 |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,38 @@ | ||

| FROM ubuntu:23.10 | ||

|

|

||

| WORKDIR app | ||

|

|

||

| ADD ./version.txt version | ||

|

|

||

| # sed -i s/ports.ubuntu.com/mirrors.aliyun.com/g /etc/apt/sources.list &&\ | ||

| # sed -i s/archive.ubuntu.com/mirrors.aliyun.com/g /etc/apt/sources.list &&\ | ||

| # sed -i s/security.ubuntu.com/mirrors.aliyun.com/g /etc/apt/sources.list &&\ | ||

| # pip install -i https://mirrors.aliyun.com/pypi/simple/ \ | ||

|

|

||

| RUN \ | ||

| apt-get update && \ | ||

| apt-get install -y git pkg-config bash \ | ||

| python3-full python3-pip python3-aiohttp &&\ | ||

| update-alternatives --install /usr/bin/python python /usr/bin/python3 1 &&\ | ||

| pip install --break-system-packages \ | ||

| setuptools==66.0.0 \ | ||

| urllib3==1.26.16 \ | ||

| scipy transformers huggingface_hub packaging \ | ||

| tqdm requests cython \ | ||

| torch onnx && apt-get clean -y | ||

|

|

||

| ARG GIT | ||

| ENV GIT=$GIT | ||

|

|

||

| RUN git clone --depth=1 $GIT | ||

|

|

||

| ADD os/ / | ||

|

|

||

| RUN cd FlagAI &&\ | ||

| rm setup.cfg &&\ | ||

| python setup.py install | ||

|

|

||

| RUN pip uninstall -y google-auth | ||

|

|

||

| ENV PYTHONPATH /app | ||

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,95 @@ | ||

| # ONNX model export for AltCLIP | ||

|

|

||

| ## What is ONNX? | ||

|

|

||

| ONNX (Open Neural Network Exchange) is an intermediate representation format for converting models between deep learning training and inference frameworks. | ||

|

|

||

| In production, you can train a model with PyTorch or TensorFlow, export it to ONNX format, and then run the ONNX model directly with ONNX Runtime. | ||

|

|

||

| Using ONNX reduces a model's dependencies and lowers deployment cost. | ||

|

|

||

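| You can also go a step further and convert the ONNX model into a format supported by the runtime on the target device, such as [TensorRT Engine](https://developer.nvidia.com/tensorrt), [NCNN](https://github.com/Tencent/ncnn), or [MNN](https://github.com/alibaba/MNN), to optimize performance. | ||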

|

|

||

| ## Download the AltCLIP ONNX models | ||

|

|

||

| You can download the packaged onnx files from [xxai/AltCLIP](https://huggingface.co/xxai/AltCLIP/tree/main) and extract them into `FlagAI/onnx/onnx/`. | ||

|

|

||

| This lets you run the onnx tests directly, without downloading the original model and running the export. | ||

|

|

||

| ## File overview | ||

|

|

||

| Because flagai has complex dependencies, a container is built to make exporting easier. | ||

|

|

||

| ### Scripts | ||

|

|

||

| * `./build.sh` builds the container locally | ||

|

|

||

| You can set the environment variable `ORG=xxai` to use the [prebuilt image on hub.docker.com](https://hub.docker.com/repository/docker/xxai/altclip-onnx). | ||

|

|

||

| For example, run `ORG=xxai ./bash.sh`. | ||

|

|

||

| * `./bash.sh` opens a bash shell inside the container locally, which is handy for debugging | ||

|

|

||

| * `./export.sh` runs the container and exports the onnx | ||

|

|

||

| Set the environment variable `MODEL` to choose which model the export and test scripts use. | ||

|

|

||

| The default exported model is AltCLIP-XLMR-L-m18. | ||

|

|

||

| Other available models: | ||

|

|

||

| - AltCLIP-XLMR-L | ||

| - AltCLIP-XLMR-L-m9 | ||

|

|

||

| * `./dist.sh` runs the container, exports the onnx for the 3 models above, and packages them into the dist directory. | ||
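The `MODEL` variable described above can be pictured with a short Python sketch. The scripts themselves are not shown in this diff, so the helper name `resolve_model` and the validation step are assumptions made for illustration; only the three model names and the default come from the README.

```python
import os

# Model names listed in the README; the first one is the documented default.
SUPPORTED_MODELS = ("AltCLIP-XLMR-L-m18", "AltCLIP-XLMR-L", "AltCLIP-XLMR-L-m9")
DEFAULT_MODEL = SUPPORTED_MODELS[0]


def resolve_model(environ=None):
    """Hypothetical helper: read MODEL, falling back to the default when unset or empty."""
    env = os.environ if environ is None else environ
    name = env.get("MODEL", "").strip() or DEFAULT_MODEL
    if name not in SUPPORTED_MODELS:
        # Rejecting unknown names is an assumption; the real scripts may behave differently.
        raise ValueError(f"unsupported MODEL {name!r}; expected one of {SUPPORTED_MODELS}")
    return name


if __name__ == "__main__":
    print(resolve_model())
```

Under these assumptions, running with `MODEL=AltCLIP-XLMR-L-m9` set in the environment selects that model, and leaving it unset selects AltCLIP-XLMR-L-m18.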

|

|

||

| ### Directories | ||

|

|

||

| * model/ stores the downloaded models | ||

| * onnx/ stores the exported onnx; extract downloaded onnx files here as well | ||

|

|

||

| ### Testing | ||

|

|

||

| #### Installing dependencies for the onnx models | ||

|

|

||

| The code under test/onnx has very few dependencies: only transformers and onnxruntime, with no dependency on flagai. | ||

|

|

||

| onnxruntime is available in many builds; see [onnxruntime](https://onnxruntime.ai/). | ||

|

|

||

| For python, the commonly recommended runtimes are: | ||

|

|

||

| * GPU `pip install onnxruntime-gpu` | ||

| * ARM-based Mac `pip install onnxruntime-silicon` (does not support python3.11 yet) | ||

| * Intel CPU `pip install onnxruntime-openvino` | ||

| * Other CPUs `pip install onnxruntime` | ||

|

|

||

| Running [./test/onnx/setup.sh](./test/onnx/setup.sh) detects the environment automatically and installs a suitable onnxruntime build along with transformers. | ||
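The contents of `setup.sh` are not part of this excerpt, so the following is only a sketch of how the package recommendations listed above could be turned into a selection rule. The function `pick_onnxruntime_package` and its parameters are hypothetical; GPU and CPU-vendor detection is left to the caller because it is platform specific.

```python
import platform


def pick_onnxruntime_package(system=None, machine=None,
                             has_nvidia_gpu=False, cpu_vendor=""):
    """Hypothetical helper: map an environment onto the packages the README recommends."""
    system = system or platform.system()
    machine = (machine or platform.machine()).lower()
    if has_nvidia_gpu:
        return "onnxruntime-gpu"          # GPU
    if system == "Darwin" and machine in ("arm64", "aarch64"):
        return "onnxruntime-silicon"      # ARM-based Mac
    if "intel" in cpu_vendor.lower():
        return "onnxruntime-openvino"     # Intel CPU
    return "onnxruntime"                  # any other CPU


if __name__ == "__main__":
    print("pip install", pick_onnxruntime_package())
```

The order of the checks (GPU first, then Apple silicon, then Intel) is a design assumption; the real script may prioritize differently.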

|

|

||

| #### Test scripts for the onnx models | ||

|

|

||

| First install [direnv](https://github.com/direnv/direnv/blob/master/README.md) and run `direnv allow` in this directory, or manually `source .envrc`, to set the PYTHONPATH environment variable. | ||

|

|

||

| * [./test/onnx/onnx_img.py](./test/onnx/onnx_img.py) generates image vectors | ||

| * [./test/onnx/onnx_txt.py](./test/onnx/onnx_txt.py) generates text vectors | ||

| * [./test/onnx/onnx_test.py](./test/onnx/onnx_test.py) matches image vectors against text vectors for zero-shot classification | ||
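As a rough illustration of what matching image vectors against text vectors means, here is a dependency-free sketch of CLIP-style zero-shot classification. It is not the code in `onnx_test.py`, which is not shown here; the function names are made up for this example, and the logit scale of 100 is an assumption borrowed from common CLIP practice.

```python
import math


def _unit(vec):
    """Scale a vector to unit L2 length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def zero_shot_probs(image_vec, text_vecs, scale=100.0):
    """Softmax over scaled cosine similarities between one image and several texts."""
    img = _unit(image_vec)
    logits = [scale * sum(a * b for a, b in zip(img, _unit(t))) for t in text_vecs]
    top = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Toy 2-d vectors stand in for real image and text embeddings.
    labels = ["a photo of a cat", "a photo of a dog"]
    probs = zero_shot_probs([0.9, 0.1], [[1.0, 0.0], [0.0, 1.0]])
    print(max(zip(probs, labels))[1])
```

The label whose text vector has the highest probability is taken as the predicted class.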

|

|

||

| If you want to store the generated text and image vectors in a database for similarity search, normalize the features first. | ||

|

|

||

| ```python | ||

| image_features /= image_features.norm(dim=-1, keepdim=True) | ||

| text_features /= text_features.norm(dim=-1, keepdim=True) | ||

| ``` | ||
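The reason for normalizing first is that, for unit-length vectors, the dot product equals the cosine similarity, so a database can rank by plain inner product. The sketch below shows this with an in-memory dictionary standing in for a vector database; `l2_normalize`, `top_k`, and the file names are illustrative assumptions, not part of the repository.

```python
import math


def l2_normalize(vec):
    """Return vec scaled to unit L2 norm (the same operation as the snippet above)."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def top_k(query, stored, k=3):
    """Rank stored unit vectors by inner product with the normalized query."""
    q = l2_normalize(query)
    scored = [(sum(a * b for a, b in zip(q, vec)), key) for key, vec in stored.items()]
    scored.sort(reverse=True)
    return [(key, score) for score, key in scored[:k]]


# Toy "database": keys are hypothetical file names, values are normalized vectors.
index = {
    "cat.jpg": l2_normalize([0.9, 0.1, 0.0]),
    "dog.jpg": l2_normalize([0.1, 0.9, 0.2]),
}

if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0], index, k=1))
```

A real deployment would replace the dictionary with an index configured for inner-product or cosine distance.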

|

|

||

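| A vector database can help improve zero-shot classification accuracy; see [ECCV 2022 | No downstream training needed: Tip-Adapter greatly improves CLIP image classification accuracy](https://cloud.tencent.com/developer/article/2126102). | ||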

|

|

||

| #### pytorch models | ||

|

|

||

| Used to compare against the vector output of the onnx models and check whether they are consistent. | ||

|

|

||

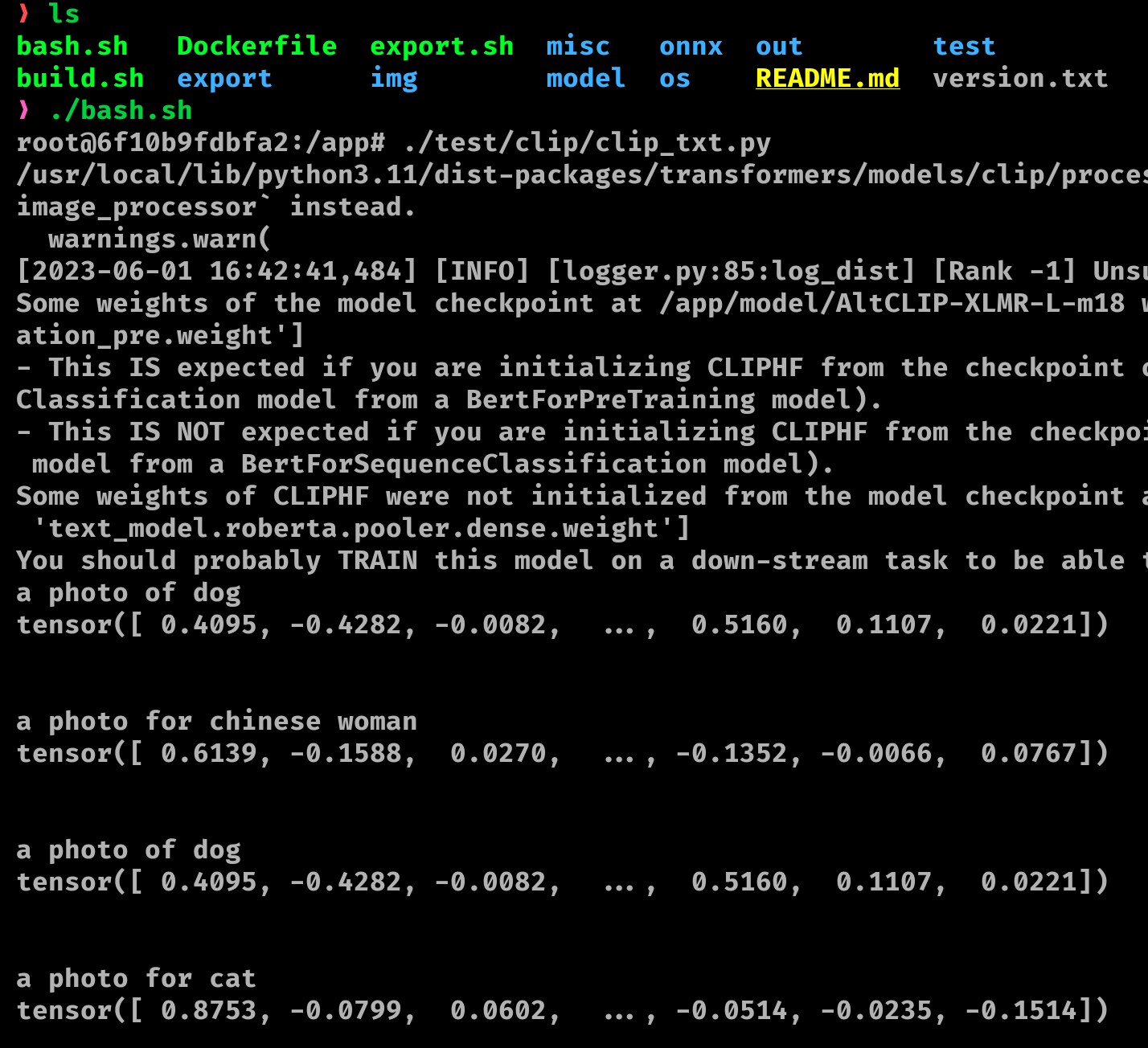

| Because this uses flagai, run [./bash.sh](./bash.sh) as shown in the figure to enter the container for running and debugging. | ||

|

|

||

|  | ||

|

|

||

| * [./test/clip/clip_img.py](./test/clip/clip_img.py) generates image vectors | ||

| * [./test/clip/clip_txt.py](./test/clip/clip_txt.py) generates text vectors | ||

| * [./test/clip/clip_test.py](./test/clip/clip_test.py) matches image vectors against text vectors for zero-shot classification |
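One way to check that the onnx and pytorch outputs are consistent is to compare the two vectors element by element and by direction. The sketch below assumes both vectors have already been converted to plain Python lists; the helper names and the tolerances (`atol=1e-3`, `min_cosine=0.999`) are illustrative choices, not values taken from the repository.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vectors_agree(onnx_vec, torch_vec, atol=1e-3, min_cosine=0.999):
    """True when the vectors match within an absolute tolerance and point the same way."""
    if len(onnx_vec) != len(torch_vec):
        return False
    max_diff = max(abs(x - y) for x, y in zip(onnx_vec, torch_vec))
    return max_diff <= atol and cosine_similarity(onnx_vec, torch_vec) >= min_cosine


if __name__ == "__main__":
    print(vectors_agree([0.1, 0.2, 0.3], [0.1001, 0.1999, 0.3]))
```

Small differences are expected after export because of floating-point and operator-implementation details, which is why a tolerance is used rather than exact equality.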
