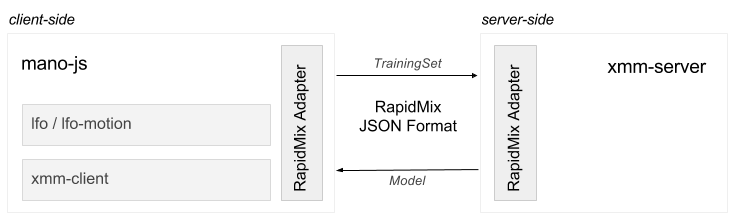

mano-js is a library for sensor processing, gesture modeling, and gesture recognition. It is designed as a high-level client-side wrapper around waves-lfo, lfo-motion, and xmm-client.

```sh
npm install [--save --save-exact] ircam-rnd/mano-js
```

```js
import * as mano from 'mano-js/client';

const processedSensors = new mano.ProcessedSensors();
const example = new mano.Example();
const trainingSet = new mano.TrainingSet();
const xmmProcessor = new mano.XmmProcessor();

// record an example: each frame of processed sensor data is appended to it
example.setLabel('test');
processedSensors.addListener(example.addElement);

// later...
processedSensors.removeListener(example.addElement);

// serialize the example and add it to the training set
const rapidMixJsonExample = example.toJSON();
trainingSet.addExample(rapidMixJsonExample);
const rapidMixJsonTrainingSet = trainingSet.toJSON();

// train the model, then decode incoming sensor data
xmmProcessor
  .train(rapidMixJsonTrainingSet)
  .then(() => {
    // start decoding
    processedSensors.addListener(data => {
      const results = xmmProcessor.run(data);
      console.log(results);
    });
  });
```

By default, training is performed by calling the dedicated service available at https://como.ircam.fr/api/v1/train. A similar service can be deployed using the xmm-node and rapid-mix adapters libraries.

A concrete example of such a solution is available in examples/mano-js-example.
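The recording step in the example above passes `example.addElement` directly as a callback and later removes it by the same reference. The sketch below illustrates that general pattern in isolation. `FakeSensorSource` and `FakeRecorder` are hypothetical stand-ins written for this illustration. They are not part of mano-js and make no claim about how the library is implemented. They only show why a method used this way usually needs to be bound to its instance.

```javascript
// Hypothetical stand-ins, NOT the mano-js classes: they only mimic the
// addListener / removeListener / addElement shape used in the example above.
class FakeSensorSource {
  constructor() {
    this.listeners = new Set();
  }

  addListener(callback) {
    this.listeners.add(callback);
  }

  removeListener(callback) {
    this.listeners.delete(callback);
  }

  // Push one frame of data to every registered listener.
  emit(frame) {
    this.listeners.forEach(callback => callback(frame));
  }
}

class FakeRecorder {
  constructor() {
    this.frames = [];
    // Bind once, so that the same function reference can be added and later
    // removed, and so that `this` still refers to the recorder when the
    // method is invoked as a bare callback.
    this.addElement = this.addElement.bind(this);
  }

  addElement(frame) {
    this.frames.push(frame);
  }
}

const source = new FakeSensorSource();
const recorder = new FakeRecorder();

source.addListener(recorder.addElement);
source.emit([0.1, 0.2, 0.3]);
source.emit([0.4, 0.5, 0.6]);
source.removeListener(recorder.addElement);
source.emit([0.7, 0.8, 0.9]); // ignored: the listener was removed

console.log(recorder.frames.length); // 2
```

Without the `bind` call in the constructor, `this` would be `undefined` inside `addElement` when it is called by the source, and the push would throw. Wrapping the call in an arrow function would also work, provided the same wrapper reference is kept for the later `removeListener` call.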

The library has been developed at Ircam - Centre Pompidou by Joseph Larralde and Benjamin Matuszewski in the framework of the EU H2020 project Rapid-Mix.