In this repo, I'm developing models for a two-stage music synthesis pipeline.

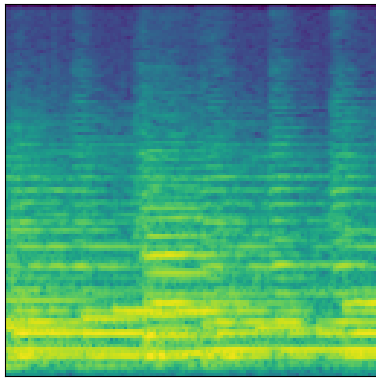

- A generative model that can produce sequences of low-frequency audio features, such as a mel spectrogram or a sequence of chroma and MFCC features.

- A conditional generative model that can produce raw audio from the low-frequency features.
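As a rough illustration of how the two stages fit together, here is a minimal sketch of the data flow. Everything in it is an assumption made for illustration: the names `generate_features` and `synthesize_audio`, the sample rate, the hop length, and the feature dimension are hypothetical and are not taken from this repo. Both functions are trivial stand-ins for learned models, so the sketch only shows the shapes involved: a short sequence of feature frames is expanded into a much longer sequence of audio samples.

```python
import math
import random

# All constants below are assumed values for illustration only.
SAMPLE_RATE = 22050  # audio samples per second
HOP_LENGTH = 256     # audio samples covered by one feature frame
N_FEATURES = 8       # feature dimension, e.g. number of mel bands


def generate_features(n_frames, seed=0):
    """Stage 1 stand-in: return n_frames feature vectors.

    A real model would sample a plausible feature sequence. Here the
    values are random and only fix the shape: n_frames x N_FEATURES.
    """
    rng = random.Random(seed)
    return [[rng.random() for _ in range(N_FEATURES)] for _ in range(n_frames)]


def synthesize_audio(features):
    """Stage 2 stand-in: map each feature frame to HOP_LENGTH samples.

    A real vocoder would be a learned conditional model. Here the mean
    of each frame simply sets the amplitude of a fixed 440 Hz sine.
    """
    audio = []
    for i, frame in enumerate(features):
        amplitude = sum(frame) / len(frame)
        for n in range(HOP_LENGTH):
            t = (i * HOP_LENGTH + n) / SAMPLE_RATE
            audio.append(amplitude * math.sin(2 * math.pi * 440.0 * t))
    return audio


features = generate_features(n_frames=10)
audio = synthesize_audio(features)
print(len(features), len(features[0]), len(audio))  # 10 8 2560
```

With these assumed settings, the feature sequence runs at roughly 86 frames per second (22050 / 256), while the audio runs at 22050 samples per second, which is why the second stage has to upsample by a large factor.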

The second stage is inspired by papers developing spectrogram-to-speech vocoders such as:

- MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis

- High Fidelity Speech Synthesis with Adversarial Networks

You can read more about early experiments developing models for the second stage in this blog post.