The Data Ingest component interfaces with Geoserver, allowing you to list, upload, download, update and delete vector data stores and their associated metadata. It provides both a REST API and a UI. The implementation uses the Geoserver Manager library and the Geotools WFS-NG plugin to communicate with Geoserver.

This is a Spring Boot project. You can compile and run it on the command line with:

mvn spring-boot:run

This will build a servlet and deploy it on a web server started on port 8080:

http://localhost:8080

The build uses the checkstyle Maven plugin to report on the coding style used by developers, and the findbugs Maven plugin, which looks for bug patterns. If you want to deactivate them for a build, run:

mvn clean install -Drelax

The docker folder contains an orchestration with the complete service stack for a development environment.

For detailed instructions on how to run the docker composition, which instantiates a service stack, please refer to the related README file. Note that although a copy of the data-ingest service is included in the composition, you will need to run a separate instance. The docker composition is intended for development only and is designed to interact with either the data-ingest service or the harvester running outside the container.

There is a batch of unit tests associated with this build. If you want to build without running the tests, run:

mvn clean install -DskipTests

On the other hand, if you just want to run the test suite, type:

mvn test

The unit tests use a docker junit rule, which launches a container with Geoserver. In some cases, to get it working, you may need to set the DOCKER_HOST environment variable. On Unix-like systems:

export DOCKER_HOST=$DOCKER_HOST:$DOCKER_PORT

For instance:

export DOCKER_HOST=localhost:2375

In a bash shell, you can set it permanently by adding this instruction to your ~/.bashrc or ~/.bash_profile.

On OS X, the docker daemon does not listen on this address, so you should not set the DOCKER_HOST variable.

You can, however, fake the unix docker daemon with this workaround:

socat TCP-LISTEN:2375,reuseaddr,fork,bind=localhost UNIX-CONNECT:/var/run/docker.sock &

To speed up downloads, we implemented a file cache, which stores physical files on disk. The cache uses an in-memory structure (a hash map) to store the references to the physical files.

When the application starts, the file cache is initialized with three parameters, which are set in the application.properties file:

- cache.capacity: the limit of the cache on disk (in bytes)

- cache.path: the disk path of the cache

- cache.name: the name of the cache, which will be the name of a subdirectory to be created under cache.path

The cache.path parameter can be empty, in which case it will default to the TMP directory of the OS. All other variables are mandatory.

As an example, for cache.path=/tmp and cache.name=cache, a directory named /tmp/cache would be created on startup. If that directory already exists and has adequate permissions, it is reused; otherwise an error is thrown. Please note that any error in the cache initialization prevents the application from starting.
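The directory rules described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the class and method names are hypothetical, and the exact permission checks that count as "adequate" are an assumption.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Hypothetical helper illustrating the startup rules; not the project's class. */
final class CacheDirectorySketch {

    /**
     * Resolves the cache directory from cache.path and cache.name.
     * An empty cache.path falls back to the temporary directory of the OS.
     */
    static Path prepare(String cachePath, String cacheName) {
        if (cacheName == null || cacheName.isEmpty()) {
            throw new IllegalArgumentException("cache.name is mandatory");
        }
        String base = (cachePath == null || cachePath.isEmpty())
                ? System.getProperty("java.io.tmpdir")
                : cachePath;
        Path dir = Paths.get(base, cacheName);
        try {
            if (Files.exists(dir)) {
                // Assumption: "adequate permissions" means a readable, writable directory.
                if (!Files.isDirectory(dir) || !Files.isReadable(dir) || !Files.isWritable(dir)) {
                    throw new IOException("Cache directory is not usable: " + dir);
                }
            } else {
                Files.createDirectories(dir);
            }
        } catch (IOException e) {
            // Any failure here should stop the application from starting.
            throw new UncheckedIOException(e);
        }
        return dir;
    }
}
```

Calling `CacheDirectorySketch.prepare("/tmp", "cache")` would return `/tmp/cache`, creating it if needed.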

When the application exits, the cache directory is emptied and removed.

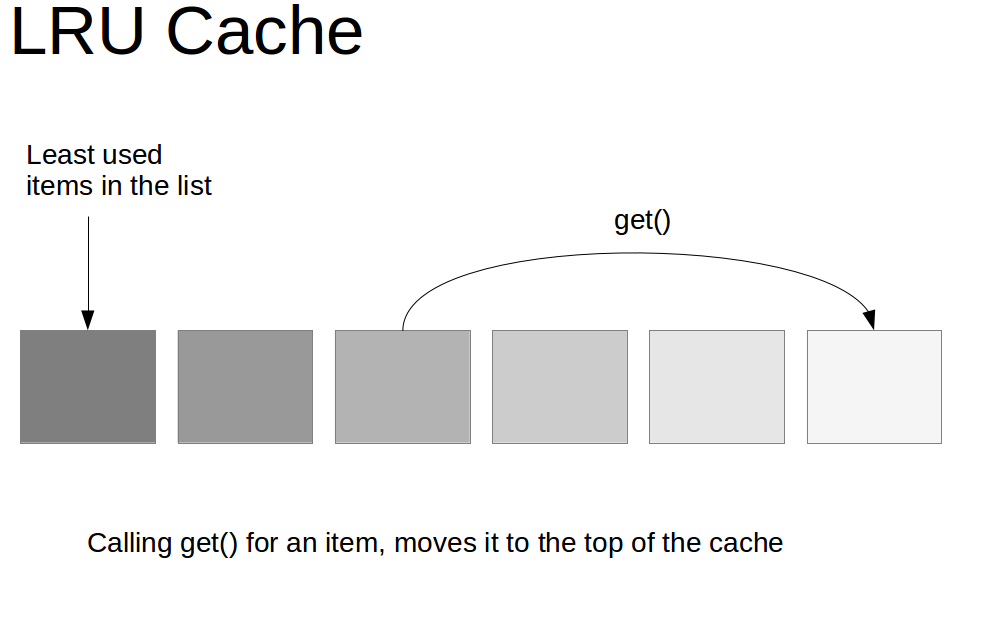

To evict entries from the cache, we implemented an LRU (least recently used) policy. This is a well-known algorithm, which discards the least recently used items first.

Eviction is triggered when the file requested for download would bring the cache to or beyond its capacity. If the requested file is larger than the cache capacity, an error is thrown, prompting the user to review the cache configuration; this error means that the file cannot be downloaded unless the application is started with a larger cache.
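As an illustration of this policy, here is a minimal sketch of a byte-bounded LRU index built on `java.util.LinkedHashMap` in access order. It is not the project's implementation: the class name, the `workspace:dataset` key format and the exception type are assumptions, and a real cache would also delete the evicted files from disk.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

/** Illustrative LRU index of cached file sizes; names are hypothetical. */
final class LruFileIndexSketch {
    private final long capacityBytes;
    private long usedBytes = 0;
    // accessOrder=true: iteration runs from least to most recently used.
    private final LinkedHashMap<String, Long> sizes = new LinkedHashMap<>(16, 0.75f, true);

    LruFileIndexSketch(long capacityBytes) {
        this.capacityBytes = capacityBytes;
    }

    /** Returns true on a cache hit and marks the entry as recently used. */
    boolean touch(String key) {
        return sizes.get(key) != null;
    }

    /** Registers a downloaded file, evicting least recently used entries first. */
    void put(String key, long fileBytes) {
        if (fileBytes > capacityBytes) {
            throw new IllegalArgumentException("File exceeds cache capacity; review cache.capacity");
        }
        Long previous = sizes.remove(key);
        if (previous != null) {
            usedBytes -= previous;
        }
        Iterator<Map.Entry<String, Long>> it = sizes.entrySet().iterator();
        // Evict while the new file would bring the cache to or beyond capacity.
        while (usedBytes + fileBytes >= capacityBytes && it.hasNext()) {
            Map.Entry<String, Long> eldest = it.next();
            usedBytes -= eldest.getValue();
            it.remove(); // a real cache would delete the file on disk here
        }
        sizes.put(key, fileBytes);
        usedBytes += fileBytes;
    }

    long usedBytes() {
        return usedBytes;
    }
}
```

With a capacity of 100 bytes, storing `ws:a` and `ws:b` (40 bytes each), touching `ws:a`, and then storing `ws:c` (40 bytes) evicts `ws:b`, because it is the least recently used entry.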

In principle, subsequent requests for the same workspace:dataset will hit the cache. However, there are situations in which we want to invalidate the cache and force a new download request. As a rule of thumb, we do not want to use the cache when it no longer represents an accurate image of reality. More specifically, since the cache mirrors the files available on Geoserver, we know that a cached file is no longer valid if:

- We remove that file from Geoserver.

- We update that file in GeoServer.

For removal and update events within the Data-Ingest API, we can trigger a cache invalidation, which will force a new download request. However, GeoServer can also be accessed outside the API, and in that case we have no way of observing these events. To mitigate this problem, we implemented a couple of strategies. To identify updated files, when a file is requested from the cache we compare the size of the file on disk with its size on GeoServer (by issuing a request for the size headers). This will not detect a file that is updated and keeps the same size, but it will detect the most common scenario, in which an updated file changes size.

As a last resort, to prevent cached files from living forever, we also implemented a cache validity (in seconds), which is configurable in application.properties with the variable param.download.max.age.file. Every time we hit the cache, we check the age of that file and decide whether it should be invalidated.

Finally, to free disk resources from files that no longer exist in GeoServer, we added a function that removes a file from the cache, whenever GeoServer does not list this file anymore. This function is triggered in the download request, which has a call to check if the file exists in GeoServer.
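The three checks described above (existence on GeoServer, size comparison, maximum age) can be combined into a single decision. The sketch below is only an illustration: the class, enum and parameter names are hypothetical, the order of the checks is an assumption, and the inputs are passed in as plain values rather than fetched from GeoServer.

```java
/** Illustrative freshness check for a cached file; names are hypothetical. */
final class CacheFreshnessSketch {

    enum Verdict { FRESH, REMOVED_UPSTREAM, SIZE_CHANGED, EXPIRED }

    /**
     * @param existsOnServer whether GeoServer still lists the file
     * @param cachedBytes    size of the file on disk
     * @param remoteBytes    size reported by GeoServer
     * @param cachedAtMillis time the file entered the cache
     * @param nowMillis      current time
     * @param maxAgeSeconds  value of param.download.max.age.file
     */
    static Verdict check(boolean existsOnServer, long cachedBytes, long remoteBytes,
                         long cachedAtMillis, long nowMillis, long maxAgeSeconds) {
        if (!existsOnServer) {
            return Verdict.REMOVED_UPSTREAM; // drop the file from the cache
        }
        if (cachedBytes != remoteBytes) {
            return Verdict.SIZE_CHANGED; // updated outside the API
        }
        if (nowMillis - cachedAtMillis > maxAgeSeconds * 1000L) {
            return Verdict.EXPIRED; // older than the configured validity
        }
        return Verdict.FRESH;
    }
}
```

Any verdict other than `FRESH` would invalidate the cached entry and force a new download.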

The data ingest API lets you upload zipped Shapefiles directly to the connected GeoServer instance.

You can upload a file to an existing workspace WWWWW, defining the new datastore and published layer DDDDD, by sending a POST request to http://YOURHOST:PORT/workspaces/WWWWW/datasets/DDDDD

Example:

curl -v -F file=@/PATHTOYOURFILE/DDDDD.zip -X POST http://YOURHOST:PORT/workspaces/WWWWW/datasets/DDDDD

You can update an existing datastore DDDDD in an existing workspace WWWWW by uploading the shapefile DDDDD.zip with a PUT request to http://YOURHOST:PORT/workspaces/WWWWW/datasets/DDDDD

Example:

curl -v -F file=@/PATHTOYOURFILE/DDDDD.zip -X PUT http://YOURHOST:PORT/workspaces/WWWWW/datasets/DDDDD

These methods are asynchronous with respect to the actual upload, so they only return immediate feedback on the validation of the file, together with the ticket number associated with the request (TICKETNUM). To check the status of the request, use http://YOURHOST:PORT/checkUploadStatus/TICKETNUM.

- The uploaded file must be archived in ZIP format

- The name of the zip file must be the same as that of the datastore and of the layer to which it will be uploaded

- The files in the zip must have the same name as the zip file

- The zip file must contain a single shapefile and the other mandatory files

- The shapefile must specify a recognised CRS

.shp — shape format; the feature geometry itself

.shx — shape index format; a positional index of the feature geometry to allow seeking forwards and backwards quickly

.dbf — attribute format; columnar attributes for each shape, in dBase IV format
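The naming rules above can be checked before uploading. The following sketch is an assumption about how such a check might look, not the validation the service performs: the class and method names are hypothetical, it works on a plain list of entry names, it assumes a flat archive and single-extension file names, and it does not inspect the CRS.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Optional;
import java.util.Set;

/** Illustrative pre-upload check of a zipped shapefile; names are hypothetical. */
final class ShapefileZipCheckSketch {
    private static final List<String> MANDATORY = Arrays.asList(".shp", ".shx", ".dbf");

    /** Returns the first problem found, or an empty Optional if the names pass. */
    static Optional<String> firstProblem(String zipFileName, String datasetName,
                                         List<String> entryNames) {
        if (!zipFileName.equals(datasetName + ".zip")) {
            return Optional.of("zip name must match the datastore name");
        }
        Set<String> extensions = new HashSet<>();
        int shpCount = 0;
        for (String entry : entryNames) {
            int dot = entry.lastIndexOf('.');
            String base = dot < 0 ? entry : entry.substring(0, dot);
            String ext = dot < 0 ? "" : entry.substring(dot).toLowerCase(Locale.ROOT);
            if (!base.equals(datasetName)) {
                return Optional.of("entry " + entry + " does not share the zip file name");
            }
            if (ext.equals(".shp")) {
                shpCount++;
            }
            extensions.add(ext);
        }
        if (shpCount != 1) {
            return Optional.of("exactly one .shp file is expected");
        }
        for (String required : MANDATORY) {
            if (!extensions.contains(required)) {
                return Optional.of("missing mandatory file " + datasetName + required);
            }
        }
        return Optional.empty();
    }
}
```

In practice the entry names could be read with `java.util.zip.ZipFile` and passed to `firstProblem` together with the zip file name and the target datastore name.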

A Swagger based UI for the API is available at:

http://YOURHOST:PORT/swagger-ui.html

The JSON representation is available at:

http://YOURHOST:PORT/v2/api-docs