This guide demonstrates how to install IPEX-LLM on Linux with Intel GPUs. It applies to Intel Data Center GPU Flex Series and Max Series, as well as Intel Arc Series GPU and Intel iGPU.

IPEX-LLM currently supports the Ubuntu 20.04 operating system and later, and supports PyTorch 2.0 and PyTorch 2.1 on Linux. This page demonstrates IPEX-LLM with PyTorch 2.1. Check the Installation page for more details.
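If you would like to check the operating system requirement from a script, the following is a minimal sketch. It assumes the standard ID and VERSION_ID fields of the os-release format; the function names are illustrative and are not part of IPEX-LLM.

```python
import re


def parse_os_release(text: str) -> dict:
    """Parse the KEY=value lines of an os-release file into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"')
    return info


def is_supported_ubuntu(info: dict, minimum=(20, 4)) -> bool:
    """Return True for Ubuntu releases at or above the minimum version."""
    if info.get("ID") != "ubuntu":
        return False
    match = re.match(r"(\d+)\.(\d+)", info.get("VERSION_ID", ""))
    if not match:
        return False
    return (int(match.group(1)), int(match.group(2))) >= minimum
```

On the target machine you could pass in the contents of /etc/os-release, for example `is_supported_ubuntu(parse_os_release(open("/etc/os-release").read()))`.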

- Install Prerequisites

- Install ipex-llm

- Verify Installation

- Runtime Configurations

- A Quick Example

- Tips & Troubleshooting

For Intel Core™ Ultra Processors (Series 1) with processor number 1xxH/U/HL/UL (code name Meteor Lake)

Note

IPEX-LLM on Linux with the Meteor Lake integrated GPU has so far been verified on Ubuntu 22.04 with kernel 6.5.0-35-generic.

You can check your current kernel version with:

uname -r

If the version displayed is not 6.5.0-35-generic, downgrade or upgrade the kernel to the recommended version with:

export VERSION="6.5.0-35"

sudo apt-get install -y linux-headers-$VERSION-generic linux-image-$VERSION-generic linux-modules-extra-$VERSION-generic

sudo sed -i "s/GRUB_DEFAULT=.*/GRUB_DEFAULT=\"1> $(echo $(($(awk -F\' '/menuentry / {print $2}' /boot/grub/grub.cfg \
| grep -no $VERSION | sed 's/:/\n/g' | head -n 1)-2)))\"/" /etc/default/grub

sudo update-grub

Then reboot your machine:

sudo reboot

After rebooting, run uname -r again to confirm that your kernel version has changed to 6.5.0-35-generic.
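The same kernel check can also be scripted. The sketch below is only an illustration: the helper name and the constant are made up for this example, and it assumes that an exact string match against the verified version is what you want.

```python
import platform

VERIFIED_KERNEL = "6.5.0-35-generic"  # the version named in this guide


def kernel_matches(release: str, expected: str = VERIFIED_KERNEL) -> bool:
    """Return True when the kernel release string equals the verified one."""
    return release.strip() == expected


if __name__ == "__main__":
    current = platform.release()  # the same string that `uname -r` prints on Linux
    if kernel_matches(current):
        print(f"kernel {current}: matches the verified version")
    else:
        print(f"kernel {current}: differs from {VERIFIED_KERNEL}")
```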

Next, enable GPU driver support on kernel 6.5.0-35-generic through the force_probe parameter:

export FORCE_PROBE_VALUE=$(sudo dmesg | grep i915 | grep -o 'i915\.force_probe=[a-zA-Z0-9]\{4\}')

sudo sed -i "/^GRUB_CMDLINE_LINUX_DEFAULT=/ s/\"\(.*\)\"/\"\1 $FORCE_PROBE_VALUE\"/" /etc/default/grub

Tip

Instead of using the commands above, you can also manually check your force_probe flag value with:

sudo dmesg | grep i915

You may get output like "Your graphics device 7d55 is not properly supported by i915 in this kernel version. To force driver probe anyway, use i915.force_probe=7d55", in which 7d55 is the PCI ID, which depends on your GPU model.

Then modify the /etc/default/grub file directly, adding i915.force_probe=xxxx to the value of GRUB_CMDLINE_LINUX_DEFAULT. For example, if /etc/default/grub contains GRUB_CMDLINE_LINUX_DEFAULT="quiet splash" before modification, change it to GRUB_CMDLINE_LINUX_DEFAULT="quiet splash i915.force_probe=7d55".
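To make the two text manipulations above concrete, here is a small sketch that works on strings only and does not touch /etc/default/grub. The function names are hypothetical; the regular expression mirrors the grep pattern used above.

```python
import re

# Same pattern as the grep command: "i915.force_probe=" followed by
# four alphanumeric characters (the PCI ID).
FORCE_PROBE_RE = re.compile(r"i915\.force_probe=[a-zA-Z0-9]{4}")


def find_force_probe(dmesg_text: str):
    """Return the first i915.force_probe=XXXX flag in the log, or None."""
    match = FORCE_PROBE_RE.search(dmesg_text)
    return match.group(0) if match else None


def add_kernel_flag(grub_line: str, flag: str) -> str:
    """Append flag inside the quotes of a GRUB_CMDLINE_LINUX_DEFAULT line."""
    prefix = 'GRUB_CMDLINE_LINUX_DEFAULT="'
    if not grub_line.startswith(prefix) or not grub_line.endswith('"'):
        raise ValueError("not a GRUB_CMDLINE_LINUX_DEFAULT line")
    current = grub_line[len(prefix):-1].split()
    if flag not in current:  # avoid adding the same flag twice
        current.append(flag)
    return prefix + " ".join(current) + '"'
```

Unlike the sed command, this sketch skips the flag if it is already present, so applying it repeatedly leaves the line unchanged.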

Then update grub with:

sudo update-grub

Reboot the machine for the configuration to take effect:

sudo reboot

Compute packages are also required for Intel GPUs on Ubuntu 22.04. Install them with the following commands:

wget -qO - https://repositories.intel.com/gpu/intel-graphics.key | \
sudo gpg --yes --dearmor --output /usr/share/keyrings/intel-graphics.gpg

echo "deb [arch=amd64,i386 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/gpu/ubuntu jammy client" | \
sudo tee /etc/apt/sources.list.d/intel-gpu-jammy.list

sudo apt update

sudo apt-get install -y libze1 intel-level-zero-gpu intel-opencl-icd clinfo

To complete the GPU driver setup, make sure your user is in the render group:

sudo gpasswd -a ${USER} render

newgrp render

You can then verify that the GPU driver is functioning properly with:

clinfo | grep "Device Name"

The output should contain Intel(R) Arc(TM) Graphics or Intel(R) Graphics, depending on your GPU model.
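If you prefer to run this check from a script, the sketch below parses clinfo output that has already been captured as text. The function names are illustrative, and the only assumption about the output format is that each device line starts with the label "Device Name" followed by the device string.

```python
def device_names(clinfo_output: str) -> list:
    """Collect the values of all 'Device Name' lines from clinfo output."""
    names = []
    for line in clinfo_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Device Name"):
            names.append(stripped[len("Device Name"):].strip())
    return names


def has_intel_gpu(names) -> bool:
    """True if any name contains one of the strings this guide expects."""
    expected = ("Intel(R) Arc(TM) Graphics", "Intel(R) Graphics")
    return any(e in name for name in names for e in expected)
```

The text could come from `subprocess.run(["clinfo"], capture_output=True, text=True).stdout`, after which `has_intel_gpu(device_names(text))` reports whether an expected device was listed.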

Tip

You can refer to the official driver guide for client GPUs for more information.

-

Choose one option below depending on your CPU type:

-

Option 1: For

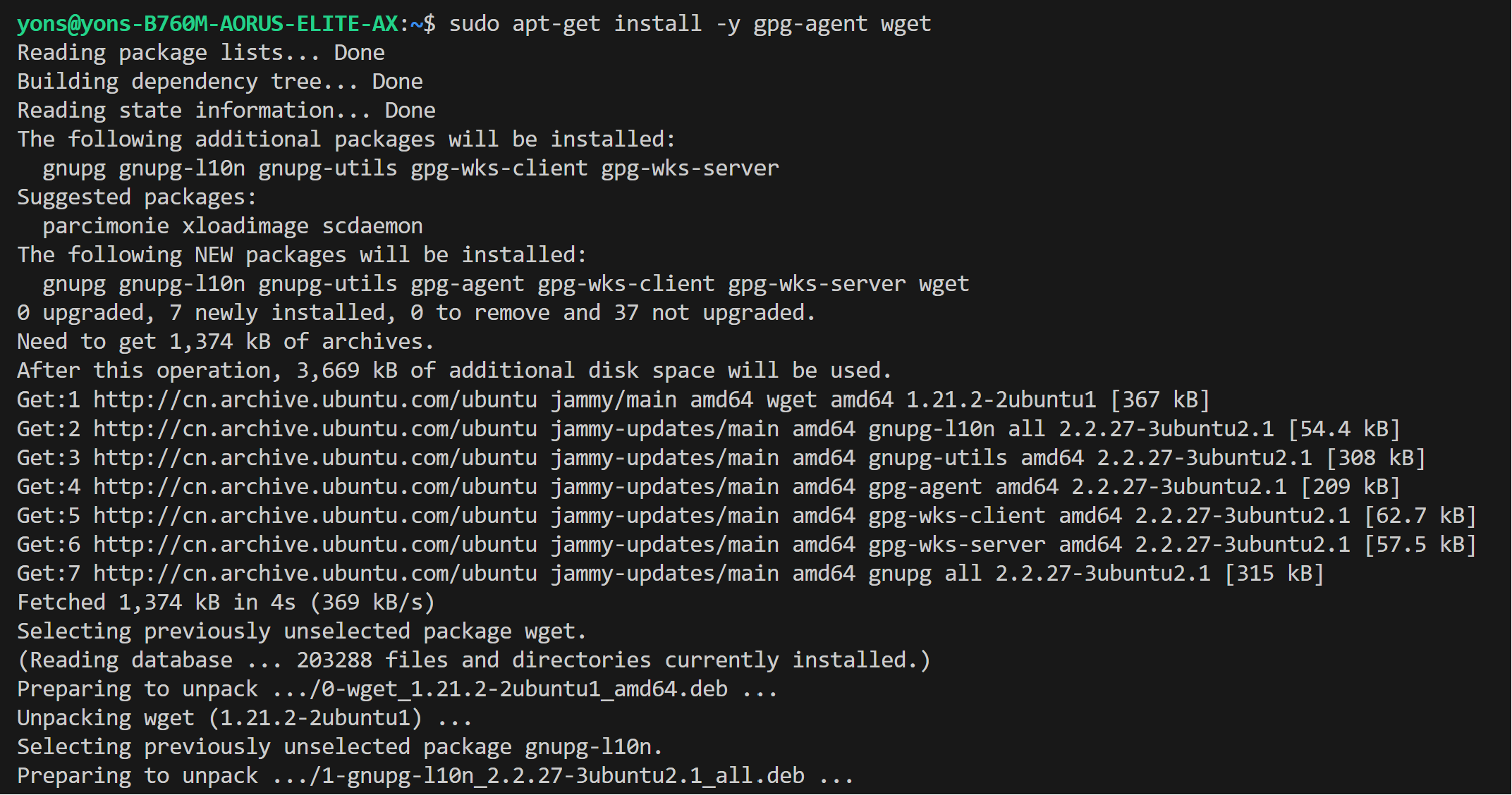

Intel Core CPUwith multiple A770 Arc GPUs. Use the following repository:sudo apt-get install -y gpg-agent wget wget -qO - https://repositories.intel.com/gpu/intel-graphics.key | \ sudo gpg --dearmor --output /usr/share/keyrings/intel-graphics.gpg echo "deb [arch=amd64,i386 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/gpu/ubuntu jammy client" | \ sudo tee /etc/apt/sources.list.d/intel-gpu-jammy.list

-

Option 2: For

Intel Xeon-W/SP CPUwith multiple A770 Arc GPUs. Use this repository for better performance:wget -qO - https://repositories.intel.com/gpu/intel-graphics.key | \ sudo gpg --yes --dearmor --output /usr/share/keyrings/intel-graphics.gpg echo "deb [arch=amd64 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/gpu/ubuntu jammy/lts/2350 unified" | \ sudo tee /etc/apt/sources.list.d/intel-gpu-jammy.list sudo apt update

-

-

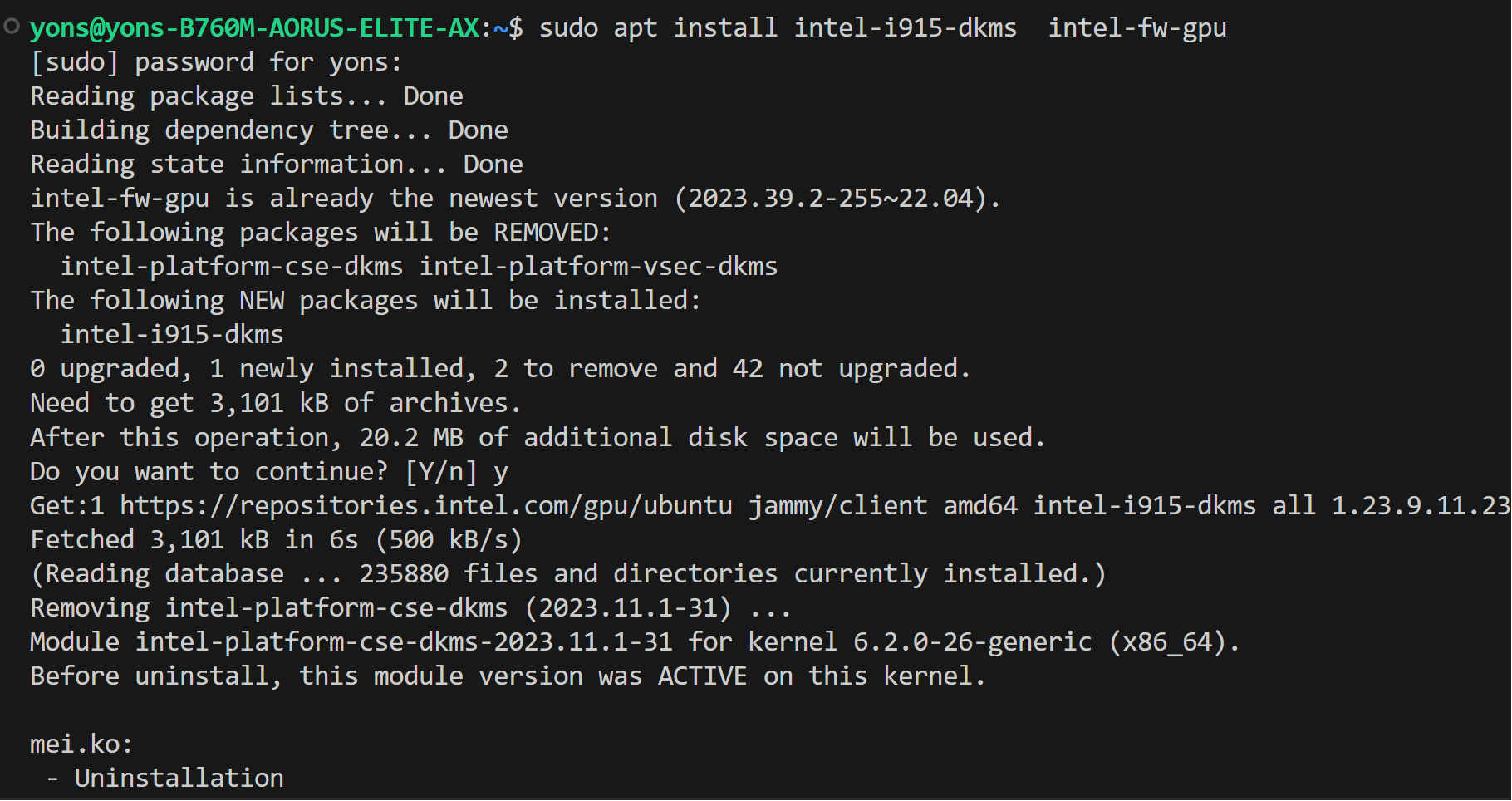

Install drivers

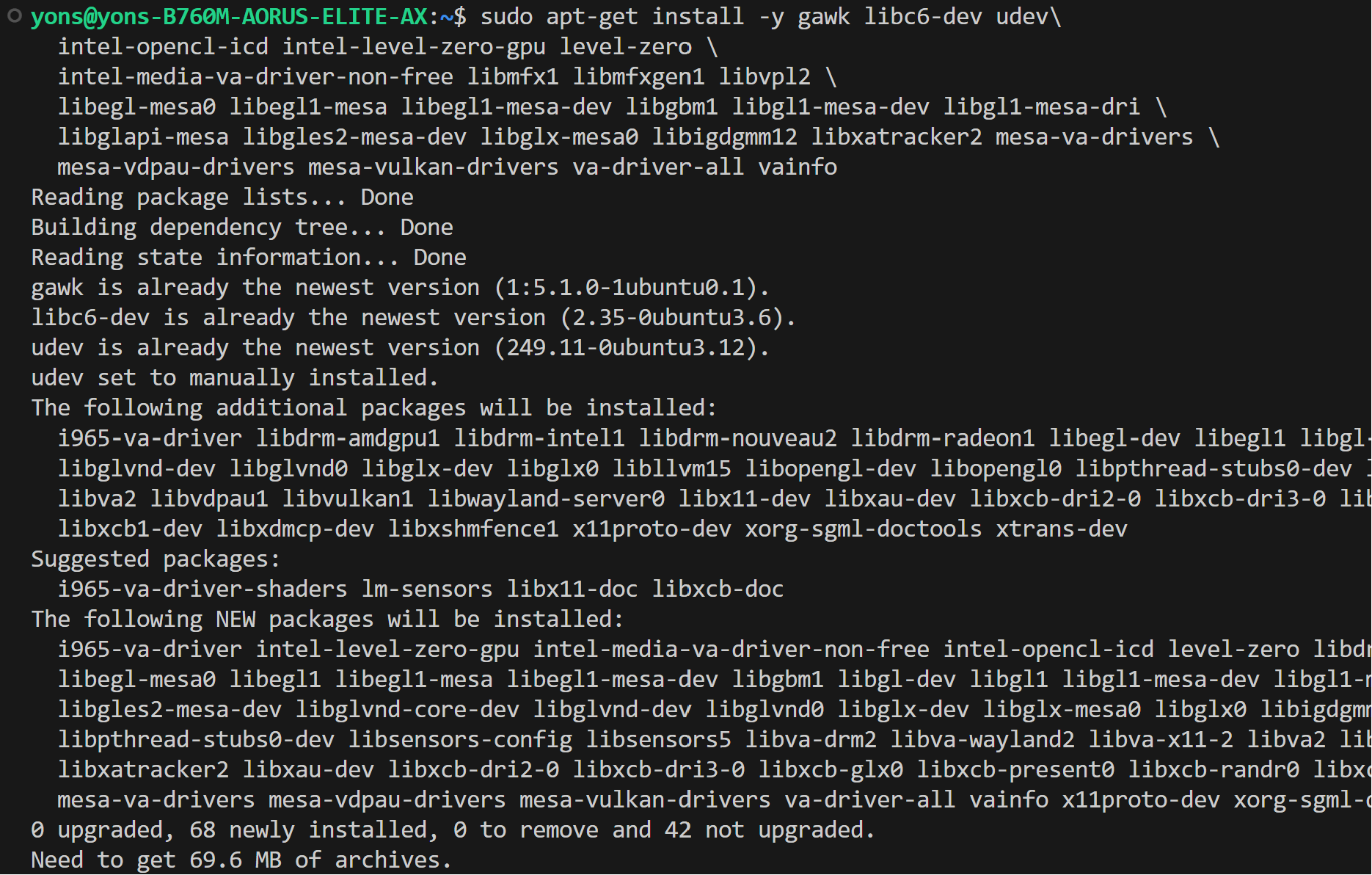

sudo apt-get update # Install out-of-tree driver sudo apt-get -y install \ gawk \ dkms \ linux-headers-$(uname -r) \ libc6-dev sudo apt install intel-i915-dkms intel-fw-gpu # Install Compute Runtime sudo apt-get install -y udev \ intel-opencl-icd intel-level-zero-gpu level-zero \ intel-media-va-driver-non-free libmfx1 libmfxgen1 libvpl2 \ libegl-mesa0 libegl1-mesa libegl1-mesa-dev libgbm1 libgl1-mesa-dev libgl1-mesa-dri \ libglapi-mesa libgles2-mesa-dev libglx-mesa0 libigdgmm12 libxatracker2 mesa-va-drivers \ mesa-vdpau-drivers mesa-vulkan-drivers va-driver-all vainfo sudo reboot

-

Configure permissions

sudo gpasswd -a ${USER} render newgrp render # Verify the device is working with i915 driver sudo apt-get install -y hwinfo hwinfo --display
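The permission step has two parts: gpasswd updates the group database, while newgrp starts a shell that actually carries the new group. The sketch below checks both separately using Python's standard grp, pwd and os modules; the helper names are made up for this example.

```python
import grp
import os
import pwd


def user_in_group(user: str, group: str = "render") -> bool:
    """Check the group database for membership (not the current session)."""
    try:
        entry = grp.getgrnam(group)
    except KeyError:
        return False  # the group does not exist on this machine
    if user in entry.gr_mem:
        return True
    # A user's primary group is not listed in gr_mem, so compare GIDs too.
    try:
        return pwd.getpwnam(user).pw_gid == entry.gr_gid
    except KeyError:
        return False


def session_has_group(group: str = "render") -> bool:
    """Check whether the current process already carries the group."""
    try:
        gid = grp.getgrnam(group).gr_gid
    except KeyError:
        return False
    return gid in os.getgroups() or gid == os.getegid()
```

If `user_in_group` is true but `session_has_group` is false, the database was updated but the current shell predates the change, which is the situation newgrp render (or logging in again) resolves.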

-

Choose one option below depending on your CPU type:

-

Option 1: For

Intel Core CPUwith multiple A770 Arc GPUs. Use the following repository:sudo apt-get install -y gpg-agent wget wget -qO - https://repositories.intel.com/gpu/intel-graphics.key | \ sudo gpg --dearmor --output /usr/share/keyrings/intel-graphics.gpg echo "deb [arch=amd64,i386 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/gpu/ubuntu jammy client" | \ sudo tee /etc/apt/sources.list.d/intel-gpu-jammy.list

-

Option 2: For

Intel Xeon-W/SP CPUwith multiple A770 Arc GPUs. Use this repository for better performance:wget -qO - https://repositories.intel.com/gpu/intel-graphics.key | \ sudo gpg --yes --dearmor --output /usr/share/keyrings/intel-graphics.gpg echo "deb [arch=amd64 signed-by=/usr/share/keyrings/intel-graphics.gpg] https://repositories.intel.com/gpu/ubuntu jammy/lts/2350 unified" | \ sudo tee /etc/apt/sources.list.d/intel-gpu-jammy.list sudo apt update

-

-

Install drivers

sudo apt-get update # Install out-of-tree driver sudo apt-get -y install \ gawk \ dkms \ linux-headers-$(uname -r) \ libc6-dev sudo apt install -y intel-i915-dkms intel-fw-gpu # Install Compute Runtime sudo apt-get install -y udev \ intel-opencl-icd intel-level-zero-gpu level-zero \ intel-media-va-driver-non-free libmfx1 libmfxgen1 libvpl2 \ libegl-mesa0 libegl1-mesa libegl1-mesa-dev libgbm1 libgl1-mesa-dev libgl1-mesa-dri \ libglapi-mesa libgles2-mesa-dev libglx-mesa0 libigdgmm12 libxatracker2 mesa-va-drivers \ mesa-vdpau-drivers mesa-vulkan-drivers va-driver-all vainfo sudo reboot

-

Configure permissions

sudo gpasswd -a ${USER} render newgrp render # Verify the device is working with i915 driver sudo apt-get install -y hwinfo hwinfo --display

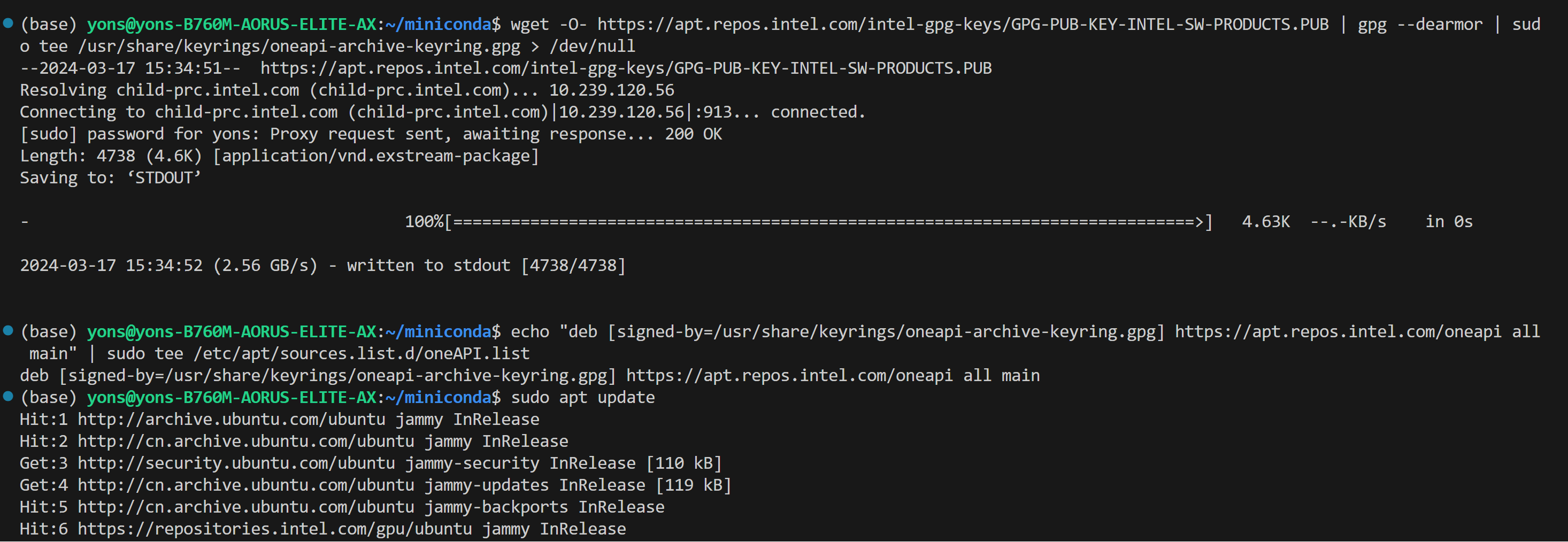

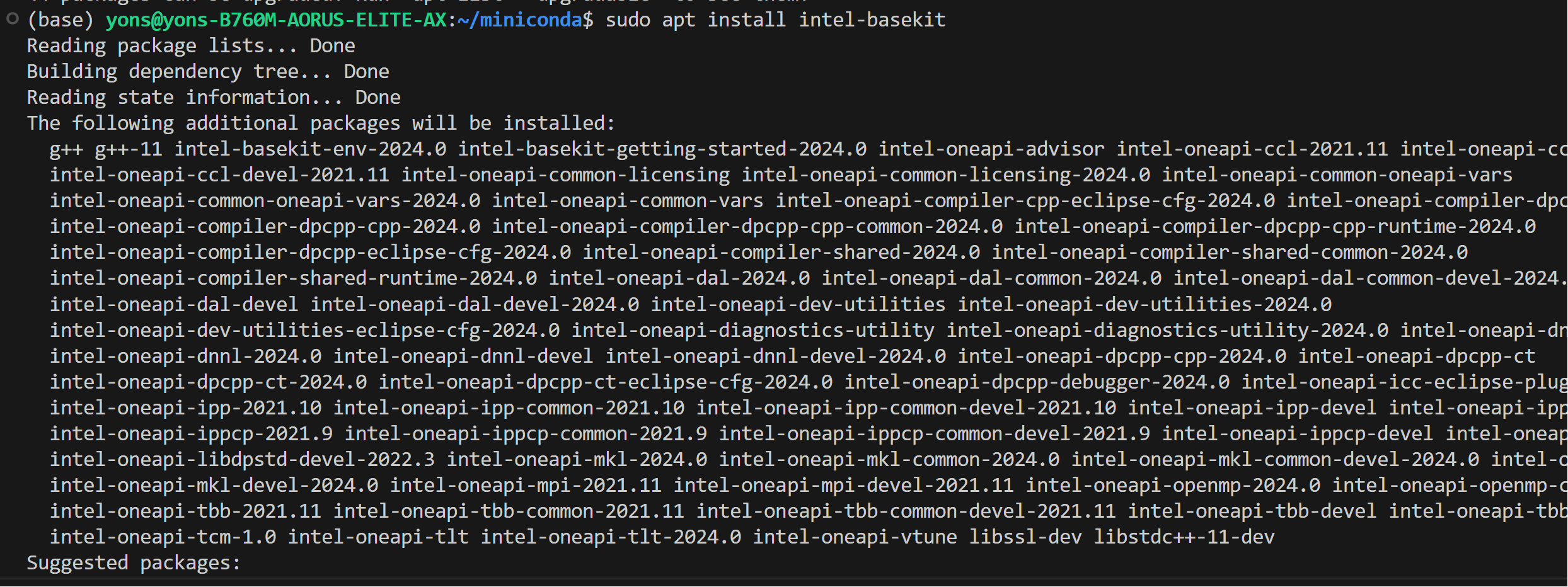

IPEX-LLM requires oneAPI 2024.0 to be installed for Intel GPUs on Linux:

wget -O- https://apt.repos.intel.com/intel-gpg-keys/GPG-PUB-KEY-INTEL-SW-PRODUCTS.PUB | gpg --dearmor | sudo tee /usr/share/keyrings/oneapi-archive-keyring.gpg > /dev/null

echo "deb [signed-by=/usr/share/keyrings/oneapi-archive-keyring.gpg] https://apt.repos.intel.com/oneapi all main" | sudo tee /etc/apt/sources.list.d/oneAPI.list

sudo apt update

sudo apt install intel-oneapi-common-vars=2024.0.0-49406 \
intel-oneapi-common-oneapi-vars=2024.0.0-49406 \
intel-oneapi-diagnostics-utility=2024.0.0-49093 \
intel-oneapi-compiler-dpcpp-cpp=2024.0.2-49895 \
intel-oneapi-dpcpp-ct=2024.0.0-49381 \
intel-oneapi-mkl=2024.0.0-49656 \
intel-oneapi-mkl-devel=2024.0.0-49656 \
intel-oneapi-mpi=2021.11.0-49493 \
intel-oneapi-mpi-devel=2021.11.0-49493 \
intel-oneapi-dal=2024.0.1-25 \
intel-oneapi-dal-devel=2024.0.1-25 \
intel-oneapi-ippcp=2021.9.1-5 \
intel-oneapi-ippcp-devel=2021.9.1-5 \
intel-oneapi-ipp=2021.10.1-13 \
intel-oneapi-ipp-devel=2021.10.1-13 \
intel-oneapi-tlt=2024.0.0-352 \
intel-oneapi-ccl=2021.11.2-5 \
intel-oneapi-ccl-devel=2021.11.2-5 \
intel-oneapi-dnnl-devel=2024.0.0-49521 \
intel-oneapi-dnnl=2024.0.0-49521 \
intel-oneapi-tcm-1.0=1.0.0-435

Important

Please make sure to reboot the machine after the installation of the GPU driver and oneAPI is complete:

sudo reboot

Download and install Miniforge as follows if you don't have conda installed on your machine:

wget https://github.com/conda-forge/miniforge/releases/latest/download/Miniforge3-Linux-x86_64.sh

bash Miniforge3-Linux-x86_64.sh

source ~/.bashrc

You can use conda --version to verify your conda installation.

After installation, create a new Python environment named llm:

conda create -n llm python=3.11

Activate the newly created environment llm:

conda activate llm

With the llm environment active, use pip to install ipex-llm for GPU. Choose either the US or CN website for extra-index-url:

- For US:

      pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/us/

- For CN:

      pip install --pre --upgrade ipex-llm[xpu] --extra-index-url https://pytorch-extension.intel.com/release-whl/stable/xpu/cn/

Note

If you encounter network issues while installing IPEX, refer to this guide for troubleshooting advice.
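The two pip commands above differ only in the last path segment of the index URL. If you script the installation, a sketch like the following could build the argument list for either region; the function name and the region parameter are assumptions of this example, not an IPEX-LLM interface.

```python
BASE_URL = "https://pytorch-extension.intel.com/release-whl/stable/xpu"


def pip_install_command(region: str) -> list:
    """Build the pip argument list for the 'us' or 'cn' package index."""
    region = region.lower()
    if region not in ("us", "cn"):
        raise ValueError("region must be 'us' or 'cn'")
    return [
        "pip", "install", "--pre", "--upgrade", "ipex-llm[xpu]",
        "--extra-index-url", f"{BASE_URL}/{region}/",
    ]
```

Passing the result to `subprocess.run(pip_install_command("us"), check=True)` runs it without a shell, so the square brackets in ipex-llm[xpu] need no quoting.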

- You can verify that ipex-llm is successfully installed by importing a few classes from the library. For example, execute the following commands in the terminal:

      source /opt/intel/oneapi/setvars.sh
      python
      > from ipex_llm.transformers import AutoModel, AutoModelForCausalLM
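For a non-interactive version of this check, the sketch below wraps the import in a helper that reports failures instead of raising. The helper name is hypothetical; it relies only on importlib from the standard library.

```python
import importlib


def can_import(module_name: str) -> bool:
    """Return True if the module imports cleanly in this environment."""
    try:
        importlib.import_module(module_name)
    except Exception as exc:  # ImportError, or an error while loading native libraries
        print(f"{module_name}: import failed ({exc})")
        return False
    return True
```

Calling `can_import("ipex_llm.transformers")` from a shell where setvars.sh has been sourced and the llm environment is active should return True if the installation succeeded.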

To use GPU acceleration on Linux, several environment variables are required or recommended before running a GPU example. Choose corresponding configurations based on your GPU device:

- For Intel Arc™ A-Series and Intel Data Center GPU Flex:

  For Intel Arc™ A-Series Graphics and Intel Data Center GPU Flex Series, we recommend:

      # Configure oneAPI environment variables.
      source /opt/intel/oneapi/setvars.sh

      # Recommended Environment Variables for optimal performance
      export USE_XETLA=OFF
      export SYCL_CACHE_PERSISTENT=1
      # [optional] under most circumstances, the following environment variable may improve performance, but sometimes this may also cause performance degradation
      export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

- For Intel Data Center GPU Max:

  For Intel Data Center GPU Max Series, we recommend:

      # Configure oneAPI environment variables.
      source /opt/intel/oneapi/setvars.sh

      # Recommended Environment Variables for optimal performance
      export LD_PRELOAD=${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so
      export SYCL_CACHE_PERSISTENT=1
      export ENABLE_SDP_FUSION=1
      # [optional] under most circumstances, the following environment variable may improve performance, but sometimes this may also cause performance degradation
      export SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS=1

  Please note that libtcmalloc.so can be installed with conda install -c conda-forge -y gperftools=2.10

- For Intel iGPU:

      # Configure oneAPI environment variables.
      source /opt/intel/oneapi/setvars.sh

      export SYCL_CACHE_PERSISTENT=1
      export BIGDL_LLM_XMX_DISABLED=1
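The three configurations above can be summarized as data. The sketch below generates the corresponding shell lines for a chosen GPU family; the keys arc_flex, max and igpu and the function name are labels invented for this example, while the variable names and values are copied from the lists above.

```python
# Values copied from the lists above; the dictionary keys are illustrative.
RUNTIME_ENV = {
    "arc_flex": {"USE_XETLA": "OFF", "SYCL_CACHE_PERSISTENT": "1"},
    "max": {
        "LD_PRELOAD": "${LD_PRELOAD}:${CONDA_PREFIX}/lib/libtcmalloc.so",
        "SYCL_CACHE_PERSISTENT": "1",
        "ENABLE_SDP_FUSION": "1",
    },
    "igpu": {"SYCL_CACHE_PERSISTENT": "1", "BIGDL_LLM_XMX_DISABLED": "1"},
}
OPTIONAL_ENV = {"SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS": "1"}


def export_lines(gpu: str, immediate_command_lists: bool = False) -> list:
    """Return shell lines that set up the runtime for the given GPU family."""
    if gpu not in RUNTIME_ENV:
        raise ValueError(f"unknown GPU family: {gpu!r}")
    env = dict(RUNTIME_ENV[gpu])
    # The guide lists the optional variable for Arc/Flex and Max only.
    if immediate_command_lists and gpu != "igpu":
        env.update(OPTIONAL_ENV)
    lines = ["source /opt/intel/oneapi/setvars.sh"]
    lines += [f"export {name}={value}" for name, value in env.items()]
    return lines
```

Printing `"\n".join(export_lines("arc_flex"))` gives a snippet you could paste into a shell or save as a small setup script to source before running a GPU example.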

Note

Please refer to this guide for more details regarding runtime configuration.

Note

The environment variable SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS determines the usage of immediate command lists for task submission to the GPU. While this mode typically enhances performance, exceptions may occur. Please consider experimenting with and without this environment variable for best performance. For more details, you can refer to this article.
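One way to run that experiment is to time the same command with the variable set and with it removed. The following is a rough sketch under that assumption; the function names are illustrative, and wall-clock time of a whole process also includes model loading, so treat the numbers as a coarse comparison only.

```python
import os
import subprocess
import sys
import time

VAR = "SYCL_PI_LEVEL_ZERO_USE_IMMEDIATE_COMMANDLISTS"


def timed_run(command, immediate: bool) -> float:
    """Run command once with VAR set to 1 or removed; return wall-clock seconds."""
    env = dict(os.environ)
    if immediate:
        env[VAR] = "1"
    else:
        env.pop(VAR, None)
    start = time.perf_counter()
    subprocess.run(command, env=env, check=True)
    return time.perf_counter() - start


def compare(command) -> dict:
    """Time the same command with and without immediate command lists."""
    return {"with": timed_run(command, True), "without": timed_run(command, False)}
```

For example, `compare([sys.executable, "demo.py"])` times a script named demo.py in both modes. Repeating the comparison a few times helps separate the effect of the variable from one-time costs such as the first-run kernel compilation.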

Now let's play with a real LLM. We'll be using the tiiuae/falcon-7b model, a 7 billion parameter LLM, for this demonstration. Follow the steps below to set up and run the model, and observe how it responds to the prompt "What is AI?".

- Step 1: Activate the Python environment llm you previously created:

      conda activate llm

- Step 2: Follow the Runtime Configurations section above to prepare your runtime environment.

- Step 3: Create a new file named demo.py and insert the code snippet below.

      # Copy/Paste the contents to a new file demo.py
      import torch
      from ipex_llm.transformers import AutoModelForCausalLM
      from transformers import AutoTokenizer, GenerationConfig
      generation_config = GenerationConfig(use_cache=True)

      tokenizer = AutoTokenizer.from_pretrained("tiiuae/falcon-7b", trust_remote_code=True)
      # load Model using ipex-llm and load it to GPU
      model = AutoModelForCausalLM.from_pretrained(
          "tiiuae/falcon-7b", load_in_4bit=True, cpu_embedding=True, trust_remote_code=True)
      model = model.to('xpu')

      # Format the prompt
      question = "What is AI?"
      prompt = " Question:{prompt}\n\n Answer:".format(prompt=question)
      # Generate predicted tokens
      with torch.inference_mode():
          input_ids = tokenizer.encode(prompt, return_tensors="pt").to('xpu')
          # warm up one more time before the actual generation task for the first run, see details in `Tips & Troubleshooting`
          # output = model.generate(input_ids, do_sample=False, max_new_tokens=32, generation_config=generation_config)
          output = model.generate(input_ids, do_sample=False, max_new_tokens=32, generation_config=generation_config).cpu()
          output_str = tokenizer.decode(output[0], skip_special_tokens=True)
          print(output_str)

  Note: When running LLMs on Intel iGPUs with limited memory size, we recommend setting cpu_embedding=True in the from_pretrained function. This allows the memory-intensive embedding layer to use the CPU instead of the GPU.

- Step 4: Run demo.py within the activated Python environment using the following command:

      python demo.py

Example output on a system equipped with an 11th Gen Intel Core i7 CPU and Iris Xe Graphics iGPU:

Question:What is AI?

Answer: AI stands for Artificial Intelligence, which is the simulation of human intelligence in machines.

When running LLMs on GPU for the first time, you might notice that performance is lower than expected, with delays of up to several minutes before the first token is generated. This delay occurs because the GPU kernels require compilation and initialization, the cost of which varies across GPU types. To achieve optimal and consistent performance, we recommend a one-time warm-up: run model.generate(...) one additional time before starting your actual generation tasks. If you're developing an application, you can incorporate this warm-up step into your start-up or loading routine to enhance the user experience.
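The warm-up pattern can be wrapped in a small helper, sketched below. The function name is made up for this example, and the only assumption about model is that it exposes a generate(input_ids, **kwargs) method, as the model in demo.py does.

```python
import time


def generate_with_warmup(model, input_ids, **generate_kwargs):
    """Run one untimed warm-up generation, then a timed one."""
    # First call: pays the one-time kernel compilation and initialization cost.
    model.generate(input_ids, **generate_kwargs)

    start = time.perf_counter()
    output = model.generate(input_ids, **generate_kwargs)
    # Copy tensor results to the CPU before stopping the clock, as demo.py
    # does with .cpu(), so that pending GPU work is included in the timing.
    if hasattr(output, "cpu"):
        output = output.cpu()
    elapsed = time.perf_counter() - start
    return output, elapsed
```

In demo.py this could replace the single generate call, for example `output, seconds = generate_with_warmup(model, input_ids, do_sample=False, max_new_tokens=32)`. An application would typically run the warm-up call once while loading rather than before every request.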