You can find an in-depth walkthrough for training a Core ML model here.

Clone the repo and cd into it by running the following commands:

git clone https://github.com/cloud-annotations/object-detection-ios.git

cd object-detection-ios

Copy the model_ios directory generated from the classification walkthrough and paste it into the object-detection-ios/Core ML Object Detection folder of this repo.
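The copy step can also be done from a terminal. The following is a minimal sketch, assuming you know where the generated model_ios directory lives on your machine. `install_model` is a hypothetical helper name, not something the repo provides, and the source path in the usage line is a placeholder.

```shell
# Hypothetical helper: copy a generated model_ios directory into the app folder.
# $1 = path to model_ios (assumed location, adjust to your setup)
# $2 = path to the cloned object-detection-ios repo
install_model() {
  src=${1%/}   # drop a trailing slash so the directory itself is copied
  repo=$2
  dest="$repo/Core ML Object Detection"

  # Fail early if either side is missing, rather than creating stray folders.
  if [ ! -d "$src" ]; then
    echo "model directory not found: $src" >&2
    return 1
  fi
  if [ ! -d "$dest" ]; then
    echo "app folder not found: $dest" >&2
    return 1
  fi

  # Quote the destination: the folder name contains spaces.
  cp -R "$src" "$dest/"
}
```

From inside the cloned repo, a call might look like `install_model ~/Downloads/model_ios .`, where `~/Downloads/model_ios` stands in for wherever your model was exported.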

To develop for iOS, we first need to install the latest version of Xcode, which can be found on the Mac App Store.
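To confirm from a terminal that Xcode is installed, one option is to look for its command-line build tool, `xcodebuild`. This is a small sketch; `check_xcode` is a hypothetical helper name.

```shell
# Hypothetical helper: report whether the Xcode build tool is available.
check_xcode() {
  if command -v xcodebuild >/dev/null 2>&1; then
    # Prints the installed Xcode version and build number.
    xcodebuild -version
  else
    echo "xcodebuild not found; install Xcode from the Mac App Store" >&2
    return 1
  fi
}
```

Calling `check_xcode` returns a non-zero status when the tool is missing, so it can guard later build steps in a script.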

Launch Xcode and choose Open another project...

Then in the file selector, choose object-detection-ios.

Now we’re ready to test! First we’ll make sure the app builds on our computer. If all goes well, the simulator will open and the app will display.

To run in the simulator, select an iOS device from the dropdown and click run.
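If you prefer the terminal, a simulator build can also be started with `xcodebuild`. The sketch below only builds the app; it does not launch the simulator the way the Run button does. The project path, scheme name, and simulator name are all arguments you must supply, and `build_for_simulator` is a hypothetical wrapper. Running `xcodebuild -list` inside the repo shows the schemes that actually exist.

```shell
# Hypothetical wrapper around xcodebuild for a simulator build.
# $1 = path to the .xcodeproj, $2 = scheme name, $3 = simulator device name
build_for_simulator() {
  if [ "$#" -ne 3 ]; then
    echo "usage: build_for_simulator <project> <scheme> <simulator>" >&2
    return 2
  fi
  # The destination string selects an installed iOS Simulator by name.
  xcodebuild \
    -project "$1" \
    -scheme "$2" \
    -destination "platform=iOS Simulator,name=$3" \
    build
}
```

The simulator name must match one installed with your copy of Xcode, so treat any name you pass as something to verify locally.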

Since the simulator does not have access to a camera, and the app relies on the camera to test the classifier, we should also run it on a real device.

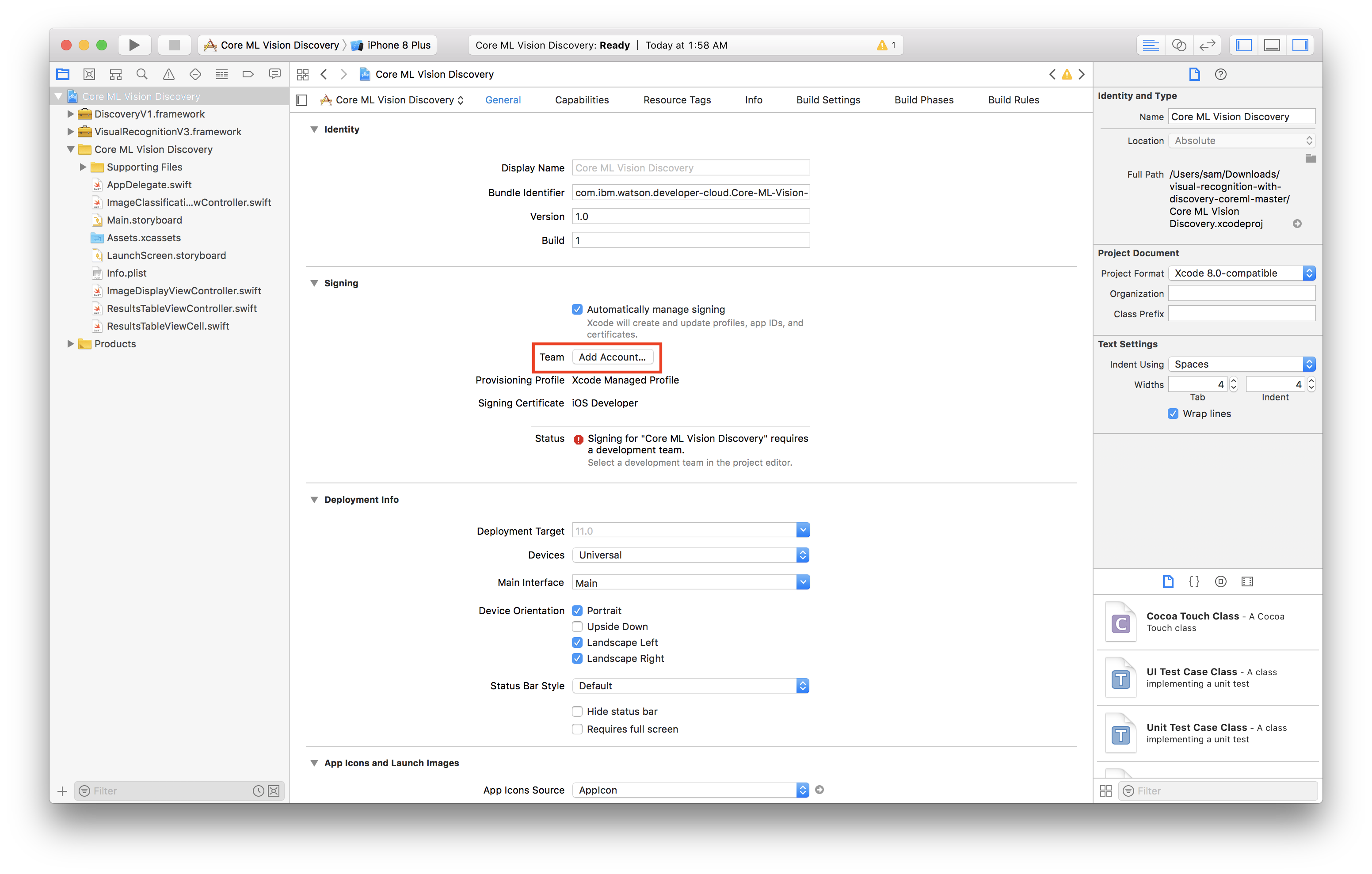

- Select the project editor (The name of the project with a blue icon)

- Under the Signing section, click Add Account

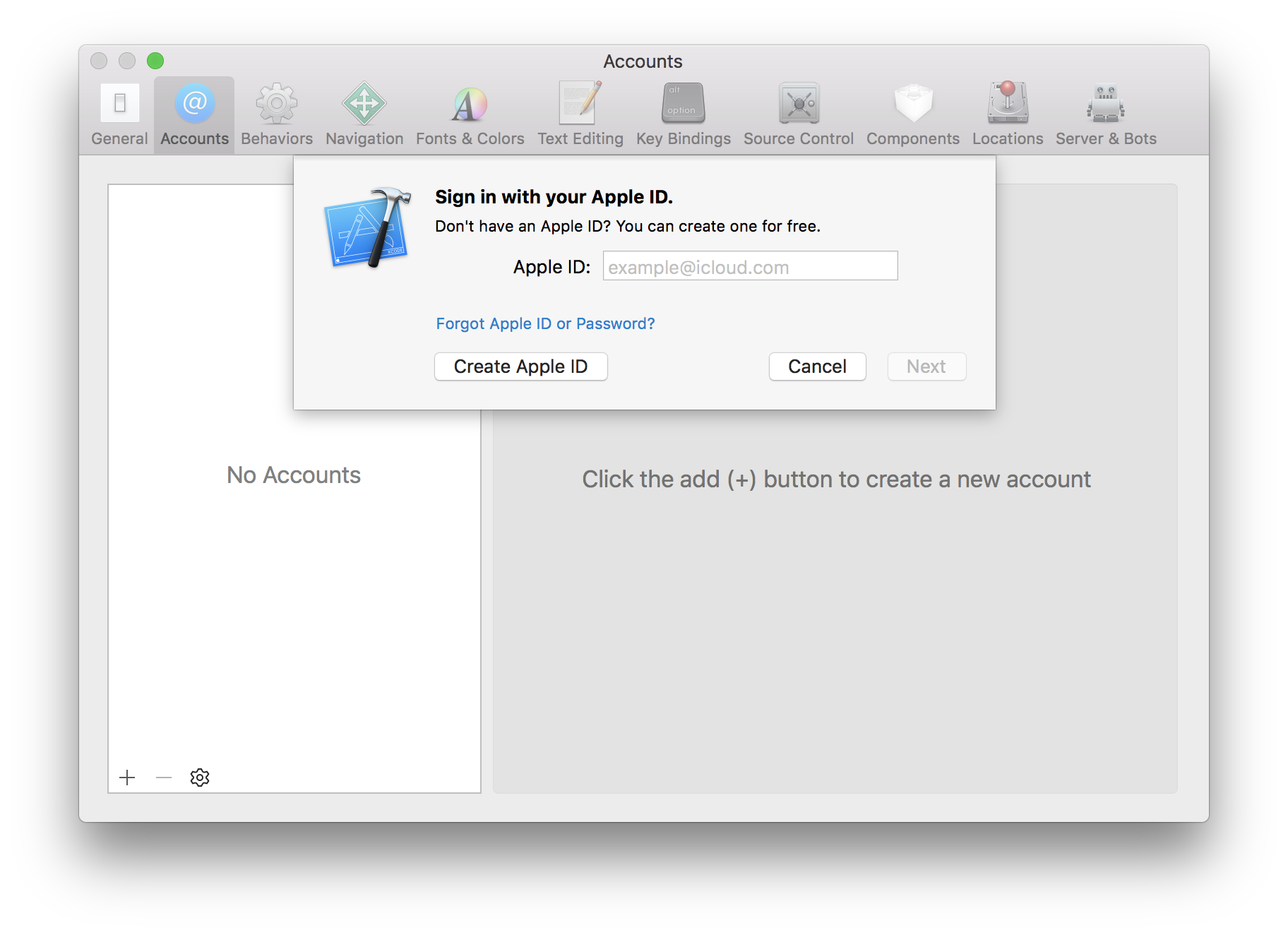

- Log in with your Apple ID and password

- You should see a new personal team created

- Close the preferences window

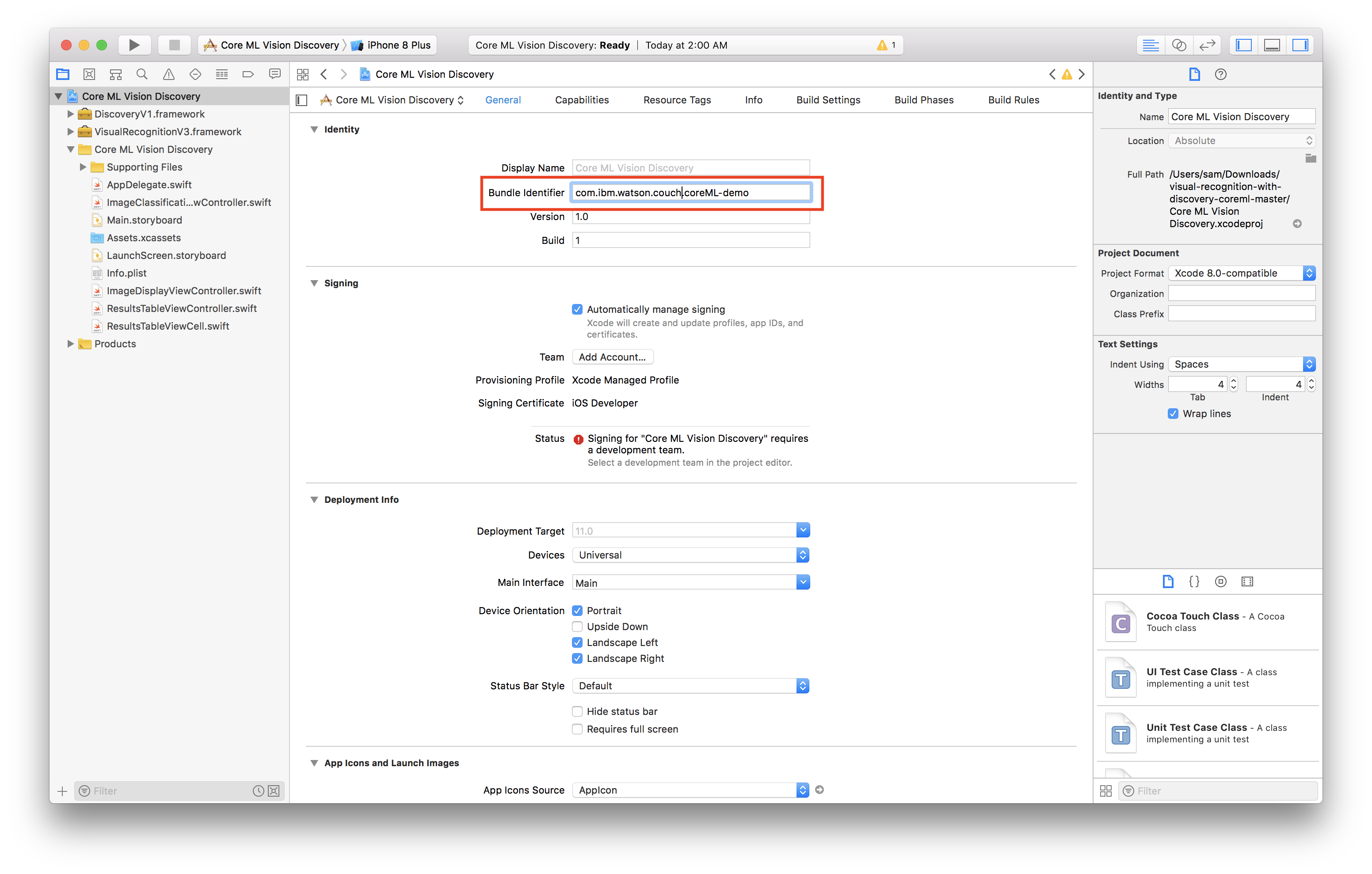

Now we have to create a certificate to sign our app with.

- Select General

- Change the bundle identifier to com.<YOUR_LAST_NAME>.Core-ML-Vision

- Select the personal team that was just created from the Team dropdown

- Plug in your iOS device

- Select your device from the device menu to the right of the build and run icon

- Click build and run

- On your device, you should see the app appear as an installed app

- When you try to run the app the first time, it will prompt you to approve the developer

- In your iOS settings, navigate to General > Device Management

- Tap your email, then tap Trust
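For the bundle identifier step above, note that a bundle identifier may contain only letters, digits, hyphens, and periods, so last names with apostrophes or spaces need adjusting. The following sketch shows one way to derive a valid value; `make_bundle_id` is a hypothetical helper, and dropping the invalid characters is just one possible choice.

```shell
# Hypothetical helper: build the bundle identifier from a last name.
make_bundle_id() {
  # Keep only letters, digits, and hyphens; everything else is dropped.
  name=$(printf '%s' "$1" | LC_ALL=C tr -cd 'A-Za-z0-9-')
  if [ -z "$name" ]; then
    echo "last name has no usable characters" >&2
    return 1
  fi
  printf 'com.%s.Core-ML-Vision\n' "$name"
}
```

For example, `make_bundle_id "Smith"` yields `com.Smith.Core-ML-Vision`, which can be pasted into the Bundle Identifier field in Xcode.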

Now you're ready to run the app!