-

-

Notifications

You must be signed in to change notification settings - Fork 35

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add Reference Citation Extractor #191

Conversation

Add XYZ at 123 to eyecite capability Improve and recognize parallel citations

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Everything looks good and very well structured. I like that you added several test cases, not just what should work.

Only found one typo in a comment and some suggestions for docstrings . It can now be merged without any problems. These are very small details.

|

I don’t think our testing files are as large as we state on the packaging. I downloaded the ten percent sample and ran it locally. It appears to only contain 7600 rows of opinions. A far cry from ten percent moniker of the 10 million opinion objects in the database. On the flip side that extrapolates to 126,000 reference citations that could be added to the citation database. Also - the auto generated markdown here appears to reverse the gains and losses columns. I'm not sure why - but locally it did not do that - seems to create the markdown correctly—identifying the gains as gains. Above, it shows these are classified as losses, but you can see from the output that this isn’t the case. I’ll add some notes to the Eyecite report issue to clarify this. On a final note, the Eyecite report did catch a regex bug that was causing a number of essentially empty citations to be found. I fixed the bug and added several additional tests to ensure this is properly handled moving forward. |

|

Nice to see the eyecite report finding bugs; weird that it's backwards, but I guess it must have always been that way. I don't know why the 10 percent file is the wrong size, but probably I made it using a random sample method that doesn't guarantee a particular count (and probably I had an error setting the percentage?). Seems to be work OK though, I guess.

That comes out to |

|

@mlissner - I think our wires are crossed here. this found 91 reference citations (excluding the much more common I suspect references to cases) in the 7,600 sample file. So unless my math is wrong (10,549,603 opinions / 7,600) * 91 ~= 126,317 reference citations |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Man, I don't know this code all that well anymore, but I think this looks pretty good. I guess one thing that'd give me more confidence would be more tests. Would it be possible to add a few more, including ones where the current code isn't good enough (like, perhaps, it can't find the plaintiff, or other known failure modes)?

I can't quite suss them out, but I think it'd be helpful to have them written down, even if they're known to fail.

| @@ -307,6 +307,27 @@ def disambiguate_reporters( | |||

| ] | |||

|

|

|||

|

|

|||

| def filter_citations(citations: List[CitationBase]) -> List[CitationBase]: | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do we have a test case for this, so I can see what it's supposed to do?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I added a test in find test that shows how it is used. Essentially it's meant to be a back stop against older or oddly named reporters now and in the future.

For example, Miles is a reporter from a way back. It envisions a scenario where

Miles v. Smith 1 US 1 - .... 101 Miles 100 (1850), .... in 101 Miles at 105

In this scenario we have a FULL Cite, a Second Full Cite and a Short Cite. But the final one could also be a Reference Cite. The function filters out the reference citation.

Also - since reference citations are found after each full case citation is found, they are found out of sequential order. This function also sorts our newly filtered list by span.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

OK. If that's important, let's explain that in the docstring, because it's pretty hard to understand what's going on here otherwise (at least for me).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

sounds good.

Limit the names that can be used to better formatted plaintiff/defendants Add tests to show filtering/ordering reference citaitons And refactor add defendant for edge case where it could be only whitespace. typos etc.

|

I ran this latest batch with the 1 percent file on my machine and it added 1188 new correct reference citations. This extrapolates to 118,800 new reference citations in the dataset under strict standards. |

That's surprisingly few, no? I'd expect at least one or two per case, and about 10× more than that across the full data set. Are we missing citations we should be grabbing? |

|

No I dont think so. Remember these reference citations all require the format [NAME] at [PAGE]. so I think its not a format that is used as often as you would expect. I think you are right though that when we add any reference - like in Roe. we are going to have many more. That should be done in a separate PR |

The Eyecite Report 👁️Gains and LossesThere were 0 gains and 13 losses. Click here to see details.

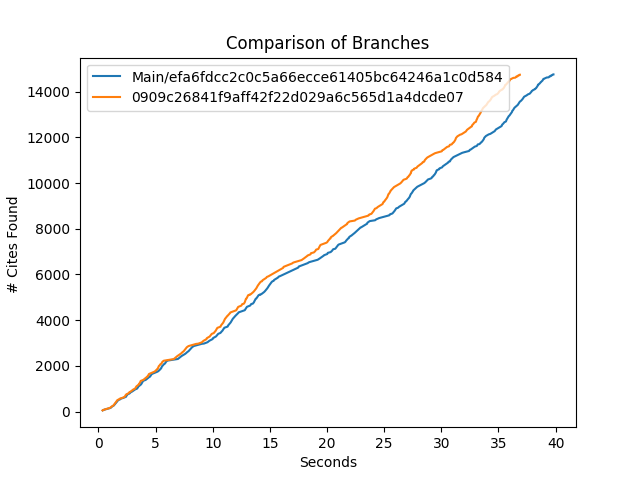

Time ChartGenerated Files |

|

@mlissner any chance this is ready? I'd like to get this merged before I update the more advanced complex citation parsing finished? |

|

Sorry, I didn't realize it was waiting on me. Merged, thank you! |

|

@mlissner thank you |

Add ReferenceCitation to find citations like Foo at 123,

Requires a full citation to be present and previous something like Foo v. Bar. 1 U.S. 1.

Also fixes the extraction of defendant/plaintiff name when parallel citations exist.