PyTorch implementation of SCL-Domain-Adaptive-Object-Detection

Please follow the faster-rcnn repository to set up the environment. This code is based on the implementation of Strong-Weak Distribution Alignment for Adaptive Object Detection. We used PyTorch 0.4.0 for this project. Other PyTorch versions may cause errors, which have to be handled separately for each environment.
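Since the code was developed against PyTorch 0.4.0, it can help to compare the installed version with that release before training. The following is a minimal sketch, not part of this repository: the helper names `release_of` and `matches_tested_version` are hypothetical, and the choice to compare only the major and minor numbers is an assumption.

```python
import re


def release_of(version):
    """Return the numeric (major, minor, patch) part of a version string.

    Local build tags such as "+cu117" are dropped, and missing or
    non-numeric components are treated as 0.
    """
    core = version.split("+", 1)[0]
    parts = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def matches_tested_version(version, tested="0.4.0"):
    """True if `version` has the same major.minor as the tested release."""
    return release_of(version)[:2] == release_of(tested)[:2]


# Typical use, assuming PyTorch is installed:
#   import torch
#   if not matches_tested_version(torch.__version__):
#       print("Untested PyTorch version:", torch.__version__)
```

A mismatch does not mean the code will fail; it only signals that version-specific fixes may be needed.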

For convenience, this repository contains implementations of:

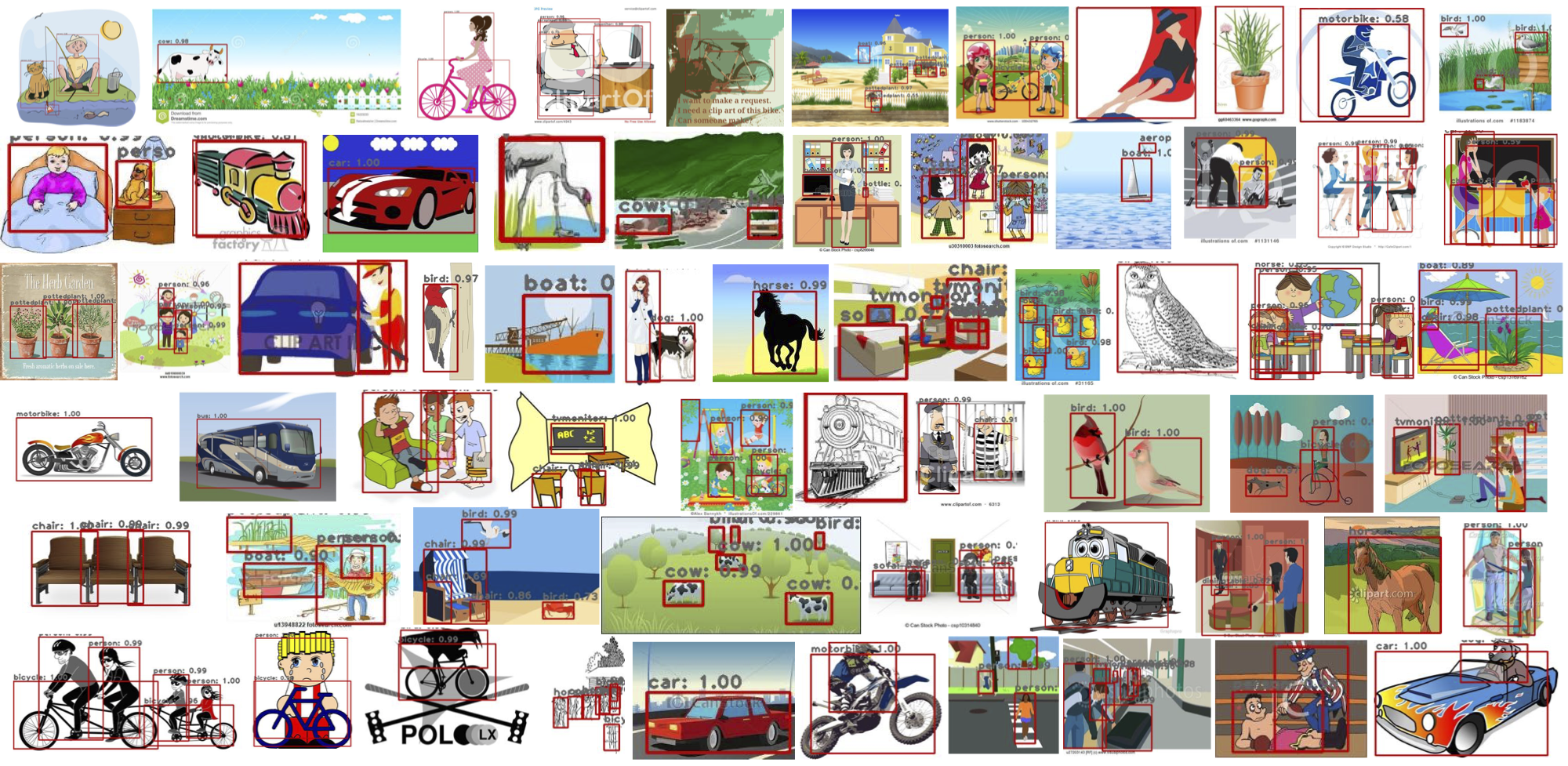

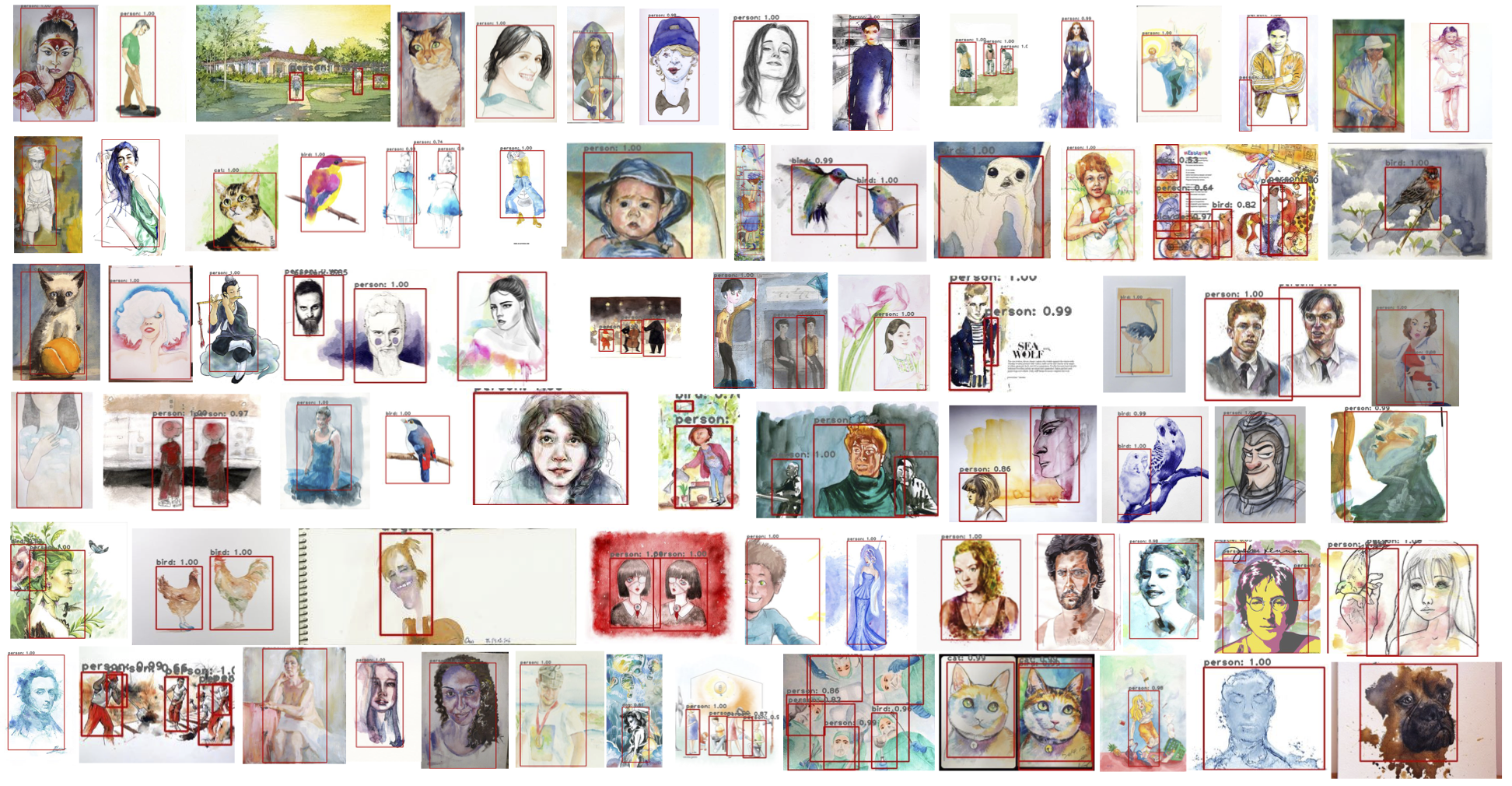

- SCL: Towards Accurate Domain Adaptive Object Detection via Gradient Detach Based Stacked Complementary Losses (link)

- Strong-Weak Distribution Alignment for Adaptive Object Detection, CVPR'19 (link)

- Domain Adaptive Faster R-CNN for Object Detection in the Wild, CVPR'18 (Our re-implementation) (link)

We have included the following set of datasets for our implementation:

- Cityscapes, FoggyCityscapes: Download from the Cityscapes website; see the dataset preparation code in DA-Faster RCNN.

- Clipart, WaterColor: Follow the dataset preparation instructions in Cross Domain Detection.

- PASCAL_VOC 07+12: Please follow the instructions in py-faster-rcnn to prepare VOC datasets.

- Sim10k: Website Sim10k

- Cityscapes-Translated Sim10k: TBA

- KITTI - For data preparation, please follow VOD-converter.

- INIT - Download the dataset from this website; the data preparation file can be found in the data preparation folder of this repository.

Note that all of the code assumes the Pascal VOC format. For example, the cityscape dataset is stored as:

$ cd cityscape/VOC2012

$ ls

Annotations ImageSets JPEGImages

$ cd ImageSets/Main

$ ls

train.txt val.txt trainval.txt test.txt

Note: To use this code on your own dataset, arrange the dataset in the PASCAL VOC format, create a dataset class in lib/datasets/, and add it to lib/datasets/factory.py and lib/datasets/config_dataset.py. Then add the dataset option to lib/model/utils/parser_func.py and lib/model/utils/parser_func_multi.py.

Note: The code currently supports only batch size = 1; a batch size > 1 may cause errors.

Write the paths of your dataset directories in lib/datasets/config_dataset.py, for example:

__D.CLIPART = "./clipart"

__D.WATER = "./watercolor"

__D.SIM10K = "Sim10k/VOC2012"

__D.SIM10K_CYCLE = "Sim10k_cycle/VOC2012"

__D.CITYSCAPE_CAR = "./cityscape/VOC2007"

__D.CITYSCAPE = "../DA_Detection/cityscape/VOC2007"

__D.FOGGYCITY = "../DA_Detection/foggy/VOC2007"

__D.INIT_SUNNY = "./init_sunny"

__D.INIT_NIGHT = "./init_night"

We used two models pre-trained on ImageNet as backbones for our experiments: VGG16 and ResNet101. You can download these two models from:

Set their paths in the code via __C.VGG_PATH and __C.RESNET_PATH in lib/model/utils/config.py.

Our trained model

We provide our trained models for foggycityscapes, watercolor and clipart.

- Adaptation from cityscapes to foggycityscapes:

  - VGG16 - Google Drive

  - ResNet101 - Google Drive

- Adaptation from pascal voc to watercolor:

  - ResNet101 - Google Drive

- Adaptation from pascal voc to clipart:

  - ResNet101 - Google Drive

We have provided sample training commands in the train_scripts folder; however, they cover only our model.

Commands for all three models are given below. In each command, $1 is the GPU id passed to CUDA_VISIBLE_DEVICES and $2 is the directory passed to --save_dir.

For SCL: Towards Accurate Domain Adaptive Object Detection via Gradient Detach Based Stacked Complementary Losses:

CUDA_VISIBLE_DEVICES=$1 python trainval_net_SCL.py --cuda --net vgg16 --dataset cityscape --dataset_t foggy_cityscape --save_dir $2

For Domain Adaptive Faster R-CNN for Object Detection in the Wild:

CUDA_VISIBLE_DEVICES=$1 python trainval_net_dfrcnn.py --cuda --net vgg16 --dataset cityscape --dataset_t foggy_cityscape --save_dir $2

For Strong-Weak Distribution Alignment for Adaptive Object Detection:

CUDA_VISIBLE_DEVICES=$1 python trainval_net_global_local.py --cuda --net vgg16 --dataset cityscape --dataset_t foggy_cityscape --gc --lc --save_dir $2
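The three commands differ only in the script name and, for Strong-Weak, the extra `--gc --lc` flags. The following is a minimal sketch of a launcher that assembles them from Python. The function names and the method keys (`scl`, `da_faster`, `strong_weak`) are hypothetical; the script names and flags are copied from the commands above, and no other flags are assumed.

```python
import os

# Training script per method, copied from the commands above.
SCRIPTS = {
    "scl": "trainval_net_SCL.py",
    "da_faster": "trainval_net_dfrcnn.py",
    "strong_weak": "trainval_net_global_local.py",
}

# Extra flags that only some methods use.
EXTRA_FLAGS = {"strong_weak": ["--gc", "--lc"]}


def build_train_command(method, save_dir, net="vgg16",
                        source="cityscape", target="foggy_cityscape"):
    """Return the argument list for one training run."""
    argv = ["python", SCRIPTS[method], "--cuda", "--net", net,
            "--dataset", source, "--dataset_t", target]
    argv += EXTRA_FLAGS.get(method, [])
    argv += ["--save_dir", save_dir]
    return argv


def train_env(gpu_ids):
    """Copy the current environment and set CUDA_VISIBLE_DEVICES."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_ids)
    return env
```

A run could then be started with `subprocess.run(build_train_command("scl", "./models"), env=train_env(0), check=True)` from the repository root, where `./models` is a placeholder save directory.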

We have provided sample testing commands for our model in the test_scripts folder. For the other models, please refer to the training commands above.

If you use our code or find it helpful for your research, please cite:

@article{shen2019SCL,

title={SCL: Towards Accurate Domain Adaptive Object Detection via Gradient Detach Based Stacked Complementary Losses},

author={Zhiqiang Shen and Harsh Maheshwari and Weichen Yao and Marios Savvides},

journal={arXiv preprint arXiv:1911.02559},

year={2019}

}