
- Unsupervised: No need to gather costly PBS data to train your models. Furthermore, PBNS qualitatively outperforms supervised learning.

- Physical consistency: PBNS generates collision-free, cloth-consistent predictions. Cloth consistency ensures there is no texture distortion and no noisy surfaces.

- Cloth properties: Define per-vertex cloth properties. Neurally simulate different fabrics within the same outfit.

- Multiple layers of cloth: PBNS is the only approach able to explicitly handle multiple layers of cloth. This allows modelling whole outfits, instead of single garments.

- Complements: PBNS is not limited to garments. Outfits can be complemented with gloves, boots and more. This defines a common framework for outfit animation.

- Extremely efficient: PBNS can achieve over 14,000 FPS (for an outfit with 24K triangles!). No previous work comes close to this level of performance. Additionally, the memory footprint of the model is just a few MBs. Because of this, PBNS is the only solution that can be applied in real scenarios such as video games and smartphones (portable virtual try-ons). Moreover, training takes only a few minutes, even without a GPU!

- Simple formulation: PBNS is formulated as Linear Blend Skinning (LBS) with Pose Space Deformation (PSD), the standard in 3D animation. Thus, integrating our approach into ANY rendering pipeline requires minimal engineering effort.
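The unsupervised and physical-consistency points above rest on one idea: instead of fitting simulated ground truth, the model minimizes physical energies evaluated on its own predictions. The sketch below illustrates that idea with three terms: an edge-stretch term (cloth consistency) with per-edge stiffness, so different fabrics can coexist in one outfit; a gravity term with per-vertex masses; and a collision penalty against the body. It is a hypothetical, simplified illustration, not the PBNS implementation: all function names, the weight `w_collision`, the margin `eps`, the "+y is up" convention and the nearest-point collision test are assumptions, and the actual losses in the paper and this repository may differ (for example, they may include additional terms such as bending).

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def stretch_energy(verts: Sequence[Vec3], edges: Sequence[Tuple[int, int]],
                   rest_lengths: Sequence[float], stiffness: Sequence[float]) -> float:
    """Cloth consistency (assumed form): penalize each edge's deviation from its
    rest length. `stiffness` is per edge, standing in for per-fabric properties."""
    total = 0.0
    for (i, j), l0, k in zip(edges, rest_lengths, stiffness):
        d = _sub(verts[i], verts[j])
        total += k * (math.sqrt(_dot(d, d)) - l0) ** 2
    return total


def gravity_energy(verts: Sequence[Vec3], masses: Sequence[float], g: float = 9.81) -> float:
    """Gravitational potential energy with per-vertex masses; assumes +y is up."""
    return sum(m * g * v[1] for v, m in zip(verts, masses))


def collision_penalty(verts: Sequence[Vec3], body_points: Sequence[Vec3],
                      body_normals: Sequence[Vec3], eps: float = 0.004) -> float:
    """Penalize cloth vertices closer than `eps` to the body (or inside it), measured
    along the normal of the nearest body point (a hypothetical simplification)."""
    total = 0.0
    for v in verts:
        nearest = min(range(len(body_points)),
                      key=lambda k: _dot(_sub(v, body_points[k]), _sub(v, body_points[k])))
        signed_dist = _dot(_sub(v, body_points[nearest]), body_normals[nearest])
        total += max(eps - signed_dist, 0.0) ** 2
    return total


def physics_loss(verts, edges, rest_lengths, stiffness, masses,
                 body_points, body_normals, w_collision: float = 10.0) -> float:
    """Unsupervised objective: only the prediction and the body are needed,
    no ground-truth simulation data."""
    return (stretch_energy(verts, edges, rest_lengths, stiffness)
            + gravity_energy(verts, masses)
            + w_collision * collision_penalty(verts, body_points, body_normals))
```

In a training loop, `verts` would be the garment vertices predicted by the network for a given pose, and the loss would be minimized with an autodiff framework; this pure-Python version only evaluates the energy for a single prediction.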

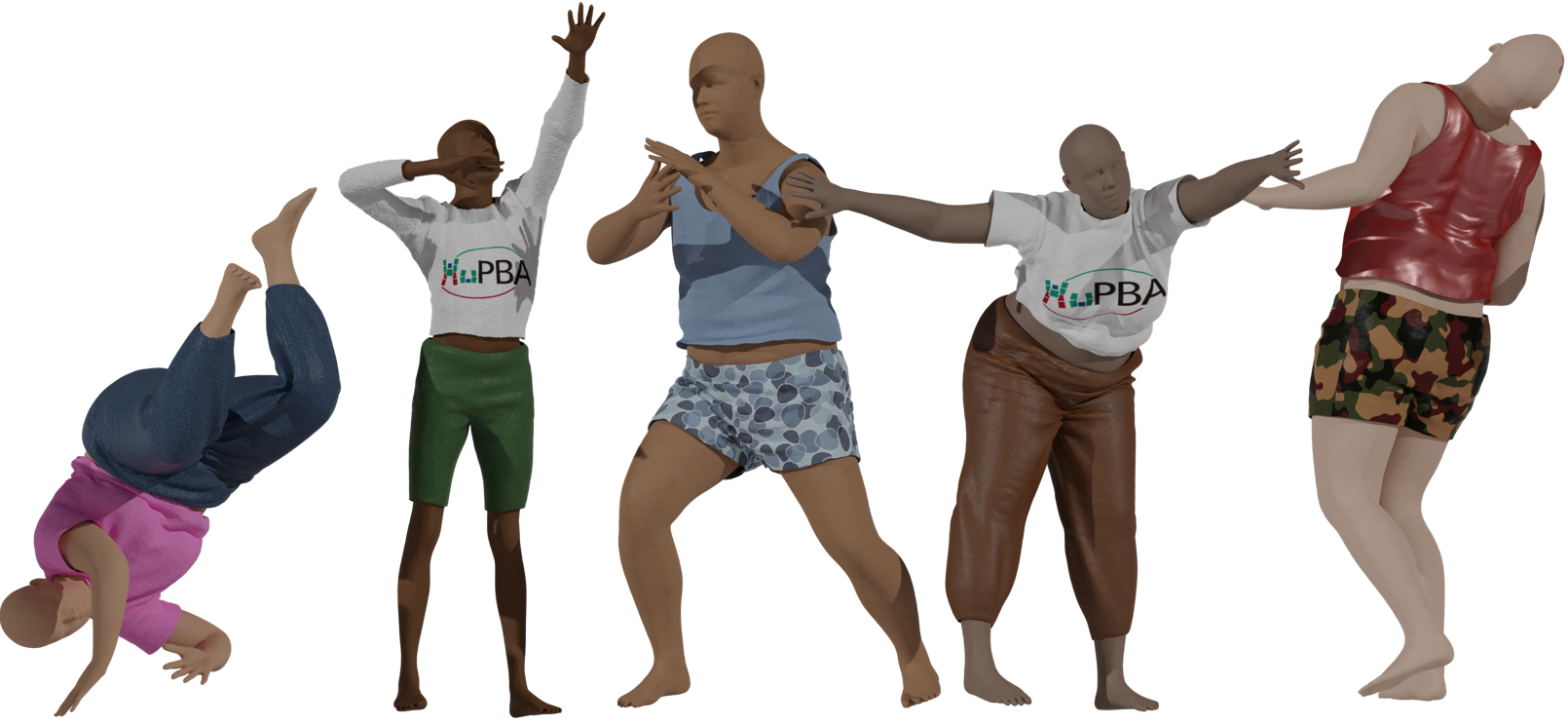

We present a methodology to automatically obtain Pose Space Deformation (PSD) bases for rigged garments through deep learning. Classical approaches rely on Physically Based Simulation (PBS) to animate clothes. These are general solutions that, given a sufficiently fine-grained discretization of space and time, can achieve highly realistic results. However, they are computationally expensive, and any scene modification requires re-simulation. Linear Blend Skinning (LBS) with PSD offers a lightweight alternative to PBS, but it needs huge volumes of data to learn proper PSD. We propose using deep learning, formulated as an implicit PBS, to learn realistic cloth Pose Space Deformations without supervision in a constrained scenario: dressed humans. Furthermore, we show it is possible to train these models in an amount of time comparable to a PBS of a few sequences. To the best of our knowledge, we are the first to propose a neural simulator for cloth. While deep learning approaches in this domain are becoming a trend, they are data-hungry models. Moreover, authors often propose complex formulations to better learn wrinkles from PBS data. Supervised learning leads to physically inconsistent predictions that require collision solving before they can be used. Also, dependency on PBS data limits the scalability of these solutions, while their formulation hinders their applicability and compatibility. By proposing an unsupervised methodology to learn PSD for LBS models (the 3D animation standard), we overcome both of these drawbacks. The results show cloth consistency in the animated garments and meaningful pose-dependent folds and wrinkles. Our solution is extremely efficient, handles multiple layers of cloth, allows unsupervised outfit resizing and can be easily applied to any custom 3D avatar.
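To make the LBS-with-PSD formulation concrete: a PSD adds a pose-dependent offset to each rest-pose vertex as a linear combination of basis displacements, and LBS then blends the joint transforms with per-vertex skinning weights, i.e. v'_i = sum_j w_ij T_j (v_i + sum_k c_k B_k[i]). The sketch below evaluates that formula in plain Python. It is an illustration under assumptions: the function names are hypothetical, joint transforms are given as 3x4 `[R | t]` matrices, and the PSD coefficients `coeffs` are passed in directly, whereas in a learned model they would be produced from the pose by a network.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat34 = Sequence[Sequence[float]]  # 3x4 rigid transform [R | t] (assumed convention)


def apply_psd(rest_verts: Sequence[Vec3], basis: Sequence[Sequence[Vec3]],
              coeffs: Sequence[float]) -> List[Vec3]:
    """Pose space deformation: v_i + sum_k c_k * B_k[i], applied in the rest pose."""
    out = []
    for i, v in enumerate(rest_verts):
        offset = [0.0, 0.0, 0.0]
        for c, b in zip(coeffs, basis):
            for a in range(3):
                offset[a] += c * b[i][a]
        out.append((v[0] + offset[0], v[1] + offset[1], v[2] + offset[2]))
    return out


def _transform(m: Mat34, v: Vec3) -> Vec3:
    """Apply a 3x4 rigid transform to a point."""
    return tuple(m[r][0] * v[0] + m[r][1] * v[1] + m[r][2] * v[2] + m[r][3]
                 for r in range(3))


def linear_blend_skinning(verts: Sequence[Vec3], weights: Sequence[Sequence[float]],
                          joint_transforms: Sequence[Mat34]) -> List[Vec3]:
    """LBS: each posed vertex is the weighted sum of its joint-transformed copies."""
    out = []
    for v, w_row in zip(verts, weights):
        acc = [0.0, 0.0, 0.0]
        for w, m in zip(w_row, joint_transforms):
            if w == 0.0:
                continue  # skip joints that do not influence this vertex
            p = _transform(m, v)
            for a in range(3):
                acc[a] += w * p[a]
        out.append((acc[0], acc[1], acc[2]))
    return out


def animate_garment(rest_verts, basis, coeffs, weights, joint_transforms) -> List[Vec3]:
    """Deform in rest pose with PSD first, then skin to the target pose."""
    return linear_blend_skinning(apply_psd(rest_verts, basis, coeffs),
                                 weights, joint_transforms)
```

Because evaluating the garment reduces to a weighted sum of basis offsets plus standard skinning, it maps directly onto the skinning stage found in most rendering pipelines.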

Hugo Bertiche, Meysam Madadi and Sergio Escalera

The PBNS formulation also allows unsupervised outfit resizing, that is, retargeting an outfit to a desired body shape and controlling its tightness.

As with standard PBNS, the resizer can handle complete outfits with multiple layers of cloth, different fabrics, complements and more.

Thanks to its simple formulation and its independence from simulation data, PBNS can be used to easily enhance any custom 3D avatar with realistic outfits in a matter of minutes!

This repository is split into two folders. One contains the standard PBNS for outfit animation; the other contains the code for PBNS as a resizer.

Within each folder, you will find instructions on how to use each model.

@article{10.1145/3478513.3480479,
  author = {Bertiche, Hugo and Madadi, Meysam and Escalera, Sergio},
  title = {PBNS: Physically Based Neural Simulation for Unsupervised Garment Pose Space Deformation},
  year = {2021},
  issue_date = {December 2021},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  volume = {40},
  number = {6},
  issn = {0730-0301},
  url = {https://doi.org/10.1145/3478513.3480479},
  doi = {10.1145/3478513.3480479},
  abstract = {We present a methodology to automatically obtain Pose Space Deformation (PSD) basis for rigged garments through deep learning. Classical approaches rely on Physically Based Simulations (PBS) to animate clothes. These are general solutions that, given a sufficiently fine-grained discretization of space and time, can achieve highly realistic results. However, they are computationally expensive and any scene modification prompts the need of re-simulation. Linear Blend Skinning (LBS) with PSD offers a lightweight alternative to PBS, though, it needs huge volumes of data to learn proper PSD. We propose using deep learning, formulated as an implicit PBS, to un-supervisedly learn realistic cloth Pose Space Deformations in a constrained scenario: dressed humans. Furthermore, we show it is possible to train these models in an amount of time comparable to a PBS of a few sequences. To the best of our knowledge, we are the first to propose a neural simulator for cloth. While deep-based approaches in the domain are becoming a trend, these are data-hungry models. Moreover, authors often propose complex formulations to better learn wrinkles from PBS data. Supervised learning leads to physically inconsistent predictions that require collision solving to be used. Also, dependency on PBS data limits the scalability of these solutions, while their formulation hinders its applicability and compatibility. By proposing an unsupervised methodology to learn PSD for LBS models (3D animation standard), we overcome both of these drawbacks. Results obtained show cloth-consistency in the animated garments and meaningful pose-dependant folds and wrinkles. Our solution is extremely efficient, handles multiple layers of cloth, allows unsupervised outfit resizing and can be easily applied to any custom 3D avatar.},
  journal = {ACM Trans. Graph.},
  month = {dec},
  articleno = {198},
  numpages = {14},
  keywords = {physics, garment, simulation, deep learning, animation, pose space deformation, neural network}
}