[Feature-Request] HF-Net #1215

Comments

|

Hi, I am also looking at implementing a global descriptor for rtabmap. Some repos already implement HF-Net with TensorRT, and I have tested one. Should we consider that? It should not be hard to add to the rtabmap codebase. I just don't know how to integrate it with RTAB-Map's memory management for image retrieval. |

|

As explained in #1105, we added an interface to feed a global descriptor (it can be in any format; see also rtabmap_python to easily compress/uncompress numpy matrices) to the rtabmap node at the same time as image or lidar data. For images, the easiest way would be to make a node combining the image topics into an RGBDImage topic (including the global descriptor field), connected as input to the rtabmap node (with [...]).

Currently, there is no internal loop closure detector based on global descriptors. An external loop closure detector can get the global descriptors of all nodes in WM (and LTM) by calling service [...].

Back in the day, when we added the GlobalDescriptor table to the database, the goal was indeed to add NetVLAD global descriptor support to improve, or combine with, the loop closure detection done inside RTAB-Map (currently based only on local visual descriptors, a.k.a. the bag-of-words approach). For now, external loop closure detection seems to be the most flexible option for any loop closure detection approach, though it requires ROS/ROS2. I guess that to do it internally in the standalone version, we would need to add a Python global descriptor approach (like we did for external Python ML local keypoints/descriptors and for ML feature matching) to avoid re-implementing in C++ every new ML global descriptor that comes up.

It has been a while since I read on the subject, but is there a common way to do global descriptor matching in the current state of the art that could be used with many global descriptors? Is a naive nearest-neighbor approach between global descriptors enough to find the closest ones? If so, it could be worth putting in the time to implement it inside RTAB-Map, so that we can better integrate loop closure hypotheses from global descriptors and BOW (combine them or switch between them).

I think we would not have to modify the memory management approach, as it relies on the current loop closure hypotheses to know which nodes to retrieve from LTM first (the loop closure hypotheses would already include the score of the global descriptor matching). |

In fact, I was relatively vague about this part of the concept before. I am simply inclined to introduce some deep learning methods in loop closure detection, because they should adapt better to changing environments. For odometry I prefer traditional methods because that part is well modeled. I'm also curious about what form the global descriptor can take. cmr_lidarloop and VLAD both use feature vectors, and KNN is used for matching. I did some rough searches and did not find any clearly better options for matching. The summary here can be referred to. Therefore, I think we can first implement global retrieval based on KNN. My plan is to add nanoflann support first (#906). This should help with both performance and cross-platform usage. Then I can also add ANMS along the way (#1127).

Using onnxruntime seems to be much more convenient. But I'm new to dotnet. |

The biggest obstacle to adding nanoflann is maintaining compatibility of parameters. Adding it to the end of GMS can lead to confusing logic. Any suggestions? |

|

I think matching global descriptors with KNN could be a good first step. For the nanoflann implementation, I left a new comment on #906 on how that could be integrated. |
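To make the "KNN first" idea discussed above concrete, here is a minimal NumPy sketch of brute-force nearest-neighbor retrieval over global descriptors. It is only an illustration, not RTAB-Map code: the function names `l2_normalize` and `knn_retrieve`, the 4096-D descriptor size, and the random data are all assumptions made for the example. A KD-tree backend such as nanoflann would replace the brute-force dot product.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """L2-normalize along the last axis (hypothetical helper)."""
    x = np.asarray(x, dtype=np.float32)
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norm, eps)

def knn_retrieve(query, database, k=5):
    """Return (indices, similarities) of the k database rows closest to query.

    Similarity is the cosine similarity; for unit vectors, ranking by cosine
    similarity is equivalent to ranking by Euclidean distance.
    """
    db = l2_normalize(database)
    if db.shape[0] == 0:
        return np.empty(0, dtype=np.int64), np.empty(0, dtype=np.float32)
    q = l2_normalize(query)
    sims = db @ q                              # one dot product per stored node
    k = min(k, db.shape[0])
    top = np.argpartition(-sims, k - 1)[:k]    # unordered top-k
    top = top[np.argsort(-sims[top])]          # order them, best first
    return top, sims[top]

# Toy data: 100 stored descriptors, and a query that is a noisy copy of node 42.
rng = np.random.default_rng(0)
database = rng.standard_normal((100, 4096)).astype(np.float32)
query = database[42] + 0.05 * rng.standard_normal(4096).astype(np.float32)

idx, sims = knn_retrieve(query, database, k=3)
print(idx, sims)
```

The returned similarities could serve as the global-descriptor score attached to loop closure hypotheses; how to combine them with the BOW likelihood is left open here, as in the discussion.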

|

Good news, HF-Net now works on OAK cameras. Performance in preliminary tests looks pretty good, slightly faster than SuperPoint with 320×200 input. If the global head is removed, it has acceptable real-time performance even with 640×400 input. I won't provide this cropped model because we definitely want to use the full HF-Net. You can download the converted blob file from my new repository.

Note that I did not use the previous ONNX file for conversion, but took a different route: I converted the TensorFlow model directly to OpenVINO IR and then compiled it into a blob file. I guess this may avoid some model conversion problems, such as the FP16 overflow issue encountered by the SuperPoint model on L2 Norm. Below is the OpenVINO IR visualized in Netron. You can see that in the previous ONNX file L2 Norm was broken down into a series of basic operations, while in OpenVINO IR it is a single NormalizeL2 layer.

I've added the local head part in #1193 and it looks good. It is also easy to add the global head part, but I am a little unclear about your previous definition of GlobalDescriptor. I know how to fill [...]

|

That looks great! Note that #1193 has been in Draft mode for a while; I am just checking whether that is on purpose or whether it is ready to be reviewed/merged.

For GlobalDescriptor: rtabmap/corelib/include/rtabmap/core/GlobalDescriptor.h, lines 54 to 56 in 33b875e

These fields don't have a fixed purpose yet. The CMR loop closure detector is using |

|

I filled the global descriptor. Unfortunately this part of the data still looks wrong. All values are close to 0.015625. This means that a numerical overflow occurred somewhere. I need to debug the model again. My main suspicion is the ReduceSum layer. It's often dangerous in FP16 inference. ReduceSum was also generated when L2 Norm was decomposed in the previous SuperPoint model. I submitted the code first because after debugging the model I only needed to update the blob file. The model may require scaling in some parts. |
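The suspicion above, that a ReduceSum inside a decomposed L2 norm is risky in FP16, can be illustrated with a small NumPy sketch. This only emulates half-precision arithmetic on the host; it is not the OAK/OpenVINO runtime, and the function names and input values are assumptions chosen for the example. FP16 cannot represent values above 65504, so a sum of squares can become `inf` even when every input is modest. Scaling the input before squaring is one common workaround.

```python
import numpy as np

def l2_normalize_fp16_naive(x):
    """Decomposed L2 norm (square, ReduceSum, sqrt, divide), all in float16."""
    x = x.astype(np.float16)
    with np.errstate(over="ignore"):
        sumsq = np.sum(x * x, dtype=np.float16)   # may overflow to inf
    return x / np.sqrt(sumsq), sumsq

def l2_normalize_fp16_scaled(x):
    """Same computation, but rescaled by max |x| first to keep the sum small."""
    x = x.astype(np.float16)
    scale = np.max(np.abs(x))
    if scale == 0:
        return x
    y = x / scale                                  # every |y| is now <= 1
    sumsq = np.sum(y * y, dtype=np.float16)        # at most len(x)
    return y / np.sqrt(sumsq)

# 1024 elements equal to 10: the sum of squares is 102400, above the FP16 max.
x = np.full(1024, 10.0, dtype=np.float32)

naive, naive_sumsq = l2_normalize_fp16_naive(x)
scaled = l2_normalize_fp16_scaled(x)

print("naive sum of squares:", naive_sumsq)
print("naive output (first 3):", naive[:3])
print("scaled output norm:", np.linalg.norm(scaled.astype(np.float32)))
```

The scaled variant still overflows if the vector has more than 65504 elements of equal magnitude, so it is a mitigation under the stated assumptions rather than a general fix.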

|

I have updated the blob files. The real problem with global head is caused by NormalizeL2 in OpenVINO. Elements after intra-normalization are all 1 or -1. This is not data overflow or underflow, but rather a failure to sum along the set dim. I managed to avoid the problem and now all outputs look reasonable. |
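The failure mode described above (a normalization that does not sum along the intended dimension) can be sketched in NumPy. If each element is divided only by its own magnitude, the result is the sign of the input, so every element is 1 or -1. As a purely arithmetic side note, L2-normalizing a 4096-element vector of ±1 values yields magnitudes of exactly 1/64 = 0.015625. The shapes below (64 clusters of 64 dimensions) are hypothetical and chosen only so that the flattened length is 4096; this does not reproduce the actual HF-Net layers or the OpenVINO NormalizeL2 implementation.

```python
import numpy as np

def normalize_l2(x, axis, eps=1e-12):
    """Reference L2 normalization that reduces along `axis`."""
    norm = np.sqrt(np.sum(x * x, axis=axis, keepdims=True))
    return x / np.maximum(norm, eps)

def normalize_l2_no_reduction(x, eps=1e-12):
    """Degenerate case: no summation, each element divided by its own magnitude."""
    return x / np.maximum(np.abs(x), eps)

rng = np.random.default_rng(0)
residuals = rng.standard_normal((64, 64))      # hypothetical (clusters, dims)

# Intended intra-normalization: each row becomes a unit vector.
good = normalize_l2(residuals, axis=1)

# Failed reduction: every element collapses to +1 or -1.
bad = normalize_l2_no_reduction(residuals)

# A final L2 normalization over the flattened 4096 values.
final_bad = normalize_l2(bad.reshape(-1), axis=0)

print("row norms (good):", np.linalg.norm(good, axis=1)[:3])
print("unique |bad| values:", np.unique(np.abs(bad)))
print("unique |final_bad| values:", np.unique(np.abs(final_bad)))
```

A quick check of this kind on the raw model output (are all magnitudes identical?) is a cheap way to tell a broken reduction apart from a genuine overflow, which tends to produce `inf`, `nan`, or zeros instead.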

|

Closing since HF-Net inference has been implemented. Future work will explore the use of global descriptor. |

@matlabbe @cdb0y511

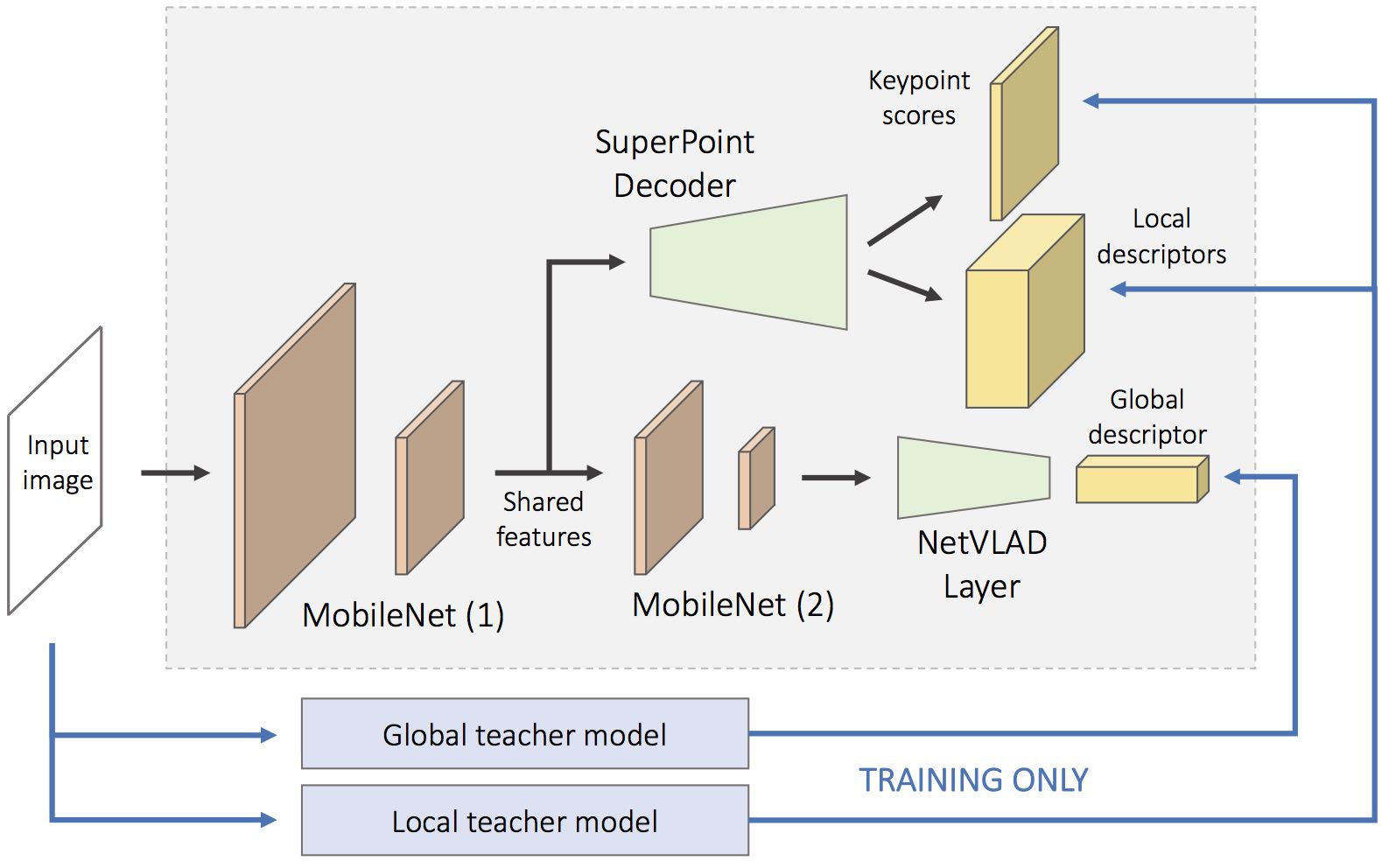

Hi, while looking further into work related to SuperPoint, I noticed that HF-Net could also be added. In addition to SuperPoint-style local features, it provides global descriptors for coarse localization.

RTAB-Map reserves an interface for global descriptor, but does not implement related functions (#1105). Therefore, I am thinking about whether this part can be implemented universally and how to combine it with the existing memory management mechanism of RTAB-Map. My initial feeling is that it can help retrieval, and secondly it may be further used to improve loop closure hypotheses.

The difficulty in integrating HF-Net is that it is implemented in TensorFlow 1. I only have a few old devices with the required environment. So I also converted it to ONNX first. But I'm more curious about whether it will work on OAK. That would be really fun if it could. This model file looks a bit large, I guess it is mainly because of the fully connected layer at the end of the global head. Even if OAK can't run it, I will try to prune it into a MobileNet based SuperPoint implementation. Some people have tried to train SuperPoint based on MobileNet directly but did not achieve good results. If OAK can run it, I will not develop the inference solution on the host for the time being. But if you are interested, you can also develop it. This is an ONNX file that I converted but haven't tested yet.

https://drive.google.com/file/d/1xKJi1RfKZTLQ0JACD4H76HRpxr6kFgtn/view?usp=sharing
