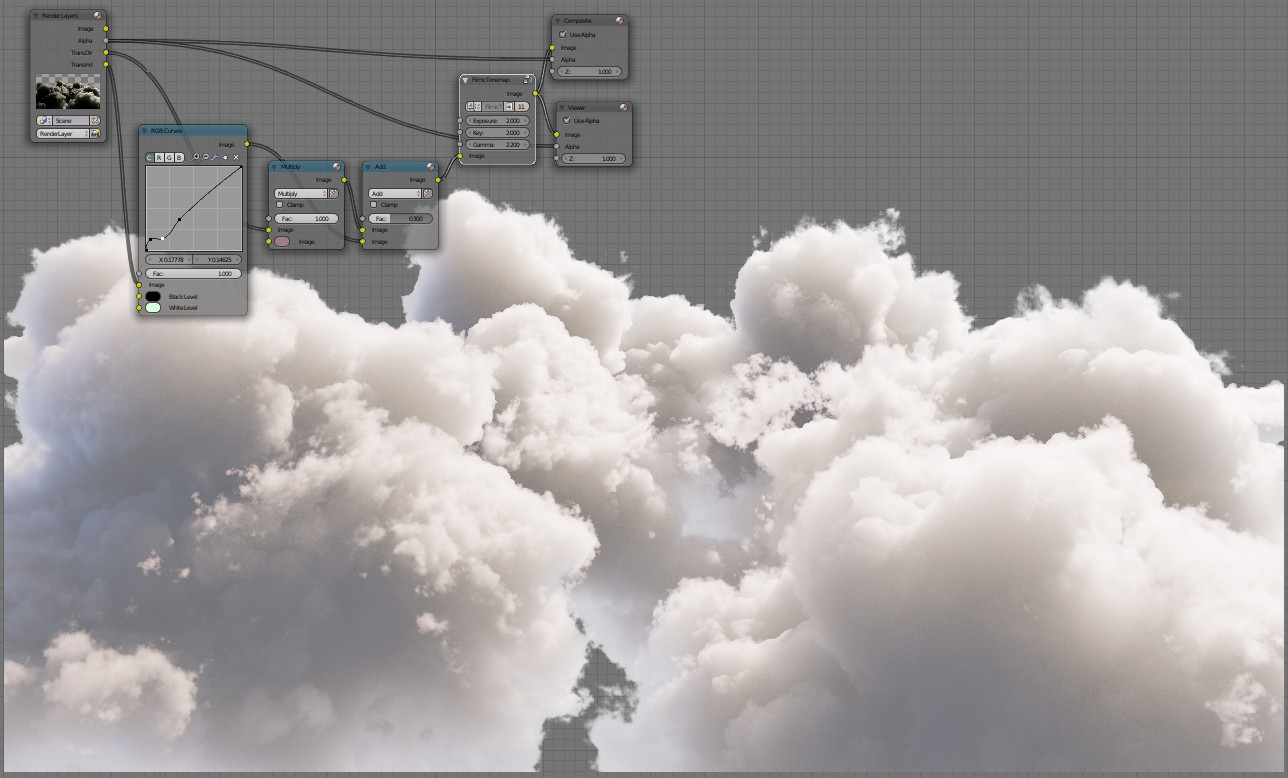

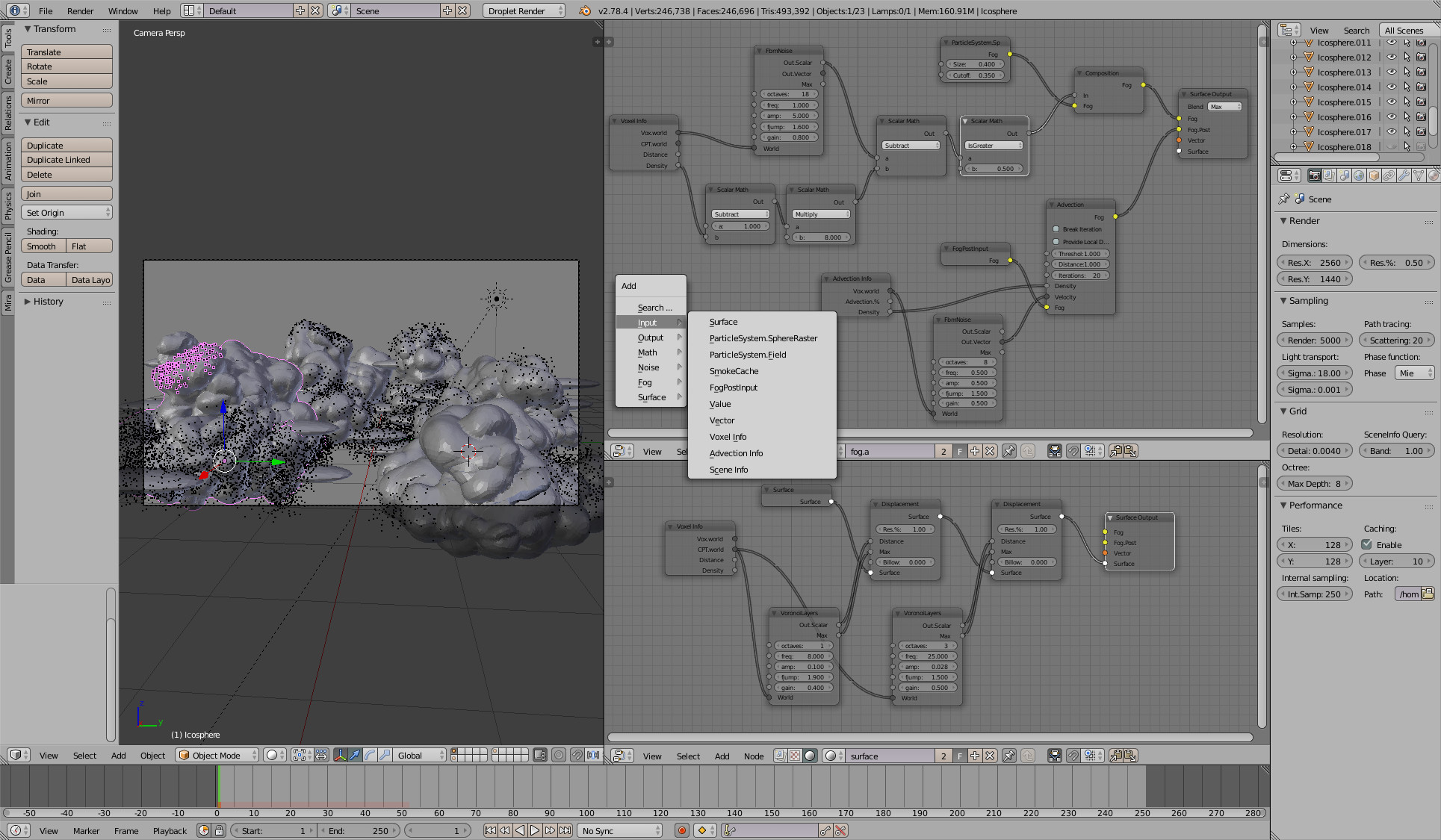

Volumetric cloud modeling and rendering for Blender.

- Node-based approach to implicit surface and fog volume modeling. Initial properties for clouds, fog and velocity fields are modeled with Blender. Surfaces and particle systems are then internally converted, modified and stored sparsely with OpenVDB grids. Nodes for typical surface and volume operations, such as displacement, composition and advection, are provided.

- Physically based cloud rendering. A render engine specifically designed for volumetric rendering. Hybrid code for simultaneous distance field and fog volume sampling. Full global illumination with sky lighting. Mie phase sampling with spectral approximation. Every step from modeling to rendering is highly multithreaded and vectorized. Efficient parallel acceleration is achieved using Intel's TBB library.

- Full Blender integration with seamless data export/import. No temporary files, render targets or additional export steps required. Works with official Blender builds starting from 2.77.
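To illustrate what sampling a forward-peaked phase function involves, here is a minimal sketch that uses the Henyey-Greenstein function, a common analytic stand-in for Mie scattering. This is an assumption made for illustration only: it is not Droplet's actual Mie model, it leaves out the spectral approximation entirely, and the function names and the asymmetry value `g` are hypothetical.

```python
import math
import random


def hg_phase(cos_theta, g):
    """Henyey-Greenstein phase function, normalized over the unit sphere."""
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom * math.sqrt(denom))


def sample_hg_cos_theta(g, xi):
    """Map a uniform sample xi in [0, 1) to a scattering cosine.

    The returned cosine is distributed proportionally to hg_phase,
    so the sample weight phase/pdf is constant.
    """
    if abs(g) < 1e-4:
        # Isotropic limit of the inversion formula below.
        return 2.0 * xi - 1.0
    s = (1.0 - g * g) / (1.0 - g + 2.0 * g * xi)
    return (1.0 + g * g - s * s) / (2.0 * g)


def mean_cosine(g, n=100000, seed=1):
    """Average sampled cosine; for Henyey-Greenstein this converges to g."""
    rng = random.Random(seed)
    return sum(sample_hg_cos_theta(g, rng.random()) for _ in range(n)) / n
```

A value of `g` close to 1 concentrates the samples around the forward direction, which is the qualitative behaviour expected from cloud droplets.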

Being relatively new, the project is very much a work in progress. To achieve the desired high realism, the current focus is on improving the tools for cloud modeling. This also involves a lot of time-consuming testing with different parameters and potential approaches. Documentation and examples will be added later, when the project is considered ready for public testing.

Improvements to the rendering side will follow the modeling progress. The current implementation is a brute-force Monte Carlo path tracer with basic hierarchical and MIS (multiple importance sampling) optimizations. The renderer is currently CPU-only. However, the code is purposely compact and independent of OpenVDB or (optionally) any other libraries, and as such is readily portable to the GPU.
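As a rough illustration of the MIS idea mentioned above, the following sketch combines two sampling strategies for a one-dimensional integral using the balance heuristic. It is a toy example under stated assumptions, not Droplet's renderer code: the integrand, the two sampling densities and all function names are hypothetical.

```python
import math
import random


def balance_heuristic(pdf_self, pdf_other):
    """MIS weight for a sample drawn from the strategy with density pdf_self."""
    total = pdf_self + pdf_other
    return pdf_self / total if total > 0.0 else 0.0


def mis_estimate(f, n=20000, seed=7):
    """Estimate the integral of f over [0, 1] with two combined strategies.

    Strategy A samples uniformly (pdf 1), strategy B samples with pdf 2x.
    One sample is drawn from each strategy per iteration.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        # Strategy A: uniform sample.
        xa = rng.random()
        total += balance_heuristic(1.0, 2.0 * xa) * f(xa) / 1.0
        # Strategy B: inversion of the CDF x^2; 1 - random() keeps x > 0.
        xb = math.sqrt(1.0 - rng.random())
        total += balance_heuristic(2.0 * xb, 1.0) * f(xb) / (2.0 * xb)
    return total / n
```

Calling `mis_estimate(lambda x: x * x)` should return a value close to 1/3. In a path tracer the two strategies would typically be light sampling and phase function sampling, but the weighting works the same way.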

Note that Droplet does not apply any tone mapping or gamma correction. For correct results, these always have to be done manually afterwards. It is advisable to do all testing with Blender started from a terminal. Depending on the resolution and complexity, uncached displacement and advection steps may take a significant amount of time, and until the actual rendering starts, the engine currently provides no feedback other than stdout.
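For readers unsure what the manual tone mapping and gamma correction step amounts to, here is a minimal per-pixel sketch. It assumes linear RGB input and uses the simple Reinhard operator followed by the standard sRGB transfer function; both are choices made for this example rather than anything Droplet prescribes, and the function names and the `exposure` parameter are hypothetical. The same result can usually be achieved with Blender's own compositing or color management tools.

```python
def reinhard(value):
    """Compress a non-negative linear value into the range [0, 1)."""
    return value / (1.0 + value)


def linear_to_srgb(value):
    """Apply the sRGB transfer function to a linear value in [0, 1]."""
    value = min(max(value, 0.0), 1.0)
    if value <= 0.0031308:
        return 12.92 * value
    return 1.055 * value ** (1.0 / 2.4) - 0.055


def display_pixel(rgb, exposure=1.0):
    """Tone map and gamma correct one linear RGB pixel for display."""
    return tuple(
        linear_to_srgb(reinhard(exposure * max(channel, 0.0)))
        for channel in rgb
    )
```

For example, `display_pixel((1.0, 1.0, 1.0))` maps linear white at unit exposure to a mid-to-light grey, since Reinhard first compresses 1.0 to 0.5.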

Only Linux operating systems on x86_64 are currently supported. It should also be possible to build and run Droplet on Windows, although testing there has been less active recently.

- Install or build the required dependencies:

  - openvdb

  - tbb

  - python3.5 + numpy

  - embree (optional, for occlusion testing)

  - cmake

- Create a build directory, for example:

$ mkdir build-release

$ cd build-release

- Droplet uses CMake to configure itself. By default, a release version with SSE3 support will be built. AVX2 or SSE4.2 can be enabled by passing an additional definition. For SSE4.2, for example:

$ cmake -DUSE_SSE4=ON ..

- Build the project by typing:

$ make -j

- Copy or symlink the produced libdroplet.so to the Python library directory, usually /usr/lib/python3.5/site-packages. Create a directory such as 'droplet_render' in Blender's addons location and copy the contents of 'addon' into it. Enable the addon from Blender's settings menu.
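If the Python library directory on your system differs from the usual path given above, a small sketch like the following can report where the running interpreter looks for installed packages. It uses only the standard `sysconfig` module; the helper name is hypothetical, and the output is only meaningful if you run it with the same Python interpreter that Blender uses.

```python
import sysconfig


def python_library_dirs():
    """Return the site-packages directories of the running interpreter."""
    paths = sysconfig.get_paths()
    # purelib holds pure Python packages, platlib holds compiled extensions;
    # on many systems both point to the same directory.
    return [paths["purelib"], paths["platlib"]]


if __name__ == "__main__":
    for directory in python_library_dirs():
        print(directory)
```

Running it from Blender's Python console is one way to make sure the reported directories belong to the right interpreter.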