This package includes fastfood and dense transformation wrappers for PyTorch modules, primarily to reproduce results from Li, Chunyuan, et al. "Measuring the intrinsic dimension of objective landscapes." arXiv preprint arXiv:1804.08838 (2018); see below for details.
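
As a rough illustration of the dense transformation, the paper reparameterizes the full weight vector as `theta = theta_0 + P @ theta_d`, where `theta_0` is the frozen initialization, `P` is a fixed random matrix with unit-norm columns, and only the low-dimensional `theta_d` is trained. The sketch below uses plain Python lists rather than this package's wrappers; `make_dense_projection` and `project` are hypothetical helper names, not part of the `intrinsic` API.

```python
import random


def make_dense_projection(full_dim, intrinsic_dim, seed=0):
    """Build a fixed random matrix P (full_dim x intrinsic_dim) with unit-norm columns."""
    rng = random.Random(seed)
    columns = []
    for _ in range(intrinsic_dim):
        col = [rng.gauss(0.0, 1.0) for _ in range(full_dim)]
        norm = sum(v * v for v in col) ** 0.5
        columns.append([v / norm for v in col])
    # Store row-major: P[i][j] maps intrinsic coordinate j to full parameter i.
    return [[columns[j][i] for j in range(intrinsic_dim)] for i in range(full_dim)]


def project(theta_0, P, theta_d):
    """Return theta = theta_0 + P @ theta_d."""
    return [t0 + sum(p * z for p, z in zip(row, theta_d))
            for t0, row in zip(theta_0, P)]


# Toy example: 5 "network parameters" controlled by 2 trainable coordinates.
theta_0 = [0.5, -1.0, 2.0, 0.0, 1.5]
P = make_dense_projection(len(theta_0), intrinsic_dim=2)

theta_at_init = project(theta_0, P, [0.0, 0.0])   # theta_d starts at zero
theta_moved = project(theta_0, P, [0.3, -0.2])    # after a hypothetical update
```

Because `theta_d` starts at zero, the reparameterized network initially matches the original initialization exactly; training then moves the weights only within the random subspace spanned by the columns of `P`.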


All contributions are welcome! Please raise an issue to report a bug or request a feature, or open a pull request!


Give this repo a star! ⭐

    pip install intrinsic-dimensionality

    import os

    # DEVICE_NUM is a placeholder for your GPU index; environment values must be strings
    os.environ["CUDA_VISIBLE_DEVICES"] = str(DEVICE_NUM)

    import torch
    from torch import nn
    import torchvision.models as models
    from intrinsic import FastFoodWrap


    class Classifier(nn.Module):
        """Global max pooling followed by a linear classification layer."""

        def __init__(self, input_dim, n_classes):
            super().__init__()
            self.fc = nn.Linear(input_dim, n_classes)
            self.maxpool = nn.AdaptiveMaxPool2d(1)

        def forward(self, x):
            x = self.maxpool(x)
            x = x.reshape(x.size(0), -1)
            x = self.fc(x)
            return x


    def get_resnet(encoder_name, num_classes, pretrained=False):
        assert encoder_name in ["resnet18", "resnet50"], "{} is a wrong encoder name!".format(encoder_name)
        if encoder_name == "resnet18":
            model = models.resnet18(pretrained=pretrained)
            latent_dim = 512
        else:
            model = models.resnet50(pretrained=pretrained)
            latent_dim = 2048
        # Drop the original pooling and fc layers, then attach the custom classifier head
        children = list(model.children())[:-2] + [Classifier(latent_dim, num_classes)]
        model = torch.nn.Sequential(*children)
        return model


    # Get model and wrap it in fastfood
    model = get_resnet("resnet18", num_classes=YOUR_NUMBER_OF_CLASSES).cuda()
    model = FastFoodWrap(model, intrinsic_dimension=100, device=DEVICE_NUM)

The full thread about the reproducibility results is available here. Note that some hyperparameters were not listed in the paper; I raised issues on Uber's GitHub repo here.

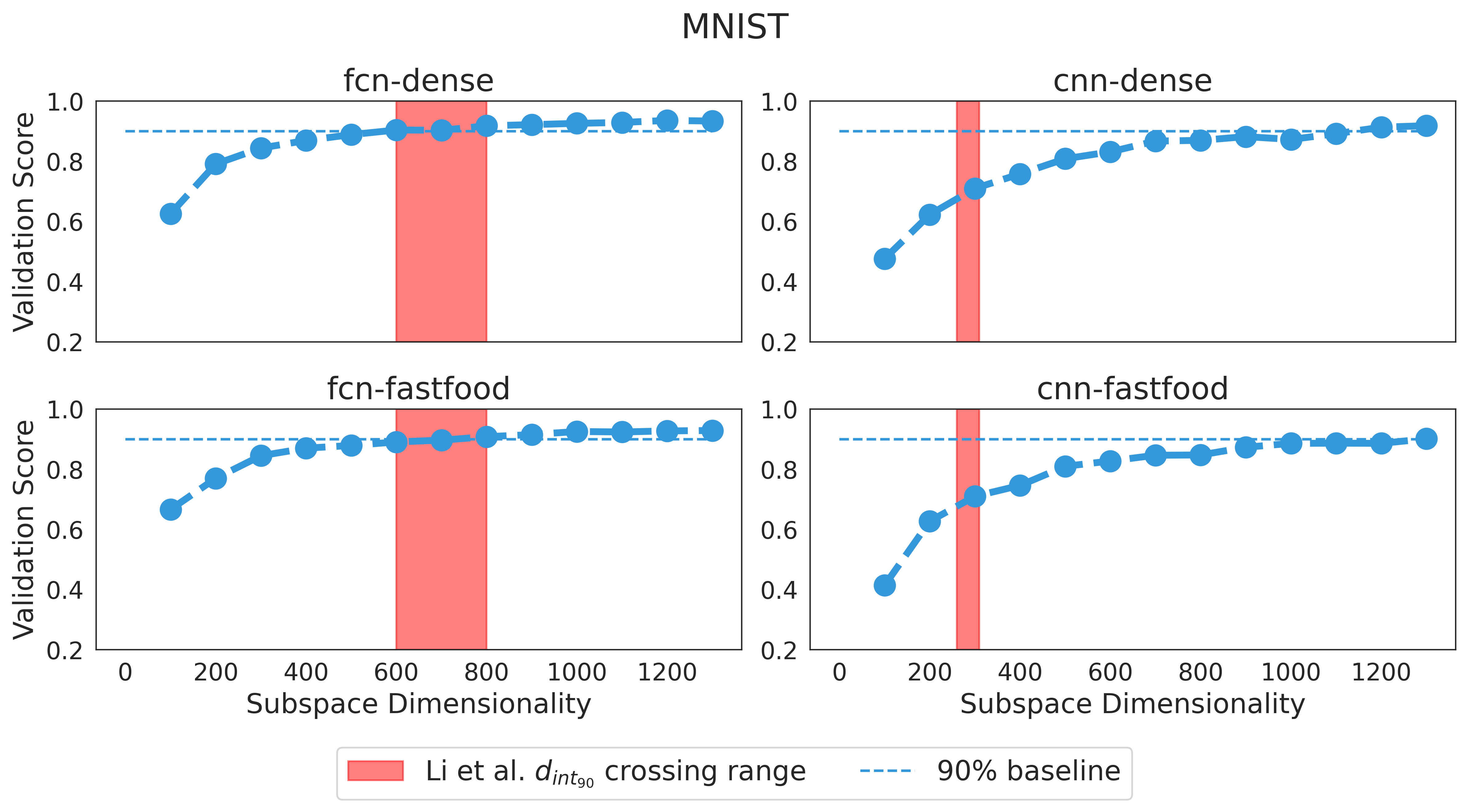

I am able to reproduce their MNIST results with a learning rate of 0.0003 and a batch size of 32 for both the dense and fastfood transformations using the FCN (fcn-dense, fcn-fastfood). However, I could not reproduce the LeNet results (cnn-dense, cnn-fastfood).
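
For context on how the fastfood variants differ from the dense ones: the Fastfood transform replaces the dense random matrix with a product of diagonal matrices, a permutation, and Walsh-Hadamard transforms, which avoids storing a full projection matrix. The snippet below is a minimal pure-Python sketch of the Walsh-Hadamard building block only; the function name `fwht` is an assumption for illustration and this is not the code used inside `FastFoodWrap`.

```python
def fwht(values):
    """Unnormalized fast Walsh-Hadamard transform.

    The input length must be a power of two. Runs in O(n log n) additions
    instead of the O(n^2) cost of multiplying by an explicit Hadamard matrix.
    """
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    out = list(values)
    h = 1
    while h < n:
        # Combine pairs of entries that are h apart (butterfly step).
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = out[i], out[i + h]
                out[i], out[i + h] = a + b, a - b
        h *= 2
    return out


x = [1, 2, 3, 4]
transformed = fwht(x)        # [10, -2, -4, 0]
restored = fwht(transformed)  # applying it twice scales the input by n: [4, 8, 12, 16]
```

Applying the transform twice returns the input scaled by its length, which is a quick sanity check that the butterfly steps are wired correctly.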

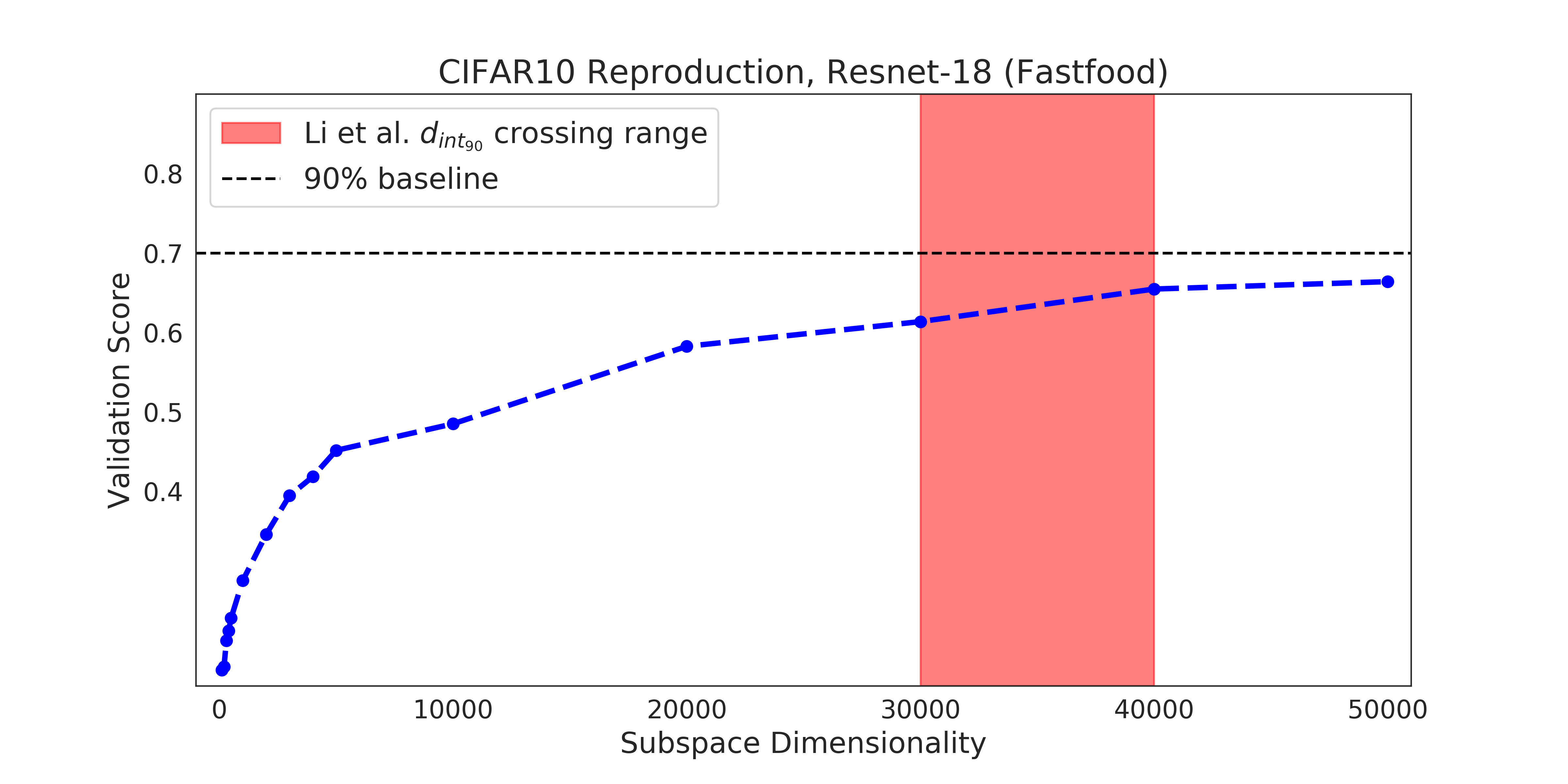

For CIFAR-10, results appear similar to the paper's even though a far larger network is used here: ResNet-18 (about 11 million parameters) versus the 20-layer ResNet with 280k parameters used in the paper. The FCN results in the paper's appendix (Fig. S7) suggest that some variation is to be expected.
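
When comparing runs like these against the paper, one useful summary is the paper's d_int90: the smallest subspace dimension at which performance reaches 90% of the full-model baseline. A minimal sketch of that selection step follows; the helper name `d_int90` and the dictionary layout are assumptions for illustration, and the numbers are placeholders rather than measured results from this repo or the paper.

```python
def d_int90(accuracy_by_dim, baseline_accuracy, threshold=0.9):
    """Return the smallest dimension whose accuracy reaches threshold * baseline.

    accuracy_by_dim maps intrinsic dimension -> validation accuracy.
    Returns None if no swept dimension reaches the target.
    """
    target = threshold * baseline_accuracy
    reached = [d for d, acc in accuracy_by_dim.items() if acc >= target]
    return min(reached) if reached else None


# Placeholder values for illustration only; these are NOT measured results.
sweep = {100: 0.52, 300: 0.78, 500: 0.86, 750: 0.90, 1000: 0.93}
print(d_int90(sweep, baseline_accuracy=0.98))  # prints 750
```

In practice the sweep would be filled in by training one wrapped model per value of `intrinsic_dimension` and recording its validation accuracy.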

    @misc{jgamper2020intrinsic,
      title = "Intrinsic-dimensionality Pytorch",
      author = "Gamper, Jevgenij",
      year = "2020",
      url = "https://github.com/jgamper/intrinsic-dimensionality"
    }

    @article{li2018measuring,
      title={Measuring the intrinsic dimension of objective landscapes},
      author={Li, Chunyuan and Farkhoor, Heerad and Liu, Rosanne and Yosinski, Jason},
      journal={arXiv preprint arXiv:1804.08838},
      year={2018}
    }