This repository contains the Unity project and scenes, along with the Python3 scripts, for the experiments described in the article:

“Neural interface instrumented virtual reality headsets: Toward next-generation immersive applications,” IEEE Systems, Man, and Cybernetics Magazine, vol. 6, no. 3, pp. 20–28, 2020.

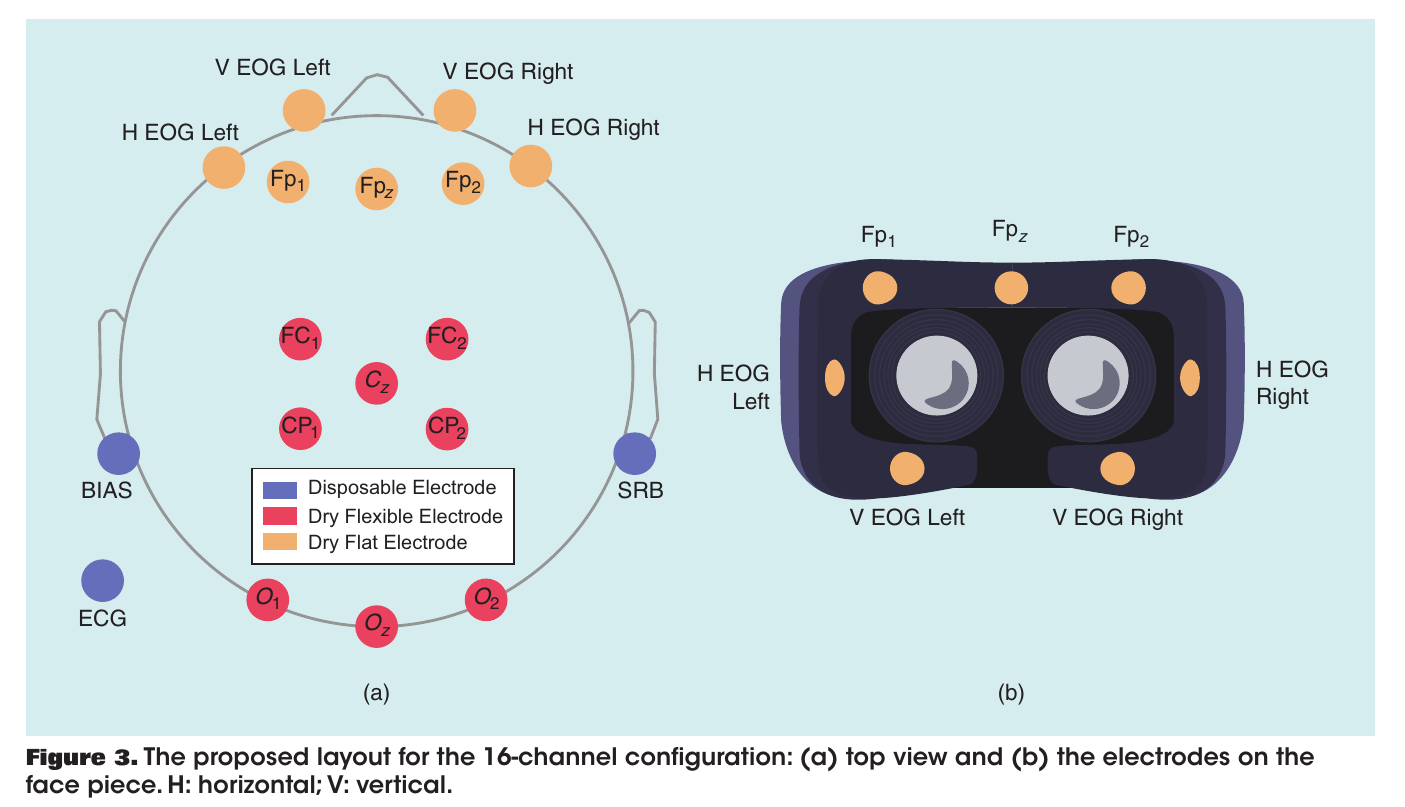

The article describes the development of a system that combines an open-source, wireless, and multimodal brain-machine interface (BMI) with an off-the-shelf head-mounted display (HMD) for VR/AR applications. The proposed system can measure electroencephalography (EEG), electrooculography (EOG), electrocardiography (ECG) and facial electromyography (EMG) signals in a portable, wireless and non-invasive way.

The BMI-HMD system (Figure 3) consists of three main parts:

- The biopotential amplifier module, based on the OpenBCI Cyton board (Figure 1a and 1b)

- The HMD, an Oculus Development Kit 2 (Figure 2)

- Dry electrodes (Figure 1c and 1d)

Three validation scenarios were developed to acquire EEG, EOG and facial EMG signals. ECG is also recorded in all three scenarios.

The EEG scenario consists of three 30-second stages in which the user is:

- resting with open eyes

- staring at a sphere blinking at 12.5 Hz to evoke steady-state visual evoked potentials (SSVEP)

- resting with closed eyes
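As an illustration of how a recording from these stages might be inspected, the following is a minimal sketch that splits a signal into the three 30-second stages and compares spectral power around 12.5 Hz. It is not the analysis used in the article. It assumes a 250 Hz sampling rate and back-to-back stages in the order listed above, and it runs on a synthetic signal. The helper names `stage_slices` and `band_power` are hypothetical.

```python
import numpy as np

FS = 250.0        # assumed sampling rate in Hz; adjust to your recording
STAGE_S = 30      # stage length in seconds, from the protocol above
SSVEP_HZ = 12.5   # blinking frequency of the sphere

def stage_slices(fs=FS, stage_s=STAGE_S):
    """Return sample slices for three consecutive stages (assumed order)."""
    n = int(fs * stage_s)
    names = ("eyes_open", "ssvep", "eyes_closed")
    return {name: slice(i * n, (i + 1) * n) for i, name in enumerate(names)}

def band_power(x, fs, f0, half_width=0.5):
    """Mean periodogram power within f0 +/- half_width Hz."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / x.size
    mask = (freqs >= f0 - half_width) & (freqs <= f0 + half_width)
    return power[mask].mean()

# Synthetic stand-in for one EEG channel: white noise, plus a
# 12.5 Hz sinusoid during the middle (SSVEP) stage only.
rng = np.random.default_rng(0)
slices = stage_slices()
n_stage = int(FS * STAGE_S)
eeg = rng.normal(0.0, 1.0, 3 * n_stage)
t = np.arange(n_stage) / FS
eeg[slices["ssvep"]] += 0.5 * np.sin(2 * np.pi * SSVEP_HZ * t)

powers = {name: band_power(eeg[s], FS, SSVEP_HZ) for name, s in slices.items()}
for name, p in powers.items():
    print(f"{name}: {p:.2f}")
```

With real data, `eeg` would be replaced by one recorded EEG channel, and the stage boundaries would come from the markers saved during acquisition rather than from fixed offsets.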

In the EOG scenario, the user is asked to follow a target for approximately 5 minutes. The target moves from the center of the field of view to one of eight positions: right, right-up, up, left-up, left, left-down, down, and right-down.
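The eight positions can be represented as horizontal and vertical offsets from the center, which is convenient when labelling EOG trials. The sketch below assumes the positions are evenly spaced at 45-degree steps on a circle around the center. The source does not specify this geometry, and the `target_offset` helper and its `radius` parameter are hypothetical.

```python
import math

# Direction names in the order listed above; assumed to be 45 degrees
# apart, counterclockwise, starting from "right".
DIRECTIONS = [
    "right", "right-up", "up", "left-up",
    "left", "left-down", "down", "right-down",
]

def target_offset(direction, radius=1.0):
    """Return the (x, y) offset of a target position from the center."""
    angle = math.radians(45 * DIRECTIONS.index(direction))
    x = radius * math.cos(angle)
    y = radius * math.sin(angle)
    return round(x, 6), round(y, 6)

for name in DIRECTIONS:
    print(name, target_offset(name))
```

The sign of `x` then indicates the expected horizontal eye movement and the sign of `y` the vertical one, with diagonal positions having both components.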

Facial EMG signals can be used to detect different facial expressions. During this 5-minute scenario, the user is presented with one of four cues, each indicating which facial expression to perform.

Besides Unity and Python3, the experiments require MuLES for the acquisition and synchronization of physiological signals.

- Run MuLES, then select the device to stream and set the port to 30000.

- Click Play in the Unity scene under evaluation.

- Run the Python3 script that corresponds to the evaluation scenario.