
Deployments

By default, k3d adds the cluster to the ~/.kube/config file.

We can select the default cluster with

> kubectl config use-context k3d-<cluster-name>

or by setting the KUBECONFIG environment variable:

> export KUBECONFIG=$(k3d kubeconfig write <cluster-name>)

k3d provides some commands to manage kubeconfig files:

Get the kubeconfig from a cluster (for example, dev):

> k3d kubeconfig get <cluster-name>

Create a kubeconfig file (for the dev cluster, $HOME/.k3d/kubeconfig-dev.yaml):

> k3d kubeconfig write <cluster-name>

Get the kubeconfig from one or more clusters and merge it into a file in $HOME/.k3d or another file:

> k3d kubeconfig merge ...

Deploy the Kubernetes dashboard with:

> kubectl config use-context k3d-<cluster-name>

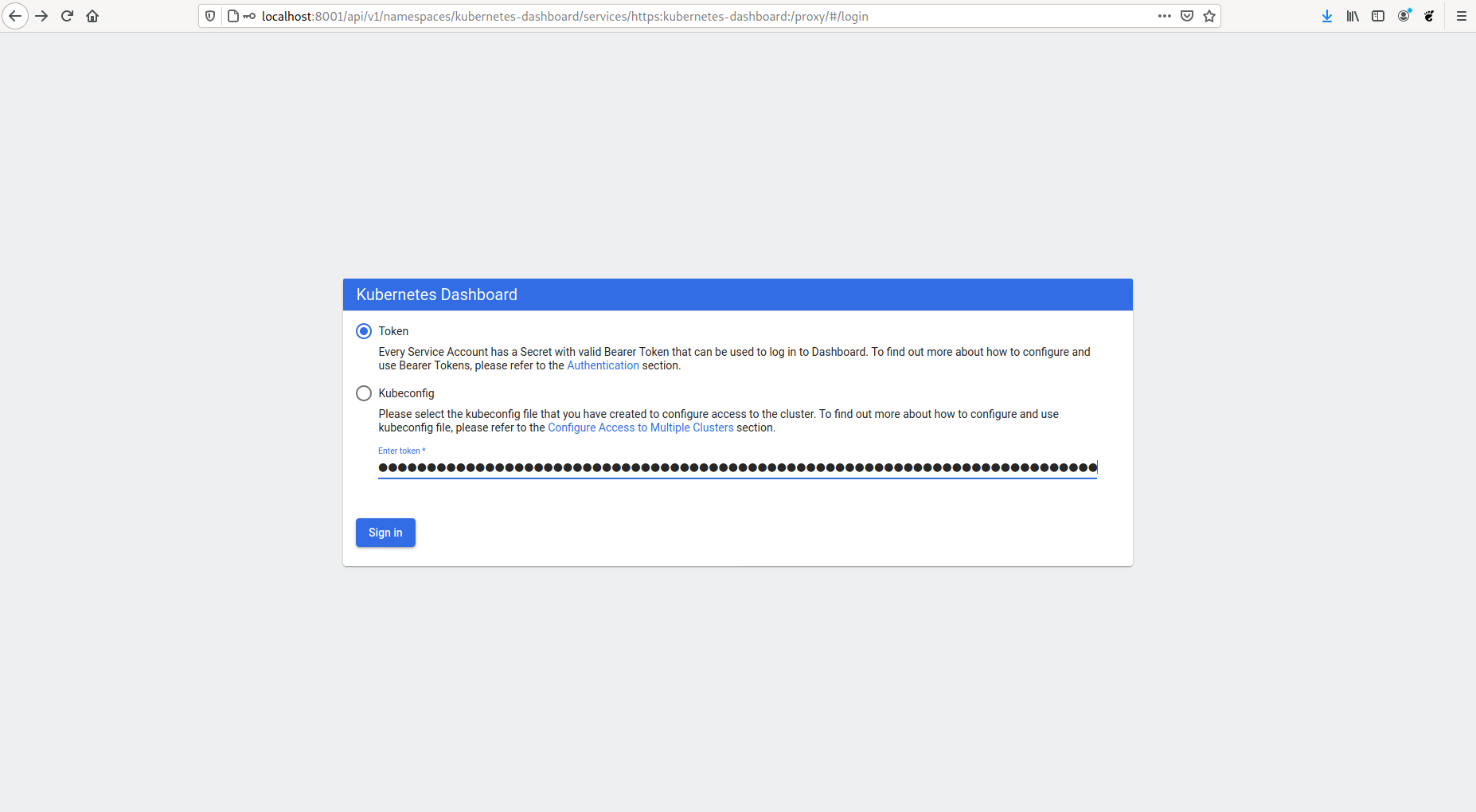

> kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

Now we need to create a dashboard account and bind it to the cluster-admin role:

> kubectl create serviceaccount dashboard-admin-sa

> kubectl create clusterrolebinding dashboard-admin-sa --clusterrole=cluster-admin --serviceaccount=default:dashboard-admin-sa

After creating this account, get its token with:

> kubectl describe secret $(kubectl get secrets | grep dashboard-admin-sa | awk '{ print $1 }')

Name:         dashboard-admin-sa-token-bcf79
Namespace:    default
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: dashboard-admin-sa
              kubernetes.io/service-account.uid: 96418a0c-60bd-4eab-aff9-4df4c6c46408
Type:         kubernetes.io/service-account-token

Data
====
ca.crt:     570 bytes
namespace:  7 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IjNDVFdKdVBZNndaVk5RWkh6dUxCcVRJVGo4RlQwUjFpWHg4emltTXlxRGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRhc2hib2FyZC1hZG1pbi1zYS10b2tlbi1iY2Y3OSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tc2EiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5NjQxOGEwYy02MGJkLTRlYWItYWZmOS00ZGY0YzZjNDY0MDgiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkYXNoYm9hcmQtYWRtaW4tc2EifQ.sfnBn4BWTpMK8_jd7EL-G2HvWSE7unW1lqsQ27DMT1D0WpuOQ-o1cEkrEqXFvXIYW8b7ciVcuNHhtQuWswmPbfQ6C8X_d1vbdpXoopVLPvkuHpFTbNMKtagBWJQlY1IepnCP_n4Q6neO82tjJ4uD_zC86RZ9-MebrVYNU5mjGtJ7XygH3c577wqBeIh1YgOvhY_K62QY3FJOHsX1_nTdKF4vphnzQjdIXhkpdCbzYuhvAmg1S7KOS6XFLOH9ytc_elY8k4T7w1UnmxmNPUIQo2fD4hQI-VqT42LruE5CXsrqPxml1aFz-FOID3049m7ZpQez70Ro3n73eHnKSLrDdA

Now execute:

> kubectl proxy

Starting to serve on 127.0.0.1:8001

Now open the URL http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login and use the token to log in.

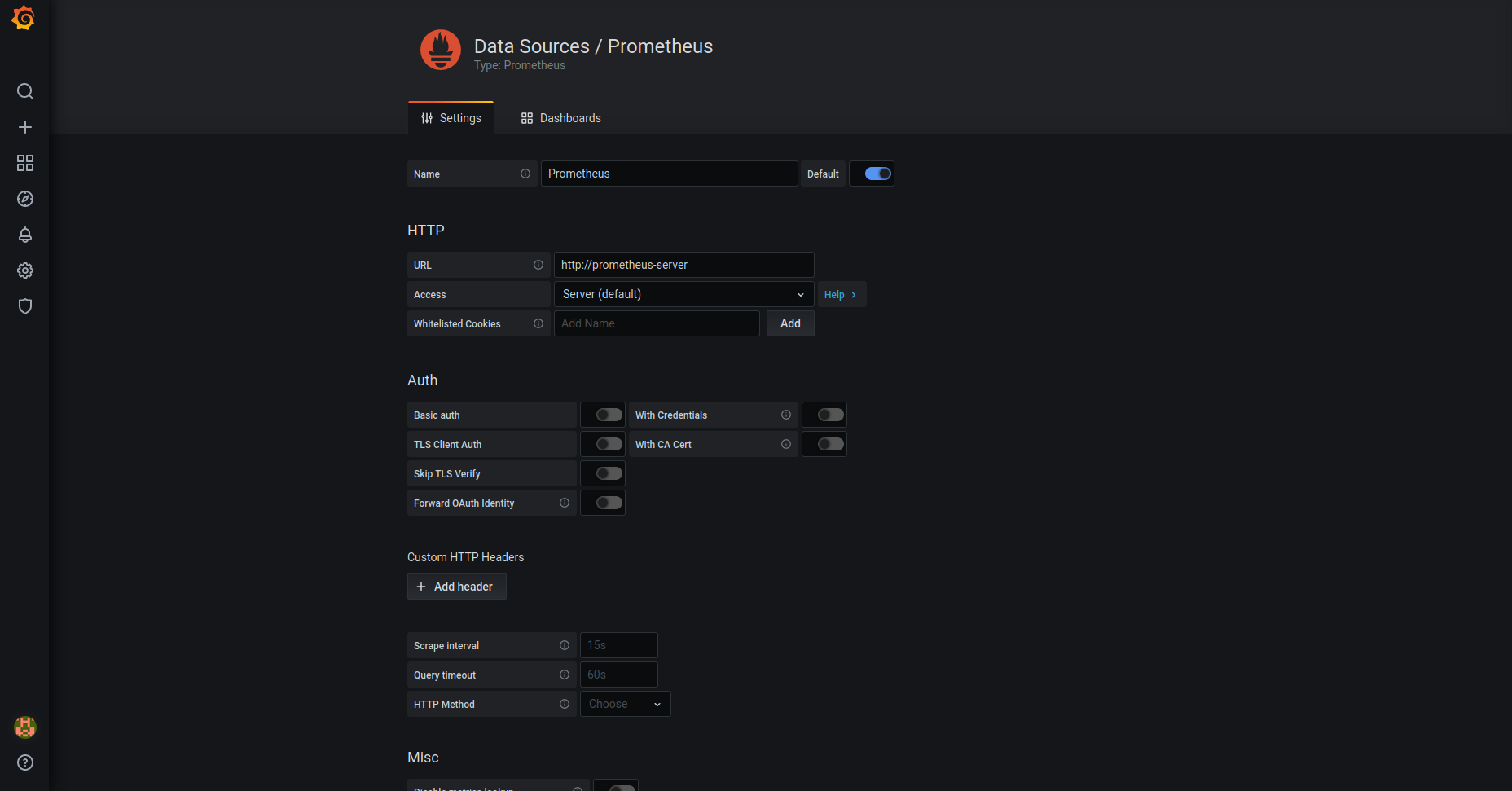
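Before pasting a token into the login form, it can help to confirm which service account it belongs to. A service account token is a JWT, so its payload can be decoded locally. The following is a minimal sketch, assuming a `base64` that accepts `--decode`; the function name `decode_jwt_payload` is hypothetical and not part of kubectl or k3d.

```shell
# decode_jwt_payload (hypothetical helper): print the JSON payload of a JWT.
# A JWT is three base64url fields joined by dots; the payload is the second.
decode_jwt_payload() {
  # Take field 2 and map the base64url alphabet back to standard base64.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url strips the trailing '=' padding, so restore it.
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 --decode
  echo
}
```

For example, `decode_jwt_payload "<token>"` prints the token's claims, where the `sub` claim names the service account the token was issued for.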

First, deploy Prometheus and Grafana and create an ingress entry for Grafana. You can also create another ingress for Prometheus if you need one.

> helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

> helm repo add stable https://charts.helm.sh/stable

> helm repo update

> helm install --namespace prometheus --create-namespace prometheus prometheus-community/prometheus

> helm install --namespace prometheus --create-namespace grafana stable/grafana --set sidecar.datasources.enabled=true --set sidecar.dashboards.enabled=true --set sidecar.datasources.label=grafana_datasource --set sidecar.dashboards.label=grafana_dashboard

> cat <<EOF | kubectl create -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  namespace: prometheus
  annotations:
    ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: grafana.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: grafana
            port:
              number: 80
EOF

Once all is installed, we can retrieve the Grafana credentials to log in with the admin user.

> kubectl get secret --namespace prometheus grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

Now we can access Grafana and configure Prometheus as a data source for cluster metrics.
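Because Grafana was installed with the datasource sidecar enabled and `sidecar.datasources.label=grafana_datasource`, the data source can likely also be provisioned from a labelled ConfigMap instead of through the UI. The sketch below only writes a manifest to a local file. The file name, the ConfigMap name, and the Prometheus service URL are assumptions, not values from this guide; check the real service name with `kubectl -n prometheus get svc` before applying.

```shell
# Write a ConfigMap manifest that the Grafana datasource sidecar can pick up.
# Assumptions: the file name, the ConfigMap name, and the Prometheus URL
# (a service called prometheus-server in the prometheus namespace).
cat > grafana-prometheus-datasource.yml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-datasource
  namespace: prometheus
  labels:
    # Must match the label passed via sidecar.datasources.label
    grafana_datasource: "1"
data:
  prometheus-datasource.yaml: |
    apiVersion: 1
    datasources:
      - name: Prometheus
        type: prometheus
        access: proxy
        url: http://prometheus-server.prometheus.svc.cluster.local
        isDefault: true
EOF
```

The file can then be applied with `kubectl apply -f grafana-prometheus-datasource.yml`.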

> kubectl apply -f https://raw.githubusercontent.com/portainer/portainer-k8s/master/portainer.yaml

Once Portainer is deployed, you can access it through the load balancer:

> kubectl -n portainer get svc

NAME        TYPE           CLUSTER-IP      EXTERNAL-IP    PORT(S)                         AGE
portainer   LoadBalancer   10.43.243.166   192.168.96.2   9000:31563/TCP,8000:30316/TCP   9m7s

> kubectl config use-context k3d-k3d-cluster

> kubectl create deployment nginx --image=nginx

> kubectl create service clusterip nginx --tcp=80:80

> kubectl apply -f nginx-ingress.yml

nginx-ingress.yml

# apiVersion: networking.k8s.io/v1beta1 # for k3s < v1.19
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  annotations:
    ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

Testing deployments:

> curl localhost:4080

> curl -k https://localhost:4443
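The ingress may take a few seconds to start answering after it is applied, so a first `curl` can fail even when the deployment is fine. A small retry wrapper is one way to handle that. This is a sketch under assumptions: `retry` and the `RETRY_DELAY` variable are hypothetical names introduced here, not part of curl or kubectl.

```shell
# retry (hypothetical helper): run a command up to N times until it succeeds.
# Usage: retry <attempts> <command> [args...]
# RETRY_DELAY sets the pause between attempts in seconds (default 1).
retry() {
  attempts=$1
  shift
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    # Pause only when another attempt remains.
    if [ "$i" -lt "$attempts" ]; then
      sleep "${RETRY_DELAY:-1}"
    fi
    i=$((i + 1))
  done
  return 1
}
```

For example, `retry 30 curl -fsS localhost:4080` keeps trying for about half a minute before giving up.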

> kubectl get po --all-namespaces -o wide

NAMESPACE     NAME                                      READY   STATUS      RESTARTS   AGE   IP          NODE                       NOMINATED NODE   READINESS GATES
kube-system   metrics-server-86cbb8457f-5bpzr           1/1     Running     0          78m   10.42.0.3   k3d-dev-cluster-server-0   <none>           <none>
kube-system   local-path-provisioner-7c458769fb-hd2cc   1/1     Running     0          78m   10.42.1.3   k3d-dev-cluster-agent-0    <none>           <none>
kube-system   helm-install-traefik-4qh5z                0/1     Completed   0          78m   10.42.0.2   k3d-dev-cluster-server-0   <none>           <none>
kube-system   coredns-854c77959c-jmp94                  1/1     Running     0          78m   10.42.1.2   k3d-dev-cluster-agent-0    <none>           <none>
kube-system   svclb-traefik-6ch8f                       2/2     Running     0          78m   10.42.0.4   k3d-dev-cluster-server-0   <none>           <none>
kube-system   svclb-traefik-9tmk4                       2/2     Running     0          78m   10.42.1.4   k3d-dev-cluster-agent-0    <none>           <none>
kube-system   svclb-traefik-h8vgj                       2/2     Running     0          78m   10.42.2.3   k3d-dev-cluster-agent-1    <none>           <none>
kube-system   traefik-6f9cbd9bd4-6bjp4                  1/1     Running     0          78m   10.42.2.2   k3d-dev-cluster-agent-1    <none>           <none>
default       nginx-6799fc88d8-vcjp5                    1/1     Running     0          29m   10.42.2.4   k3d-dev-cluster-agent-1    <none>           <none>
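To see how pods are spread across nodes, which is useful for comparing the cluster before and after scaling, the NODE column of this output can be tallied. The sketch below assumes the column layout shown above, where NODE is the eighth whitespace-separated field; newer kubectl versions may print RESTARTS as several words, which would shift the columns. The function name `pods_per_node` is hypothetical.

```shell
# pods_per_node (hypothetical helper): count pods per node.
# Reads `kubectl get po --all-namespaces -o wide` output on stdin.
# Assumes NODE is the 8th whitespace-separated column, as in the listing above.
pods_per_node() {
  # Skip the header row, count rows per node, and sort for stable output.
  awk 'NR > 1 { count[$8]++ } END { for (node in count) print node, count[node] }' | sort
}
```

For example: `kubectl get po --all-namespaces -o wide | pods_per_node`.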

> kubectl scale deployment nginx --replicas 4

> kubectl get po --all-namespaces -o wide

persistence-app.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/tmp/k3dvol"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo
spec:
  selector:
    matchLabels:
      app: echo
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: echo
    spec:
      volumes:
        - name: task-pv-storage
          persistentVolumeClaim:
            claimName: task-pv-claim
      containers:
      - image: busybox
        name: echo
        volumeMounts:
          - mountPath: "/data"
            name: task-pv-storage
        command: ["ping", "127.0.0.1"]

> kubectl apply -f persistence-app.yml

> kubectl get pv

NAME             CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                   STORAGECLASS   REASON   AGE
task-pv-volume   1Gi        RWO            Retain           Bound    default/task-pv-claim   manual                  2m54s

> kubectl get pvc

NAME            STATUS   VOLUME           CAPACITY   ACCESS MODES   STORAGECLASS   AGE
task-pv-claim   Bound    task-pv-volume   1Gi        RWO            manual         11s

> kubectl get pods

NAME                   READY   STATUS    RESTARTS   AGE
echo-58fd7d9b6-x4rxj   1/1     Running   0          16s