Merge pull request #499 from testsigmahq/idea-1825

Added 3 New FAQs & Updated Mobile Debugger Documentations

Showing 4 changed files with 270 additions and 5 deletions.

142 changes: 142 additions & 0 deletions

src/pages/docs/FAQs/web-apps/failure-to-link-text-capture.md

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,142 @@ | ||

| --- | ||

| title: "Test Case Failures Due to Link Text Capture Issues" | ||

| pagetitle: "Resolving Test Case Failures Due to Link Text Capture Issues" | ||

| metadesc: "Test Case Failures due to link text capture issues? Learn how to fix mismatches between UI and HTML text in automated tests to avoid errors." | ||

| noindex: false | ||

| order: 24.17 | ||

| page_id: "test-case-failures-due-to-link-text-capture-issues" | ||

| search_keyword: "" | ||

| warning: false | ||

| contextual_links: | ||

| - type: section | ||

| name: "Contents" | ||

| - type: link | ||

| name: "Prerequisites" | ||

| url: "#prerequisites" | ||

| - type: link | ||

| name: "Identifying Link Text Capture Issues" | ||

| url: "#identifying-link-text-capture-issues" | ||

| - type: link | ||

| name: "Changing Element Details" | ||

| url: "#changing-element-details" | ||

| - type: link | ||

| name: "Dealing with UI and HTML Text Mismatches" | ||

| url: "#dealing-with-ui-and-html-text-mismatches" | ||

| --- | ||

|

|

||

| --- | ||

|

|

||

| Automated tests often fail when the link text the recorder captures from the HTML differs from the text shown in the UI, for example uppercase in the UI and lowercase in the HTML. This guide helps you identify the issue and adjust the element settings so that your tests locate the correct elements. | ||

|

|

||

| --- | ||

|

|

||

| ## **Prerequisites** | ||

|

|

||

| Before you proceed, ensure you know how to create or update an [Element](https://testsigma.com/docs/elements/web-apps/create-manually/) and a [Test Case](https://testsigma.com/docs/test-cases/manage/add-edit-delete/). | ||

|

|

||

| --- | ||

|

|

||

| ## **Identifying Link Text Capture Issues** | ||

|

|

||

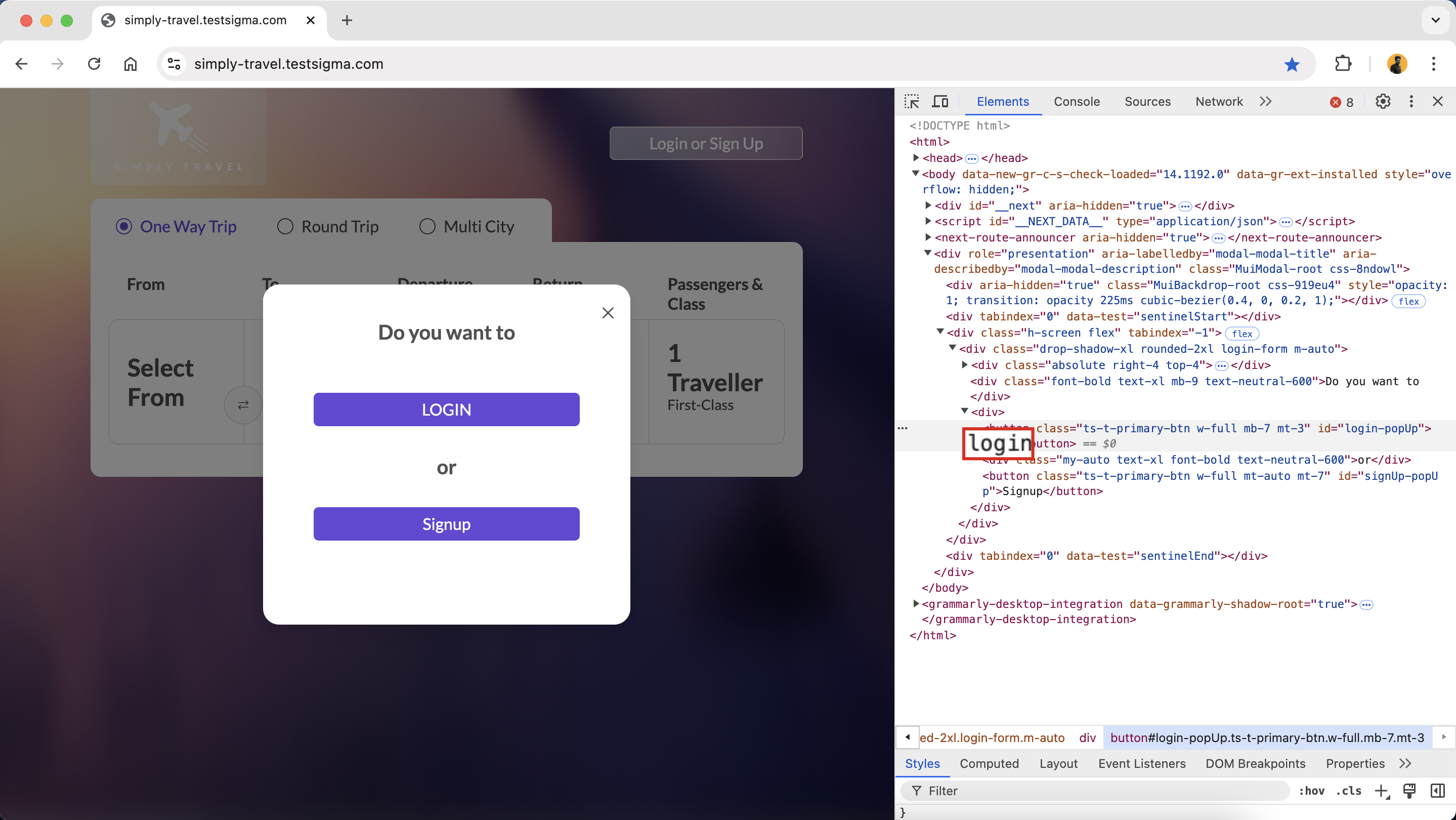

| - Check if the recorder captures link text from the HTML instead of the visible text in the UI. | ||

| - Compare the captured text with what you see in the UI to spot differences.  | ||

|

|

||

| <style> | ||

| .example-container { | ||

| border: 1px solid #ccc; | ||

| border-radius: 4px; | ||

| padding: 0.5em; | ||

| margin: 0.5em 0; | ||

| background-color: #f9f9f9; | ||

| } | ||

| .example-title { | ||

| color: #004d00; | ||

| font-weight: bold; | ||

| display: flex; | ||

| align-items: center; | ||

| } | ||

| .example-title span { | ||

| margin-right: 5px; | ||

| } | ||

| .example-list { | ||

| list-style: none; | ||

| padding: 0; | ||

| } | ||

| .example-list li { | ||

| margin-bottom: 0.5em; | ||

| } | ||

| </style> | ||

|

|

||

| <div class="example-container"> | ||

| <div class="example-title"> | ||

| <span>ℹ️</span>Example: | ||

| </div> | ||

| <ul class="example-list"> | ||

| <li><p>If the UI displays <strong>LOGIN</strong> but the HTML shows <strong>login</strong>, the recorder may capture the lowercase version, which could lead to a test case failure.</p></li> | ||

| </ul> | ||

| </div> | ||
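The comparison described above can be sketched in a few lines of Python. This is only an illustration, not part of Testsigma: the function name `classify_text_mismatch` and its return labels are hypothetical, and the two strings stand in for the text you read from the HTML source and from the rendered UI.

```python
def classify_text_mismatch(html_text: str, ui_text: str) -> str:
    """Compare the text stored in the HTML with the text visible in the UI."""
    if html_text == ui_text:
        return "match"
    # casefold() is the standard-library way to compare strings caselessly.
    if html_text.casefold() == ui_text.casefold():
        return "case-only mismatch"
    return "different text"


# The HTML holds "login" while the UI displays "LOGIN".
print(classify_text_mismatch("login", "LOGIN"))
```

A case-only mismatch is the situation this guide covers; a result of "different text" suggests the element itself may be the wrong one.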

|

|

||

| --- | ||

|

|

||

| ## **Changing Element Details** | ||

|

|

||

| - Change the locator setting in your test automation tool from **Link text** to **XPath**. | ||

| - This adjustment allows the recorder to identify the element based on its structure rather than just the text. | ||

| - If needed, manually update the captured link text to match the UI-visible text, ensuring the test case reflects what users see on the screen.  | ||

|

|

||

| [[info | NOTE:]] | ||

| | Using XPath or other reliable locators reduces the risk of capturing incorrect text, especially when differences exist between UI and HTML text. | ||
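As one possible shape for such an XPath, the sketch below builds an expression that matches a link by its text while ignoring ASCII case, using the standard XPath 1.0 functions `translate()` and `normalize-space()`. The helper name `case_insensitive_link_xpath` is hypothetical, and the sketch assumes the locator field accepts a standard XPath 1.0 expression.

```python
import string


def case_insensitive_link_xpath(visible_text: str) -> str:
    """Build an XPath 1.0 expression that matches <a> text ignoring ASCII case."""
    if "'" in visible_text:
        # Quote escaping is out of scope for this sketch.
        raise ValueError("single quotes are not handled in this sketch")
    # Collapse whitespace the same way normalize-space() does, then lowercase.
    lowered = " ".join(visible_text.split()).lower()
    return (
        "//a[translate(normalize-space(.), "
        f"'{string.ascii_uppercase}', '{string.ascii_lowercase}') = '{lowered}']"
    )


print(case_insensitive_link_xpath("LOGIN"))
```

Because both sides of the comparison are lowercased, the same expression matches whether the HTML stores the text as `login`, `Login`, or `LOGIN`.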

|

|

||

| --- | ||

|

|

||

| ## **Dealing with UI and HTML Text Mismatches** | ||

|

|

||

| - Compare the UI text with the HTML source to identify any inconsistencies that might cause the recorder to capture the wrong text. | ||

| - Modify your test case to use the visible UI text for element identification. | ||

| - Ensure the locator strategy aligns with the format users see in the UI. | ||

| - Consider using unique attributes such as `data-test-id` or other identifiers instead of relying solely on link text. This approach minimises the risk of mismatches between the UI and HTML representations. | ||
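The difference between a text-based lookup and an attribute-based lookup can be illustrated with Python's standard `xml.etree.ElementTree` module. The markup, the `data-test-id` value `login-link`, and the assumption that a stylesheet renders the text in uppercase are all hypothetical; the snippet only demonstrates the idea and does not show how Testsigma resolves locators.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup: the HTML text is lowercase, and a stylesheet (not shown)
# is assumed to render it as "LOGIN" in the UI.
PAGE = """
<div>
  <a href="/login" data-test-id="login-link">login</a>
</div>
"""

root = ET.fromstring(PAGE)

# Looking up the link by the text seen in the UI finds nothing,
# because the HTML stores the lowercase form.
by_ui_text = root.find(".//a[.='LOGIN']")

# Looking it up by a dedicated attribute does not depend on the text at all.
by_test_id = root.find(".//*[@data-test-id='login-link']")

print(by_ui_text is None)
print(by_test_id.get("href"))
```

The attribute-based lookup keeps working even if the link text is later reworded or restyled.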

|

|

||

| <style> | ||

| .example-container { | ||

| border: 1px solid #ccc; | ||

| border-radius: 4px; | ||

| padding: 0.5em; | ||

| margin: 0.5em 0; | ||

| background-color: #f9f9f9; | ||

| } | ||

| .example-title { | ||

| color: #004d00; | ||

| font-weight: bold; | ||

| display: flex; | ||

| align-items: center; | ||

| } | ||

| .example-title span { | ||

| margin-right: 5px; | ||

| } | ||

| .example-list { | ||

| list-style: none; | ||

| padding: 0; | ||

| } | ||

| .example-list li { | ||

| margin-bottom: 0.5em; | ||

| } | ||

| </style> | ||

|

|

||

| <div class="example-container"> | ||

| <div class="example-title"> | ||

| <span>ℹ️</span>Example: | ||

| </div> | ||

| <ul class="example-list"> | ||

| <li><p>If the UI shows <strong>SUBMIT</strong>, but the HTML has it as <strong>submit</strong>, adjust your test case using the visible UI text to account for this difference.</p></li> | ||

| </ul> | ||

| </div> | ||

|

|

||

| [[info | NOTE:]] | ||

| | - Prefer durable locators (such as XPath or unique attributes) to enhance test stability and reduce the need for ongoing manual intervention. | ||

| | - Manual updates to captured text, by contrast, only resolve specific issues. | ||

|

|

||

| --- | ||

|

|

||

|

|

65 changes: 65 additions & 0 deletions

src/pages/docs/FAQs/web-apps/viewing-full-testplan-execution-video.md

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,65 @@ | ||

| --- | ||

| title: "Viewing the Full Video of the Test Plan Execution" | ||

| pagetitle: "Full Test Plan Execution Video: Steps to Access and View" | ||

| metadesc: "Viewing the full video of the test plan execution is easy. Re-run your test plan with the reset session disabled to see the complete execution video." | ||

| noindex: false | ||

| order: 24.18 | ||

| page_id: "viewing-the-full-video-of-the-test-plan-execution" | ||

| search_keyword: "" | ||

| warning: false | ||

| contextual_links: | ||

| - type: section | ||

| name: "Contents" | ||

| - type: link | ||

| name: "Prerequisites" | ||

| url: "#prerequisites" | ||

| - type: link | ||

| name: "Understanding the Reset Session Feature" | ||

| url: "#understanding-the-reset-session-feature" | ||

| - type: link | ||

| name: "Disabling the Reset Session for every test case" | ||

| url: "#disabling-the-reset-session-for-every-test-case" | ||

| - type: link | ||

| name: "Viewing the Full Execution Video" | ||

| url: "#viewing-the-full-execution-video" | ||

| --- | ||

|

|

||

| --- | ||

|

|

||

| You might find that you can only view the video for individual test cases rather than for the entire test plan. This happens when the **Reset Session** feature is enabled for a test machine, because the video recording stops after each test case. This guide explains how to resolve the issue. | ||

|

|

||

| --- | ||

|

|

||

| ## **Prerequisites** | ||

|

|

||

| Before you proceed, ensure you understand the concepts of creating a [Test Plan](https://testsigma.com/docs/test-management/test-plans/overview/), [Test Suite](https://testsigma.com/docs/test-management/test-suites/overview/), and [Test Machine](https://testsigma.com/docs/test-management/test-plans/manage-test-machines/). | ||

|

|

||

| --- | ||

|

|

||

| ## **Understanding the Reset Session Feature** | ||

|

|

||

| - The **Reset Session** feature resets the environment before each test case runs, ensuring that each test starts fresh. | ||

| - However, this action causes the video recording to stop and restart with each test case, resulting in separate videos for each test instead of one complete video for the entire test plan. | ||

| - As a result, you only see individual test case videos rather than a full video of the whole test plan execution. | ||
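The effect described above can be modelled with a small Python sketch. It is a simplified mental model rather than Testsigma's implementation: the function `recorded_videos` and the test case names are hypothetical, and each inner list represents one video file.

```python
from typing import List


def recorded_videos(test_cases: List[str], reset_session: bool) -> List[List[str]]:
    """Group test cases by the video file that would contain them."""
    if not test_cases:
        return []
    if reset_session:
        # The recording stops and restarts with every test case.
        return [[name] for name in test_cases]
    # One continuous recording covers the whole run.
    return [list(test_cases)]


cases = ["login", "search", "checkout"]
print(len(recorded_videos(cases, reset_session=True)))
print(len(recorded_videos(cases, reset_session=False)))
```

With the reset enabled, the model yields one video per test case; with it disabled, a single video covers all of them.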

|

|

||

| --- | ||

|

|

||

| ## **Disabling the Reset Session for every test case** | ||

|

|

||

| 1. Navigate to **Test Plans** and create a new test plan or open an existing one. | ||

| 2. On the **Create** or **Edit Test Plan** page, go to the **Add Test Suites & Link Machine Profiles** tab. | ||

| 3. If a test machine is already linked, click the **Settings** icon under the **Test Machine** tab. Otherwise, click the **Link to Test Machine** icon to add a new machine profile. | ||

| 4. In the **Edit Test Machine or Device Profile** overlay, locate the checkbox labelled **Reset session for every test case**. | ||

| 5. Uncheck this box to disable the reset session for all test cases. | ||

| 6. Click **Create** or **Update Profile** to save your changes.  | ||

|

|

||

| --- | ||

|

|

||

| ## **Viewing the Full Execution Video** | ||

|

|

||

| 1. **Re-run** the test plan with the reset session feature turned off, and wait for the execution to complete. | ||

| 2. After the execution, go to the **Run Result** section and open the test run to view the video. | ||

| 3. Click on the **Test Machines** tab and then click **Play Video** to watch the full test plan execution video as a single file.  | ||

|

|

||

| --- | ||

|

|

58 changes: 58 additions & 0 deletions

src/pages/docs/FAQs/web-apps/visual-differences-ignored-areas.md

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,58 @@ | ||

| --- | ||

| title: "Visual Differences Not Highlighted in Ignored Areas of Test Case" | ||

| pagetitle: "Visual Differences Not Highlighted in Ignored Areas" | ||

| metadesc: "Learn how to identify and resolve issues where visual differences are not highlighted in ignored regions of test cases. Ensure accurate visual testing results." | ||

| noindex: false | ||

| order: 24.19 | ||

| page_id: "visual-differences-not-highlighted-in-ignored-areas-of-test-case" | ||

| search_keyword: "" | ||

| warning: false | ||

| contextual_links: | ||

| - type: section | ||

| name: "Contents" | ||

| - type: link | ||

| name: "Prerequisites" | ||

| url: "#prerequisites" | ||

| - type: link | ||

| name: "Identifying and Adjusting Ignored Regions" | ||

| url: "#identifying-and-adjusting-ignored-regions" | ||

| - type: link | ||

| name: "Re-running and Reviewing Visual Differences" | ||

| url: "#re-running-and-reviewing-visual-differences" | ||

| --- | ||

|

|

||

| --- | ||

|

|

||

| Visual differences in a test case are not highlighted when they fall within ignored regions. This guide helps you identify the ignored regions and adjust your test case settings to resolve the issue. | ||

|

|

||

| --- | ||

|

|

||

| ## **Prerequisites** | ||

|

|

||

| Before you proceed, ensure you know how to use [Visual Testing](https://testsigma.com/docs/visual-testing/configure-test-steps/). | ||

|

|

||

| --- | ||

|

|

||

| ## **Identifying and Adjusting Ignored Regions** | ||

|

|

||

| 1. Open your test case where the visual differences are not highlighted. | ||

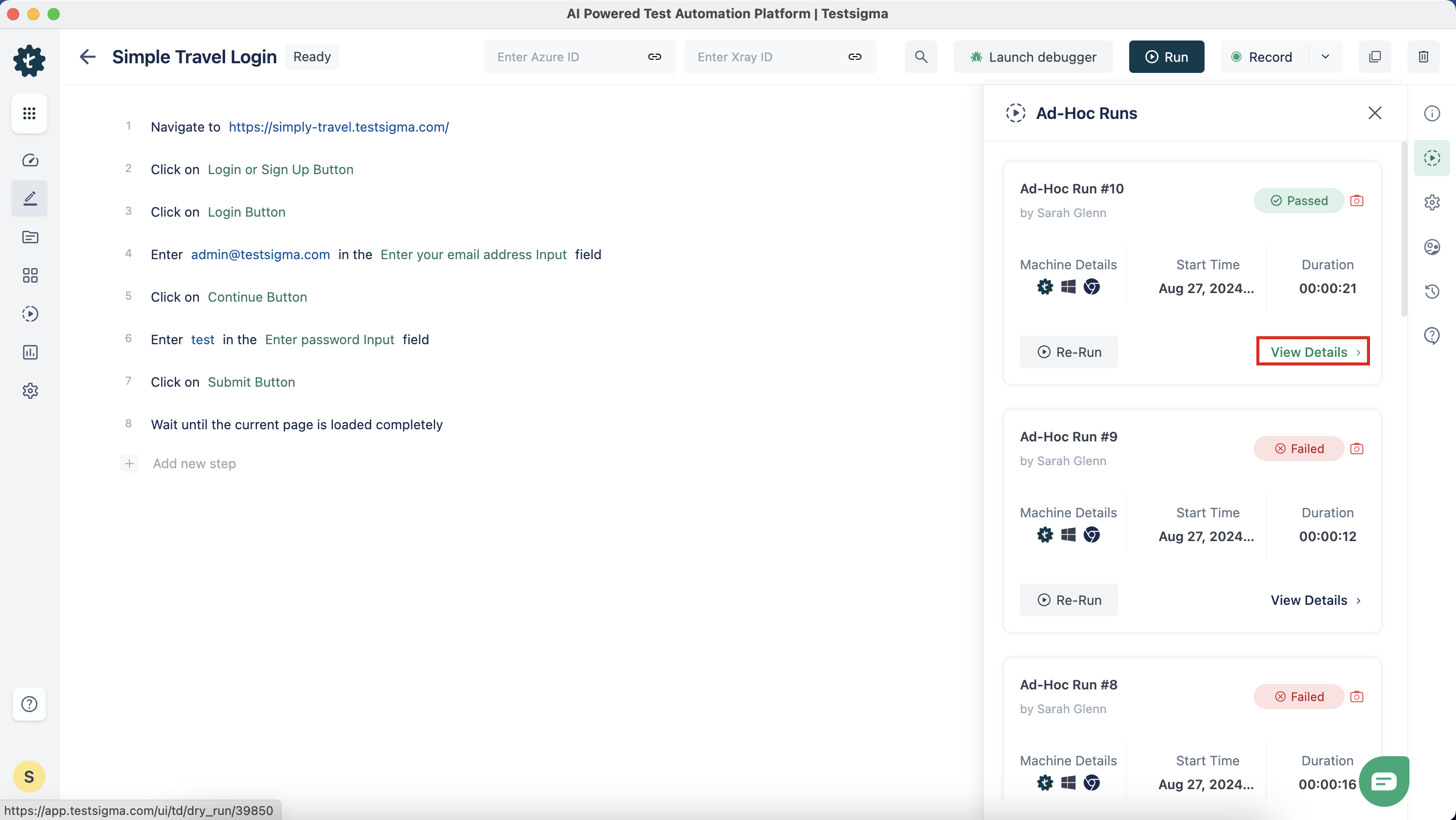

| 2. Click **Ad-hoc Runs** in the right navigation bar, then click **View Details** to open the test case result page.  | ||

| 3. Click the **Camera** icon to open the Visual Difference overlay screen. | ||

| 4. Select **Select region to ignore from visual comparison** and choose the areas you want to exclude from the visual comparison.  | ||

| 5. You can resize or move the ignored area by selecting **Resize/Move Ignored Regions** and adjusting the selected region on the screen as needed. | ||

| 6. Review the regions you marked as ignored and click **Save**. | ||

| [[info | NOTE:]] | ||

| | Check the box to ignore the same region for all steps in this test case. | ||

| 7. Check the box to **Mark as the base image**.  | ||

| 8. Compare the current image with the reference image to identify any visual differences. | ||

| 9. Confirm that these differences fall within the ignored regions. | ||
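To see why a difference inside an ignored region is never highlighted, consider the following Python sketch. It is a conceptual model under stated assumptions, not Testsigma's comparison algorithm: the `Region` type, the pixel coordinates, and the function `highlighted_differences` are all hypothetical.

```python
from typing import List, NamedTuple, Tuple


class Region(NamedTuple):
    """A rectangular ignored region, in pixels."""
    x: int
    y: int
    width: int
    height: int

    def contains(self, px: int, py: int) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def highlighted_differences(
    diff_pixels: List[Tuple[int, int]],
    ignored_regions: List[Region],
) -> List[Tuple[int, int]]:
    """Keep only the differing pixels that lie outside every ignored region."""
    return [
        (px, py)
        for px, py in diff_pixels
        if not any(region.contains(px, py) for region in ignored_regions)
    ]


diffs = [(5, 5), (50, 50)]
ignored = [Region(x=0, y=0, width=10, height=10)]
print(highlighted_differences(diffs, ignored))
```

In this model the pixel at (5, 5) is dropped because it lies inside the ignored rectangle, so only (50, 50) remains. Shrinking or moving the ignored region would bring the first difference back.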

|

|

||

| --- | ||

|

|

||

| ## **Re-running and Reviewing Visual Differences** | ||

|

|

||

| - Re-run the test case and view the execution results. | ||

| - Review the updated results to confirm that all visual differences are now highlighted correctly. | ||

|

|

||

| --- |
