-

Notifications

You must be signed in to change notification settings - Fork 4.4k

Automatically clean up soft-deleted records after X days. #14862

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

…d update sentry-cron-monitor

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Greptile Overview

Summary

This PR implements automated cleanup of soft-deleted records after a configurable retention period (default 14 days) to address uniqueness constraint conflicts when the same records are recreated. The feature adds a `trashRetentionDays` field to the workspace entity that workspace owners can configure through the Security settings page.The implementation follows a well-architected pattern with three main components:

- WorkspaceTrashCleanupCronJob: A daily cron job (00:10 UTC) that queries all active workspaces and enqueues individual cleanup jobs

- WorkspaceTrashCleanupJob: BullMQ job processors that handle per-workspace cleanup operations

- WorkspaceTrashCleanupService: Core service that discovers tables with

deletedAtcolumns and permanently deletes records older than the retention period

The system includes quota enforcement (100k records per workspace per day) to prevent performance issues and uses calendar-based retention logic where records deleted on day X are cleaned up at midnight UTC boundaries X+14 days later. The frontend integration provides a new input component in the Security settings allowing workspace administrators to modify the retention period.

The database migration adds the new field with proper constraints, and comprehensive unit tests ensure the service handles success scenarios, error cases, and quota enforcement correctly.

Important Files Changed

Changed Files

| Filename | Score | Overview |

|---|---|---|

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/services/workspace-trash-cleanup.service.ts | 4/5 | Core service implementing automated cleanup logic with quota enforcement and PostgreSQL-specific optimizations |

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/crons/workspace-trash-cleanup.cron.job.ts | 4/5 | Daily cron job that queries active workspaces and enqueues individual cleanup jobs in parallel |

| packages/twenty-server/src/engine/core-modules/workspace/workspace.entity.ts | 4/5 | Adds trashRetentionDays field to workspace entity with default 14 days and validation constraints |

| packages/twenty-front/src/pages/settings/security/SettingsSecurity.tsx | 4/5 | Implements UI for configuring trash retention days in workspace security settings |

| packages/twenty-server/src/database/typeorm/core/migrations/common/1759182953990-add-workspace-trash-retention.ts | 4/5 | Database migration adding trashRetentionDays field with default value and check constraint |

| packages/twenty-front/src/modules/users/graphql/fragments/userQueryFragment.ts | 2/5 | Adds trashRetentionDays field to GraphQL fragment but missing ViewFragment import causes compilation error |

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/workspace-trash-cleanup.module.ts | 4/5 | Module definition encapsulating all trash cleanup functionality with proper dependency injection |

| packages/twenty-front/src/modules/settings/components/SettingsOptions/SettingsOptionCardContentInput.tsx | 4/5 | New reusable component for settings input fields with icon, title, and description |

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/jobs/workspace-trash-cleanup.job.ts | 4/5 | BullMQ job processor that delegates cleanup operations to the service layer |

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/services/tests/workspace-trash-cleanup.service.spec.ts | 5/5 | Comprehensive test suite with 100% coverage of service public API including quota enforcement |

| packages/twenty-front/src/generated/graphql.ts | 5/5 | Auto-generated GraphQL types adding trashRetentionDays field to workspace schema |

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/commands/workspace-trash-cleanup.cron.command.ts | 5/5 | Command for registering the trash cleanup cron job following established patterns |

| packages/twenty-server/src/engine/core-modules/workspace/services/workspace.service.ts | 4/5 | Adds permission validation for trashRetentionDays field requiring WORKSPACE permissions |

| packages/twenty-server/src/engine/core-modules/workspace/dtos/update-workspace-input.ts | 5/5 | GraphQL input DTO with proper validation decorators for trashRetentionDays field |

| packages/twenty-server/src/engine/core-modules/twenty-config/config-variables.ts | 5/5 | Configuration variable for maximum records per workspace deletion quota (100k default) |

| packages/twenty-server/src/database/commands/cron-register-all.command.ts | 5/5 | Registers new trash cleanup cron command with existing cron infrastructure |

| packages/twenty-server/src/engine/workspace-manager/workspace-manager.module.ts | 5/5 | Imports WorkspaceTrashCleanupModule into workspace management system |

| packages/twenty-server/src/database/commands/database-command.module.ts | 5/5 | Adds WorkspaceTrashCleanupModule to database command infrastructure |

| packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/constants/workspace-trash-cleanup.constants.ts | 5/5 | Simple constant definition for daily cron pattern at 00:10 UTC |

| packages/twenty-front/src/modules/settings/components/SettingsOptions/stories/SettingsOptionCardContentInput.stories.tsx | 4/5 | Storybook stories demonstrating the new settings input component states |

| packages/twenty-front/src/modules/auth/states/currentWorkspaceState.ts | 5/5 | Adds trashRetentionDays to frontend workspace state type definition |

| packages/twenty-front/src/testing/mock-data/users.ts | 5/5 | Updates test mock data to include trashRetentionDays field for consistency |

| packages/twenty-front/src/modules/object-metadata/hooks/tests/useColumnDefinitionsFromFieldMetadata.test.ts | 5/5 | Updates test workspace mock to include new trashRetentionDays field |

| packages/twenty-front/src/modules/apollo/services/tests/apollo.factory.test.ts | 5/5 | Updates test mock workspace object with trashRetentionDays field |

Confidence score: 4/5

- This PR implements a well-architected automated trash cleanup system with proper separation of concerns, quota enforcement, and comprehensive testing

- Score reflects some complexity in the calendar-based retention logic and PostgreSQL-specific optimizations that could benefit from additional edge case testing

- Pay close attention to

packages/twenty-front/src/modules/users/graphql/fragments/userQueryFragment.tswhich has a missing ViewFragment import that will cause compilation errors

Sequence Diagram

sequenceDiagram

participant User

participant SettingsUI as "Settings UI"

participant WorkspaceService as "Workspace Service"

participant CronJob as "Workspace Trash Cleanup Cron"

participant Queue as "Message Queue"

participant TrashCleanupJob as "Trash Cleanup Job"

participant TrashCleanupService as "Trash Cleanup Service"

participant Database as "Database"

User->>SettingsUI: "Update trash retention days"

SettingsUI->>WorkspaceService: "updateWorkspace(trashRetentionDays)"

WorkspaceService->>Database: "Save workspace settings"

WorkspaceService-->>SettingsUI: "Updated workspace"

SettingsUI-->>User: "Settings saved"

Note over CronJob: "Daily at 00:10 UTC"

CronJob->>Database: "Get active workspaces"

Database-->>CronJob: "List of workspaces with retention settings"

loop "For each workspace"

CronJob->>Queue: "Enqueue WorkspaceTrashCleanupJob"

end

Queue->>TrashCleanupJob: "Process cleanup job"

TrashCleanupJob->>TrashCleanupService: "cleanupWorkspaceTrash(workspaceId, schemaName, retentionDays)"

TrashCleanupService->>Database: "Discover tables with deletedAt column"

Database-->>TrashCleanupService: "List of table names"

loop "For each table (up to 100k records limit)"

TrashCleanupService->>Database: "DELETE records older than retention period"

Database-->>TrashCleanupService: "Deleted count"

end

TrashCleanupService-->>TrashCleanupJob: "Success result with total deleted count"

TrashCleanupJob-->>Queue: "Job completed"

24 files reviewed, 4 comments

| isTwoFactorAuthenticationEnforced | ||

| trashRetentionDays | ||

| views { | ||

| ...ViewFragment |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

syntax: Missing import for ViewFragment. Add import { VIEW_FRAGMENT } from '@/views/graphql/fragments/viewFragment'; and include ${VIEW_FRAGMENT} at the bottom with other fragments.

| ...ViewFragment | |

| import { | |

| AVAILABLE_WORKSPACE_FOR_AUTH_FRAGMENT, | |

| AVAILABLE_WORKSPACES_FOR_AUTH_FRAGMENT, | |

| } from '@/auth/graphql/fragments/authFragments'; | |

| import { OBJECT_PERMISSION_FRAGMENT } from '@/settings/roles/graphql/fragments/objectPermissionFragment'; | |

| import { ROLE_FRAGMENT } from '@/settings/roles/graphql/fragments/roleFragment'; | |

| import { BILLING_SUBSCRIPTION_FRAGMENT } from '@/users/graphql/fragments/billingSubscriptionsFragment'; | |

| import { CURRENT_BILLING_SUBSCRIPTION_FRAGMENT } from '@/users/graphql/fragments/currentBillingSubscriptionFragement'; | |

| import { WORKSPACE_URLS_FRAGMENT } from '@/users/graphql/fragments/workspaceUrlsFragment'; | |

| import { VIEW_FRAGMENT } from '@/views/graphql/fragments/viewFragment'; | |

| import { DELETED_WORKSPACE_MEMBER_QUERY_FRAGMENT } from '@/workspace-member/graphql/fragments/deletedWorkspaceMemberQueryFragment'; | |

| import { PARTIAL_WORKSPACE_MEMBER_QUERY_FRAGMENT } from '@/workspace-member/graphql/fragments/partialWorkspaceMemberQueryFragment'; | |

| import { WORKSPACE_MEMBER_QUERY_FRAGMENT } from '@/workspace-member/graphql/fragments/workspaceMemberQueryFragment'; | |

| import { gql } from '@apollo/client'; |

Prompt To Fix With AI

This is a comment left during a code review.

Path: packages/twenty-front/src/modules/users/graphql/fragments/userQueryFragment.ts

Line: 84:84

Comment:

**syntax:** Missing import for ViewFragment. Add `import { VIEW_FRAGMENT } from '@/views/graphql/fragments/viewFragment';` and include `${VIEW_FRAGMENT}` at the bottom with other fragments.

```suggestion

import {

AVAILABLE_WORKSPACE_FOR_AUTH_FRAGMENT,

AVAILABLE_WORKSPACES_FOR_AUTH_FRAGMENT,

} from '@/auth/graphql/fragments/authFragments';

import { OBJECT_PERMISSION_FRAGMENT } from '@/settings/roles/graphql/fragments/objectPermissionFragment';

import { ROLE_FRAGMENT } from '@/settings/roles/graphql/fragments/roleFragment';

import { BILLING_SUBSCRIPTION_FRAGMENT } from '@/users/graphql/fragments/billingSubscriptionsFragment';

import { CURRENT_BILLING_SUBSCRIPTION_FRAGMENT } from '@/users/graphql/fragments/currentBillingSubscriptionFragement';

import { WORKSPACE_URLS_FRAGMENT } from '@/users/graphql/fragments/workspaceUrlsFragment';

import { VIEW_FRAGMENT } from '@/views/graphql/fragments/viewFragment';

import { DELETED_WORKSPACE_MEMBER_QUERY_FRAGMENT } from '@/workspace-member/graphql/fragments/deletedWorkspaceMemberQueryFragment';

import { PARTIAL_WORKSPACE_MEMBER_QUERY_FRAGMENT } from '@/workspace-member/graphql/fragments/partialWorkspaceMemberQueryFragment';

import { WORKSPACE_MEMBER_QUERY_FRAGMENT } from '@/workspace-member/graphql/fragments/workspaceMemberQueryFragment';

import { gql } from '@apollo/client';

```

How can I resolve this? If you propose a fix, please make it concise.There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Not sure what this means and if it has anything to do with my changes. If it does, please let me know what this really means as in why is it needed..

packages/twenty-front/src/pages/settings/security/SettingsSecurity.tsx

Outdated

Show resolved

Hide resolved

| const cutoffDate = new Date(); | ||

|

|

||

| cutoffDate.setUTCHours(0, 0, 0, 0); | ||

| cutoffDate.setDate(cutoffDate.getDate() - trashRetentionDays + 1); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

logic: The + 1 in the cutoff calculation means records are kept for trashRetentionDays - 1 days, not the full retention period

Prompt To Fix With AI

This is a comment left during a code review.

Path: packages/twenty-server/src/engine/workspace-manager/workspace-trash-cleanup/services/workspace-trash-cleanup.service.ts

Line: 146:146

Comment:

**logic:** The `+ 1` in the cutoff calculation means records are kept for trashRetentionDays - 1 days, not the full retention period

How can I resolve this? If you propose a fix, please make it concise.There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That's intentional. If the CRON runs on October 15, 2025, we want to delete all records with deletedAt < October 2, 2025 so that records deleted on October 1, 2025 are hard-deleted.

...erver/src/engine/workspace-manager/workspace-trash-cleanup/workspace-trash-cleanup.module.ts

Outdated

Show resolved

Hide resolved

|

🚀 Preview Environment Ready! Your preview environment is available at: http://bore.pub:10019 This environment will automatically shut down when the PR is closed or after 5 hours. |

|

I feel my config is properly set and this has to do with the CI - run already passed for 5fff113. |

| const { workspaceId, schemaName, trashRetentionDays } = input; | ||

|

|

||

| try { | ||

| const tableNames = await this.discoverTablesWithSoftDelete(schemaName); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think we gain anything by first FETCHING before DELETING? My intuition is that it's just more costly to do it this way but maybe I'm missing something?

| private async discoverTablesWithSoftDelete( | ||

| schemaName: string, | ||

| ): Promise<string[]> { | ||

| const rawResults = await this.objectMetadataRepository |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe it makes sens to use the raw query builder here but if you can use high-level methods that's always better. We might not keep this "find tables" piece anyway

| cutoffDate: Date, | ||

| limit: number, | ||

| ): Promise<number> { | ||

| const result = await this.dataSource.query( |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Typically an example where you're going much too low in abstraction. You're loosing a lot of value by doing this.

For example in WorkspaceDeleteQueryBuilder which is called when you call delete(), you'll see there's an eventEmitterService. By going "raw sql" you lose this so people won't get destroy webhooks / there will be no destroy events (maybe there's some cascade deleting happening or other parts of the app that want to be aware you destroyed records)

...c/engine/workspace-manager/workspace-trash-cleanup/crons/workspace-trash-cleanup.cron.job.ts

Outdated

Show resolved

Hide resolved

...c/engine/workspace-manager/workspace-trash-cleanup/crons/workspace-trash-cleanup.cron.job.ts

Outdated

Show resolved

Hide resolved

...s/settings/components/SettingsOptions/__stories__/SettingsOptionCardContentInput.stories.tsx

Outdated

Show resolved

Hide resolved

...nty-front/src/modules/settings/components/SettingsOptions/SettingsOptionCardContentInput.tsx

Outdated

Show resolved

Hide resolved

...nty-front/src/modules/settings/components/SettingsOptions/SettingsOptionCardContentInput.tsx

Outdated

Show resolved

Hide resolved

...c/engine/workspace-manager/workspace-trash-cleanup/crons/workspace-trash-cleanup.cron.job.ts

Outdated

Show resolved

Hide resolved

| import { Process } from 'src/engine/core-modules/message-queue/decorators/process.decorator'; | ||

| import { Processor } from 'src/engine/core-modules/message-queue/decorators/processor.decorator'; | ||

| import { MessageQueue } from 'src/engine/core-modules/message-queue/message-queue.constants'; | ||

| import { WorkspaceTrashCleanupService } from 'src/engine/workspace-manager/workspace-trash-cleanup/services/workspace-trash-cleanup.service'; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think I would not consider this a workspace-manager service, simply call it trash-cleanup and put it one level above? Workspace Manager tend to be a complex area so maybe best not too overload it with simpler stuff like this for now. But honestly it wasn't a bad choice, you could argue both

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I added it to workspace-manager since it was performing admin work on the workspace.

Seemed logical, but it's also fair to move it up since workspace-manager handles the complex bit.

|

I think given the number of mistakes I made in this issue, I need to re-think a few things from SQL queries to re-usability to types. Will push an update soon and try to trace things better. |

…ngsOptionCardContentCounter with a showButtons prop for reusability

…nsure it follows patterns of other cron.job.ts files

|

Resolved a few comments by closely following how other cron.job.ts and job.ts files are set. Also replaced the SettingsOptionCardContentInput with SettingsOptionCardContentCounter and updated story. Still working on the service, will leave a comment once done. |

|

Ready for another review. Re-thought the solution to go through TwentyORM. It's using cached version of database table names to reduce the round-trip, but cleaning up in configurable batches (1000 as default) to avoid database locks with massive queries.

Find is an extra query here. Say that we have 50 tables and only the last one has 150k deletedAt records < cutoff date. We go through 49 queries for find and short-circuit when we find nothing to delete, then another 200 to delete 100k records in chunks of 1000 (until we increase the chunk size). So, a total of 249 queries here per workspace in the worst-case. Increase objects to 100 and the total goes to 299 if we have over 100k records to clean-up in the final table. Scaling this seems reasonable, but open to comments. One more case that I have not yet covered in this PR: when trashRetention is 0, softDelete should not come into the picture. As per current implementation, even at 0, the next CRON clears the record out. I think we can cover this in a separate PR, unless you want otherwise. For webhooks, even though I started going through the TwentyORM layer, I was not able to receive anything at https://webhook.site when the CRON ran. I tried selecting Will appreciate some help/clarity on the webhook thing - do we have a destroy event for hard-delete and if we do, should it not have run when I set to "all"? |

| @IsOptional() | ||

| isTwoFactorAuthenticationEnforced?: boolean; | ||

|

|

||

| @Field({ nullable: true }) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Not nullable in the UI or in the DB I think? Are you sure it should be nullable here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I set it nullable for the UI to be able to call the mutation without a value for trashRetentionDays, but I will revisit the logic to confirm.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I looked into this and I found that we have set nullable for a bunch of other fields in the input DTO.

It is such even though we have default values for those fields.

Sending null intentionally from the mutation throws a database error for all of them (unless null is allowed), but if we omit those fields in the mutation, database does not cause any issues since we're not updating the value for those fields.

We can handle it gracefully by removing fields containing "null" at runtime, but it's going to be a larger refactor than just trashRetentionLogic - instead of adding if-statements inside the service method, the ideal solution would be to use some utility function and then test other DTOs as well.

That said, trashRetentionDays follows the existing pattern and we are not inserting null into the database, we're just allowing the mutation to "not send" trashRetentionDays when the mutation is called.

If I remove { nullable: true } from the Field decorator, I get the following when updating the displayName.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Oh okay, thanks for these details!

packages/twenty-server/src/engine/core-modules/twenty-config/config-variables.ts

Outdated

Show resolved

Hide resolved

| }) | ||

| @CastToPositiveNumber() | ||

| @IsOptional() | ||

| TRASH_CLEANUP_BATCH_SIZE = 1000; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Same comment as above

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Done.

| }); | ||

| } | ||

| } | ||

|

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In Harshit's open PR we set priorities for queues. I think this will help a lot for this kind of use-case to avoid clogging. Typically here we want to set a very low priority

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

He has defined a file called message-queue-priority.constants.ts to hold priority values. I believe his PR would need to be merged for me to assign a priority in the same file.

...r/src/database/typeorm/core/migrations/common/1759182953990-add-workspace-trash-retention.ts

Outdated

Show resolved

Hide resolved

|

You have run out of free Bugbot PR reviews for this billing cycle. This will reset on November 15. To receive reviews on all of your PRs, visit the Cursor dashboard to activate Pro and start your 14-day free trial. |

| // Daily at 00:10 UTC | ||

| export const TRASH_CLEANUP_CRON_PATTERN = '10 0 * * *'; | ||

|

|

||

| export const TRASH_CLEANUP_MAX_RECORDS_PER_WORKSPACE = 1_000_000; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We try to keep one export per file. I will merge this PR to move on but could you please include this fix in any of your future PRs?

|

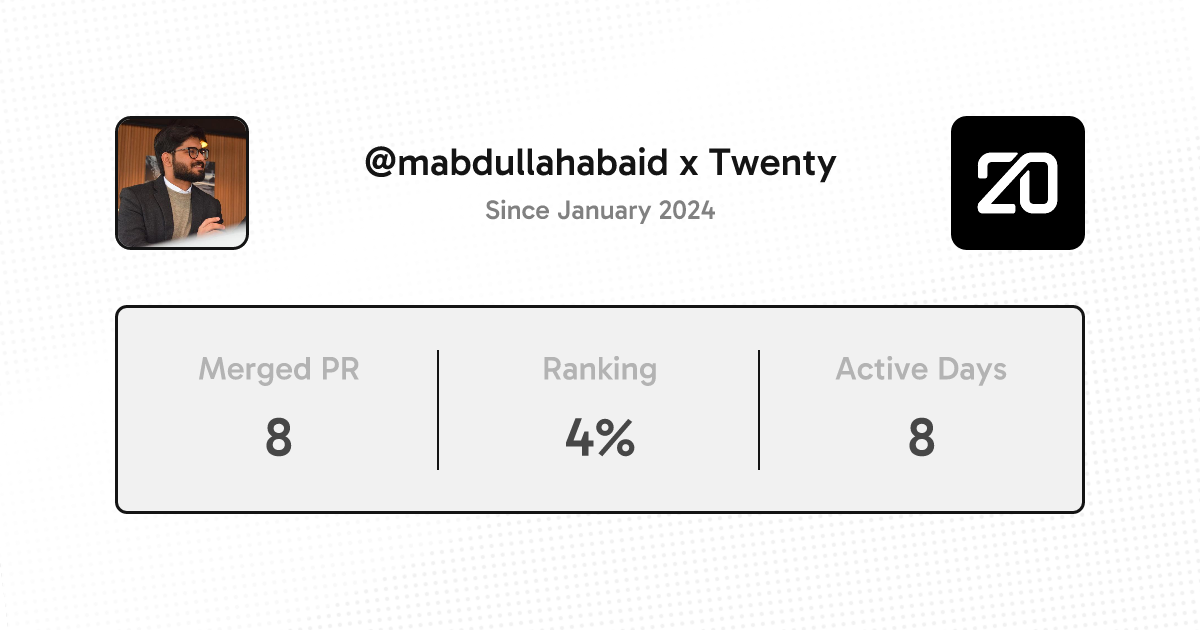

Thanks @mabdullahabaid for your contribution! |

…4862) Closes twentyhq#14726 ### Added - `trashRetentionDays` field to workspace entity (default: 14 days) - Automated trash cleanup using BullMQ jobs - Daily cron (00:10 UTC) that enqueues cleanup jobs for all active workspaces - Per-workspace limit: 100k records deleted per day - Calendar-based retention: records deleted on day X are cleaned up X+14 days later (at midnight UTC boundaries) ### Architecture - **Cron (WorkspaceTrashCleanupCronJob):** Runs daily, enqueues jobs in parallel for all workspaces - **Job (WorkspaceTrashCleanupJob):** Processes individual workspace cleanup - **Service (WorkspaceTrashCleanupService):** Discovers tables with `deletedAt`, deletes old records with quota enforcement - **Command:** `npx nx run twenty-server:command cron:workspace:cleanup-trash` to register the cron ### Testing - Unit tests for service with 100% coverage of public API - Tested quota enforcement, error handling, and edge cases --------- Co-authored-by: Félix Malfait <[email protected]>

Closes #14726

Added

trashRetentionDaysfield to workspace entity (default: 14 days)Architecture

deletedAt, deletes old records with quota enforcementnpx nx run twenty-server:command cron:workspace:cleanup-trashto register the cronTesting