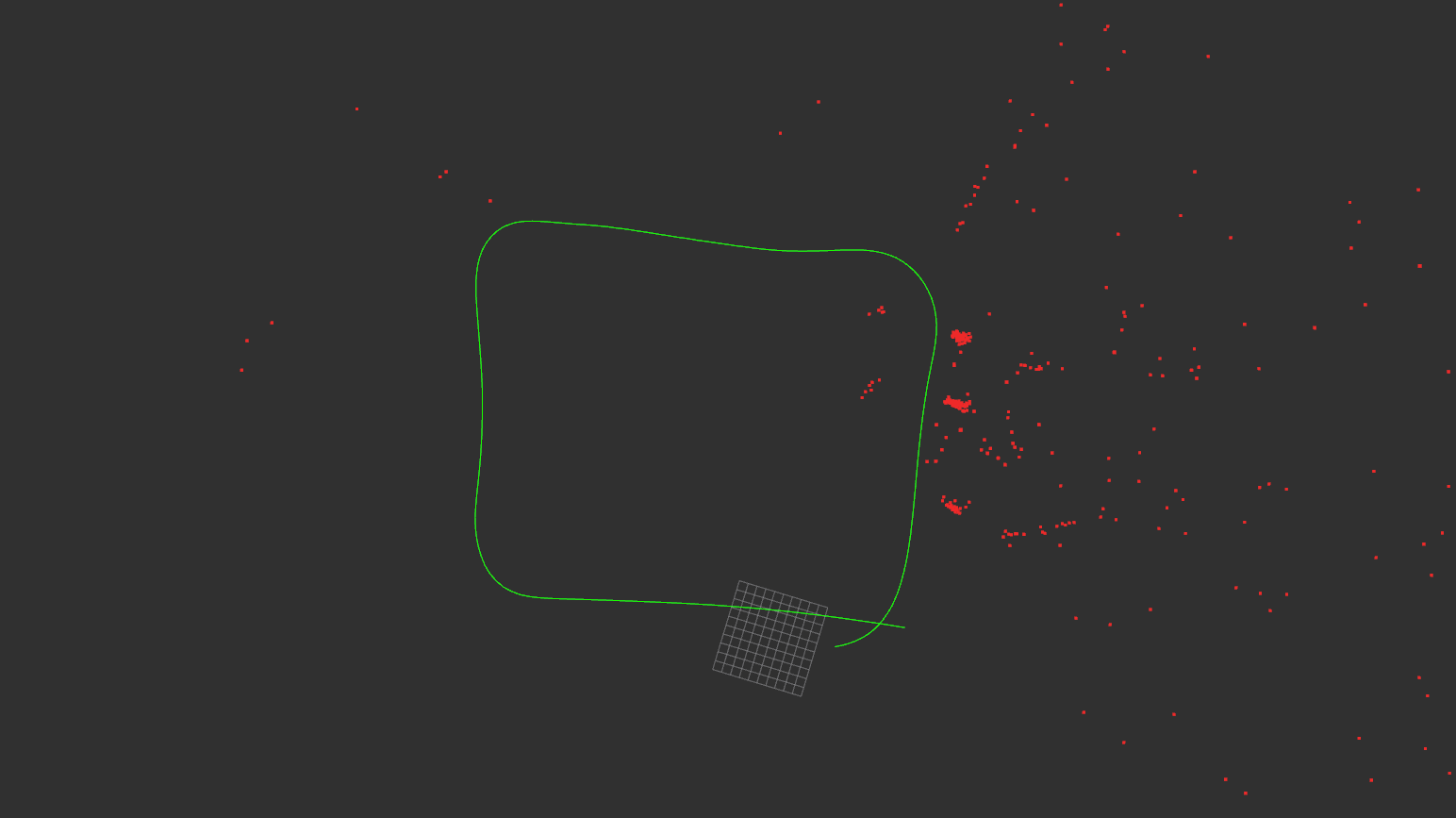

This project is designed to help students learn about the front-end and back-end of a Simultaneous Localization and Mapping (SLAM) system. The objective is to use the feature_tracker package from VINS-Mono as the front-end and GTSAM as the back-end to implement a visual-inertial odometry (VIO) algorithm. The algorithm runs on real data collected by a vehicle, taken from the MVSEC dataset. The code is adapted from the original CPI code.

Specifically, you will learn how to use a front-end package together with GTSAM's IMUFactor, SmartProjectionPoseFactor, and ISAM2 optimizer to build a simple, straightforward VIO system.
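Unlike a standard projection factor, a SmartProjectionPoseFactor does not keep the landmark as a variable in the graph. Instead, it triangulates the point from the poses that observed it and eliminates it, as described in the smart-factor paper in the reading list. To build intuition for the triangulation step, here is a plain-C++ sketch of two-view midpoint triangulation. This is a simple method swapped in for illustration and is not necessarily what GTSAM does internally. The sketch assumes camera centers and bearing directions are already expressed in the world frame. `triangulateMidpoint` and its helpers are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;

Vec3 add(const Vec3& a, const Vec3& b) { return {a[0] + b[0], a[1] + b[1], a[2] + b[2]}; }
Vec3 sub(const Vec3& a, const Vec3& b) { return {a[0] - b[0], a[1] - b[1], a[2] - b[2]}; }
Vec3 scale(const Vec3& a, double s) { return {a[0] * s, a[1] * s, a[2] * s}; }
double dot(const Vec3& a, const Vec3& b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// Midpoint triangulation (hypothetical helper): rays are c1 + s*d1 and c2 + t*d2,
// with camera centers c1, c2 and bearing directions d1, d2 in the world frame.
// Writes the midpoint of the shortest segment between the rays to `out`.
// Returns false when the rays are (numerically) parallel.
bool triangulateMidpoint(const Vec3& c1, const Vec3& d1,
                         const Vec3& c2, const Vec3& d2, Vec3& out) {
  const Vec3 w0 = sub(c1, c2);
  const double a = dot(d1, d1), b = dot(d1, d2), c = dot(d2, d2);
  assert(a > 0.0 && c > 0.0);  // bearing directions must be non-zero
  const double d = dot(d1, w0), e = dot(d2, w0);
  const double denom = a * c - b * b;
  if (std::fabs(denom) < 1e-12) return false;  // parallel rays: no unique point
  // Ray parameters that minimize |(c1 + s*d1) - (c2 + t*d2)|^2.
  const double s = (b * e - c * d) / denom;
  const double t = (a * e - b * d) / denom;
  const Vec3 p1 = add(c1, scale(d1, s));
  const Vec3 p2 = add(c2, scale(d2, t));
  out = scale(add(p1, p2), 0.5);
  return true;
}
```

The midpoint method returns the point halfway along the shortest segment between the two rays, so it still gives an answer when noisy rays do not intersect exactly. Rays that are exactly or almost exactly parallel are rejected. In practice, low-parallax tracks are also poorly constrained even when they pass this check.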

Install ROS:

Ubuntu 18.04: http://wiki.ros.org/melodic/Installation/Ubuntu.

Ubuntu 16.04: http://wiki.ros.org/kinetic/Installation/Ubuntu.

Install GTSAM as a third-party library: https://github.com/borglab/gtsam.

Clone this ROS workspace:

$ git clone https://github.com/ganlumomo/VisualInertialOdometry.git

Try out the feature_tracker package to see how it works and what it outputs.
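This sketch assumes the front-end gives each tracked feature a persistent integer ID along with its pixel coordinates, as VINS-Mono's feature_tracker does. Under that assumption, the back-end's main bookkeeping job for smart factors is to group observations by ID across frames. A new ID starts a new smart factor, while a known ID only adds a measurement to its existing factor. The plain-C++ sketch below shows only that bookkeeping. `Feature`, `Observation`, and `TrackBook` are hypothetical names and are not part of this repository or of GTSAM.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <vector>

// Hypothetical front-end output for one feature in one frame.
struct Feature {
  int id;       // persistent track ID assigned by the front-end
  double u, v;  // pixel coordinates
};

// Hypothetical record of one observation within a track.
struct Observation {
  int frame;    // index of the state (frame) that saw the feature
  double u, v;
};

class TrackBook {
 public:
  // Adds all features of one frame. Returns how many IDs were seen for the
  // first time; in a GTSAM back-end each of these would start a new smart
  // factor, while known IDs would only receive one more measurement.
  int addFrame(int frame, const std::vector<Feature>& features) {
    assert(frame >= 0);
    int created = 0;
    for (const Feature& f : features) {
      std::vector<Observation>& obs = tracks_[f.id];  // inserts an empty track if new
      if (obs.empty()) ++created;
      obs.push_back({frame, f.u, f.v});
    }
    return created;
  }

  // Number of frames in which track `id` was observed (0 if never seen).
  std::size_t length(int id) const {
    auto it = tracks_.find(id);
    return it == tracks_.end() ? 0 : it->second.size();
  }

 private:
  std::map<int, std::vector<Observation>> tracks_;
};
```

In a real back-end, the map value would more likely be the smart factor itself (for example, a shared pointer to a SmartProjectionPoseFactor) rather than a raw list of observations. The ID lookup works the same way.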

Refer to the GTSAM examples ImuFactorsExample.cpp and ISAM2Example_SmartFactor.cpp to implement the following functions declared in GraphSolver.h:

// For IMU preintegration

set_imu_preintegration

create_imu_factor

get_predicted_state

reset_imu_integration

// For smart vision factor

process_feat_smart

You are free to change the function signatures, but make sure to update every place that calls them accordingly.
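The IMU-preintegration functions all revolve around one accumulator. The following GTSAM-free C++ sketch shows what that accumulator does. It integrates the relative rotation, velocity, and position between two states, predicts the next state, and then resets. The sketch assumes bias-corrected measurements, accelerometer readings given as specific force, and a world-frame gravity vector such as (0, 0, -9.81). It follows the noise-free discrete scheme from the IMU preintegration paper in the reading list and omits bias Jacobians and covariance propagation, which GTSAM's PreintegratedImuMeasurements handles for you. `Preintegrated`, `State`, `predict`, and `expSO3` are hypothetical names. In the assignment, set_imu_preintegration, get_predicted_state, and reset_imu_integration would wrap the corresponding GTSAM calls instead of code like this.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

Mat3 identity() { return {{{1.0, 0.0, 0.0}, {0.0, 1.0, 0.0}, {0.0, 0.0, 1.0}}}; }

Mat3 mul(const Mat3& A, const Mat3& B) {
  Mat3 C{};
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 3; ++j)
      for (int k = 0; k < 3; ++k) C[i][j] += A[i][k] * B[k][j];
  return C;
}

Vec3 mul(const Mat3& A, const Vec3& x) {
  Vec3 y{};
  for (int i = 0; i < 3; ++i)
    for (int k = 0; k < 3; ++k) y[i] += A[i][k] * x[k];
  return y;
}

// SO(3) exponential map of rotation vector w (Rodrigues' formula).
Mat3 expSO3(const Vec3& w) {
  const double theta = std::sqrt(w[0] * w[0] + w[1] * w[1] + w[2] * w[2]);
  const Mat3 K = {{{0.0, -w[2], w[1]}, {w[2], 0.0, -w[0]}, {-w[1], w[0], 0.0}}};
  const Mat3 K2 = mul(K, K);
  // For tiny angles, fall back to the Taylor-series limits of the coefficients.
  const double a = theta < 1e-8 ? 1.0 : std::sin(theta) / theta;
  const double b = theta < 1e-8 ? 0.5 : (1.0 - std::cos(theta)) / (theta * theta);
  Mat3 R = identity();
  for (int i = 0; i < 3; ++i)
    for (int j = 0; j < 3; ++j) R[i][j] += a * K[i][j] + b * K2[i][j];
  return R;
}

// Hypothetical preintegrated measurement between state i and state j.
struct Preintegrated {
  Mat3 dR = identity();  // relative rotation
  Vec3 dv{}, dp{};       // relative velocity / position, in frame of state i
  double dt = 0.0;       // total integration time

  // One bias-corrected sample: specific force `acc`, angular rate `gyro`.
  void integrate(const Vec3& acc, const Vec3& gyro, double step) {
    assert(step > 0.0);
    const Vec3 a = mul(dR, acc);  // rotate with the rotation *before* this step
    for (int k = 0; k < 3; ++k) {
      dp[k] += dv[k] * step + 0.5 * a[k] * step * step;  // uses old dv
      dv[k] += a[k] * step;
    }
    dR = mul(dR, expSO3({gyro[0] * step, gyro[1] * step, gyro[2] * step}));
    dt += step;
  }

  void reset() { *this = Preintegrated{}; }
};

struct State {
  Mat3 R;     // world-from-body rotation
  Vec3 p, v;  // world-frame position and velocity
};

// Predicts state j from state i using the preintegrated quantities and gravity g.
State predict(const State& s, const Preintegrated& pim, const Vec3& g) {
  State out;
  out.R = mul(s.R, pim.dR);
  const Vec3 Rdv = mul(s.R, pim.dv), Rdp = mul(s.R, pim.dp);
  for (int k = 0; k < 3; ++k) {
    out.v[k] = s.v[k] + g[k] * pim.dt + Rdv[k];
    out.p[k] = s.p[k] + s.v[k] * pim.dt + 0.5 * g[k] * pim.dt * pim.dt + Rdp[k];
  }
  return out;
}
```

Because predict adds the g·Δt terms back in, a stationary, level IMU that reads (0, 0, 9.81) should give zero predicted velocity and displacement. This is a useful sanity check for a GTSAM-based implementation as well.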

Build the code and launch the ROS node with the rosbag data:

$ cd VisualInertialOdometry

$ catkin_make

$ source devel/setup.bash

$ roslaunch launch/mvsec_test.launch

$ rosbag play mvsec_test.bag

Test data can be downloaded here: mvsec_test.bag.

Write a one-page project summary describing your implementation and results.

VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator (PDF)

IMU Preintegration on Manifold for Efficient Visual-Inertial Maximum-a-Posteriori Estimation (PDF)

Eliminating conditionally independent sets in factor graphs: A unifying perspective based on smart factors (PDF)

The Multivehicle Stereo Event Camera Dataset: An Event Camera Dataset for 3D Perception (PDF)