Hardware_Design

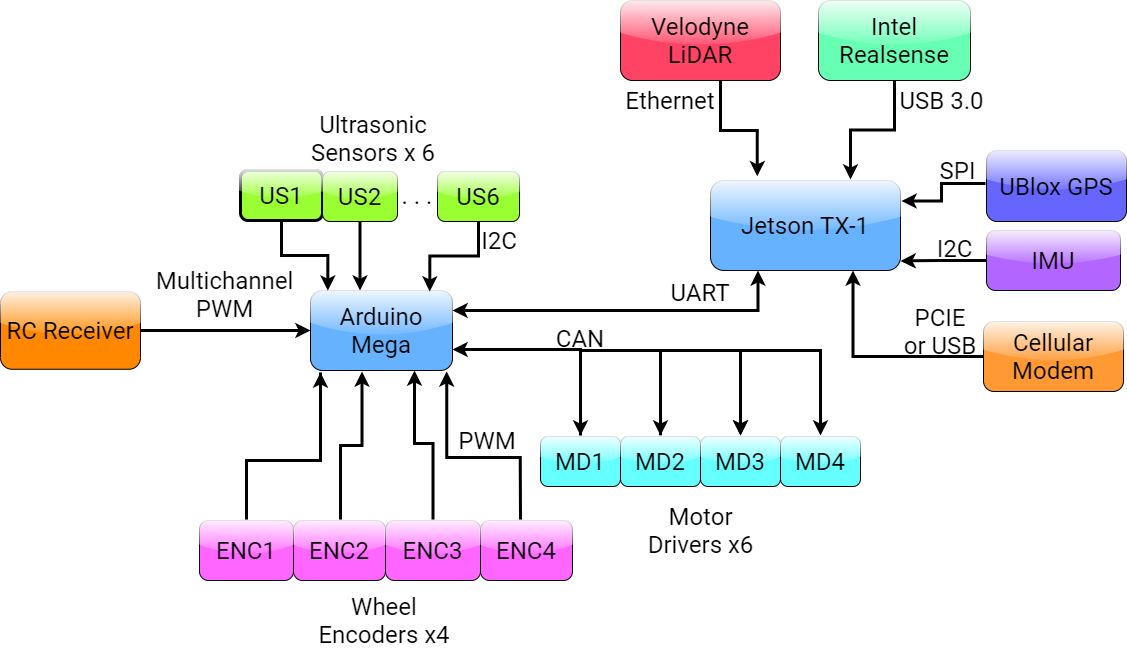

The following figure provides an overview of the entire hardware architecture and shows the category that each piece of hardware belongs to. The decision to use the sensors in the dotted box in this figure and the specific hardware selected are described in the following sections. The next figure shows the communication protocol for each wired connection in the hardware architecture.

Proposed hardware architecture

Communication protocol for wired communication

For all decided components, descriptions of the sensors and the reasons for the decisions are provided in the following sections. Some components have not yet been decided upon. These include a mechanical brake, the autonomy hardware mounting system, wire harnesses, and an encoder mounting system, which all belong to the category of general mechanical components. None of the undecided components are critical to the early system design, as they mostly pertain to field testing, which will occur later in the project. Please refer to the Future Work page for more information on the undecided components.

Based on all the decided hardware components, a power consumption budget is provided in the following table. Each battery on the robot supplies 540 watt-hours (Wh) of energy. According to the power budget, four batteries will be needed to satisfy the operation duration requirement of 1.5 hours (NF1.2). Currently only two batteries are installed on the robot, so it is recommended that two more be added in the future. For a detailed power budget description, visit the Hardware section of our Design Document.
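The battery count follows from a simple energy balance. The sketch below is only illustrative: it reads the 540 figure as a per-battery energy capacity in watt-hours, and the average load `EXAMPLE_LOAD_W` is a hypothetical placeholder, not a value taken from the power budget.

```python
import math

BATTERY_CAPACITY_WH = 540.0  # per-battery figure from the text, read as watt-hours
REQUIRED_RUNTIME_H = 1.5     # operation duration requirement (NF1.2)


def batteries_needed(average_load_w, runtime_h=REQUIRED_RUNTIME_H,
                     capacity_wh=BATTERY_CAPACITY_WH):
    """Return the smallest number of batteries that covers the energy demand."""
    energy_wh = average_load_w * runtime_h
    return math.ceil(energy_wh / capacity_wh)


# Hypothetical average load; the real figure comes from the power budget.
EXAMPLE_LOAD_W = 1300.0
print(batteries_needed(EXAMPLE_LOAD_W))
```

Any average load between roughly 1080 W and 1440 W leads to the same four-battery result under these assumptions.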

To provide accurate position information, a combination of a GPS (Global Positioning System) module, wheel encoders, an IMU (Inertial Measurement Unit), LiDAR (Light Detection and Ranging), and RGB-D (Red Green Blue-Depth) camera data will be used. Fusing this data increases the accuracy of the robot’s position estimate. Combined with a SLAM (Simultaneous Localization and Mapping) algorithm, these sensors will ensure that we can meet the requirement of sub-meter position accuracy. In addition, using all of these sensors provides a better map output and better obstacle detection for motion planning than using just one sensor. The hardware selection of these sensors is described in the following sections.

Sensor Suite Justification

LiDAR

A LiDAR sensor allows the robot to map its surroundings with reliable range data in varying ambient light conditions. In combination with a GPS and an IMU, a high-resolution LiDAR scanner allows the autonomy computer to perform SLAM and obstacle detection with less processing power than systems with a low-resolution LiDAR, which require more computation to achieve similar range detection accuracy. It also provides an alternative to relying solely on cameras for object detection, as deterministic methods that do not involve any machine learning can be employed to detect obstacles.

GPS and IMU

Initially, off-the-shelf integrated GPS/GNSS (Global Navigation Satellite System) and IMU options were researched to provide accurate robot localization in an effort to meet our time constraints. Devices such as the VectorNav VN-200, Novatel SPAN CPT7, and Duro Inertial Ruggedized Receiver were compared, all of which cost between 3000 and 8000 USD.

One of the features that these systems often implement is dynamic alignment. This occurs when sufficient motion allows the system to estimate heading based on the correlation of IMU acceleration measurement and change in velocity measured by the GPS receiver (“VN-300 Dual Antenna Gnss/Ins,” n.d.).

However, further research showed that slow moving platforms, such as the PropBot under the set ODDs (Operational Design Domain), do not experience sufficient acceleration to perform dynamic alignment. Due to this, dynamic alignment is not a required or useful feature for the PropBot system.

An alternative to this is using a magnetometer for heading measurement, but this is only feasible when the magnetic environment can be controlled by the user. In the case of PropBot, the robot will be hosting different types of lab equipment, and the robot will need to drive through different types of environments.

Thus, a method to infer heading without dynamic alignment or magnetometers is needed. This can be done with SLAM algorithms with the help of other sensors such as LiDAR, RGB-D, and wheel encoders. These SLAM algorithms use different sensors as inputs to a filter. It is therefore important that the sensor data is unfiltered, as the filter used in SLAM algorithms will not perform as well if the inputs are already filtered. For this reason, off-the-shelf integrated GPS/GNSS and IMU options are not feasible: they provide filtered position outputs and are significantly more expensive than buying GPS and IMU units separately. In addition, using just a GPS/IMU solution for positioning is not ideal under GPS loss conditions, because IMU sensors on their own accumulate significant error over time.
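To make the filtering argument concrete, here is a minimal scalar Kalman filter sketch. It is not the SLAM algorithm used on PropBot; it is a one-dimensional illustration with made-up noise values, showing how uncertainty grows during dead reckoning (for example, under GPS loss) and shrinks when a raw position fix is blended in.

```python
def predict(x, p, dx, q):
    """Dead-reckoning step: shift the estimate by dx and inflate variance by q."""
    return x + dx, p + q


def update(x, p, z, r):
    """Measurement step: blend in a raw position fix z that has variance r."""
    k = p / (p + r)  # Kalman gain: how much to trust the new fix
    return x + k * (z - x), (1.0 - k) * p


# Illustrative values only (position in metres, variances in square metres).
x, p = 0.0, 1.0
for _ in range(5):  # five steps with no position fix
    x, p = predict(x, p, dx=1.0, q=0.2)
drift_variance = p

x, p = update(x, p, z=5.4, r=1.0)  # a single raw position fix arrives
print(f"variance before fix: {drift_variance:.2f}, after fix: {p:.2f}")
```

The update step assumes that the variance `r` describes the raw measurement noise. Feeding it an already-filtered position would violate that assumption, which is the reason given above for preferring unfiltered sensor outputs.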

RGB-D Camera

Cameras are highly effective in classification and object tracking. As deep-learning-based vision algorithms improve, the robustness of dynamic obstacle detection with cameras will continue to improve, which will be very beneficial to the robot’s autonomy system. However, cameras have the weakness of requiring non-deterministic algorithms to identify these obstacles, so it is beneficial to pair this sensor with others for motion planning.

Final Choice: Velodyne Puck

Selection Criteria

| Required Feature | Justification |
|---|---|
| LiDAR shall have high sunlight resistance. | Intense sunlight can prevent the scanner from being able to read its own returning light pulses. |
| LiDAR shall support hardware or software methods to mitigate interference from rain/fog/dust. | Precipitation can interfere with the LiDAR's ability to detect obstacles by reducing the intensity of the signal reflected from the target or by causing false positive detections. |
| LiDAR shall have a built-in temperature control system and an operating range of -20 °C to 50 °C. | Varying the temperature varies the wavelength of the semiconductor laser. This results in inaccurate detection of the returning laser beams. |
| LiDAR shall have an environmental rating of at least IP67 (protection from dust and water). | Robot will be operating outdoors in various weather conditions. |
| Sample rate of 200000 points/sec. | Capturing more data points results in more reliable mapping, localization, and moving object detection. |
| LiDAR shall have a distance range > 40 m. | Robot travels at a maximum speed of 10 km/h (approximately 2.8 m/s). |
| LiDAR shall have a horizontal FOV of ≥ 270 degrees. | Robot should be able to track objects in its frontal and side zones. |
| LiDAR shall have a vertical FOV of ≥ 30 degrees. | LiDAR will be mounted on the robot at least 0.75 m above ground in order to create a comprehensive scan of its environment for wave propagation data analysis. The robot should be able to detect an object of 0.2 m height (height of a small dog) from 2 m away. |
| Power consumption should be < 20 W. | Minimize power consumption to achieve the desired operation time. |

Selection Justification

For a detailed trade study, please refer to Section 4.1.4

The sensor configuration that meets the requirements stated in the table while still minimizing cost and maximizing ease of integration is the Velodyne Puck. Mounting a single Puck at the front of the robot reduces the complexity of the SLAM and object detection/response algorithms and has lower processing power requirements than more complex LiDAR setups. The Puck is widely used in mobile robotics projects, so its specifications have been validated by many research groups, who have also provided support in simulation software such as AirSim, CARLA, and Gazebo. The Puck also supports multi-echo technology, which mitigates the interference from rain/fog/dust without loss in performance.

A drawback of this LiDAR configuration is that it does not provide a 360 degree view around the robot. This can be problematic when the robot makes sharp turns, which increases the risk of colliding with moving objects approaching the robot’s side zone (e.g. cyclists). However, this can be mitigated in the future by installing safety blinkers to alert nearby road users at least 2 seconds before the robot makes a turn.

Horizontal and vertical field of view of LiDAR configuration

Final choice: Ublox NEO-M8P-2

Selection Criteria

A potential solution to achieving high precision position accuracy is using a custom differential GPS system. Differential GPS adjusts real time GPS signals using a fixed known position (Racelogic 2018). Some GPS chips (Ublox NEO-M8P) even allow the base station to move and calculate the relative position of the moving “rover” to a sub-cm accuracy. The following are the pros and cons of using a differential GPS system for PropBot.

Custom Differential GPS Station

Pros

- Provides up to cm level accuracy.

Cons

- Will not work when robot loses GPS signal.
- Will not provide too much added benefit in comparison to Real-Time Kinematic positioning systems (“Real-Time Kinematic and Differential Gps,” n.d.).
- Base station GPS will require power.
- Base station GPS will need to be mounted in open sky area and will need casing to shield against the elements.

This type of system would add complexity to PropBot and make it reliant on base stations for its navigation. Since PropBot needs other sensors such as LiDAR and camera for autonomous decision making, it makes sense to use these sensors to aid in localization with normal GPS inputs. The localization algorithm that is to be used is described in a later section and boasts up to a 5cm position accuracy level.

Due to this, differential GPS systems will not be considered, but Real-Time Kinematic (RTK) GPS systems will be considered.

| Required Feature | Justification |
|---|---|
| GPS system should have metre level accuracy and use GNSS. | According to the related tags, position data must be accurate to 0.5m (NF3.2), and at least metre level GPS accuracy will be required for the robot’s localization algorithms. |
| GPS system should be a standalone device (no IMU) that does not do internal filtering on the position. | Due to the use of a localization algorithm that uses multiple inputs, the position estimate must be unfiltered for optimal results. |
| GPS system should have casing that shields it from light rain, or allow for a plastic case to be easily implemented for an existing circuit board. | Due to time constraints and environmental conditions, a prepackaged GPS is needed that at most requires additional plastic casing to be made. |
| GPS system should have a time-pulse (PPS) signal. | A time-pulse will mitigate the computer’s clock drift and ensure that the collected data is time stamped accurately. |

Selection Justification

For a detailed trade study, please refer to Section 4.1.2

The Ublox NEO-M8P-2 has by far the best position accuracy and is the only system that has an option to use RTK.

Final choice: MPU-9250

Selection Criteria

The required features of the IMU are described in the following table.

| Required Feature | Justification |
|---|---|
| IMU system should be a standalone device that does not do internal filtering on the position. | Due to the use of a localization algorithm that uses multiple inputs, the position estimate must be unfiltered for optimal results. |
| IMU system should have casing that shields it from light rain, or allow for a plastic case to be easily implemented for an existing circuit board. | Due to time constraints and environmental conditions, a prepackaged IMU is needed that at most requires additional plastic casing to be made. |

Selection Justification

For a detailed trade study, please refer to Section 4.1.3

The MPU-9250 is the best option because its accelerometer’s range is 2 g, which is around 20 m/s². Since the robot does not move fast or accelerate suddenly, given the maximum speed limit of 10 km/h (around 2.78 m/s), that range is more than enough for the PropBot. More importantly, in comparison with the other IMU option, the MPU-9250 has an open-source ROS driver. This satisfies the above-mentioned requirements and facilitates the integration between the IMU and the autonomy computer.
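The range argument can be checked with a quick calculation. The sketch below compares the 2 g accelerometer range against the deceleration of a hard stop from top speed. It assumes a constant deceleration over the 1 second stopping time of requirement NF4.1 (mentioned later on this page) and ignores vibration and impacts, so it is a rough sanity check rather than a full analysis.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2, standard value of g
ACCEL_RANGE_G = 2.0         # accelerometer range quoted above
MAX_SPEED_KMH = 10.0        # robot speed limit
STOP_TIME_S = 1.0           # assumed stopping time (requirement NF4.1)


def kmh_to_mps(speed_kmh):
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


accel_range_mps2 = ACCEL_RANGE_G * STANDARD_GRAVITY
hard_stop_decel_mps2 = kmh_to_mps(MAX_SPEED_KMH) / STOP_TIME_S
headroom = accel_range_mps2 / hard_stop_decel_mps2

print(f"range {accel_range_mps2:.1f} m/s^2, "
      f"hard stop {hard_stop_decel_mps2:.2f} m/s^2, "
      f"headroom x{headroom:.1f}")
```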

Final choice: Intel Realsense

Selection Criteria

In order to select an appropriate camera for forward vision, many different vendors were considered. Cameras that are already available, such as the Intel Realsense, were also considered to see whether the current resources are already sufficient.

The main considerations for selecting the camera are the camera’s connection interface and its imaging/sensor characteristics. The Jetson TX-1 is fairly limited in IO, particularly in USB 3.0 ports, of which it has only one. As a result, cameras with other connectivity options like CSI-MIPI or Ethernet are preferred.

| Required Feature | Justification |
|---|---|
| Global shutter is preferred over rolling shutter. | Global shutter reduces imaging artifacts for fast moving objects. Less artifacting improves object detection. |
| Resolution should be 720p or 1080p. | Low resolution makes it hard to resolve detail at long distance. High resolution uses too much processing power. Low resolution sensors usually have bigger pixel sizes, which provides better low light imaging. |
| Wider FOV (but not too wide) is desired. Minimum of 60 degrees. | Larger field of view allows the robot to see objects coming from the sides sooner. Very high FOV lenses (fisheye) distort the image, which is undesired for object detection. |
| Waterproof and/or rugged casing is desired. | Pre-made waterproofing and rugged cases would allow for more flexible mounting positions. Removes the need to design custom waterproof casing, which can be complex for cameras (“Compare Intel Realsense Depth Cameras (Tech Specs and Review),” n.d.). |

Selection Justification

For a detailed trade study, please refer to Section 4.1.5

It is important to note that our client has already provided us with an Intel Realsense camera. The Intel Realsense overall has the second best sensor characteristics, mainly due to the inclusion of a global shutter. The depth data can also be used for localization. Due to its high performance and ability to be used for localization, the Intel Realsense is the best option.

A drawback of the Intel Realsense is its narrow horizontal FOV of 70 degrees, especially since the Leopard Imaging camera has a 92 degree horizontal FOV lens with 1% distortion. The narrow horizontal FOV means PropBot needs to rely more on LiDAR and other sensors to detect objects approaching from its sides.

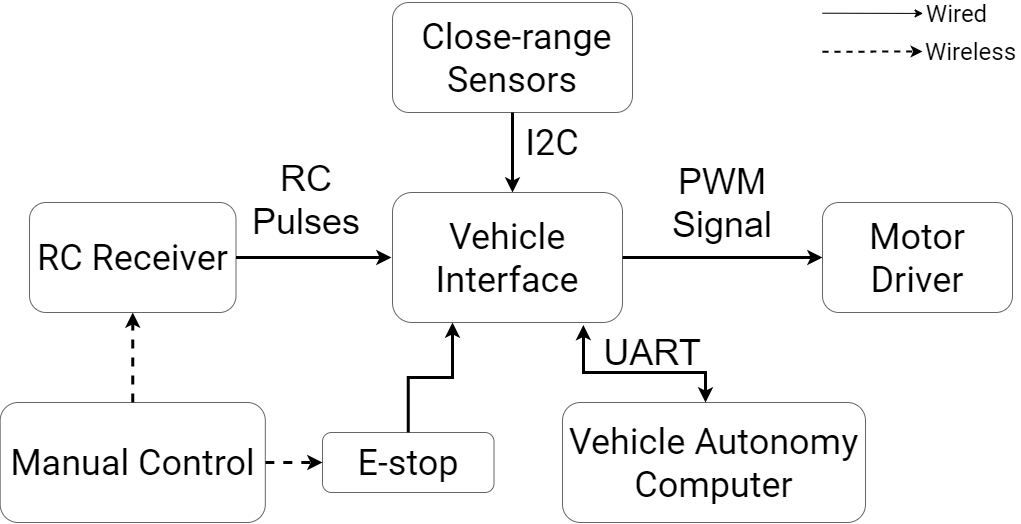

The following figure shows all the hardware elements that are involved in the robot control subsystem. This section will elaborate on all these hardware elements.

Robot control involves receiving motion information from a source (RC or autonomy computer), and converting the information into a motion intention (PWM signals, CAN commands, RS-232 messages, etc.) that will be sent to the motor drivers. All of this must occur while considering inputs from the close-range sensor network and the E-stop (which is again a hardware zero speed or brake command assertion mechanism, not a power-off switch). All the information is ingested by the vehicle interface (one or more control devices) and then sent out to the various peripheral devices. The interface acts more like a command relay system, with a bit of extra functionality.
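The relay behaviour described above can be sketched as a small arbitration function. This is a simplified illustration only: the names `WheelCommand`, `Source`, and `select_command` are hypothetical, and the actual firmware on the vehicle interface may be organized differently.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    """Where motion information comes from."""
    RC = "rc"
    AUTONOMY = "autonomy"


@dataclass(frozen=True)
class WheelCommand:
    """Normalized speed for each side of the robot, from -1.0 to 1.0."""
    left: float
    right: float


STOP = WheelCommand(0.0, 0.0)  # zero-speed command, not a power cut


def select_command(estop_pressed, obstacle_too_close, active_source,
                   rc_command, autonomy_command):
    """Pick the command to relay to the motor drivers.

    Safety inputs (E-stop, close-range sensors) override any motion source.
    """
    if estop_pressed or obstacle_too_close:
        return STOP
    if active_source is Source.RC:
        return rc_command
    return autonomy_command


rc = WheelCommand(0.5, 0.5)
auto = WheelCommand(0.2, -0.2)
print(select_command(False, False, Source.AUTONOMY, rc, auto))
print(select_command(True, False, Source.RC, rc, auto))
```

Keeping the safety checks ahead of the source selection means neither the remote controller nor the autonomy computer can override an asserted E-stop in this sketch.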

Simplified Robot Control System Diagram

Final Choice: Arduino Mega

Selection Justification

For a detailed trade study, please refer to section 4.2.3 in the "Design Specifications" document in Propbot/docs/Reports

The need for either drivers with integrated processors or a separate device for vehicle interfacing is motivated by the ability to have the autonomy side be hardware agnostic. Although the RoboteQ has integrated control electronics and can be daisy-chained to create a connected system, writing custom code for the RoboteQ drivers eliminates firmware portability. To ensure hardware decoupling and firmware portability and scalability, the decision was made to add in a separate controller: the "Vehicle Interface". The simplest option is an Arduino device due to its affordability, availability, and ease of use. Further, switching between various Arduino platforms requires little to no changes.

Bi-directional interfaces

The following table is an overview of what the vehicle interface communicates with and how.

| Item | Interface | Details |
|---|---|---|
| Autonomy computer | USB (UART) | USB 2.0 or 3.0 |
| Remote controller | RC pulse | 2.4GHz, 6 Channel |
| Motor drivers | CAN | requires a CAN shield |

Vehicle Interface and Motor Driver Communication

The current motor drivers operate purely via PWM commands. The newly selected motor drivers - RoboteQ SBL1360A (see section 2.4.2) - use true serial (inverter twisted pairs, [-10V, +10V]), whereas the Arduino utilizes Transistor-Transistor Logic (TTL) serial, which is [0V, +3.3V/+5V]. To facilitate communication across the differing voltage levels, either resistors and level-shifters can be used on the driver side to convert to TTL, or a fully integrated RS-232 to TTL converter can be purchased. However, RS-232 is best suited to communicating with one device, such as a single motor driver, and communicating over RS-232 to a single driver and then having that driver relay the message over CAN introduces latency. Instead, the vehicle interface should act as the master and the drivers as the slaves. Further, more than one device may eventually make up the vehicle interface, as the number of input ports limits the integration of additional peripheral devices for improved emergency response and driving capabilities. Therefore, it is important to use a communication protocol that supports multiple master devices, such as CAN.

RS-232 vs. CAN

RS-232 can be accomplished via two wires and can be extended to complex multi-wire protocols. However, the main drawback of RS-232 is that the transmitter and receiver configurations are single-ended. This makes the system very susceptible to noise, especially at higher baud rates (up to 19.2 kbps (“How Rs232 Works,” n.d.)).

CAN is a complex protocol; however, it allows for multiple masters along with multiple connected communication nodes at a higher baud rate (up to 1 Mbps for CANopen (“CANOPEN: An Application Layer Protocol for Industrial Automation,” n.d.)) with better noise immunity. Luckily, there is a lot of support on both the Arduino side and the RoboteQ side for CAN protocols (CANopen, RoboCAN, etc). In terms of hardware support, CAN adapter boards for the Arduino Mega are available, so nothing custom has to be created. For these reasons, the vehicle interface will communicate with the motor drivers via CAN.
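To illustrate how one bus can address several drivers, the sketch below packs a speed command into a CAN-style identifier and payload. The frame layout here (`BASE_ID` plus node number, one signed 16-bit value in per-mille of full speed) is entirely hypothetical and is not the CANopen or RoboCAN object layout; the real message format must be taken from the RoboteQ documentation.

```python
import struct

BASE_ID = 0x200     # hypothetical base identifier for speed commands
MAX_NODE_ID = 127   # assumed upper bound on node numbers for this sketch


def encode_speed_frame(node_id, speed_permille):
    """Return (identifier, payload) for a hypothetical speed command."""
    if not 1 <= node_id <= MAX_NODE_ID:
        raise ValueError("node_id out of range")
    if not -1000 <= speed_permille <= 1000:
        raise ValueError("speed out of range")
    identifier = BASE_ID + node_id                # fits in an 11-bit CAN ID
    payload = struct.pack("<h", speed_permille)   # little-endian signed 16-bit
    return identifier, payload


def decode_speed_frame(identifier, payload):
    """Inverse of encode_speed_frame."""
    (speed_permille,) = struct.unpack("<h", payload)
    return identifier - BASE_ID, speed_permille


frame = encode_speed_frame(node_id=3, speed_permille=-250)
print(hex(frame[0]), frame[1].hex())
print(decode_speed_frame(*frame))
```

Because each driver only reacts to its own identifier, the vehicle interface can command all four wheel drivers over the same twisted pair.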

Final Choice: RoboteQ SBL1360A

Selection Criteria

Currently, the system utilizes a 24V/15A motor driver that is untraceable and has no datasheet online. We are assuming those motor drivers are BLDC (Brushless Direct Current) based on the similar motor drivers we found online (“24V 250W Brushless Motor Controller Electric Bicycle Speed Control for E-Bike and Scooter” 2019).

Given that the complexity of motor control will affect our approach to the rest of the self-driving system, it is necessary to move to motor drivers that have established support and possess extensive functionality: tachometer, encoder integration, commutation sensor feedback, support for multiple communication protocols, daisy-chaining support, etc. Although implementing four such motor drivers may cost over 1000 CAD, we believe it would significantly decrease the time to achieve safe non-autonomous driving capabilities and allow for more focus on the self-driving platform.

| Required Feature | Justification |
|---|---|
| Accurate speed control | As this robot is in initial stages, the speed is limited to 10km/h to ensure the safety of robot and other road users. This speed will allow for the robot user to achieve a small stopping distance during manual control, and easily hit the onboard E-stop if needed. |
| Robot shall perform complex maneuvers (e.g. turn, decelerate, stop) | The robot must be able to perform the required maneuvers in order to appropriately navigate and avoid obstacles. |
| Robot shall facilitate longitudinal motor set control via remote communication | The robot must be able to take commands from a remote controller. A user must be able to have control over the motion, so the longitudinal motor sets must be able to move independently. |

Given these requirements, we are now looking for either standalone motor drivers or a system of motor drivers that can be daisy-chained together to facilitate complete control of the robot. Further, cheaper motor drivers could be integrated into the system for the non-motion motors to improve braking by either de-energizing or energizing the braking system. The requirements of the motor drivers are listed in the table above.

Selection Justification

For a detailed trade study, please refer to Section 4.2.2

Among all the options, the RoboteQ SBL1360A best fits all the requirements outlined for the motor driver.

In addition, it requires no additional controller, as a standalone motor driver would, given that the motor drivers can be daisy-chained together:

> The SBL1360A accepts commands received from an RC radio, Analog Joystick, wireless modem, or microcomputer. Using CAN bus, up to 127 controllers can be networked on a single twisted pair cable. Numerous safety features are incorporated into the driver to ensure reliable and safe operation. (“RoboteQ Sbl1360,” n.d.)

Further, it is an intelligent system, and so the functionality and visibility of the system can be customized (note: the "controller" in the quotation below refers to the motor driver):

> The controller’s operation can be extensively automated and customized using Basic Language scripts. The driver can be configured, monitored and tuned in real time using a RoboteQ’s free PC utility. The controller can also be reprogrammed in the field with the latest features by downloading new operating software from RoboteQ. (“RoboteQ Sbl1360a,” n.d.)

Currently, a hardware switch connects the entire electrical system to the battery bank. In the unlikely event that power must be cut to the entire system, the switch can be flipped, which acts as a power lockout assertion mechanism.

Existing power-off switch

| Required Feature | Justification |
|---|---|
| The fallback ready user shall be able to kill power to the system via power-off switch. | In an extreme case, the switch can be flipped to cut power to the entire system. |

To halt speed commands to the motor drivers, an emergency stop button will be used. The vehicle interface will be interrupted and shall put the system into "coast" in which no speed commands are sent. Provided the legacy motor drivers are swapped for ones that are fully functional, a "brake" command could be integrated (braking via inverting the wheel motor electromotive force (EMF)) and would replace "coast" in the E-stop context.

Additionally, a remote halt option is available via a switch on the remote controller. Again, this will not cut power to the motors, but will put the wheel motors into "coast" (or "brake", which is desired).

| Required Feature | Justification |
|---|---|
| The fallback ready user shall be able to halt motion commands to the robot via e-stop. | In the event of an emergency, the button can be pressed to stop sending motion commands to the system. |

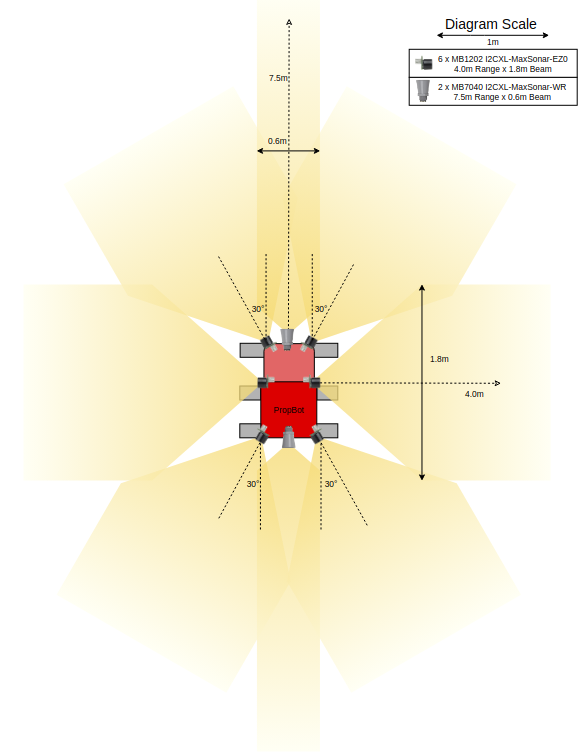

Final choice: 6 x MB1202 MaxSonar, 2 x MB7040

Selection Criteria

The main purpose of the close-range sensors is to provide a final fallback collision avoidance mechanism for the robot. The requirements for the close-range sensors are described in the following table. The minimum requirements are fairly low due to the large variety of sensors with different characteristics.

| Required Feature | Justification |
|---|---|
| Detects long distance. Minimum 2 meters. | Longer range gives the robot more distance to react and stop. |
| Detects Wide Arc. Minimum | A wider arc reduces the blind spots of the robot. |
| Good Resolution/Accuracy. At most 10 cm. | Should be accurate enough to be useful. But the main goal is not distance estimation. |
| Weather proof. Minimum IPX3. | Allows the sensor to be placed in more optimal positions. No need to design a case. |

Selection Justification

For a detailed trade study, please refer to Section 4.2.1

Overall most sensors go far beyond our requirements for accuracy as the close-range sensors are used to detect whether an object is within a certain threshold distance rather than using its exact range values. Additionally the high sample rates of 50-60 Hz are not necessary, as PropBot moves slowly (max speed is 10km/h).

The best option is the MaxSonar. While it has a high $100 price tag, no other sensor comes close in terms of detection range. It also has a good variety of digital interface options for easy integration and a completely waterproof casing. The main disadvantage is the low 10Hz sample rate, but given the long 10m range and PropBot’s slow speeds, it is likely to be sufficient. Given that the robot drives at a max speed of 10km/h (2.8m/s) and stops 1 second after the stop command is given (as defined by requirement NF4.1), the sensors should trigger when the robot is at least 5m away from an obstacle (accounting for some latency in the braking system). This indicates that any update rate larger than 2.8/(10-5) = 0.56Hz is adequate.
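The update-rate estimate above can be reproduced with a few lines. The function name `min_update_rate_hz` is only illustrative; the inputs are the speed, sensor range, and trigger distance quoted in the paragraph.

```python
def min_update_rate_hz(speed_mps, sensor_range_m, trigger_distance_m):
    """Lowest sample rate that still yields a reading between the moment an
    obstacle enters sensor range and the moment it reaches the trigger distance."""
    margin_m = sensor_range_m - trigger_distance_m
    if margin_m <= 0:
        raise ValueError("sensor range must exceed the trigger distance")
    return speed_mps / margin_m


required_hz = min_update_rate_hz(speed_mps=2.8, sensor_range_m=10.0,
                                 trigger_distance_m=5.0)
SENSOR_RATE_HZ = 10.0  # MaxSonar sample rate quoted above

print(f"required: {required_hz:.2f} Hz, available: {SENSOR_RATE_HZ:.0f} Hz")
```

With these numbers the 10Hz sensor exceeds the required rate by more than an order of magnitude, which supports the conclusion that the sample rate is not a limiting factor.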

The comparison of sensors shows that the MaxSonar line of ultrasonic sensors has the best performance characteristics. There are many models of the MaxSonar with different use cases. To choose the appropriate sensors, we analyzed their usage on PropBot, which is as a fallback to avoid collision.

The most desired characteristic for this use case is a wide coverage area. This is where the MB1202 MaxSonar provides the best performance with its wide beam. These wide beam sensors can be placed around the robot to provide 360 degree coverage. Additionally, two MB7040 are added to do more accurate range prediction for the front and back of the robot. This is necessary as the robot primarily moves in these directions. Due to the wide beam of the MB1202, it may produce more false alarms, thus having a narrow beam sensor lets the robot more accurately see the free space in front.

The following figure shows the optimal sensor mounting for full coverage around the vehicle. It is important to note that the beam width and range values here are fairly conservative, as the datasheet values are for detection of very small objects (1 inch diameter dowel). Larger objects such as humans or vehicles can be detected at greater distances.

| Model | Range | Beam Width | Connection |
|---|---|---|---|
| MB1202 | 4.0m | 1.8m | I2C |
| MB7040 | 7.5m | 0.6m | I2C |

Ultrasonic sensor mounting and coverage

Final Choice: FS-T6

Selection Criteria

The selection criteria for the remote controller are described in the following table.

| Required Feature | Justification | Specification |
|---|---|---|
| The E-stop button on the PropBot is remotely linked | Users do not need to get to the PropBot and press the E-stop button in emergency cases. | The manual control device shall have some programmable buttons or switches. |
| Robot shall facilitate longitudinal motor set control via remote communication | The two sets of wheels move differently when the PropBot is making a turn. | The manual control device shall have two joysticks. |

Selection Justification

There are three types of remote controllers: infrared, voice, and radio frequency (RF). The infrared remote controller is unreliable in the sunlight and the voice remote controller can be easily affected by other non-users near the robot.

The RF remote controller is the best option. We want to use the remote controller to operate four basic functions, which are:

- Move the left-side wheels forward/backward
- Move the right-side wheels forward/backward
- Remote E-stop assertion mechanism (switch/button)
- Remote switch between manual and autonomous control modes

Hence, the remote controller should have at least four independent channels. The FS-T6 is a six-channel transmitter with two joysticks and four programmable switches. This provides a joystick for each set of wheels and programmable switches for a remote kill switch and an autonomy-to-manual mode switch. Figure 7 demonstrates how the four functions mentioned above are distributed on the transmitter. The remote controller receiver is the FS-R6B, which is a six-channel receiver.
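On the vehicle interface, each receiver channel arrives as an RC pulse whose width encodes the stick or switch position. The sketch below converts pulse widths into commands. The pulse limits (1000 to 2000 microseconds with 1500 as neutral), the deadband, and the channel assignment in `CHANNEL_MAP` are assumptions based on common hobby RC conventions, not values measured from the FS-R6B or read from Figure 7.

```python
# Hypothetical channel assignment; the real mapping is shown in Figure 7.
CHANNEL_MAP = {
    "left_wheels": 1,
    "right_wheels": 2,
    "estop": 3,
    "mode_switch": 4,
}


def pulse_to_command(pulse_us, min_us=1000, mid_us=1500, max_us=2000,
                     deadband_us=25):
    """Map a stick pulse width to a normalized speed in [-1.0, 1.0]."""
    pulse_us = max(min_us, min(max_us, pulse_us))  # clamp out-of-range pulses
    offset = pulse_us - mid_us
    if abs(offset) <= deadband_us:                 # ignore jitter near neutral
        return 0.0
    span = (max_us - mid_us) if offset > 0 else (mid_us - min_us)
    return offset / span


def switch_is_on(pulse_us, threshold_us=1500):
    """Interpret a two-position switch channel."""
    return pulse_us > threshold_us


print(pulse_to_command(1750), pulse_to_command(1510), switch_is_on(1900))
```

The deadband keeps small stick jitter around neutral from creeping the wheels, and the clamp guards against malformed pulses from the receiver.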

FS-T6 transmitter
