
Development backend

This page assumes a working knowledge of Python/Django.

Like most modern Django sites, the code is split into "apps". This page breaks

down the GMS apps and describes the functionality in each. From the project

root (./webapp):

- Home - landing pages, notices, about, request forms, arbitrary pages (/page.html)

- Events - list and display events

- News - list and display news items, news feed web scrapers (e.g. BioCommons)

- People - the Galaxy team

- Utils - not a Django app, but a collection of functions that are used throughout the application.

Other aspects worth reading about:

This is a great place to start in the backend, because it is a high-touch point and is relatively simple in design.

If we take a look at the routes available in home/urls.py, we can see that the root URL points to views.index:

"""URLS for static pages."""

from django.urls import path, re_path

from . import views, redirects

urlpatterns = [
    path('', views.index, name="home_index"),
    # ...

We can see how this view is rendered by checking out the index function in home/views.py:

def index(request, landing=False):

"""Show homepage/landing page."""

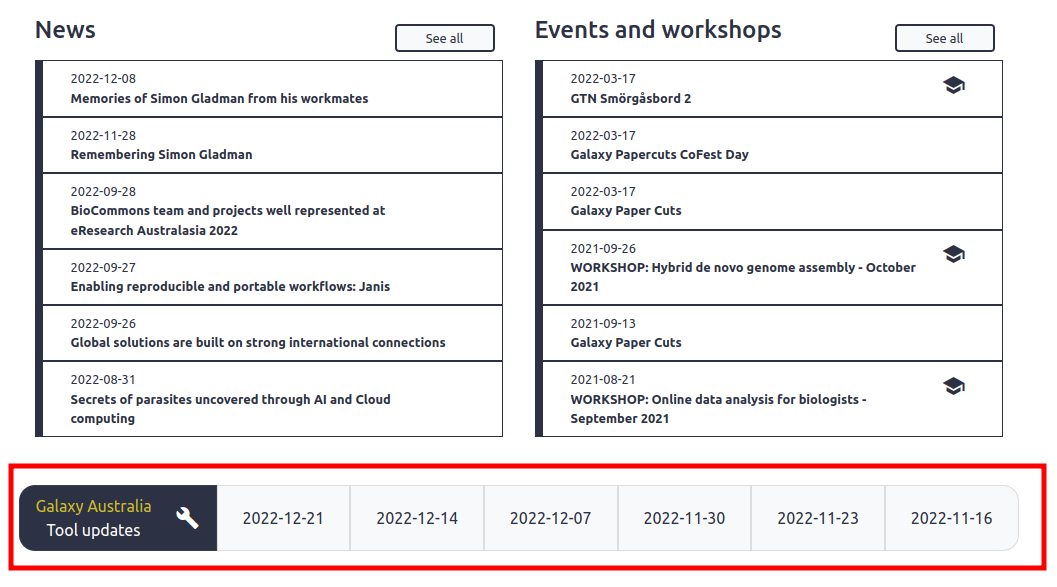

news_items = News.objects.filter(is_tool_update=False)

events = Event.objects.all()

notices = Notice.objects.filter(

enabled=True,

subsites__name='main',

)

tool_updates = News.objects.filter(is_tool_update=True)

if not request.user.is_staff:

news_items = news_items.filter(is_published=True)

events = events.filter(is_published=True)

notices = notices.filter(is_published=True)

tool_updates = tool_updates.filter(is_published=True)

return render(request, 'home/index.html', {

'landing': landing,

'notices': notices.order_by('order'),

'news_items': news_items.order_by('-datetime_created')[:6],

'events': events.order_by('-datetime_created')[:6],

'tool_updates': tool_updates.order_by('-datetime_created')[:6],

})For what seems like a fairly static page there is actually quite a lot to look at here. We fetch records from the database through the News, Event and Notice models and pass them to render() with the appropriate HTML template. You can check out index.html to see how these vars (notices, news_items etc.) are rendered. The landing variable should actually be deprecated now, since it is no longer used by the frontend.

What we cover above is a very standard flow for creating or updating a webpage. We usually know the URL from interacting with the browser, so urls.py will lead us to the request handler in views.py. From here, data logic is exported to models.py, form logic to forms.py and other complex logic to various other places. But views.py is very much the root of our request-response cycle. It is the "controller" in MVC architecture.

A few things to note here:

- Tool updates are just a special kind of news item that is displayed separately.

- For public (non-staff) users we filter out unpublished material. For admins, these are displayed with a "red eye" icon.

- This view is for the main subsite, so we only display Notices posted to this subsite. Other subsites (e.g. genome) can have their own Notices.

As you would expect, the index page renders the main landing page. For rendering other landing pages (e.g. for subsites), we need to look at the landing view. A glance at urls.py shows us that the URL path (e.g. /landing/genome) is parsed to give us the subdomain name. This is then passed to the view function:

def landing(request, subdomain):
    """Show landing pages for *.usegalaxy.org.au subsites."""
    template = f'home/subdomains/{subdomain}.html'
    try:
        get_template(template)
    except TemplateDoesNotExist:
        return HttpResponseNotFound('<h1>Page not found</h1>')

    subsite = Subsite.objects.get(name=subdomain)
    notices = subsite.notice_set.filter(
        enabled=True,
    )
    if not request.user.is_staff:
        notices = notices.filter(is_published=True)
    return render(request, template, {
        'notices': notices,
    })

To keep things DRY here, we use a single view to render all subsite landing pages. As you can see, the only data passed to the templates is Notices. For now this is fine, as these pages will mostly contain static content, but in the future we might want to pass some other data. For more complex cases it's better to break them out into a dedicated view function, as with index.

A.K.A "subdomains" / "community domains"...

Most of the administration/creation of subsites is done on Galaxy AU itself. GMS has a very thin model layer to facilitate the display of multiple landing pages.

The framework above shows how alternate landing pages are returned for a given domain. Pretty simple. But one small piece has not been mentioned which allows cross-posting of Notices across landing pages: the Subsite model:

# home/models.py

class Subsite(models.Model):
    """Galaxy subsite which will consume a custom landing page."""

    name = models.CharField(max_length=30, unique=True, help_text=(
        "This field should match the subdomain name. e.g."
        " for a 'genome.usegalaxy.org' subsite, the name should be 'genome'."
        " This also determines the URL as: '/landing/<subsite.name>'. The"
        " HTML template for this landing page must be created manually."
    ))

    def __str__(self):
        """Represent self as string."""
        return self.name

As I said before, it is a very thin layer with only one field. A name is enough for admins to create a subsite quickly and link Notice items to it when they are created. We can see that happening in the Notice model and web admin:

# home/models.py

class Notice(models.Model):
    """A site notice to be displayed on the home/landing pages."""

    # ...

    subsites = models.ManyToManyField(
        Subsite,
        help_text=(
            "Select which subdomain sites should display the notice."
        ),
    )

This allows us to make this query in views.py:

Notice.objects.filter(enabled=True, subsites__name=subdomain)

In the future, we might add more fields to the Subsite model to associate other data with subsites.

Here we are just modifying Django's default web admin for available models (defined in models.py). Everything is pretty standard Django stuff so shouldn't need much special clarification, but it is a good reference for how the Django admin can be modified to do what you want. Most of the logic here is ordering and filtering of fields.

For UserAdmin (administration of the User model), we also specify custom forms (defined in admin_forms.py) for the "Add" and "Change" views. This allows us to replace a weird password-hash field (the default password widget) with a more useful password change field. The nice thing about this is we don't have to edit or re-write HTML templates, we just modify the Form class that will be used for rendering.

This is a fairly critical function of GMS - support request forms for general enquiry, tool requests and data quota requests.

We can start again with a glance at urls.py to get a sense of the available routes:

"""URLS for static pages."""

from django.urls import path, re_path

from . import views, redirects

urlpatterns = [
    # ...
    path('request', views.user_request, name="user_request"),
    path('request/tool', views.user_request_tool, name="user_request_tool"),
    path('request/quota', views.user_request_quota, name="user_request_quota"),
    path('request/support',
         views.user_request_support, name="user_request_support"),
    path('request/alphafold',
         views.user_request_alphafold, name="user_request_alphafold"),
    # ...
]

It's pretty obvious from the URL patterns what these views are for. These view handlers are 100% boilerplate Django:

def user_request_tool(request):
    """Handle user tool requests."""
    form = ResourceRequestForm()
    if request.POST:
        form = ResourceRequestForm(request.POST)
        if form.is_valid():
            logger.info('Form valid. Dispatch content as email.')
            form.dispatch()
            return render(request, 'home/requests/success.html')
        logger.info("Form was invalid. Returning invalid feedback.")
        # logger.info(pformat(form.errors))
    return render(request, 'home/requests/tool.html', {'form': form})

- Pass the POST data to a new Form object

- If the form is valid, do something (we use a form.dispatch method here)

- Return some feedback to the user (success/form error)

- Or, if it was a GET request, just return a blank form

This demonstrates a good way to structure Django code - use views to carry out web logic, and pass off other logic to some other part of the application. In this case, it makes more sense for the "form" related logic to be carried out in forms.py. Let's have a look at the dispatch handler. Obviously when a user submits these forms successfully we want GMS to send an email to the helpdesk. So, we have a single dispatch_form_mail function to take care of all the generic mail logic in one place:

def dispatch_form_mail(
        to_address=None,
        reply_to=None,
        subject=None,
        text=None,
        html=None):
    """Send mail to support inbox.

    This should probably be sent to a worker thread.
    """
    # ...

This takes care of the very routine process of building multipart email objects, which are dispatched with a retry loop. If this fails three times, it writes the error and text body to the error log. In production, this is hooked up to the #gms-alerts Slack channel which the devs should be subscribed to. This has saved our butts a few times because failed form submissions come through Slack, rather than being lost or buried in log files.
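
The retry behaviour described above can be sketched in plain Python. This is only an illustration of the pattern, not the GMS implementation: `send_with_retry` and its `send` argument are hypothetical names, and the real function builds and sends the email itself.

```python
import logging
import time

logger = logging.getLogger(__name__)


def send_with_retry(send, retries=3, delay=0.0):
    """Call ``send()`` until it succeeds or ``retries`` attempts have failed.

    ``send`` is a hypothetical zero-argument callable that raises on failure.
    Returns True on success, False once every attempt has failed.
    """
    for attempt in range(1, retries + 1):
        try:
            send()
            return True
        except Exception as exc:
            logger.warning(
                "Send attempt %d/%d failed: %s", attempt, retries, exc)
            if attempt < retries:
                time.sleep(delay)
    # Logged at ERROR so an error-level handler (e.g. Slack) picks it up.
    logger.error("Giving up after %d failed attempts", retries)
    return False
```

Logging the final failure at ERROR level is what lets an error-level log handler surface a lost form submission.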

You will also notice that we are sending mail with the utils.postal module. It turns out that the Postal mail server is REALLY fussy about SMTP headers, so we have written the utils.postal.send_mail wrapper to make sure this goes smoothly. With any other mail server we could just use email.send, as the code suggests.

As I've noted in the code above, this function should probably run asynchronously on a worker thread so that web threads are not wasting their precious time sending mail. But they send mail pretty fast, and are blocked for less than a second, which is fairly acceptable. Since we don't have users submitting these forms constantly, we can easily get away with this. However, it would be very wise to limit the number of POST requests per IP address to prevent abuse of these endpoints.
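
One way the per-IP limit suggested above could look is a small sliding-window counter. This is a sketch under assumptions: `PostRateLimiter` is a hypothetical class that does not exist in GMS, and the limit and window values are arbitrary. It keeps state in memory, so it would only limit requests within a single process.

```python
import time
from collections import defaultdict, deque


class PostRateLimiter:
    """Allow at most ``limit`` requests per ``window`` seconds for each key."""

    def __init__(self, limit=5, window=3600.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)

    def allow(self, key):
        """Record a request for ``key`` and return whether it is permitted."""
        now = self.clock()
        hits = self._hits[key]
        # Discard timestamps that have fallen outside the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False
        hits.append(now)
        return True
```

A view would call `allow()` with the client IP before processing a POST, and return an error response when it comes back False. With several web workers, the counters would need to live in a shared store rather than in process memory.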

Ok, let's take a look at the dispatch method for the ResourceRequestForm (tool/dataset requests):

def dispatch(self):
    """Dispatch form content as email."""
    data = self.cleaned_data
    template = (
        'home/requests/mail/'
        f"{data['resource_type']}"
    )
    dispatch_form_mail(
        reply_to=data['email'],
        subject=(
            f"New {data['resource_type']}"
            " request on Galaxy Australia"
        ),
        text=render_to_string(f'{template}.txt', {'form': self}),
        html=render_to_string(f'{template}.html', {'form': self}),
    )

Our dispatch_form_mail handler lets us write these nice, thin dispatch methods:

- Build the template path based on the resource type (tool/data)

- Call dispatch_form_mail, passing rendered templates for both a text and HTML mail body

We created a request form for users to request access to the AlphaFold tool. This form is similar to the others, but with a few checks to reduce the admin burden of invalid requests. The submitted email address should be:

- A registered AU institution address (defined here)

- A registered usegalaxy.org.au user

We can take a look at AlphafoldRequestForm in forms.py to see the solution, where we have added some logic to the Form.clean method:

class AlphafoldRequestForm(forms.Form):
    """Form to request AlphaFold access."""

    # ...

    def clean_email(self):
        """Validate email address."""
        email = self.cleaned_data['email']
        if not is_institution_email(email):
            raise ValidationError(
                (
                    'Sorry, this is not a recognised Australian institution'
                    ' email address.'
                ),
                field="email",
            )
        return email

Again, we are creeping into logic that is not strictly form-related so this has been exported to the utils.institution module, keeping the logic here clean and easy to read. This module has a few simple functions that cross-reference the submitted value against an institutional email list read from JSON files.

- For the Galaxy account issue, we export the logic to utils.galaxy.is_registered_email. This function uses the BioBlend library to check whether the submitted email address matches a usegalaxy.org.au account. This has to be handled carefully - returning a "user not found" message would enable phishing of Galaxy account email addresses through this form. Instead, we return "success" in either case and inform the user by email that their request couldn't be completed.
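
To make the institution check more concrete, here is a minimal sketch of matching an address against a domain list loaded from JSON. The JSON layout (a flat array of domain strings) and both function names are assumptions for illustration; the actual utils.institution module may store and match its data differently.

```python
import json


def load_domains(json_text):
    """Return a set of lower-cased domains from a JSON array of strings."""
    return {domain.lower() for domain in json.loads(json_text)}


def email_matches_institution(email, domains):
    """Return True if the email's domain is a listed one or a subdomain of it."""
    _, sep, domain = email.strip().lower().rpartition('@')
    if not sep or not domain:
        # No "@" at all, or nothing after it.
        return False
    return any(
        domain == listed or domain.endswith('.' + listed)
        for listed in domains
    )
```

Matching on the leading dot accepts a subdomain such as student.example.edu.au, while an unrelated domain that merely ends with the same characters is rejected.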

We often get asked to put up arbitrary static pages on GMS, so it's nice to have a framework for doing this easily with no overhead.

Any URL ending with *.html will be served with the page view handler. To create a new webpage with this handler, simply add an HTML template to home/templates/home/pages. You'll find an example.html file in there that you can copy and fill out with your content. There are always a few other pages in there you can use as examples too.

Once your file exists, you can access it at site.com/<mytemplate>.html. That's it.

Note that this works great for static pages, but if you want to render some data you might need to write a dedicated view to fetch and pass the data.

The events app is fairly straightforward. At the code level it interacts with the News app, since they share the Tags and Supporter models, which can be linked to multiple News and Event records to be displayed like so:

The other thing the Event and News models have in common is rendering of markdown text content.

For both models, admins write the article body in markdown and GMS then renders it to HTML. Some markdown rendering logic is in the utils.markdown module, but most is taken care of directly by the markdown2 Python library through a custom template tag.

We do need to modify the web admin add/change Event forms to allow markdown entry. This is done by injecting a bit of CSS/JavaScript from the simplemde.js ("simple markdown editor") library (with a sprinkle of our own for polish).

This can be done fairly easily in admin.py by declaring a Media class:

# events/admin.py

class EventAdmin(admin.ModelAdmin):
    """Administer event items."""

    class Media:
        """Assets for the admin page."""

        js = (
            # Load simplemde over a CDN:
            '//cdn.jsdelivr.net/simplemde/latest/simplemde.min.js',
            # some js to add *required* label to appropriate fields:
            'home/js/admin-required-fields.js',
            # Configuring the markdown editor:
            'home/js/admin-mde.js',
        )
        css = {
            'screen': (
                # Load simplemde over a CDN:
                '//cdn.jsdelivr.net/simplemde/latest/simplemde.min.css',
                # Some modifications to the markdown editor styling:
                'home/css/admin-mde.css',
            ),
        }

This gives us a nice markdown editor field in the web admin form:

As we said, the rendering of markdown to HTML is taken care of by the markdown2 library. But there is one part of markdown rendering that we have to take care of in GMS - rendering the src attribute of uploaded images into the markdown. If you take a look at the Admin docs for events, you'll see that admins have the option to upload images to be displayed in the body markdown. For tagging these images in markdown, a placeholder tag (e.g. ) is used for the src attribute. In the backend, when the model instance is saved, we want to replace these tags with the real URI for the uploaded image(s). The important thing to understand here is when this happens. This is handled using Django's signals, which allow you to provide handlers for certain model events, such as model save and delete:

# events/signals.py

@receiver(post_save, sender=EventImage)
def render_markdown_image_uris(sender, instance, using, **kwargs):
    """Replace EventImage identifiers with real URIs in submitted markdown."""
    event = Event.objects.get(id=instance.event_id)
    event.render_markdown_uris()

Here we do very little:

- Fetch the event instance related to the uploaded image

- Call the render_markdown_uris method on that model instance

This approach works without getting too complicated, but it is a bit of a hacky way of handling this. The issue is that this logic requires event.body to be rendered once for every EventImage that is saved. Ideally, we would do this only once, after saving the last image. However, it's difficult to tell whether it is the last image using signals. By definition, any remaining images have not yet been saved and are therefore not available to query! In reality we rarely have more than 2 images to save, so this redundant processing is tolerable.
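
The reason the repeated rendering is harmless can be shown with a small sketch. The placeholder syntax `[[image-N]]` and the function name are invented for this example and are not the tags GMS actually uses. The point is that placeholders without a saved image are left alone, so the substitution can run again after each image is saved.

```python
import re

# Hypothetical placeholder syntax, for illustration only.
PLACEHOLDER = re.compile(r'\[\[image-(\d+)\]\]')


def render_image_uris(body, uris):
    """Swap each ``[[image-N]]`` placeholder for the N-th URI (1-indexed).

    Placeholders with no matching upload are left untouched, so the function
    can safely run again after each further image is saved.
    """
    def substitute(match):
        index = int(match.group(1)) - 1
        if 0 <= index < len(uris):
            return uris[index]
        return match.group(0)

    return PLACEHOLDER.sub(substitute, body)
```

Running it once per saved image costs an extra pass over the body each time, which matches the trade-off described above.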

As suggested before, both News and Event models share this logic. They actually both share the EventImage model too, which is a little misleading. EventImage should probably be renamed ArticleImage.

Most of the logic here is copied or imported from Events. We will cover a few exceptions here.

This has one purpose - to allow Jenkins to post "tool updates" as News articles (though the API could easily allow posting regular News articles too). Tool updates are rendered separately from regular News items on the landing page:

The News API (accessible at /news/api/create) is fairly straightforward.

It relies on the APIToken model for Authentication - admins can create new API tokens through the Django web admin.

As long as the token provided in the POST request matches one in the database, the request will be accepted. A GET request will return some basic documentation on the API interface:

POST requests take the following parameters:

------------------------------------

api_token: [REQUIRED] Authenticate with the server

body: [REQUIRED] Body of the post in markdown format

title: Title of the post to create. Required if not tool update.

tool_update: If tool update, title created automatically (true/false)

It expects body to be in a section-delimited format with YAML metadata followed by the article in markdown format. The YAML header is parsed to create News model fields (though in fact we just hard-code these in the API handler because they are always the same).

For example:

---

site: freiburg

title: 'Galaxy Australia tool updates 2022-12-14'

tags: [tools]

supporters:

- galaxyaustralia

- melbinfo

- qcif

---

### Tools installed

| Section | Tool |

|---------|-----|

| **Convert Formats** | vcf2maf [e8510e04a86a](https://toolshed.g2.bx.psu.edu/view/iuc/vcf2maf/e8510e04a86a) |

### Tools updated

| Section | Tool |

|---------|-----|

| **Assembly** | bionano_scaffold [faee8629b460](https://toolshed.g2.bx.psu.edu/view/bgruening/bionano_scaffold/faee8629b460) |

| **FASTQ Quality Control** | multiqc [abfd8a6544d7](https://toolshed.g2.bx.psu.edu/view/iuc/multiqc/abfd8a6544d7) |

| **Graph/Display Data** | ggplot2_heatmap [10515715c940](https://toolshed.g2.bx.psu.edu/view/iuc/ggplot2_heatmap/10515715c940)<br/>ggplot2_point [5fe1dc76176e](https://toolshed.g2.bx.psu.edu/view/iuc/ggplot2_point/5fe1dc76176e) |

You can see this API being called by Jenkins in usegalaxy-au-tools.
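
As a rough illustration of the section-delimited format, the header and the markdown article can be separated on the `---` lines using only the standard library. `split_front_matter` is a hypothetical helper, not the function GMS uses, and it returns the header as raw text rather than parsing the YAML.

```python
def split_front_matter(body):
    """Split ``body`` into (header_text, markdown_text).

    The header is whatever sits between an opening ``---`` line and the next
    ``---`` line. If no such header is found, the header is returned empty.
    """
    lines = body.strip().splitlines()
    if not lines or lines[0].strip() != '---':
        return '', body.strip()
    for i, line in enumerate(lines[1:], start=1):
        if line.strip() == '---':
            header = '\n'.join(lines[1:i])
            markdown = '\n'.join(lines[i + 1:]).strip()
            return header, markdown
    # Opening delimiter without a closing one: treat everything as markdown.
    return '', body.strip()
```

The returned header text could then be handed to a YAML parser if the metadata were ever needed, although, as noted above, the API handler currently hard-codes those fields.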

At the time of writing, most of Galaxy AU news items actually come from the BioCommons website.

We wrote a web scraper to pull down any new articles from their Galaxy news webpage and link to them from GMS using the external article feature. This creates a News item with an external URL instead of a body, which opens in a new tab when clicked.

The scraper is scheduled to run every morning by calling a Django manage.py command from cron. It scans the site for articles and creates a News instance for each new one that it finds. How does it know whether the article is new? It checks whether a News instance exists with external=URL.

# The crontab entry

0 9 * * * cd /srv/galaxy-media-site/webapp && /home/ubuntu/galaxy-content-site/.venv/bin/python manage.py scrape_news

In the future we could add other web scraper plugins to ingest news articles (or other content) from other sources, such as the Galaxy Community Hub.

This is a really tiny app. It should really have been part of home.

- Add/edit Galaxy AU team members with the Person model

- Render and display them with views.index

Nothing complicated here.

Most of the facilities found in utils have been discussed elsewhere. Here are a few more bits...

Since we are coming to rely on AAF to authenticate our users, it's nice to help them figure out whether they can actually use AAF.

We take advantage of an XML document published by AAF at https://md.aaf.edu.au/aaf-metadata.xml

We request and parse this document to display as a webpage on GMS:

With the current configuration, the document is cached for 72 hours to reduce the overhead a little.

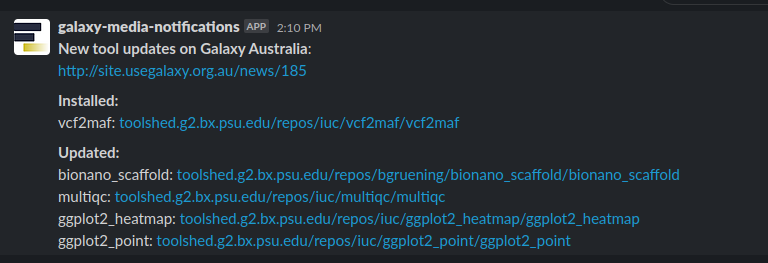
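
The 72-hour caching mentioned above follows a common time-to-live pattern, sketched here with the standard library. `CachedDocument` and the `fetch` callable are hypothetical. GMS may well rely on Django's cache framework instead, so treat this only as an illustration of the idea.

```python
import time


class CachedDocument:
    """Cache the result of ``fetch()`` for ``ttl`` seconds."""

    def __init__(self, fetch, ttl=72 * 60 * 60, clock=time.monotonic):
        self.fetch = fetch  # hypothetical callable that downloads the XML
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested
        self._value = None
        self._fetched_at = None

    def get(self):
        """Return the cached document, refetching once it has expired."""
        now = self.clock()
        expired = (
            self._fetched_at is None
            or now - self._fetched_at >= self.ttl
        )
        if expired:
            self._value = self.fetch()
            self._fetched_at = now
        return self._value
```

Every call within the 72-hour window returns the stored document without touching the network, so the remote server sees at most one request per window from each process.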

Johan asked if he could receive tool updates in a specific format over Slack for his reporting.

The utils.notifications module serves this specific purpose.

These messages currently go to the #galaxy-notifications channel in the BioCommons BYOD Slack workspace.

- The notify_tool_update function is called directly from the tool update API

- Updates are parsed directly from the article's markdown body

- A Slack message is posted to look something like this:

Tests are written with standard Django framework components, and are found in the tests.py file of each app.

To keep things DRY we have created test/data/*.py files in each app which contain data for creating test model instances.

For example, take a look at home/test/data/notices.py:

from .subsites import TEST_LABS

TEST_NOTICES = [
    {
        "data": {
            "notice_class": "info",
            "title": "Test Notice 1",
            "body": "# Test notice body\n\nThe notice body in markdown.",
            "enabled": True,
            "is_published": True,
        },
        "relations": {
            "subsites": [TEST_LABS[0]],
        },
    },
    # ...

These dictionaries provide enough data for us to create a bunch of Notices quickly.

They are reused in test cases across all apps.

You'll notice the following pattern being used in the setUp method across tests:

for notice in TEST_NOTICES:
    subsites = notice['relations']['subsites']
    notice = Notice.objects.create(**notice['data'])
    for subsite in subsites:
        subsite = Subsite.objects.get(name=subsite['name'])
        notice.subsites.add(subsite)

We can then close the loop on this when writing assertions in our tests:

self.assertContains(
    response,
    TEST_NOTICES[0]['data']['title'],
)

Quite a bit of logic is tested by an index page test case after creating Notice, Event and News objects. The test then asserts that the appropriate content is returned in the landing page. There are still quite a few areas that lack test coverage, but these are the simplest parts of the application and are unlikely to break. For example, the people app currently has zero test coverage, but does so little that it doesn't seem worthwhile writing tests here.

There is a dedicated settings module for test - webapp.settings.test.

This module has been hard-coded into manage.py to ensure that the correct settings are always used.

Currently the only difference with test settings is that a disposable MEDIA_ROOT is set (see below).

To implement a global setUp/tearDown method we have subclassed django.test.TestCase.

This takes care of MEDIA_ROOT disposal after tests have been run.

Otherwise, the MEDIA_ROOT ends up full of media files created by test setup!

We have also implemented a global setUp that sets the log level to ERROR so

that unittest output does not get littered with log statements.
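
The shape of such a shared base class can be sketched with the standard library alone. Here `unittest.TestCase` stands in for `django.test.TestCase`, and `BaseTestCase` and `media_root` are illustrative names. The real class works with the MEDIA_ROOT defined in the test settings rather than creating its own directory.

```python
import logging
import shutil
import tempfile
import unittest
from pathlib import Path


class BaseTestCase(unittest.TestCase):
    """Stand-in for a project-wide TestCase with shared setUp/tearDown."""

    def setUp(self):
        super().setUp()
        # Silence everything below ERROR for the duration of the test.
        self._root_logger = logging.getLogger()
        self._previous_level = self._root_logger.level
        self._root_logger.setLevel(logging.ERROR)
        # Disposable directory standing in for MEDIA_ROOT.
        self.media_root = Path(tempfile.mkdtemp())

    def tearDown(self):
        # Remove any files the test created, then restore the log level.
        shutil.rmtree(self.media_root, ignore_errors=True)
        self._root_logger.setLevel(self._previous_level)
        super().tearDown()
```

Because the cleanup lives in the base class, individual test cases get a clean media directory without repeating the logic.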

To inherit these generic methods, test cases should subclass webapp.tests.TestCase, rather than django.test.TestCase.

If you implement a setUp/tearDown of your own, remember to call e.g. super().setUp():

class MyTestCase(TestCase):

    def setUp(self):
        super().setUp()
        # Your setup code here...

We use a pretty conventional setup for modular settings. All settings modules inherit from webapp/settings/base.py, and we are typically using either:

- webapp/settings/dev.py for development

- webapp/settings/prod.py for deployment

The settings module is set with the env variable DJANGO_SETTINGS_MODULE which defaults to webapp.settings.prod.

To use dev settings locally, you need to set DJANGO_SETTINGS_MODULE=webapp.settings.dev in your virtual env.

All settings modules rely on building a context from environment variables (contained in a .env file) using the dotenv library. Most of the content of the settings files are self-explanatory, with a few parts worth documenting.

- utils.paths.ensure_dir: this function is used to ensure that required directories exist at runtime, like:

LOG_ROOT = ensure_dir(BASE_DIR / 'webapp/logs')

This can help prevent some annoying errors following deployment and helps to keep the codebase DRY (so we don't need to handle missing dirs everywhere).

- home.context_processors.settings is added to TEMPLATES['OPTIONS']['context_processors'] to make certain settings variables accessible in all template contexts. This lets us do things like:

<!-- header.html -->

<a class="navbar-brand" href="/">
  <span class="australia">Galaxy {{ GALAXY_SITE_NAME }}</span> {{ GALAXY_SITE_SUFFIX }}
</a>

This custom context processor is defined here.

- validate.env: raise an exception if required env variables are missing/incorrect

- CSRF_COOKIE_DOMAIN and CSRF_TRUSTED_ORIGINS are required for form submission from a usegalaxy.org.au origin (i.e. when GMS is inside an iframe)
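
For reference, a helper like the ensure_dir function mentioned in the list above can be very small. This is a guess at its shape based on how it is used in settings, not a copy of utils.paths:

```python
from pathlib import Path


def ensure_dir(path):
    """Create ``path`` (and any missing parents) and return it as a Path."""
    path = Path(path)
    path.mkdir(parents=True, exist_ok=True)
    return path
```

Returning the path is what allows the one-line assignment style used for LOG_ROOT, and `exist_ok=True` makes the call safe to repeat on every startup.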

Logging configuration is also defined as part of settings. Read on...

- This module is automatically activated with python manage.py test

- MEDIA_ROOT is set to a disposable temp dir

- See the testing section above for more info

Logging config is expressed in DictConfig here.

There are six log handlers that direct logging output to various places:

- debug_file: the most verbose logging. All messages. Very noisy.

- main_file: all log messages above INFO level.

- error_file: all log messages above ERROR level.

- error_mail: send everything above ERROR level as email to ADMINs (this doesn't seem to be working but should really be fixed)

- error_slack: send everything above ERROR level as Slack message to the channel declared by the SLACK_CHANNEL_ID env variable (currently #gms-alerts). This is the most useful handler for picking up errors. Make sure you are subscribed to this Slack channel if you're a GMS developer!

- console: this is what you see when running locally with runserver - and this also gets written to the syslog when running with systemd.

Custom handlers

There is only one, for sending Slack notifications. It relies on the utils.slack module that is used across the rest of the application.
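
A custom handler of this kind is usually a thin subclass of `logging.Handler`. The sketch below is not the GMS handler: `CallbackHandler` and its `post` argument are hypothetical, with `post` standing in for whatever function sends the message to Slack.

```python
import logging


class CallbackHandler(logging.Handler):
    """Forward each formatted log record to a ``post`` callable."""

    def __init__(self, post, level=logging.ERROR):
        super().__init__(level=level)
        self.post = post  # hypothetical function that delivers the message

    def emit(self, record):
        try:
            self.post(self.format(record))
        except Exception:
            # Never let a failed notification break the code that logged.
            self.handleError(record)
```

Setting the handler level to ERROR means lower-level records never reach `emit`, so only errors are forwarded.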

Filters

There are a few annoying log messages that we don't care about too much. There are two filters that are used to exclude these messages:

- 401 errors arising from invalid http_host header. We see lots of these in production from vulnerability scanners.

- VariableDoesNotExist errors. We shouldn't really have these in templates but generally it's ok for them to fail silently.

Neither of these filters is applied to the console handler, so you can still debug them in dev.
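
For readers unfamiliar with logging filters, the general mechanism looks like this. `ExcludeMessages` is a hypothetical class written for this example, and the message fragment is an assumption about what the noisy records contain. The filters in the GMS settings may match records differently.

```python
import logging


class ExcludeMessages(logging.Filter):
    """Drop any record whose message contains one of ``fragments``."""

    def __init__(self, *fragments):
        super().__init__()
        self.fragments = fragments

    def filter(self, record):
        message = record.getMessage()
        # Returning False tells the handler to discard the record.
        return not any(fragment in message for fragment in self.fragments)
```

A filter like this is attached per handler with `handler.addFilter(...)`, which is how the file, mail and Slack handlers can be kept quiet while the console handler still shows everything.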

You might just like to SSH into the web server and do:

sudo journalctl -fu webapp

# ~~ or ~~

sudo journalctl -u webapp --since "2 hours ago"

... but you might find the more granular file logging useful:

less /srv/sites/webapp/webapp/logs/main.log

For error logs, you can probably just check the #gms-alerts Slack channel.